Overview

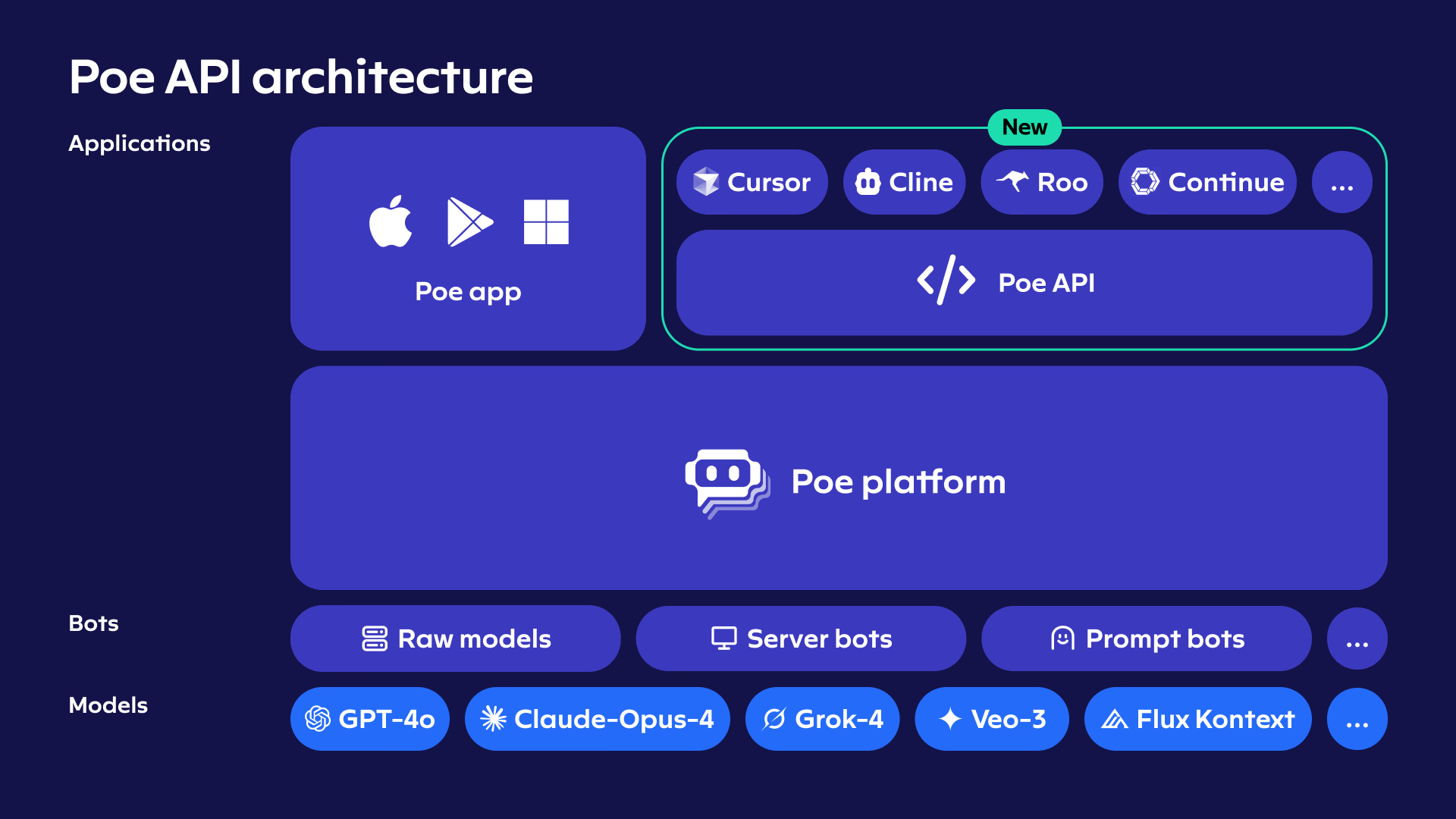

Developers who work with several AI models often have to manage a separate API, key, and billing account for each provider. Poe’s unified API, launched in July 2025, addresses this by providing a single, OpenAI-compatible interface to over 100 AI models for text, image, video, and audio generation. Models such as Claude, Imagen 4, and Veo 3 are all reachable with one API key and billed through one account, which simplifies AI development workflows.

Key Features

Poe’s API offers the following core features for integrating AI models across multiple modalities.

- OpenAI-compatible API endpoint: Lets developers already familiar with OpenAI’s request format integrate it into existing workflows with little new to learn

- Unified access to 100+ models: Provides instant access to cutting-edge AI models from major providers including OpenAI, Anthropic, Google, and Meta through a single interface

- Python SDK available: Offers a dedicated SDK for Python developers, simplifying API interactions and accelerating development

- Subscription-based point system: Utilizes transparent point-based billing tied to existing Poe subscriptions, providing predictable costs and scalable access

- Compatible with existing OpenAI workflows: Lets OpenAI-based code migrate mostly by changing the base URL, API key, and model name, while gaining access to non-OpenAI models
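Because the endpoint follows OpenAI’s chat-completions schema, a request is an ordinary JSON POST. The sketch below only builds such a request with Python’s standard library and does not send it. The base URL `https://api.poe.com/v1`, the Bearer-token header, and the model name `Claude-Sonnet-4` are assumptions to verify against Poe’s own documentation.

```python
import json
import urllib.request

# Assumed base URL for Poe's OpenAI-compatible API; verify against Poe's docs.
POE_BASE_URL = "https://api.poe.com/v1"


def build_chat_request(api_key: str, model: str, prompt: str,
                       base_url: str = POE_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style /chat/completions request."""
    payload = {
        "model": model,  # a Poe model name; "Claude-Sonnet-4" below is hypothetical
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("YOUR_POE_API_KEY", "Claude-Sonnet-4", "Hello!")
print(req.full_url)  # https://api.poe.com/v1/chat/completions
# To actually send it: urllib.request.urlopen(req), then json.load the response.
```

Under this assumption, pointing existing OpenAI code at Poe means swapping the base URL and key; the official `openai` Python client supports the same idea through the `base_url` and `api_key` arguments of its `OpenAI(...)` constructor.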

How It Works

Poe’s API follows OpenAI’s conventions. Developers either call the standard /v1/chat/completions endpoint or use Poe’s Python SDK. Each request is forwarded to the model it names, and its cost is deducted in Poe points from your subscription’s balance. Points are included with Poe subscriptions, and additional points can be purchased at $30 per million points, so usage can grow beyond the subscription allowance without service interruption.
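As a worked example of the add-on pricing, assuming the $30 rate applies per million points: buying 2.5 million extra points costs 2.5 × $30 = $75. The helper below encodes that arithmetic using `Decimal` to avoid floating-point rounding on currency. The rate constant is taken from the figure quoted above; how many points a given request consumes varies by model and is not modeled here.

```python
from decimal import Decimal

# Assumed add-on rate, from the figure quoted above: $30 per 1,000,000 points.
USD_PER_MILLION_POINTS = Decimal("30")


def addon_cost_usd(points: int) -> Decimal:
    """Return the USD cost of buying `points` add-on points at the assumed rate."""
    if points < 0:
        raise ValueError("points must be non-negative")
    return USD_PER_MILLION_POINTS * Decimal(points) / Decimal(1_000_000)


print(addon_cost_usd(2_500_000))  # 75
```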

Use Cases

Because one API covers many models and modalities, Poe’s unified API suits several kinds of development projects.

- Cross-modal content workflows: Build applications that seamlessly generate and combine text, images, videos, and audio from a single API, enabling rich multimedia experiences

- Dynamic model selection: Develop intelligent systems that automatically choose optimal models for specific tasks (Claude for reasoning, Imagen 4 for high-quality images) without switching APIs

- OpenAI-compatible applications with expanded capabilities: Enhance existing OpenAI-based applications by integrating advanced models like Claude 3.5, Gemini, or specialized video/audio generation models

- Simplified multi-provider integration: Eliminate the complexity of managing multiple API keys, billing systems, and documentation across different AI providers
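The dynamic-model-selection idea can be sketched as a lookup table that maps a task type to a model name, so that switching models changes only the `model` field of the request. This assumes, as the description above implies, that every modality is reached through the same chat-completions call. The task labels and model names are hypothetical placeholders, not Poe’s actual identifiers.

```python
from typing import Dict

# Hypothetical task-to-model routing table; replace the placeholder names
# with the model names Poe actually exposes.
ROUTES: Dict[str, str] = {
    "reasoning": "Claude-Sonnet-4",
    "image": "Imagen-4",
    "video": "Veo-3",
}
DEFAULT_MODEL = "Claude-Sonnet-4"


def pick_model(task: str) -> str:
    """Return the model for a task type, falling back to a default model."""
    return ROUTES.get(task, DEFAULT_MODEL)


def build_payload(task: str, prompt: str) -> dict:
    """Build an OpenAI-style chat payload; only `model` changes between tasks."""
    return {
        "model": pick_model(task),
        "messages": [{"role": "user", "content": prompt}],
    }


print(build_payload("image", "A lighthouse at dusk")["model"])  # Imagen-4
```

Keeping the routing in data rather than in branching code makes it easy to swap a model when a better one appears, without touching the request logic.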

Pros & Cons

Advantages

- Streamlined integration: OpenAI-compatible structure enables rapid development with familiar patterns

- Comprehensive model access: Single API provides access to leading models from all major AI providers

- Advanced multimodal capabilities: Native support for text, image, video, and audio generation enables sophisticated applications

- Transparent pricing: Point-based system aligned with subscription tiers offers predictable and scalable cost management

Disadvantages

- Platform dependency: API access requires an active Poe subscription, an additional cost for developers not already subscribed

- Variable community model reliability: While premium models maintain high quality, community-contributed models may show inconsistent performance

- Evolving feature set: As a newer service, some advanced features may still be under development
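One way to limit the impact of an inconsistent community model is to try a preferred model first and fall back to others on error. The sketch below is a generic client-side pattern, not a Poe feature. `send` is a hypothetical caller-supplied function standing in for whatever performs the real API call, which keeps the example runnable offline; the model names are placeholders.

```python
from typing import Callable, Sequence, Tuple


def complete_with_fallback(
    send: Callable[[str, str], str],
    models: Sequence[str],
    prompt: str,
) -> Tuple[str, str]:
    """Try each model in order and return (model, reply) from the first success.

    `send(model, prompt)` is a hypothetical function that performs the real
    request and raises an exception on failure.
    """
    errors = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Offline demo: a fake sender in which the community bot always times out.
def fake_send(model: str, prompt: str) -> str:
    if model == "some-community-bot":
        raise TimeoutError("no response")
    return f"[{model}] {prompt}"


print(complete_with_fallback(fake_send, ["some-community-bot", "Claude-Sonnet-4"], "Hi"))
# ('Claude-Sonnet-4', '[Claude-Sonnet-4] Hi')
```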

How Does It Compare?

Poe’s API is one of several services that offer unified access to many AI models. OpenRouter, serving over 2.5 million users, provides access to 400+ models from 60+ providers through a similar unified interface, focusing on reliability and cost optimization. Vercel AI Gateway offers access to 100+ models with features such as budget monitoring, load balancing, and automatic failover, targeting enterprise deployments.

Google Vertex AI provides comprehensive access to Google’s models (Gemini 2.5 Pro, Imagen 4, Veo 3) alongside partner models such as Anthropic’s Claude, with enterprise-grade security and scaling. Anthropic’s API focuses exclusively on Claude models (such as Claude Opus 4, Claude Sonnet 4, and Claude 3.5 Haiku), with strong reasoning capabilities and large context windows.

OpenAI’s API has evolved beyond its original GPT focus, now offering a comprehensive suite including GPT-4o, DALL-E 3, Whisper, and embedding models, though still requiring separate integrations for non-OpenAI models. Azure AI Services provides Microsoft’s integrated approach with extensive enterprise features and hybrid cloud deployment options.

Poe’s API distinguishes itself through its subscription-based model, which builds on existing Poe accounts and makes it particularly attractive for individual developers and teams already using the Poe platform. Billing through Poe points replaces separate per-provider, per-token bills with a single balance. Its catalog of 100+ models is comparable to Vercel AI Gateway’s, though smaller than OpenRouter’s 400+.

Final Thoughts

Poe’s API simplifies multi-provider AI development by offering OpenAI-compatible access to over 100 models under subscription-based pricing, addressing key pain points in multi-provider integration. The platform is still evolving and requires a Poe subscription, but its broad model coverage, familiar interface, and transparent pricing make it a strong option for developers building AI applications. It is especially well suited to teams that want to experiment with diverse AI capabilities without managing multiple provider relationships and billing systems.