Table of Contents

- Overview

- Core Features & Capabilities

- How It Works: The Workflow Process

- Ideal Use Cases

- Strengths and Strategic Advantages

- Limitations and Realistic Considerations

- Competitive Positioning and Strategic Comparisons

- Pricing and Access

- Technical Architecture and Platform Details

- Launch Reception and Community Response

- Important Caveats and Realistic Assessment

- Final Assessment

Overview

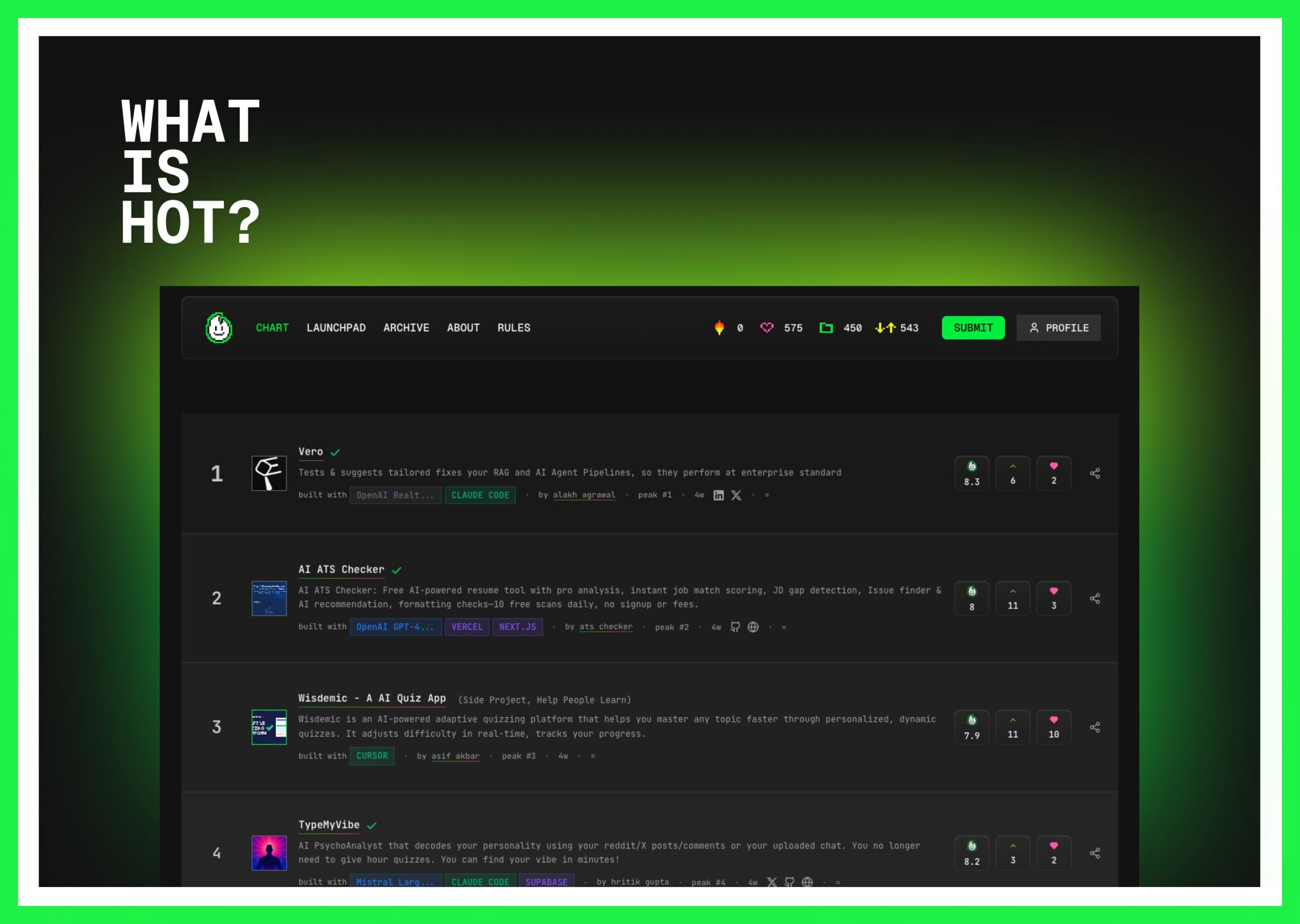

Hot100.ai is a weekly discovery platform, launched on Product Hunt on October 29, 2025, that showcases and ranks AI-powered projects using an automated scoring system called Flambo. The platform addresses a genuine discovery challenge in the rapidly expanding ecosystem of AI-native tools: identifying high-quality, production-ready applications among the thousands of projects launched monthly, particularly those built with modern AI development tools like Cursor, Bolt, v0, Replit, Lovable, and Claude Code.

Positioned as “the weekly chart for AI projects,” Hot100.ai launched with more than 400 projects already ranked and continues to add new submissions weekly. Rather than relying solely on popularity metrics like Product Hunt upvotes or organic social media traction, the platform emphasizes objective evaluation of both technical innovation and practical utility, attempting to surface genuinely useful projects regardless of their launch-day visibility.

The platform received 165 upvotes and 19 comments on its Product Hunt launch day, reaching the number 8 position out of approximately 200 launches that day, which indicates meaningful interest from builders and the discovery-focused community.

Core Features & Capabilities

Hot100.ai provides specialized functionality focused on AI project discovery and transparent evaluation.

AI-Powered Scoring System (Flambo): Uses a proprietary AI-based evaluation system to objectively score project submissions. The scoring algorithm focuses on two critical dimensions: technical innovation (code quality, architectural sophistication, novel implementation approaches) and practical utility (real-world problem-solving effectiveness, user adoption indicators, business impact). The system maintains consistent evaluation criteria with standardized prompts and low randomness, providing reproducible scoring rather than random fluctuation between evaluations.

Weekly Rankings Updated Every Monday: Fresh rankings are published automatically each Monday morning, ensuring current insights into emerging trends and project momentum. This consistent cadence provides predictable discovery opportunities and enables tracking of week-to-week momentum shifts in the AI-builder ecosystem.

Focus on Modern AI Development Tools: Specializes in projects specifically built with Cursor, Bolt, v0, Replit, Lovable, Claude Code, and similar AI-native development platforms known as “vibe coding” tools. This specialization reflects where cutting-edge AI application development is concentrated and enables focused discovery within this rapidly evolving ecosystem.

Dual-Axis Evaluation Methodology: Projects are scored explicitly on two independent dimensions rather than a single aggregate score. Technical innovation assesses architectural depth, code sophistication, and novel implementation approaches. Utility assesses practical value, user adoption potential, problem-solving effectiveness, and business impact. This separation provides visibility into what specifically drives each project’s ranking position.

Technical Transparency Requirements: Developers submitting projects must disclose full technical specifications including architecture details, deployment infrastructure (cloud vs. self-hosted), API integrations, LLM choices, and development frameworks used. This transparency enables informed evaluation by both Flambo and human reviewers while helping users understand implementation decisions.

Project Categorization by Framework and Tools: Projects are organized by the specific development tools and AI models used (Cursor, Bolt, OpenAI, Mistral, Claude, v0, Replit, etc.), enabling filtered discovery for users seeking projects built with specific technology stacks or learning from particular implementation patterns.

Scoring Bonuses and Penalties: The system rewards certain characteristics (live demos accessible without waitlists, verified security implementation, clear documentation) and penalizes others (waitlist-only access with no preview, vague descriptions, missing technical specifications), creating incentives for transparency and production readiness.

Historical Archive and Trend Analysis: The platform maintains historical rankings data, enabling analysis of framework adoption trends, tool popularity evolution, and which development approaches gain traction over time. This data is valuable for developers, tool creators, and investors tracking the AI-building ecosystem.

Detailed Technical Specifications: Each project listing provides comprehensive technical details, including deployment architecture, performance metrics (where applicable), security implementations, and architectural patterns. These details offer learning opportunities for developers exploring best practices in AI-native application development.

Hybrid Evaluation Approach: Combines automated AI scoring with lightweight human review to verify submission quality, ensure accurate self-reported metadata, and reduce gaming or manipulation of the ranking system.
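A minimal sketch of how the dual-axis scores and the bonuses and penalties described above might combine. Flambo’s actual formulas are not published, so the 0 to 100 scale, the flag names, and every adjustment value below are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical adjustment sizes; the platform does not publish its real values.
BONUSES = {"live_demo": 5.0, "verified_security": 3.0, "clear_docs": 2.0}
PENALTIES = {"waitlist_only": 5.0, "vague_description": 3.0, "missing_specs": 4.0}


@dataclass(frozen=True)
class Submission:
    name: str
    innovation: float  # assumed 0-100 scale
    utility: float     # assumed 0-100 scale
    flags: frozenset = frozenset()


def clamp(value: float) -> float:
    """Keep a score inside the assumed 0-100 range."""
    return max(0.0, min(100.0, value))


def adjustment(sub: Submission) -> float:
    """Net bonus minus penalty for the flags present on a submission."""
    bonus = sum(v for k, v in BONUSES.items() if k in sub.flags)
    penalty = sum(v for k, v in PENALTIES.items() if k in sub.flags)
    return bonus - penalty


def adjusted_scores(sub: Submission) -> tuple[float, float]:
    """Apply the same adjustment to both axes and keep them separate."""
    delta = adjustment(sub)
    return clamp(sub.innovation + delta), clamp(sub.utility + delta)


demo = Submission("demo-app", 80.0, 70.0, frozenset({"live_demo", "clear_docs"}))
print(adjusted_scores(demo))
```

Keeping the two axes as a pair, rather than collapsing them into one number, mirrors the article’s point that innovation and utility are reported separately.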

How It Works: The Workflow Process

Hot100.ai operates through a streamlined submission and evaluation workflow culminating in weekly rankings published every Monday.

Step 1 – Project Submission: Developers submit AI-powered projects through the Hot100.ai platform using a Launchpad submission flow. Submissions include a project description, a live demo or preview link, comprehensive technical stack details, and information about which AI development tools and models were used in building the application.

Step 2 – Automated Evaluation by Flambo: The Flambo AI scoring system analyzes each submission using its dual-axis methodology with standardized evaluation prompts. The system evaluates technical innovation (code quality, architectural sophistication, novel implementations compared to similar projects) and practical utility (real-world effectiveness, user adoption signals, business impact, problem-solving clarity). The prompts are designed for reproducibility and low randomness so that comparisons across submissions remain fair.

Step 3 – Human Verification: Submissions undergo lightweight human review to verify the technical accuracy of self-reported metadata, filter out spam, and confirm that projects meet basic quality standards. This hybrid approach reduces gaming while avoiding the bottlenecks of a purely manual review process.

Step 4 – Scoring and Ranking: Projects receive numerical scores on both innovation and utility dimensions. Rankings are compiled with these scores, organized by overall position and filterable by technology stack used. The scoring system applies bonuses for live demos and verified implementations while applying penalties for vague descriptions or waitlist-gated access.

Step 5 – Weekly Publication: Finalized rankings publish every Monday morning, creating a new “chart” of top-ranked projects for that week. Historical data from previous weeks remains accessible for trend analysis, enabling tracking of project momentum and tool adoption patterns over time.

Step 6 – Discovery and Navigation: Users browse rankings by overall position, filter by specific development tools or AI models used, review detailed technical specifications for learning, and discover new projects matching their technology interests or use case requirements.
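The ranking and publication steps above can be sketched roughly as follows. This is an illustration only: the source does not say how the two axis scores determine overall position, so the simple mean used here, the 100-entry cutoff, and the record fields are all assumptions.

```python
from datetime import date, timedelta


def next_monday(today: date) -> date:
    """Return the first Monday strictly after `today`."""
    days_ahead = (7 - today.weekday()) % 7 or 7  # Monday is weekday 0
    return today + timedelta(days=days_ahead)


def build_chart(entries, limit=100):
    """Rank human-verified entries by the mean of their two axis scores.

    The mean is an assumed way to order entries; ties break by name.
    """
    verified = [e for e in entries if e["verified"]]
    ranked = sorted(
        verified,
        key=lambda e: (-(e["innovation"] + e["utility"]) / 2, e["name"]),
    )
    return [(pos, e["name"]) for pos, e in enumerate(ranked[:limit], start=1)]


# Hypothetical submissions; unverified entries never reach the chart.
entries = [
    {"name": "a-app", "innovation": 60, "utility": 80, "verified": True},
    {"name": "b-app", "innovation": 90, "utility": 70, "verified": True},
    {"name": "c-app", "innovation": 99, "utility": 99, "verified": False},
]

chart = build_chart(entries)
publish_on = next_monday(date(2025, 10, 29))
print(publish_on, chart)
```

Filtering on the `verified` flag before sorting reflects the described order of operations, where human review gates what appears in the published chart.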

Ideal Use Cases

Hot100.ai serves diverse audiences within the AI development ecosystem with different discovery and learning needs.

For Developers Building AI Applications: Gain visibility for projects in a curated discovery channel emphasizing technical merit over popularity contests, receive objective feedback on innovation and utility positioning relative to peers, benchmark work against other projects in the same technology category, and identify architectural best practices from top-ranked implementations using similar stacks.

For Enthusiasts and End Users: Discover emerging AI tools and applications beyond mainstream coverage, explore projects built with specific development approaches to understand the capabilities of different tools, identify new solutions to existing problems that traditional software doesn’t address, and stay current with the latest practical developments in AI applications rather than just research announcements.

For Investors and Analysts: Track which AI development tools and approaches are gaining measurable traction among builders, identify emerging use-case verticals attracting developer attention and experimentation, analyze competitive dynamics within specific AI application categories, and understand market dynamics through systematic project analysis rather than anecdotal evidence.

For Researchers and Tool Developers: Study how developers are adopting specific frameworks and development tools in production applications, identify emerging patterns in AI application architecture and implementation approaches, track the effectiveness of different development paradigms (vibe coding vs. traditional development), and understand real-world usage of their tools to inform product roadmap decisions.

For Learning and Technical Inspiration: Examine detailed technical specifications of top-ranked projects to learn implementation patterns, study code architecture and deployment approaches used by successful builders, understand best practices for production-ready AI applications, and gather inspiration for new development directions based on what’s actually working in production.

Strengths and Strategic Advantages

Merit-Focused Evaluation Over Popularity: Attempts to surface quality projects regardless of launch-day audience size, social media reach, or marketing budget. Projects can achieve high rankings based on technical and practical merit rather than promotional effectiveness, addressing a genuine gap in discovery channels.

Objective AI-Based Scoring with Consistency: Automated evaluation using standardized prompts reduces human bias and subjective judgment, providing consistent scoring criteria applied uniformly across all submissions regardless of submitter profile or social following.

Hybrid Human + AI Review: The combination of automated scoring and human verification balances computational efficiency with quality assurance, reducing gaming attempts and verification errors while maintaining scalability.

Technical Transparency Requirements: Mandated stack disclosure and technical specifications provide valuable insights into implementation approaches, enable informed decision-making by users evaluating tools, and create learning opportunities for developers studying successful projects.

Weekly Consistency and Predictability: The regular Monday publication schedule creates a habitual discovery opportunity and sets a clear expectation for when new rankings appear, enabling systematic tracking of ecosystem trends.

Free Access and Low Barriers to Entry: No cost for browsing, discovering projects, or submitting new work. This removes friction for both builders seeking visibility and discovery-focused users exploring new tools.

Unique Historical Data on Framework Trends: Archive of rankings over time provides valuable longitudinal data about which tools and development approaches gain adoption—useful for developers choosing technologies, investors tracking markets, and tool creators understanding competitive dynamics.

Focus on Modern AI Development Ecosystem: Specialization in Cursor, Bolt, v0, Claude Code, and similar “vibe coding” tools provides concentrated visibility into where cutting-edge development is occurring, rather than a broad, undifferentiated catalog that dilutes signal with noise.

Limitations and Realistic Considerations

Limited Verification of Submitted Metadata: Lightweight human review relies substantially on accurate self-reporting by project submitters. Inaccurate technical descriptions, inflated metrics, or misleading capability claims can influence scoring without deep technical audits detecting discrepancies.

Scoring Based on Descriptions Rather Than Deep Code Analysis: Flambo evaluation depends on project submissions, demos, and descriptions rather than comprehensive code audits, security assessments, or technical deep dives. Implementation details visible only in source code may not be fully captured in rankings.

Early-Stage Platform with Limited Historical Data: Launched October 29, 2025, the platform has minimal historical data on trend stability and pattern persistence. The scoring methodology and platform features are likely still evolving based on community feedback and operational learnings.

Submission Verification is Lightweight: Light human review means obvious low-quality submissions or spam might be caught, but subtle issues, misleading presentations, or overstated capabilities may slip through verification without deeper investigation.

Dependency on Accurate Self-Reporting: Projects must honestly disclose their technology stack, features, and performance characteristics. Users must independently verify claims before investing significantly in tools or making critical adoption decisions.

Limited to Specific Tool Ecosystem: Specialization in Cursor, Bolt, v0, Replit, Lovable, and Claude Code ecosystems excludes innovative projects built with other development approaches or traditional methodologies, potentially missing important innovations outside this focused scope.

Data Still Accumulating: Because the platform is new and its dataset is relatively small, patterns may shift substantially as the platform matures and project submission volume increases. Current trends may not predict long-term ecosystem evolution.

Sustainability Model Unclear: Free access for both users and submitters raises questions about long-term platform funding, maintenance resources, and business model sustainability without disclosed revenue strategy.

Competitive Positioning and Strategic Comparisons

Hot100.ai occupies a specialized niche within the AI project discovery and showcasing landscape, differentiating through its focus and automated evaluation methodology.

vs. Product Hunt: Product Hunt relies on community voting and social dynamics—projects with strong launch marketing, established founder networks, or timing advantages often reach top positions regardless of technical merit. Hot100.ai attempts objective evaluation of innovation and utility independent of popularity contests. Product Hunt provides broader visibility across all product categories and community feedback mechanisms; Hot100.ai provides merit-focused discovery specifically for AI-built projects potentially surfacing overlooked quality work. Both serve different discovery preferences—Product Hunt for social validation, Hot100.ai for technical merit.

vs. GitHub Trending: GitHub Trending shows popular repositories based on star velocity (growth rate compared to baseline) and engagement metrics (forks, issues, pull requests) during specific periods. Trending reflects developer community interest but doesn’t systematically evaluate fitness for specific use cases or practical utility beyond source code popularity. Hot100.ai evaluates practical application readiness and utility; GitHub shows repository popularity among developers. The discovery objectives differ: GitHub for open-source code popularity, Hot100.ai for complete application merit.

vs. Hacker News: Hacker News emphasizes community discussion and voting on technical merit, but relies on community members discovering, submitting, and voting on projects—creating visibility bias toward well-connected submitters. Hot100.ai’s automated scoring applies consistent evaluation criteria to all submissions regardless of submitter profile. Hacker News provides broader technical community perspective and discussion quality; Hot100.ai provides systematic evaluation without requiring social capital.

vs. There’s An AI For That: There’s An AI For That (TAAFT) is a comprehensive AI tool directory covering 12,000+ AI tools (as of mid-2025) across all categories and use cases, launched in late 2022. TAAFT provides broad coverage with user voting, tagging, and categorization by task type. TAAFT emphasizes breadth and comprehensive coverage; Hot100.ai emphasizes depth, specialization in AI-native development tools, and weekly curated rankings. TAAFT serves users seeking any AI tool for any task; Hot100.ai serves builders and enthusiasts tracking the cutting-edge AI-builder ecosystem specifically.

vs. Indie Hackers: Indie Hackers is a community platform for indie builders showcasing projects with community feedback, discussion forums, revenue transparency, and networking capabilities. It relies on community engagement, voting, and social dynamics. Hot100.ai provides algorithmic evaluation independent of community size or social following. Indie Hackers emphasizes community support and revenue-focused entrepreneurship; Hot100.ai emphasizes objective technical evaluation of AI-specific projects.

Key Differentiators: Hot100.ai’s core differentiation lies in its focus on projects built with modern AI-native development tools (the “vibe coding” ecosystem), an automated yet reproducible scoring methodology emphasizing technical merit over popularity, a dual-axis evaluation that explicitly distinguishes innovation from utility rather than producing a single aggregate score, a weekly ranking cadence providing a regular discovery rhythm, and specialization in early-stage AI application discovery rather than broad, undifferentiated tool catalogs. While competitors excel at different dimensions (Product Hunt at broad visibility, GitHub at code popularity, TAAFT at comprehensive coverage, Indie Hackers at community support), Hot100.ai uniquely combines automated evaluation with AI-builder ecosystem specialization.

Pricing and Access

Hot100.ai is completely free for both users and project submitters as of October 2025.

Free Discovery Platform: Browsing rankings, filtering by tools and categories, reviewing detailed technical specifications, and accessing historical data are all available at no cost and without registration.

Free Project Submission: Developers can submit projects for evaluation and ranking at no cost, with no subscription requirement or paywall. No submission limits are clearly documented.

No Premium Tiers: As of October 2025, no premium tiers, paid features, enhanced visibility options, or subscription models exist on the platform.

Sustainability Model Unclear: The platform’s long-term business model and revenue strategy are not publicly disclosed. Free access and free submissions without advertising raise questions about platform sustainability, ongoing development funding, and long-term operational viability.

Technical Architecture and Platform Details

Web-Based Interface: Accessible through a modern web browser at hot100.ai without requiring downloads, installations, or native applications.

Submission Infrastructure: Developers submit project metadata, descriptions, demo links, and technical specifications through a web form interface called “Launchpad.”

Evaluation Engine: The Flambo scoring system analyzes submissions using consistent, standardized prompts designed to minimize randomness and ensure reproducible evaluation across different submission times and evaluators.

Filtering and Categorization: Projects are filterable by AI development tool (Cursor, Bolt, v0, Replit, Lovable, Claude Code, etc.), AI model provider (OpenAI, Anthropic, Mistral, Google, etc.), and application category or use case.

Weekly Update Cycle: Rankings are compiled and published every Monday, with historical data preserved for longitudinal trend analysis and momentum tracking.

API Access Unknown: Whether programmatic API access to rankings data, project metadata, or historical trends is available or planned is not publicly documented as of October 2025.

Newsletter and Community Engagement: The platform provides a weekly newsletter summarizing trends in tools, models, and developer workflows based on submission patterns and ranking changes.
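To make the filtering and trend-tracking ideas above concrete, here is a small sketch that works on hand-written records. No public API is documented, so nothing here calls the platform; the record shape (`name`, `tools`, `providers`) and the sample projects are hypothetical.

```python
from collections import Counter


def filter_projects(projects, tool=None, provider=None):
    """Keep projects that use the given tool and/or model provider."""
    result = []
    for p in projects:
        if tool is not None and tool not in p["tools"]:
            continue
        if provider is not None and provider not in p["providers"]:
            continue
        result.append(p)
    return result


def tool_counts(week):
    """Count how many listed projects mention each development tool."""
    return Counter(t for p in week for t in p["tools"])


def adoption_change(prev_week, this_week):
    """Week-over-week change in the number of projects per tool."""
    prev, cur = tool_counts(prev_week), tool_counts(this_week)
    return {t: cur[t] - prev[t] for t in sorted(set(prev) | set(cur))}


# Hypothetical weekly snapshots of ranked projects.
week_1 = [
    {"name": "notes-bot", "tools": ["Cursor"], "providers": ["OpenAI"]},
    {"name": "form-gen", "tools": ["Bolt", "v0"], "providers": ["Anthropic"]},
]
week_2 = week_1 + [
    {"name": "doc-chat", "tools": ["Cursor", "v0"], "providers": ["Mistral"]},
]

print([p["name"] for p in filter_projects(week_2, tool="Cursor")])
print(adoption_change(week_1, week_2))
```

Comparing per-tool counts between two weekly snapshots is one simple way to read momentum from an archive of rankings; richer measures such as rank-weighted counts would need data the article does not describe.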

Launch Reception and Community Response

Hot100.ai’s October 29, 2025 Product Hunt launch was received positively, with 165 upvotes and 19 comments on launch day and the number 8 position out of approximately 200 launches that day. This indicated meaningful interest from builders and discovery-focused users in the AI development community.

Early feedback emphasized appreciation for merit-focused evaluation that addresses frustration with popularity-driven discovery channels, for automated consistency that reduces subjective bias, and for specialization in modern “vibe coding” development tools. The premise of a weekly “chart” specifically for AI-built projects resonated with a developer community seeking systematic discovery beyond social media algorithms.

Reddit discussions in communities like r/VibeCodersNest highlighted the challenge of surfacing quality AI projects among high-volume launches and Flambo’s approach to addressing this through standardized evaluation criteria.

Important Caveats and Realistic Assessment

Scoring Transparency Could Be Improved: While the platform describes evaluation criteria (innovation and utility), the specific weighting formulas, calculation methods, and threshold values are not fully disclosed publicly. Users must trust the methodology without complete algorithmic visibility, making it difficult to understand precisely why projects rank as they do.

Early-Stage Platform Risk: As an October 29, 2025 launch, the platform has limited operational history, unproven long-term reliability, and uncertain sustainability. The scoring methodology, ranking stability, and platform features remain unproven at scale and may change significantly.

Submission Gaming Potential: Despite human review, determined submitters could potentially game metrics, inflate technical descriptions, or misrepresent capabilities. Users should independently verify project claims, test functionality, and validate technical specifications before relying on tools for production use.

Tool Ecosystem Bias: Focus on specific modern tools (Cursor, Bolt, v0, Replit, Lovable, Claude Code) means excellent projects built with traditional development approaches, alternative AI tools, or non-“vibe coding” methods may be excluded or receive lower visibility despite quality.

Sustainability Questions Without Revenue Model: Free access and free submissions create genuine questions about long-term platform funding, ongoing maintenance, feature development, and operational sustainability. No disclosed business model raises viability concerns.

Data Accumulation Phase: Limited historical data (only weeks since launch) means trends and patterns may shift substantially as the platform grows, submission volume increases, and the ecosystem matures. Current observations may not predict long-term dynamics.

Verification Limitations: Lightweight human review cannot deeply verify technical claims, code quality, security implementations, or performance characteristics without resource-intensive auditing that would create submission bottlenecks.

Final Assessment

Hot100.ai addresses a practical and growing discovery challenge: identifying genuinely useful AI projects among thousands launched monthly in the rapidly expanding “vibe coding” ecosystem, particularly those representing cutting-edge development practices using modern AI-native tools like Cursor, Bolt, and v0. The platform’s emphasis on objective evaluation, technical transparency requirements, and merit-focused ranking differentiates it meaningfully from popularity-driven alternatives while providing a curated view of where AI application development is heading in the builder community.

The platform’s greatest strategic strengths are: a focused specialization on modern AI development tools, where innovation is concentrated, rather than diluted broad coverage; dual-axis evaluation that explicitly distinguishes technical innovation from practical utility, enabling nuanced project assessment; automated yet reproducible scoring that uses standardized prompts to reduce subjective bias; free access, which lowers barriers to discovery and submission and encourages broad participation; weekly consistency, which provides a predictable discovery rhythm and momentum tracking; and a historical archive that enables longitudinal analysis of tool adoption and ecosystem trends, valuable to multiple stakeholder types.

However, prospective users should approach with realistic expectations about the platform’s current maturity and inherent limitations. As an October 29, 2025 launch, Hot100.ai has a limited operational history, unproven sustainability with no disclosed business model, an early-stage dataset too small for confident trend prediction, an evaluation methodology that may still be evolving based on community feedback and operational learnings, and a dependency on accurate self-reporting without deep technical audits, which creates verification gaps.

Hot100.ai appears optimally positioned for developers building AI applications with modern “vibe coding” tools seeking visibility in curated discovery channels emphasizing merit, developers interested in understanding current trends and architectural best practices in AI-native application development, investors and analysts systematically tracking which tools and approaches gain measurable developer adoption rather than relying on anecdotal evidence, enthusiasts seeking high-quality AI projects beyond mainstream Product Hunt or social media visibility, researchers studying AI development patterns and tool ecosystem evolution through structured data, and learners exploring implementation approaches used by successful builders in the AI-native development space.

It may be less suitable for organizations seeking comprehensive AI tool directories with broad coverage across all development paradigms, teams requiring deep technical code audits and security assessments before tool adoption, users seeking projects built with specific tools outside the current “vibe coding” focus ecosystem, organizations uncomfortable with early-stage platforms and preferring established discovery channels with proven track records, teams needing guaranteed long-term platform stability for strategic planning purposes, or users requiring transparent algorithmic scoring details for understanding ranking methodology.

For developers building next-generation AI applications with modern tools like Cursor, Bolt, v0, and Claude Code, Hot100.ai provides a meaningful alternative to popularity-driven discovery channels, emphasizing technical merit and practical utility over social media dynamics. The platform’s long-term success depends on maintaining evaluation consistency as submission volume scales, growing the dataset enough to establish reliable trend patterns, preserving ranking stability and credibility over time, developing a sustainable business model that doesn’t compromise the free-access value proposition, and continuing to evolve the evaluation methodology based on ecosystem feedback while maintaining the scoring reproducibility that builders trust.