Overview

Packmind Open Source addresses a specific challenge in modern development: ensuring that AI-assisted coding remains consistent with organizational standards and architectural principles. Rather than treating AI code generation as autonomous, Packmind captures an organization’s established coding practices into a structured engineering playbook and distributes this context to AI coding assistants. This approach helps development teams scale AI-assisted coding while maintaining code quality, consistency, and compliance with established standards.

Key Features

Packmind OSS combines the following capabilities to enforce coding standards across AI-assisted development:

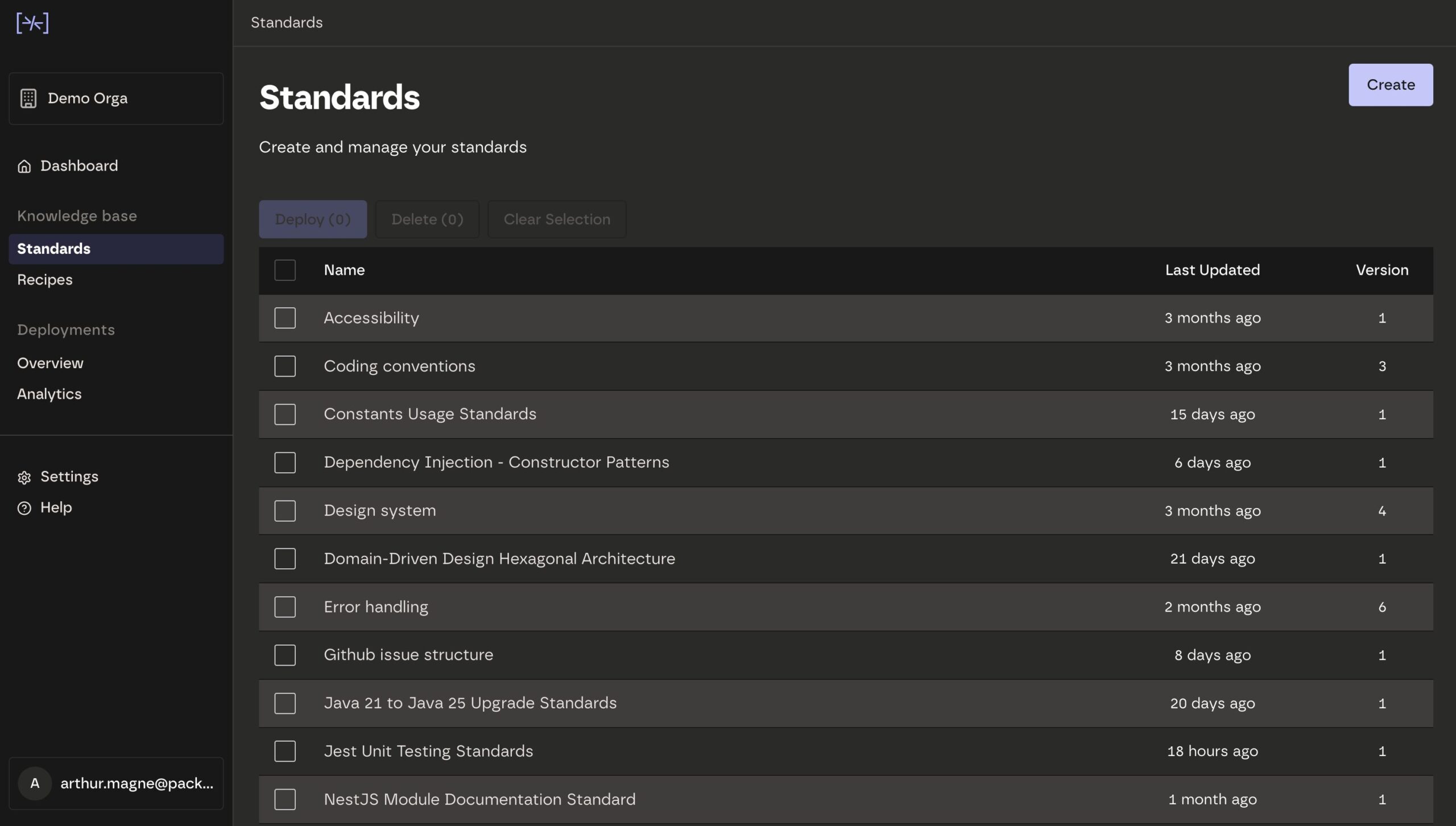

- Structured Engineering Playbook Creation: Automatically aggregate coding standards, architectural patterns, and best practices from existing documentation, code reviews, and team expertise into a versioned, machine-readable playbook.

- AI Assistant Integration and Alignment: Distribute the engineering playbook to AI coding assistants (GitHub Copilot, Cursor, Claude Code, and others) so these tools understand and follow your organization’s specific coding standards during code generation.

- Governance and Drift Detection: Monitor repositories for deviations from the established playbook, identify where standards aren’t being followed, and provide automated repair capabilities to bring code back into compliance.

- Open-Source Foundation with Enterprise Options: The core platform is released under the Apache 2.0 open-source license and supports unlimited users and repositories, with paid tiers adding advanced enforcement, SSO, RBAC, and automated repair workflows.

- Enterprise-Grade Security: SOC 2 Type II certification (since 2024) confirms rigorous security and compliance controls, with support for air-gapped deployments and integration with internal LLMs.

How It Works

Packmind OSS operates through a three-stage process designed to capture organizational knowledge and propagate it through development workflows. First, teams collaboratively define their engineering playbook by aggregating scattered standards from documentation, architectural decision records, code review discussions, and team expertise. Packmind automatically surfaces these patterns and structures them into versioned standards.
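To make the idea of a versioned playbook concrete, here is a minimal sketch in Python. It is not Packmind's actual data model: the class names `Standard` and `Playbook`, their fields, and the version-bump behaviour are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Standard:
    """One coding standard. All field names are hypothetical."""
    identifier: str
    title: str
    rationale: str
    source: str  # where the practice came from, e.g. "ADR" or "code review"


@dataclass
class Playbook:
    """A versioned collection of standards (illustrative only)."""
    version: int = 1
    standards: dict[str, Standard] = field(default_factory=dict)

    def upsert(self, standard: Standard) -> None:
        # Bump the version only when the stored content actually changes,
        # so consumers can tell two playbook revisions apart.
        if self.standards.get(standard.identifier) != standard:
            self.standards[standard.identifier] = standard
            self.version += 1


playbook = Playbook()
playbook.upsert(Standard("no-print", "Use the logging module instead of print",
                         "Keeps output structured", "code review"))
playbook.upsert(Standard("adr-007", "Access the database only through repositories",
                         "Preserves layering", "ADR"))
```

Keeping each standard immutable and bumping a single version counter is one simple way to model "versioned standards"; a real system would likely track history and authorship as well.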

Second, this playbook is distributed to both AI coding assistants (through MCP server integration or direct API connection) and your code repositories (via pre-commit hooks and CI/CD integration). AI tools receive the playbook as optimized context, enabling them to generate code aligned with your standards. Repository guardrails automatically check for compliance.
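The distribution step can be pictured as turning playbook entries into plain-text context that an assistant reads before generating code. The sketch below assumes a hypothetical `render_context` function and output layout; it does not model the MCP server, the API connection, or the pre-commit and CI/CD integrations, and it is not Packmind's real format.

```python
def render_context(version: int, standards: list[tuple[str, str]]) -> str:
    """Render (identifier, title) pairs as a short instruction block.

    The heading and bullet layout are assumptions made for illustration.
    """
    lines = [
        f"# Engineering playbook v{version}",
        "Follow these standards when generating code:",
    ]
    for identifier, title in standards:
        lines.append(f"- [{identifier}] {title}")
    return "\n".join(lines)


context = render_context(
    3,
    [
        ("no-print", "Use the logging module instead of print"),
        ("adr-007", "Access the database only through repositories"),
    ],
)
print(context)
```

Including the playbook version in the rendered text makes it easier to trace which revision of the standards a given piece of generated code was written against.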

Third, Packmind continuously monitors for deviations. When code doesn’t match the established standards, the system identifies the drift, flags it during code review, and can automatically rewrite non-compliant code to match requirements. This turns standards enforcement from a manual review responsibility into automated, continuous validation.
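As a rough illustration of drift detection, the sketch below checks source text line by line against regex-based rules and reports each violation with its line number. The `Rule` and `Violation` types and the `find_drift` function are hypothetical. Regex matching is a simplification chosen for brevity, not a description of how Packmind detects drift, and automated repair is not shown.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    identifier: str
    pattern: str  # regex that matches a violation within a single line
    message: str


@dataclass(frozen=True)
class Violation:
    rule_id: str
    line_number: int
    message: str


def find_drift(source: str, rules: list[Rule]) -> list[Violation]:
    """Return one Violation per (line, rule) pair whose pattern matches."""
    violations = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for rule in rules:
            if re.search(rule.pattern, line):
                violations.append(
                    Violation(rule.identifier, line_number, rule.message)
                )
    return violations


RULES = [Rule("no-print", r"\bprint\(", "Use the logging module instead of print")]
SAMPLE = "import logging\nprint('debug')\nlogging.info('ok')\n"

drift = find_drift(SAMPLE, RULES)
for violation in drift:
    print(f"line {violation.line_number}: [{violation.rule_id}] {violation.message}")
```

A check like this could run in a pre-commit hook or CI job and fail the build when the returned list is non-empty.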

Use Cases

Packmind OSS addresses several development challenges where AI adoption creates risks:

- Governing AI-Generated Code at Scale: Rapidly scale AI-assisted coding across teams while maintaining architectural integrity and preventing inconsistent patterns that accumulate technical debt. The playbook ensures AI tools generate code aligned with organizational standards from the outset.

- Onboarding and Knowledge Preservation: Accelerate new developer onboarding by encoding experienced developers’ knowledge into executable standards. Capture institutional coding practices before those developers leave, preventing knowledge loss and maintaining consistency across team transitions.

- Compliance and Regulatory Adherence: Enforce security practices, architectural patterns, and compliance requirements automatically across all code (AI-generated and human-written). Provide audit trails demonstrating standards application for regulated industries.

- Code Review Efficiency: Streamline code review by automatically flagging and fixing standards violations before review, allowing human reviewers to focus on logic, architecture, and complex design decisions rather than routine compliance checks.

Pros & Cons

Advantages

Packmind OSS offers meaningful benefits for organizations scaling AI-assisted development:

- Transforms fragmented knowledge into enforced standards: Converts scattered coding knowledge and practices into machine-executable standards that guide AI and humans consistently.

- Reduces code review overhead: Automatically identifies and fixes standard violations before human review, enabling reviewers to focus on higher-level concerns.

- Scales standards without cultural overhead: Eliminates reliance on manual enforcement and individual expertise, making standards application consistent across large teams.

- Open-source foundation with enterprise optionality: Free core platform with transparent codebase enables customization and community contribution while paid tiers provide advanced governance features for enterprises.

Disadvantages

While effective for its specific focus, Packmind OSS has meaningful limitations:

- Requires initial playbook definition work: Implementing the system demands upfront effort to codify existing practices into structured standards. Organizations with undefined or inconsistent standards may struggle with this step.

- Advanced governance features in paid tier: While the core platform is open source, comprehensive enforcement, automated repair, SSO, RBAC, and other enterprise features require a paid subscription.

- Organization-specific context required: Packmind injects organizational standards into AI workflows, which requires a clear definition of your organization’s engineering practices. Teams with minimal documented standards may see limited value.

- Different category from traditional linters: Unlike ESLint or SonarQube, Packmind focuses on distributing organizational context to AI assistants rather than catching errors in completed code. The two approaches are complementary but serve different purposes.

How Does It Compare?

The code quality and governance landscape includes several distinct categories of tools, each addressing different aspects of development consistency.

ESLint, SonarLint, and traditional linters excel at detecting code smells, style violations, and potential bugs in completed code through static analysis. These tools are primarily reactive: they identify problems after code is written. Packmind differs fundamentally by being proactive: it distributes standards to AI assistants during code generation, preventing inconsistent patterns before they’re written. Linters remain valuable for human-written code and for catching issues that upstream guidance misses, but they don’t address the specific challenge of AI-assisted code alignment.

Codiga combines static analysis with customizable rule definitions and team-wide rule sharing, operating similarly to ESLint but with additional organizational enforcement. Like traditional linters, Codiga analyzes completed code. Packmind’s focus on distributing context to AI assistants pre-generation represents a different approach, though both can coexist in a development workflow.

CodeRabbit and GitHub Advanced Security focus on AI-powered code review during pull requests, analyzing AI-generated and human-written code to identify quality issues and vulnerabilities. These tools specialize in post-generation analysis and review automation. Packmind’s upstream approach (ensuring AI generates compliant code from the start) addresses a complementary problem and reduces the volume of violations that CodeRabbit and similar tools need to catch.

GitHub Advanced Security and Dependabot handle security scanning, dependency management, and vulnerability identification within the GitHub ecosystem. Packmind complements these tools by ensuring AI-generated code aligns with organizational security and architectural standards before reaching the scanning stage.

CodeRabbit and similar AI code reviewers use AI agents to analyze entire codebases and suggest improvements during pull request reviews. While these tools provide intelligent feedback on code quality, they don’t distribute organizational playbooks to guide AI assistants during initial code generation.

Packmind’s distinctive positioning centers on upstream standards distribution to AI assistants rather than post-generation analysis. It bridges the gap between human expertise (captured in an engineering playbook) and AI code generation, ensuring consistency at the source. Organizations adopting AI-assisted coding without Packmind often find their codebases drift as different AI assistants generate code from different training contexts. Packmind injects organizational context into that process.

The tool category is evolving rapidly. In 2025, GitHub Advanced Security introduced AI-powered automatic fixes and enhanced scanning, while CodeRabbit expanded to include CLI support and MCP integration. Which tool fits best increasingly depends on an organization’s specific needs: teams prioritizing upstream standards application and AI alignment benefit from Packmind, while teams focusing on post-generation analysis and review automation may prioritize CodeRabbit or GitHub Advanced Security.

Final Thoughts

Packmind Open Source addresses a genuine problem in modern development: the challenge of maintaining code consistency and quality as AI-assisted coding accelerates. By capturing organizational practices into a distributable playbook and injecting this context into AI assistants, it transforms how standards enforcement works, moving from reactive review to proactive guidance.

The platform works best for organizations with clearly defined coding standards and a commitment to ensuring AI adoption maintains architectural integrity. Teams already using tools like ESLint or GitHub Advanced Security should view Packmind as a complement rather than a replacement: the tools address different stages of the development lifecycle.

For organizations grappling with rapid AI adoption while concerned about code drift and consistency, Packmind OSS provides a practical foundation. The open-source model makes the core platform accessible immediately, while paid enterprise features accommodate larger teams requiring advanced governance. If maintaining standards across AI-assisted coding at organizational scale is a priority, Packmind merits serious evaluation.