
Overview

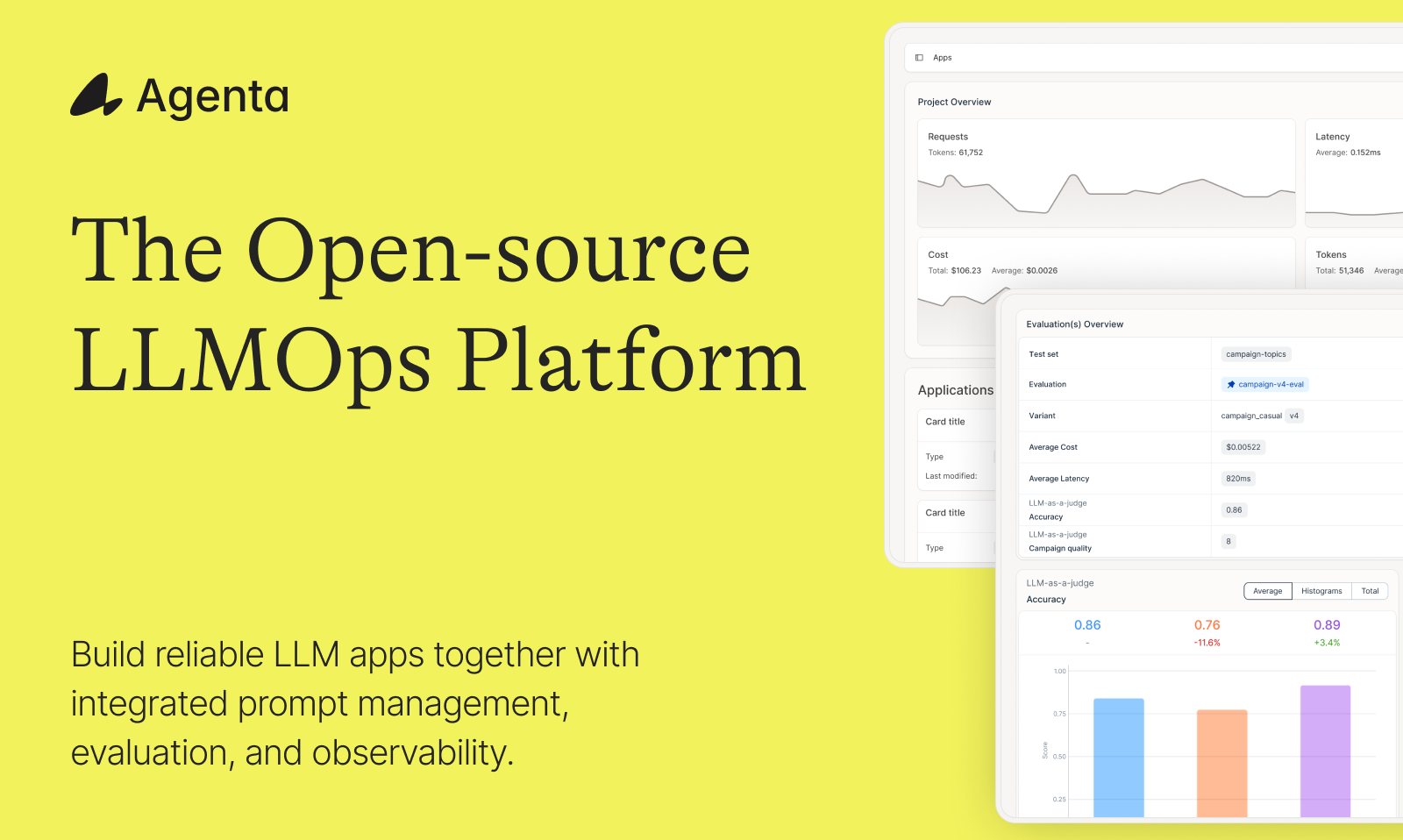

Building AI applications that are not only clever but also consistently reliable is one of the biggest challenges in the industry today. Agenta is an open-source LLMOps platform designed to tackle this problem directly. Unlike generic monitoring tools, it focuses on the iterative development cycle, providing tools to manage prompts, run systematic evaluations, and debug complex LLM behavior. By supporting collaboration between developers and domain experts, Agenta helps teams ship high-quality LLM applications faster and with greater confidence.

Key Features

Agenta packs a robust feature set aimed at streamlining the entire lifecycle of an LLM application. Here are the highlights:

- Prompt Management & Playground: Keep a clean, version-controlled history of all your prompts. The interactive playground lets you test prompts side by side across 50+ models (from providers such as OpenAI and Anthropic, as well as local models) without changing code.

- Systematic Evaluation: Move beyond anecdotal testing. Agenta lets you run prompts against defined test sets (“golden datasets”) and score the results with both automated evaluators (e.g., RAGAS-style RAG metrics or correctness checks) and human review, so performance is measured consistently rather than by impression.

- Observability & Tracing: When things go wrong, you need to know why. The platform’s OpenTelemetry-native tracing gives you deep visibility into your LLM chains and RAG pipelines, making it easier to pinpoint latency bottlenecks or the sources of hallucinations.

- Human-in-the-Loop Collaboration: AI quality isn’t just a coding problem. Agenta includes a user-friendly interface where product managers and subject matter experts can annotate outputs, compare variations, and refine prompts without needing to touch the codebase.

- Open-Source & Vendor Neutral: As an open-source platform, Agenta offers full transparency and control. You can self-host it for data privacy or use the managed cloud version, avoiding the lock-in typical of closed-source platforms such as LangSmith.
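
To make the “Systematic Evaluation” idea concrete, here is a minimal, framework-free sketch of what running one prompt variant against a golden test set involves. It does not use Agenta’s SDK: `EvalCase`, `evaluate`, the `fake_llm` stand-in, and the case-insensitive exact-match scorer are hypothetical placeholders for a real model call and for the evaluators Agenta actually provides.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One row of a golden dataset: an input and its expected answer."""
    question: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Return 1.0 if output equals expected, ignoring case and surrounding whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(app: Callable[[str], str], test_set: List[EvalCase],
             scorer: Callable[[str, str], float] = exact_match) -> float:
    """Run the app on every test case and return the mean score."""
    if not test_set:
        raise ValueError("test set is empty")
    scores = [scorer(app(case.question), case.expected) for case in test_set]
    return sum(scores) / len(scores)


# Hypothetical stand-in for a prompt variant; a real app would call a model API here.
def fake_llm(question: str) -> str:
    answers = {"capital of france?": "Paris", "2 + 2?": "4"}
    return answers.get(question.lower(), "I don't know")


golden_set = [
    EvalCase("Capital of France?", "paris"),
    EvalCase("2 + 2?", "4"),
    EvalCase("Largest planet?", "Jupiter"),
]

print(f"accuracy: {evaluate(fake_llm, golden_set):.2f}")  # → accuracy: 0.67
```

Swapping `exact_match` for another scorer (for example, an LLM-as-a-judge call) changes what is measured without touching the evaluation loop, which is what makes scores from different prompt variants on the same test set comparable.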

How It Works

The workflow follows a “Prompt Engineering as Code” philosophy. Developers start by integrating the lightweight Agenta Python SDK into their application, whether it is built with LangChain, LlamaIndex, or plain Python. Once integrated, the application code serves as the backend, while the Agenta UI becomes the control center.
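
Part of what an instrumenting SDK adds to your code is tracing: each decorated function call becomes a span with a parent and a duration. The sketch below imitates that idea using only the standard library and is not Agenta’s API; the `traced` decorator, the `SPANS` list, and the `retrieve`/`generate`/`answer` pipeline are hypothetical, and a real setup would export OpenTelemetry spans to a backend instead of appending to a list.

```python
import contextvars
import functools
import time
from typing import Any, Callable, Dict, List

# Finished spans; a real SDK would export these to a tracing backend.
SPANS: List[Dict[str, Any]] = []
# Name of the span currently executing, used to link children to parents.
_current_span = contextvars.ContextVar("current_span", default=None)


def traced(func: Callable) -> Callable:
    """Record each call of `func` as a span with its parent and duration."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        parent = _current_span.get()
        token = _current_span.set(func.__name__)
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            SPANS.append({
                "name": func.__name__,
                "parent": parent,
                "duration_ms": (time.perf_counter() - start) * 1000,
            })
            _current_span.reset(token)  # restore the caller's span

    return wrapper


@traced
def retrieve(query: str) -> List[str]:
    time.sleep(0.05)  # simulate a slow vector-store lookup
    return ["doc-1", "doc-2"]


@traced
def generate(query: str, docs: List[str]) -> str:
    return f"Answer to {query!r} using {len(docs)} documents"


@traced
def answer(query: str) -> str:
    return generate(query, retrieve(query))


answer("What is Agenta?")
for span in SPANS:  # spans are listed in the order they finished
    print(f"{span['name']:<10} parent={span['parent']!s:<8} {span['duration_ms']:.1f} ms")
```

In the printed trace, the `retrieve` span accounts for most of `answer`’s duration, which is the kind of latency bottleneck a real tracing view makes visible.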

From the UI, team members can tweak prompts and parameters (such as temperature) and immediately deploy the new configuration as an API endpoint, without redeploying the core application. They can then run batch evaluations against these configurations to score them on criteria such as accuracy or tone. Once a version has proven to be the best performer, it can be promoted to production with a single click.
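
The decoupling described above comes down to the application looking up its prompt and parameters at request time instead of hard-coding them. The following in-memory sketch models that with immutable config versions and named environments. `PromptConfig`, `ConfigRegistry`, `commit`, `deploy`, and `fetch` are hypothetical names, not Agenta’s SDK, and a real deployment would keep this state on a server and serve it over an API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class PromptConfig:
    """An immutable prompt configuration (hypothetical fields)."""
    template: str
    model: str
    temperature: float


@dataclass
class ConfigRegistry:
    """Stores config versions and maps environment names to version numbers."""
    versions: List[PromptConfig] = field(default_factory=list)
    environments: Dict[str, int] = field(default_factory=dict)

    def commit(self, config: PromptConfig) -> int:
        """Save a new version and return its 1-based version number."""
        self.versions.append(config)
        return len(self.versions)

    def deploy(self, version: int, environment: str) -> None:
        """Point an environment (e.g. 'staging', 'production') at a version."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.environments[environment] = version

    def fetch(self, environment: str) -> PromptConfig:
        """What the running application calls at request time."""
        return self.versions[self.environments[environment] - 1]


registry = ConfigRegistry()
v1 = registry.commit(PromptConfig("Summarize: {text}", "gpt-4o-mini", 0.7))
v2 = registry.commit(PromptConfig("Summarize in one sentence: {text}", "gpt-4o-mini", 0.2))

registry.deploy(v1, "production")
registry.deploy(v2, "staging")     # evaluate v2 in staging first
registry.deploy(v2, "production")  # then promote it; application code is unchanged

config = registry.fetch("production")
print(config.template.format(text="Agenta is an open-source LLMOps platform."))
```

Because versions are immutable and environments are only pointers, promoting or rolling back is a single reassignment, which is the property behind one-click promotion.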

Use Cases

This streamlined process enables a variety of powerful workflows for teams building with LLMs:

- A/B Testing Prompts: Systematically compare the performance of “Chain-of-Thought” vs. “Zero-Shot” prompting strategies on your specific dataset to find the winner.

- Regression Testing: Set up a continuous evaluation pipeline (CI/CD for AI) to catch cases where a model upgrade (e.g., from GPT-4o to GPT-5) degrades your chatbot’s accuracy.

- RAG Optimization: Use tracing to debug your retrieval step—analyzing exactly which documents were retrieved and how they influenced the final answer.

- Non-Technical Collaboration: Allow domain experts (e.g., legal or medical professionals) to review and “grade” AI outputs directly in the UI to ensure domain accuracy.
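
The A/B-testing and regression-testing use cases both reduce to comparing two variants’ scores on the same test set. Below is a minimal sketch of a CI-style gate under stated assumptions: per-case scores in [0, 1] have already been produced by an evaluator, and the candidate fails if its mean score falls more than a tolerance (the hypothetical `max_drop`, here 0.02) below the baseline’s. The function names, threshold, and scores are illustrative and not part of Agenta.

```python
import sys
from statistics import mean
from typing import List


def compare_variants(baseline: List[float], candidate: List[float]) -> dict:
    """Summarize paired per-case scores of two variants on the same test set."""
    if len(baseline) != len(candidate) or not baseline:
        raise ValueError("need equal-length, non-empty score lists")
    wins = sum(c > b for b, c in zip(baseline, candidate))
    losses = sum(c < b for b, c in zip(baseline, candidate))
    return {
        "baseline_mean": mean(baseline),
        "candidate_mean": mean(candidate),
        "wins": wins,      # cases where the candidate scored higher
        "losses": losses,  # cases where the candidate scored lower
    }


def regression_gate(baseline: List[float], candidate: List[float],
                    max_drop: float = 0.02) -> bool:
    """True if the candidate's mean is no more than max_drop below the baseline's."""
    summary = compare_variants(baseline, candidate)
    return summary["candidate_mean"] >= summary["baseline_mean"] - max_drop


# Hypothetical per-case scores (1.0 = correct) from an earlier evaluation run.
zero_shot = [1.0, 0.0, 1.0, 1.0, 0.0]
chain_of_thought = [1.0, 1.0, 1.0, 0.0, 1.0]

print(compare_variants(zero_shot, chain_of_thought))
if not regression_gate(zero_shot, chain_of_thought):
    sys.exit("Regression detected: candidate scores below baseline")
```

Reporting wins and losses alongside the means helps spot a candidate that improves on average while breaking specific cases, which a mean alone would hide.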

Pros & Cons

Like any tool, Agenta has both strengths and weaknesses.

Advantages

- Open-Source Freedom: Unlike LangSmith, which is closed-source and offers self-hosting only on its enterprise plan, Agenta lets you self-host the entire open-source stack, making it a good fit for organizations with strict data-privacy requirements (e.g., GDPR or HIPAA).

- Evaluation-First Mindset: While many other tools focus on monitoring after deployment, Agenta excels in the pre-deployment optimization phase.

- Decoupled Prompts: It cleanly separates prompt logic from application code, allowing PMs to iterate on prompt wording without blocking developers.

Disadvantages

- Self-Hosting Overhead: Running the self-hosted Docker/Kubernetes deployment requires DevOps resources that a fully managed SaaS would not.

- Smaller Ecosystem: Compared to the massive LangChain ecosystem, Agenta’s community and third-party plugin library are still growing.

How Does It Compare?

In the crowded LLMOps landscape, Agenta carves out a niche focused on developer experience and open standards.

- Vs. LangSmith:

  - LangSmith is a polished, closed-source SaaS optimized for the LangChain ecosystem.

  - Agenta is open-source and framework-agnostic. Choose Agenta if you need self-hosting or want to avoid vendor lock-in to LangChain’s commercial tooling.

- Vs. Arize Phoenix:

  - Phoenix is excellent for deep observability and visualizing embedding clusters (UMAP).

  - Agenta focuses more on prompt management and versioning. Use Phoenix for deep debugging of retrievals; use Agenta for managing the prompt engineering lifecycle.

- Vs. Helicone:

  - Helicone is primarily a “Gateway” for caching, logging, and cost tracking.

  - Agenta is a “Development Platform.” You might use Helicone to lower your API bills, but you would use Agenta to actually improve the quality of your AI’s responses.

Final Thoughts

Agenta is a compelling choice for teams serious about treating “Prompt Engineering” as an engineering discipline rather than a guessing game. Its open-source foundation, combined with a sharp focus on evaluation and collaboration, addresses the “black box” problem of LLM development. If your team needs a reliable way to iterate on prompts without getting bogged down in spreadsheet-based testing, Agenta offers the structured workflow you’ve been missing.