
Overview

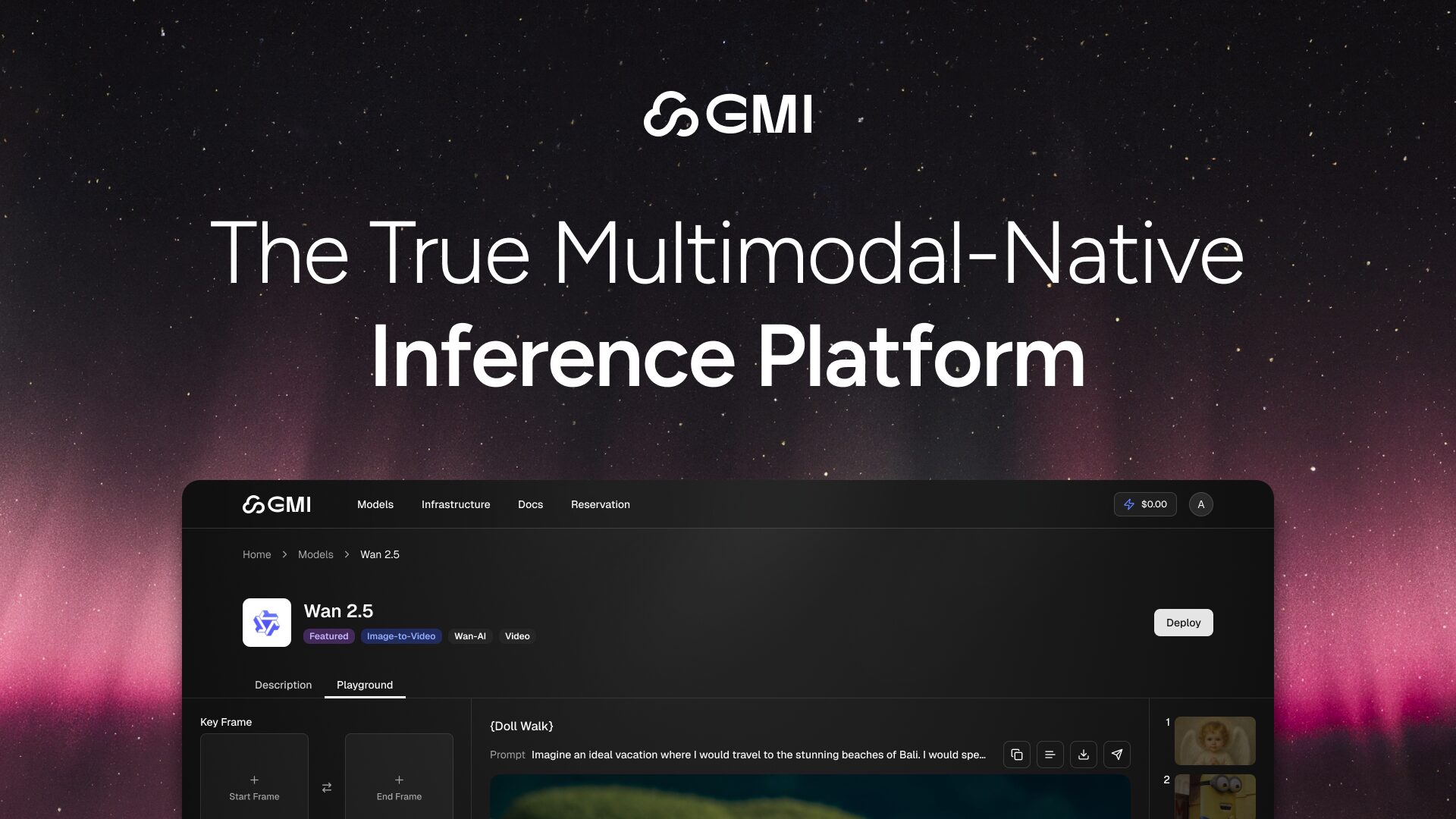

In the rapidly evolving landscape of artificial intelligence, processing multiple data types simultaneously has moved from an experimental capability to a fundamental requirement for production-grade applications. The GMI Inference Engine is a multimodal-native inference platform designed to streamline how AI models process text, images, video, and audio on unified infrastructure. By eliminating the need to maintain separate pipelines for each data modality, GMI Cloud aims to deliver enterprise-grade scaling, comprehensive observability, robust model versioning, and optimized inference performance for real-time multimodal applications.

Key Features

The GMI Inference Engine provides a focused set of capabilities designed for demanding AI inference workloads:

Unified Multimodal Pipeline: Process text, image, video, and audio data within a single cohesive infrastructure, reducing operational complexity and improving resource efficiency across diverse content types.

Optimized Inference Performance: The platform aims to increase processing speed through software and hardware co-optimization techniques, including quantization and speculative decoding. According to GMI Cloud’s Inference Engine 2.0 announcement in November 2025, the platform achieves up to 1.46 times faster inference, 25 to 49 percent higher throughput with advanced caching and elastic scaling, and 30 percent lower costs compared to baseline configurations.
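Quantization, one of the techniques named above, stores weights at lower precision so each token requires less memory traffic. The announcement does not describe GMI's scheme, so the sketch below shows only textbook symmetric per-tensor int8 quantization in plain Python, as an illustration of the idea rather than the platform's implementation.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each weight w is stored as q with w ≈ scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; fall back to scale 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [scale * v for v in q]

if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9, -0.5]
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    # Rounding error per weight is at most scale / 2.
    print(q, scale, max(abs(a - b) for a, b in zip(w, w_hat)))
```

Each weight now occupies one byte instead of two (FP16) or four (FP32). Production engines typically use finer-grained scales (per channel or per group) and calibrated activation quantization to limit accuracy loss.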

H100 and H200 GPU Access: Leverage NVIDIA H100 and H200 GPUs for high-performance computational workloads. The H200 features 141 GB of HBM3e memory with 4.8 TB/s of bandwidth, a substantial improvement for large-model inference over the H100 SXM’s 80 GB of HBM3 with 3.35 TB/s.
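These memory figures matter because single-stream autoregressive decoding is usually memory-bandwidth bound: each generated token requires reading roughly all model weights from HBM. A common rule of thumb is therefore tokens per second ≤ bandwidth / weight bytes. The sketch below applies that rule to the quoted specifications for a hypothetical 70-billion-parameter model; it ignores KV-cache traffic, batching, and compute limits, so treat the results as rough ceilings.

```python
# Rule-of-thumb ceiling for batch-size-1 decode: tokens/s <= bandwidth / bytes read per token.
GPUS = {
    "H100": {"hbm_gb": 80, "bandwidth_tb_s": 3.35},
    "H200": {"hbm_gb": 141, "bandwidth_tb_s": 4.8},
}

def weight_bytes_gb(params_billion: float, bytes_per_param: float) -> float:
    return params_billion * bytes_per_param  # 1e9 params * bytes / 1e9 = GB

def decode_ceiling_tokens_per_s(bandwidth_tb_s: float, weights_gb: float) -> float:
    return bandwidth_tb_s * 1000.0 / weights_gb  # convert TB/s to GB/s

def summarize(params_billion: float = 70, bytes_per_param: float = 1.0) -> dict:
    w = weight_bytes_gb(params_billion, bytes_per_param)
    out = {}
    for name, spec in GPUS.items():
        out[name] = {
            "fits_on_one_gpu": w < spec["hbm_gb"],
            "headroom_gb": spec["hbm_gb"] - w,  # left over for KV cache and activations
            "ceiling_tok_s": decode_ceiling_tokens_per_s(spec["bandwidth_tb_s"], w),
        }
    return out

if __name__ == "__main__":
    for name, row in summarize(70, 1.0).items():  # hypothetical 70B model at 8 bits
        print(name, row)
```

At 8 bits per parameter the ceiling ratio between the two GPUs equals their bandwidth ratio (about 1.43×). At FP16 (`bytes_per_param=2.0`) the same model needs about 140 GB, which exceeds a single H100 and leaves almost no room for the KV cache on an H200.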

Enterprise Observability and Management: Gain operational insights through comprehensive monitoring, logging capabilities, and intelligent auto-scaling that dynamically adapts to workload demands without manual intervention, ensuring consistent performance and resource optimization.
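GMI Cloud does not publish its scaling algorithm. As a generic illustration of what adapting "to workload demands without manual intervention" usually involves, the sketch below implements a target-tracking rule similar in spirit to the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current × observed / target), skipped inside a tolerance band and clamped to bounds. The metric (in-flight requests per replica) and every threshold here are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class ScalingPolicy:
    target_per_replica: float = 8.0  # hypothetical target: in-flight requests per replica
    tolerance: float = 0.1           # ignore deviations within ±10% to avoid flapping
    min_replicas: int = 1
    max_replicas: int = 64

def desired_replicas(current: int, observed_per_replica: float, p: ScalingPolicy) -> int:
    """Target-tracking rule: scale the replica count by observed/target, then clamp."""
    ratio = observed_per_replica / p.target_per_replica
    if abs(ratio - 1.0) <= p.tolerance:
        return current  # close enough to target: leave capacity unchanged
    proposed = math.ceil(current * ratio)
    return max(p.min_replicas, min(p.max_replicas, proposed))

if __name__ == "__main__":
    policy = ScalingPolicy()
    print(desired_replicas(4, 20.0, policy))    # traffic spike: scale out  -> 10
    print(desired_replicas(10, 2.0, policy))    # quiet period: scale in    -> 3
    print(desired_replicas(4, 8.5, policy))     # within tolerance          -> 4
    print(desired_replicas(40, 100.0, policy))  # capped at max_replicas    -> 64
```

Real autoscalers add stabilization windows and cooldowns on top of this rule, and for GPU inference the slow part is often model loading, which is why cold-start time matters as much as the scaling decision itself.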

How It Works

The GMI Inference Engine optimizes model serving infrastructure through an architecture engineered for multimodal workloads. The platform handles diverse data modalities concurrently by distributing computational tasks across high-performance GPUs. Its three-layer scheduling architecture supports global resource deployment and addresses cross-region and cross-cluster automatic scaling, a challenge particularly relevant for applications serving international user bases with varying traffic patterns.
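The source does not say how the three scheduling layers divide responsibility. One common reading of such a design is a region → cluster → node hierarchy in which each layer picks a child and delegates downward. The sketch below assumes that structure and uses simple, hypothetical selection rules: nearest region with capacity, then least-utilized cluster, then the node with the most free GPU memory. It is not GMI's scheduler.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Node:
    name: str
    total_gb: float  # GPU memory on the node
    free_gb: float   # GPU memory not yet reserved

@dataclass
class Cluster:
    name: str
    nodes: List[Node]

    def utilization(self) -> float:
        total = sum(n.total_gb for n in self.nodes)
        free = sum(n.free_gb for n in self.nodes)
        return 1.0 - free / total if total else 1.0

@dataclass
class Region:
    name: str
    latency_ms: float  # hypothetical client-to-region latency
    clusters: List[Cluster]

def place(regions: List[Region], need_gb: float) -> Optional[Tuple[str, str, str]]:
    """Three-layer placement: region -> cluster -> node. Returns names, or None if full."""
    # Layer 1 (global): try regions from nearest to farthest.
    for region in sorted(regions, key=lambda r: r.latency_ms):
        # Layer 2 (regional): try the least-utilized cluster first.
        for cluster in sorted(region.clusters, key=lambda c: c.utilization()):
            # Layer 3 (cluster): choose the node with the most free memory that fits.
            fitting = [n for n in cluster.nodes if n.free_gb >= need_gb]
            if fitting:
                node = max(fitting, key=lambda n: n.free_gb)
                node.free_gb -= need_gb  # reserve capacity for this replica
                return region.name, cluster.name, node.name
    return None  # no capacity anywhere: a real system would queue or scale out

if __name__ == "__main__":
    regions = [
        Region("us-east", 20.0, [Cluster("us-a", [Node("n1", 80, 10), Node("n2", 80, 5)])]),
        Region("eu-west", 90.0, [Cluster("eu-a", [Node("n3", 141, 100)])]),
    ]
    print(place(regions, 8))   # fits in the nearest region
    print(place(regions, 30))  # spills over to the farther region
```

The appeal of a hierarchy is that each layer only reasons about its direct children, so a global decision (which region) does not require scanning every node worldwide.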

The system employs both queue-based and load-balancing scheduling methods to manage workloads efficiently. End-to-end optimizations spanning software and hardware layers work together to improve serving speed while reducing compute costs at scale. The platform’s intelligent auto-scaling maintains stable throughput and ultra-low latency by adapting in real time to demand fluctuations.
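The text names queue-based and load-balancing scheduling without detailing either. In generic terms, load balancing pushes each request to a replica at arrival, while a queue-based scheduler holds requests centrally and lets workers pull the next one when free. The sketch below simulates both for requests that all arrive at once and whose durations (for example, output lengths) are unknown at dispatch time; it illustrates the trade-off and is not GMI's scheduler.

```python
import heapq
from collections import deque
from typing import List

def push_least_outstanding(costs: List[float], replicas: int) -> float:
    """Load balancing (push): route each request at arrival to the replica with the fewest
    outstanding requests. Durations are unknown at dispatch, so only counts are used.
    All requests arrive at t=0; returns the makespan."""
    counts = [0] * replicas
    busy_until = [0.0] * replicas
    for c in costs:
        r = min(range(replicas), key=lambda i: counts[i])  # ties go to the lowest id
        counts[r] += 1
        busy_until[r] += c
    return max(busy_until)

def pull_shared_queue(costs: List[float], workers: int) -> float:
    """Queue-based (pull): requests wait in one FIFO queue and each worker takes the next
    request as soon as it becomes free. All requests arrive at t=0; returns the makespan."""
    queue = deque(costs)
    free_at = [(0.0, w) for w in range(workers)]  # (time the worker becomes free, worker id)
    heapq.heapify(free_at)
    makespan = 0.0
    while queue:
        cost = queue.popleft()
        t, w = heapq.heappop(free_at)
        makespan = max(makespan, t + cost)
        heapq.heappush(free_at, (t + cost, w))
    return makespan

if __name__ == "__main__":
    costs = [10.0, 1.0, 1.0, 1.0]  # one long generation followed by short ones
    print(push_least_outstanding(costs, 2), pull_shared_queue(costs, 2))  # 11.0 10.0
```

With one long request followed by short ones on two replicas, the count-based push policy stacks a short request behind the long one and finishes at t=11, while the shared queue lets the idle worker absorb all short requests and finishes at t=10. Push balancing avoids a central queue, which is why real systems often combine the two.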

Use Cases

The GMI Inference Engine targets specific high-performance AI deployment scenarios:

Real-time Voice and Video AI Applications: Develop interactive applications requiring low-latency processing of spoken language and visual cues, such as conversational AI assistants, video analysis systems, and multimodal content moderation.

Enterprise-Scale Model Serving: Deploy and manage complex multimodal models across large organizations with confidence in scalability, performance consistency, and cost management for production workloads.

High-Throughput Content Generation: Execute content creation or insight generation at significant scale, suitable for media production pipelines, large-scale data analysis, and automated content workflows requiring consistent performance.

Pros and Cons

Advantages

Performance Optimization for Multimodal Workloads: The unified pipeline architecture specifically addresses the complexity of managing multiple data types, reducing integration overhead and operational burden compared to maintaining separate specialized systems.

Simplified Infrastructure Management: A centralized dashboard for managing bare metal resources, containers, firewalls, and elastic IPs streamlines deployment and monitoring in configurations ranging from a single node to multiple regions.

Automatic Scaling Capabilities: Built-in elastic scaling across clusters and regions addresses peak traffic challenges and global deployment requirements without manual resource provisioning.

Competitive GPU Pricing: GMI Cloud reports cost reductions for customers, citing examples of 45 percent lower compute costs and a 65 percent reduction in inference latency compared to traditional providers.

Disadvantages

Specialized Focus May Not Suit All Workloads: For applications requiring only text-based inference or simple single-modality processing, the advanced multimodal capabilities may represent unnecessary complexity and potential cost overhead.

Infrastructure Expertise Beneficial: While the platform aims to simplify deployment, fully leveraging advanced features such as multi-region orchestration, model versioning, and observability tools benefits from a solid understanding of cloud infrastructure and AI deployment practices.

Limited Public Operational History: As a relatively recent offering, with Inference Engine 2.0 launched in November 2025, the platform has few long-term operational examples and little community feedback compared to more established platforms.

Pricing Transparency: Public documentation offers limited detail on pricing and cost structures, so comprehensive cost planning requires direct consultation with GMI Cloud.

How Does It Compare?

The AI inference platform landscape in late 2025 has become highly specialized and competitive. Understanding where GMI Inference Engine fits requires examining multiple categories of providers, each with distinct strengths and target use cases.

Specialized Inference Platforms

Together AI

Together AI positions itself as the AI Native Cloud, focusing on reliable building, deployment, and scaling of AI-native applications. The platform supports over 200 open-source large language models with sub-100ms latency and automated optimization.

Key differentiators include the ATLAS adaptive speculator system, announced in October 2025, which Together AI says delivers up to 4 times faster inference by learning from live workloads in real time. The system uses a dual-speculator framework that combines a static speculator trained on extensive data with an adaptive speculator that learns continuously from traffic patterns. Together AI reports 500 tokens per second on DeepSeek-V3.1, which it says matches or surpasses specialized inference chips.
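ATLAS's internals are Together AI's own; the sketch below shows only the basic greedy speculative decoding loop that speculator systems build on. A cheap draft model proposes k tokens, the target model checks them, the longest agreeing prefix is kept together with the target's own next token, and the loop repeats. Under greedy decoding the output is identical to running the target alone; the speedup comes from verifying several draft tokens per target pass. The toy integer "models" are hypothetical stand-ins for neural networks.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[int]], int]  # maps a token prefix to its greedy next token

def greedy_decode(target: Model, prompt: List[int], n: int) -> List[int]:
    """Baseline: one target call per generated token."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(target(seq))
    return seq[len(prompt):]

def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, verification rounds).
    In a real engine each round's checks are one batched forward pass of the target."""
    seq = list(prompt)
    rounds = 0
    while len(seq) - len(prompt) < n:
        proposal: List[int] = []
        for _ in range(k):  # the draft proposes k tokens autoregressively
            proposal.append(draft(seq + proposal))
        rounds += 1
        accepted: List[int] = []
        for tok in proposal:  # the target verifies proposals left to right
            expected = target(seq + accepted)
            accepted.append(expected)  # equals tok whenever the draft was right
            if tok != expected:
                break  # first mismatch: keep the target's token, discard the rest
        else:
            accepted.append(target(seq + accepted))  # all k accepted: one bonus token
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n], rounds

if __name__ == "__main__":
    def target(s: List[int]) -> int:
        return (sum(s) * 31 + 7) % 50

    def draft(s: List[int]) -> int:
        # Toy draft: agrees with the target except when the prefix length is a multiple of 5.
        return target(s) if len(s) % 5 else (target(s) + 1) % 50

    tokens, rounds = speculative_decode(target, draft, [1, 2, 3], n=20)
    print(tokens == greedy_decode(target, [1, 2, 3], 20), rounds)
```

An adaptive speculator, as described above, would additionally update the draft model from observed traffic so that acceptance rates, and therefore tokens per round, rise over time.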

Together AI attributes its cost efficiency to techniques such as FP8 quantization, which contributes an 80 percent speedup, and Turbo Spec, which adds another 80 to 90 percent. The company raised 305 million dollars in 2025 amid growing enterprise adoption. Compared to GMI, Together AI focuses more heavily on LLM-specific optimizations and open-source model accessibility than on comprehensive multimodal pipeline integration.

Replicate

Replicate underwent significant transformation in November 2025 when Cloudflare announced acquisition plans. The platform provides a cloud-based machine learning model deployment service with over 50,000 production-ready models and a developer community exceeding 800,000 users.

Replicate uses the open-source Cog tool to package and deploy models, emphasizing rapid prototyping and experimentation. The platform supports diverse model types including LLMs like Llama, image generation models like Stable Diffusion, and various multimodal applications. Pricing follows a pay-per-inference model based on actual usage.

Following the Cloudflare acquisition, Replicate’s catalog is expected to integrate with the Cloudflare Workers AI platform, enabling developers to deploy AI models globally with minimal code. This positions Replicate as a model marketplace and discovery platform rather than a specialized inference optimization service like GMI. While both handle multimodal workloads, Replicate emphasizes breadth of pre-trained model access over infrastructure-level performance optimization.

RunPod

RunPod operates as a specialized AI cloud platform launched in 2022, focusing exclusively on GPU computing for AI workloads. The platform provides on-demand Pods for containerized GPU instances and Serverless GPU Endpoints for rapid scalable deployment across 30-plus global regions.

Key technical advantages include FlashBoot technology enabling near-instant cold starts, direct GPU passthrough minimizing virtualization overhead, and per-second billing for cost optimization. RunPod offers 32 unique GPU models compared to AWS’s 11, providing greater hardware selection flexibility. The platform supports fractional GPU allocation for cost-sensitive workloads that don’t require full GPU resources.
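Per-second billing matters most for short or bursty jobs. The sketch below compares the cost of the same run billed per second and rounded up to whole hours; the 2.00 dollars per GPU-hour rate is a hypothetical figure, not a RunPod price.

```python
import math

def cost_per_second_billing(runtime_s: float, hourly_rate: float) -> float:
    """Bill each started second at hourly_rate / 3600."""
    return math.ceil(runtime_s) * hourly_rate / 3600.0

def cost_per_hour_billing(runtime_s: float, hourly_rate: float) -> float:
    """Bill each started hour in full."""
    return math.ceil(runtime_s / 3600.0) * hourly_rate

if __name__ == "__main__":
    rate = 2.00  # hypothetical dollars per GPU-hour
    for runtime in (90, 1800, 3700):  # seconds
        print(runtime, round(cost_per_second_billing(runtime, rate), 4),
              cost_per_hour_billing(runtime, rate))
```

A 90-second inference burst costs 0.05 dollars under per-second billing but a full 2.00 dollars when rounded up to an hour; for long steady workloads the two schemes converge.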

Published comparisons report that RunPod costs approximately 75 percent less than major cloud providers for equivalent GPU access. For LLM inference specifically, RunPod achieves consistently low latency through isolated containers and purpose-built infrastructure. Compared to GMI, RunPod offers broader GPU variety and more granular billing but focuses less on unified multimodal pipeline orchestration and more on flexible GPU access at competitive prices.

Fireworks AI and Groq

Fireworks AI provides a dedicated inference stack optimized for cost and latency, with direct API access and pre-tuned models to reduce deployment complexity. The platform targets production inference workloads requiring predictable performance.

Groq differentiates through custom Language Processing Unit hardware architecture designed for ultra-low latency inference. The LPU approach delivers exceptional speed for language model inference through specialized silicon rather than general-purpose GPUs. While impressive for text-based workloads, Groq’s specialized hardware focus contrasts with GMI’s GPU-based multimodal approach.

SiliconFlow

SiliconFlow positions itself as a high-performance inference and deployment platform. Benchmarks published in 2025 claim up to 2.3 times faster inference and 32 percent lower latency compared to leading AI cloud platforms, while maintaining accuracy across text, image, and video models. The platform provides unified OpenAI-compatible APIs and fully managed infrastructure with strong privacy guarantees.
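An "OpenAI-compatible" API accepts the same JSON body as OpenAI's Chat Completions endpoint, so clients usually change only the base URL, API key, and model name. The sketch below builds such a body for a text-plus-image prompt using only the standard library. The base URL and model identifier are placeholders, not SiliconFlow's actual values, and the request is prepared but never sent.

```python
import json
import urllib.request

BASE_URL = "https://api.example-provider.com/v1"  # placeholder, not a real provider endpoint
MODEL = "example/vision-model"                     # placeholder model identifier

def build_chat_request(prompt: str, image_url: str, max_tokens: int = 256) -> dict:
    """Chat Completions body with mixed text and image content parts."""
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }

def make_http_request(body: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST; pass it to urllib.request.urlopen() to execute."""
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

if __name__ == "__main__":
    body = build_chat_request("What is in this image?", "https://example.com/cat.png")
    req = make_http_request(body, "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
```

Because the body follows a widely shared schema, switching providers is mostly a matter of changing `BASE_URL` and `MODEL`, though supported content types and model names still differ between services.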

Hyperscaler Cloud Platforms

AWS Amazon Bedrock

Amazon Bedrock has evolved substantially through 2024 and 2025, from a primarily text-focused foundation model service into a multimodal AI platform spanning data extraction, multimodal retrieval, and batch inference.

Amazon Bedrock Data Automation, reaching general availability in early 2025, automates extraction, transformation, and insight generation from documents, images, audio, and videos through a single unified API. This eliminates the previous need to stitch together multiple services and manage complex JSON outputs manually. The system handles document classification, data extraction, validation, and structuring automatically.

For multimodal retrieval, Amazon Bedrock Knowledge Bases now supports Amazon Nova Multimodal Embeddings, enabling semantic search across text and image content. Integration with Knowledge Bases allows extracted insights from unstructured multimodal content to populate vector databases for retrieval-augmented generation applications.
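Multimodal embedding models map text and images into one vector space, so a text query can retrieve an image through nearest-neighbor search. The sketch below shows only that retrieval step, using cosine similarity over hand-made three-dimensional vectors. Real embeddings, such as those from Nova Multimodal Embeddings, have far more dimensions and would live in a vector database; no AWS API is called here.

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbors by cosine similarity, best match first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda p: p[1], reverse=True)[:k]

if __name__ == "__main__":
    # Hypothetical embeddings: items from different modalities share one space.
    index = {
        "image:red_car.jpg": [0.9, 0.1, 0.0],
        "text:invoice_2024.pdf": [0.0, 0.2, 0.95],
        "image:blue_truck.png": [0.7, 0.6, 0.1],
    }
    query_vec = [0.85, 0.2, 0.05]  # pretend embedding of the text "a red car"
    print(top_k(query_vec, index))
```

In a retrieval-augmented generation pipeline, the top-ranked items (here, two images found by a text query) would be passed to the generating model as context.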

Batch inference supports multimodal models, including Anthropic Claude 3.5 Sonnet and the Meta Llama 3.2 family, for image-to-text use cases at 50 percent of on-demand pricing, which addresses cost concerns for bulk processing workloads.
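Bedrock batch jobs read a JSONL file from Amazon S3 in which each line pairs a `recordId` with a `modelInput` body in the model's native request format. The sketch below prepares such lines for Claude image-to-text requests in the Anthropic Messages format and applies the 50 percent batch discount to a cost estimate. The exact field layout, the `anthropic_version` string, and job quotas are assumptions to verify against current AWS documentation; the sketch does not upload files or submit a job.

```python
import base64
import json
from typing import Iterable, List, Tuple

def batch_records(items: Iterable[Tuple[str, bytes]], prompt: str, max_tokens: int = 512) -> List[str]:
    """Return one JSONL line per (record_id, png_bytes) pair."""
    lines = []
    for record_id, png in items:
        model_input = {
            "anthropic_version": "bedrock-2023-05-31",  # assumed version string; verify
            "max_tokens": max_tokens,
            "messages": [{
                "role": "user",
                "content": [
                    {"type": "image", "source": {"type": "base64", "media_type": "image/png",
                                                 "data": base64.b64encode(png).decode("ascii")}},
                    {"type": "text", "text": prompt},
                ],
            }],
        }
        lines.append(json.dumps({"recordId": record_id, "modelInput": model_input}))
    return lines

def batch_cost(on_demand_cost: float, batch_discount: float = 0.5) -> float:
    """Estimated batch cost given the equivalent on-demand cost (50 percent discount)."""
    return on_demand_cost * (1.0 - batch_discount)

if __name__ == "__main__":
    jsonl = "\n".join(batch_records([("img-0001", b"\x89PNG...")], "Describe this image."))
    print(jsonl[:80], batch_cost(1200.0))
```

The trade-off for the discount is latency: batch jobs complete asynchronously, so they suit bulk captioning or document processing rather than interactive requests.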

AWS Bedrock integrates deeply with the broader AWS ecosystem including S3 for storage, Amazon Transcribe for audio, Amazon Comprehend for text analysis, and API Gateway for real-time interactions. This integration strength appeals to organizations already invested in AWS infrastructure but may create migration complexity for those considering alternatives.

Compared to GMI, Bedrock offers greater breadth of services and tighter integration with enterprise AWS deployments but may involve more complexity to configure and optimize for specific inference performance requirements. GMI’s focused approach to GPU-accelerated multimodal inference may provide simpler deployment for teams prioritizing raw inference speed over ecosystem integration.

Google Cloud Vertex AI

Google Cloud Vertex AI provides comprehensive machine learning platform capabilities including training, deployment, and management. The platform supports TPU acceleration through custom Tensor Processing Units optimized for specific deep learning workloads, alongside traditional GPU options.

Vertex AI integrates seamlessly with Google’s data analytics tools including BigQuery for enhanced data processing workflows. The platform’s extensive global infrastructure leverages Google’s network to minimize latency for worldwide deployments. However, costs can escalate for high-throughput inference tasks, and deep integration with Google’s ecosystem may complicate migration to alternative platforms.

For organizations requiring tight integration with Google Cloud services and TPU-specific optimizations, Vertex AI presents strong advantages. GMI Cloud focuses specifically on GPU-based inference optimization rather than the broader ML lifecycle platform approach.

GPU Infrastructure Providers

Lambda Labs

Lambda Labs has repositioned itself as “The Superintelligence Cloud,” operating gigawatt-scale AI factories for training and inference. The platform provides access to cutting-edge NVIDIA GPUs including H100, B200, B300, GB200, and GB300 configurations with Quantum-2 InfiniBand networking.

Lambda offers three deployment models: Private Cloud for reserving tens of thousands of GPUs, 1-Click Clusters for on-demand B200 GPU clusters with InfiniBand, and On-Demand Instances starting at 2.49 dollars per hour for H100 access. The platform targets both AI training at massive scale and cost-effective inference deployment.
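An hourly GPU price becomes a per-token cost only once sustained throughput is known: cost per million tokens = hourly price / (tokens per second × 3,600 × utilization) × 10^6. The sketch below applies this to the 2.49 dollars per hour H100 on-demand rate quoted above; the throughput values are hypothetical placeholders, since real figures depend on the model, batch size, and serving stack.

```python
def cost_per_million_tokens(hourly_price: float, tokens_per_s: float, utilization: float = 1.0) -> float:
    """utilization: fraction of paid time spent serving (idle time still costs money)."""
    tokens_per_hour = tokens_per_s * 3600.0 * utilization
    return hourly_price / tokens_per_hour * 1_000_000

if __name__ == "__main__":
    H100_ON_DEMAND = 2.49  # dollars per hour, starting price quoted in the text
    for tps in (500.0, 2_000.0):  # hypothetical aggregate throughput of one H100
        print(tps,
              round(cost_per_million_tokens(H100_ON_DEMAND, tps), 3),
              round(cost_per_million_tokens(H100_ON_DEMAND, tps, utilization=0.5), 3))
```

The utilization term is why elastic scaling features matter for cost: a GPU that sits idle half the time doubles the effective price of every token it does serve.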

Lambda emphasizes transparent pricing without complex tier systems and specialized AI infrastructure with pre-configured environments for machine learning frameworks. The company serves over 50,000 customers including Intuitive, Writer, Sony, Samsung, and Pika. Professional services include custom AI infrastructure design and enterprise-scale cloud migration consulting.

Compared to GMI, Lambda provides broader GPU hardware options spanning latest-generation chips and focuses on both training and inference workloads at enterprise and research scales. GMI concentrates specifically on optimized inference serving with multimodal pipeline integration.

CoreWeave

CoreWeave operates as the “AI Hyperscaler,” distinguishing itself through purpose-built cloud infrastructure for AI workloads. In May 2025, CoreWeave acquired Weights & Biases, integrating the AI developer platform into its cloud services. This acquisition produced several new capabilities announced in June 2025.

Mission Control Integration enables AI engineers to correlate infrastructure events directly with training runs, simplifying diagnostics for hardware failures, errors, and networking timeouts. W&B Inference, powered by the CoreWeave Cloud Platform, provides simple access to leading open-source models including DeepSeek R1-0528, Llama 4 Scout 17B-16E, and Phi 4 Mini 3.8B. W&B Weave Online Evaluations offers real-time insights into AI agent performance during production operations.

Performance benchmarks illustrate CoreWeave’s optimization focus. In April 2025, using NVIDIA GB200 Grace Blackwell Superchips, CoreWeave reported 800 tokens per second on the Llama 3.1 405B model, which it describes as industry-leading inference performance for models of this scale. On H200 instances, CoreWeave reported 33,000 tokens per second on Llama 2 70B, a 40 percent improvement over H100 instances.
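The H200 result implies a rough H100 baseline, shown below as simple arithmetic on the quoted numbers rather than an independently reported figure. The implied gain is also close to the ratio of the two GPUs' memory bandwidths, which fits (but does not prove) the view that this workload is largely bandwidth bound.

```latex
T_{\mathrm{H100}} \approx \frac{T_{\mathrm{H200}}}{1 + 0.40} = \frac{33{,}000}{1.40} \approx 23{,}571\ \text{tokens/s},
\qquad
\frac{B_{\mathrm{H200}}}{B_{\mathrm{H100}}} = \frac{4.8\ \text{TB/s}}{3.35\ \text{TB/s}} \approx 1.43
```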

CoreWeave emphasizes metal-to-token observability, combining infrastructure monitoring with application-level performance tracking. The company reports a typical node goodput of 96 percent through advanced cluster health management. SOC 2 Type 1 certification and partnerships with HIPAA- and ISO 27001-compliant data centers address enterprise security requirements.

Compared to GMI, CoreWeave provides deeper integration between infrastructure management and model development workflows through the Weights & Biases acquisition. Both platforms offer H100 and H200 access, but CoreWeave has demonstrated record-breaking performance on specific benchmarks and provides more comprehensive ML operations tooling. GMI focuses more narrowly on inference serving optimization with multimodal pipeline integration.

GMI Inference Engine’s Competitive Position

GMI Inference Engine occupies a specialized niche within this competitive landscape. The platform’s primary differentiation centers on unified multimodal pipeline architecture specifically designed for concurrent processing of text, image, video, and audio within a single infrastructure framework.

Against Specialized Inference Platforms: Compared to Together AI’s LLM-focused optimizations or Replicate’s model marketplace approach, GMI emphasizes cohesive multimodal workflows. While Together AI delivers exceptional performance for language models through adaptive speculators, GMI targets applications requiring simultaneous processing of diverse data types without switching between specialized services.

Against Hyperscaler Platforms: AWS Bedrock and Google Vertex AI offer broader AI service ecosystems with extensive integrations. GMI provides more focused infrastructure specifically optimized for inference performance rather than comprehensive ML lifecycle management. Organizations prioritizing raw inference speed and simplified multimodal deployment may find GMI’s specialized approach more efficient than configuring and optimizing broader platforms.

Against GPU Infrastructure Providers: Lambda Labs and CoreWeave provide extensive GPU access with flexibility across training and inference workloads. GMI differentiates through purpose-built inference optimization including automatic cross-region scaling, unified multimodal support, and integrated observability rather than general-purpose GPU rental.

The platform serves organizations with specific requirements: production applications demanding low-latency multimodal inference, teams seeking simplified deployment compared to configuring hyperscaler services, and workloads requiring elastic scaling across geographic regions. GMI’s announced cost reductions of 30 percent and performance improvements through techniques like quantization and speculative decoding position it competitively for inference-specific workloads, though broader ML platforms may suit organizations requiring comprehensive AI development capabilities beyond inference serving.

Final Thoughts

The GMI Inference Engine represents a focused solution for organizations deploying multimodal AI applications requiring optimized inference performance. The platform’s unified pipeline architecture addresses genuine operational complexity when managing diverse data types, while automatic scaling and comprehensive observability features support production deployment requirements.

Reported performance improvements, including up to 1.46 times faster inference and 25 to 49 percent higher throughput through advanced caching and elastic scaling, combined with a reported 30 percent cost reduction, suggest tangible value for inference-heavy workloads. Access to H100 and H200 GPUs provides the memory capacity and bandwidth that demanding models require.

The platform particularly suits organizations operating globally with variable traffic patterns, as the three-layer scheduling architecture and cross-region automatic scaling directly address these challenges. Companies building real-time multimodal applications such as conversational AI with voice and video, content analysis systems, or interactive creative tools may find the specialized infrastructure advantageous compared to assembling equivalent capabilities across multiple services.

However, prospective users should carefully evaluate fit against specific requirements. Organizations with primarily text-based workloads may find more optimized solutions in LLM-focused platforms like Together AI. Those requiring comprehensive ML lifecycle management beyond inference might benefit from broader platforms like AWS Bedrock or Google Vertex AI despite greater configuration complexity. Teams seeking maximum hardware flexibility and extensive GPU options could consider providers like Lambda Labs or CoreWeave.

The relatively recent launch of Inference Engine 2.0 in November 2025 means long-term operational track records and extensive community resources remain limited compared to established platforms. Organizations should conduct pilot testing to validate claimed performance improvements against their specific workload patterns and verify cost-effectiveness for their usage profiles.

For teams confronting the genuine complexity of deploying production multimodal AI applications and seeking infrastructure purpose-built for this use case, GMI Inference Engine merits serious evaluation. The platform delivers meaningful capabilities for a specific problem domain rather than attempting to serve all possible AI workload types, representing a legitimate specialized option within the increasingly diverse AI infrastructure landscape.