Table of Contents

- Overview

- Key Features

- How It Works

- Use Cases

- Pros & Cons

- How Does It Compare?

- Lyria Camera vs Suno AI (Leading Text-to-Music Generator)

- Lyria Camera vs Endlesss (Collaborative Multiplayer Music Creation)

- Lyria Camera vs RJDJ Reactive Music (Pioneer Reactive iOS App)

- Lyria Camera vs RipX Reactive 3D Music (AR Spatial Audio App)

- Lyria Camera vs Udio (Text-to-Music with Audio Extension)

- Final Thoughts

Overview

Lyria Camera is an experimental AI-powered application from Google DeepMind and Magenta (Google’s AI music research project), announced in December 2024. It turns a smartphone camera into a real-time musical instrument that generates a continuously evolving soundtrack matching what the camera sees. Gemini’s multimodal image understanding analyzes the camera feed, detecting scenes, objects, colors, lighting, and motion. It translates that visual information into musical descriptors such as “reflective piano, cityscape calm” or “energetic drums, nature vibrant”. The Lyria RealTime API then converts those descriptors into adaptive instrumental music streamed in real time, turning the physical world into an interactive musical performance space.

Lyria Camera is released as a free experimental web application that runs in mobile and desktop browsers. It demonstrates how vision and audio-generation models can cooperate within a single creative workflow. Conventional music generators require manual text prompts, but Lyria Camera works autonomously: the camera’s view is the interface, and the only user action needed is pointing the camera at the surroundings. The audio comes from Lyria 2, Google DeepMind’s latest AI music model, which generates high-fidelity 48kHz stereo audio across diverse genres and instrumentation. Lyria Camera accesses the model through Lyria RealTime, a variant optimized for continuous, low-latency streaming, which real-time camera interaction requires.

Because Lyria Camera is available through Google AI Studio, developers can remix and build on the underlying technology. The application targets several groups:

- creative professionals exploring generative art;
- content creators seeking synchronized audiovisual material for vlogs or social media;
- experimental musicians interested in environmental sound;
- accessibility researchers developing mood-enhancing assistive technologies;
- AR/VR developers prototyping experiences in which physical environments trigger adaptive soundscapes.

It is positioned as a creative exploration tool and technology demonstration rather than a production-ready commercial product, showcasing possible directions for multimodal AI in art and entertainment.

Key Features

Real-Time Visual-to-Musical Translation: This is the core capability. Gemini’s multimodal vision understanding continuously analyzes the live camera stream and extracts visual features:

- scene composition (indoor/outdoor, urban/nature, crowded/sparse);
- dominant colors and lighting (warm/cool, bright/dim, saturated/muted);
- detected objects and subjects (people, vehicles, architecture, natural elements);
- motion characteristics (static/dynamic, fast/slow, smooth/erratic);
- spatial relationships.

These descriptors are automatically converted into musical parameters and text prompts for the Lyria RealTime API, so no manual prompt writing or parameter adjustment is needed. The translation runs in the background. Users can focus on visual exploration while the system handles the vision-to-audio mapping and keeps the music coherent as the environment changes.

Gemini Multimodal Image Understanding: Gemini 3’s vision capabilities process video frames for semantic meaning that goes beyond simple object detection:

- contextual scene interpretation (bustling marketplace versus serene library);
- emotional tone and atmosphere (energetic versus contemplative);
- aesthetic qualities (industrial versus organic, minimal versus complex);
- temporal dynamics tracked across frames.

Gemini’s multimodal reasoning produces rich text descriptions that bridge the visual and linguistic domains, yielding musical instructions that Lyria can interpret. The model also picks up subtle visual nuances such as architectural style, weather conditions, and crowd energy. As a result, the music can match an environment’s character rather than follow generic mappings.

Lyria RealTime API for Continuous Music Streaming: Audio is generated by Lyria RealTime, a variant of the Lyria 2 model optimized for real-time performance. It communicates over a WebSocket connection, a persistent channel that allows continuous bidirectional interaction. Offline music generators plan a complete song structure (intro, verse, chorus, outro). Lyria RealTime instead uses chunk-based autoregression, generating audio in 2-second segments. For each segment, it looks back at roughly the last 10 seconds of audio to maintain rhythmic groove and melodic continuity. It also reads the current prompts and controls to decide how the style should adapt. This streaming architecture keeps perceived latency low, so the music follows the camera smoothly without jarring discontinuities or generation delays.
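The chunk-based scheme described above can be illustrated with a toy loop. This is a minimal sketch, not Lyria’s model. `generate_chunk` is a hypothetical stand-in that records the prompt instead of producing audio. The sketch only shows how a rolling window of about 10 seconds (five 2-second chunks) of past output combines with the current prompt at each step.

```python
from collections import deque
from dataclasses import dataclass

CHUNK_SECONDS = 2      # audio produced per step, per the description above
CONTEXT_SECONDS = 10   # approximate look-back window
CONTEXT_CHUNKS = CONTEXT_SECONDS // CHUNK_SECONDS  # 5 previous chunks


@dataclass
class Chunk:
    index: int
    prompt: str


def generate_chunk(index: int, context: list[Chunk], prompt: str) -> Chunk:
    """Hypothetical stand-in for the model. A real system would condition on
    the audio held in `context` plus the current prompt and return new audio."""
    return Chunk(index=index, prompt=prompt)


def stream(prompts_per_step: list[str]) -> list[tuple[int, list[int]]]:
    """Run one generation step per prompt and record which earlier chunks
    were visible as context at each step."""
    context: deque[Chunk] = deque(maxlen=CONTEXT_CHUNKS)  # oldest chunk drops off
    trace = []
    for i, prompt in enumerate(prompts_per_step):
        chunk = generate_chunk(i, list(context), prompt)
        trace.append((i, [c.index for c in context]))
        context.append(chunk)
    return trace


if __name__ == "__main__":
    steps = ["reflective piano, cityscape calm"] * 4 + ["energetic drums, nature vibrant"] * 3
    for step, visible in stream(steps):
        print(f"chunk {step}: conditioned on chunks {visible}")
```

The bounded `deque` is what keeps memory constant during an endless stream: each new chunk sees only the most recent window, never the whole history.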

Adaptive Soundtrack Matching Mood and Movement: The music responds to environmental changes and camera movement, with tempo, instrumentation, harmonic complexity, and mood evolving with the visual input. For example:

- pointing the camera at a calm nature scene might produce ambient piano with sustained chords at a slow tempo;
- panning to a busy street might trigger uptempo rhythms with brighter instrumentation and greater density;
- moving indoors might change the reverb and spatial character to reflect the acoustic setting.

Adaptation happens through gradual transitions rather than abrupt changes. The music stays coherent while remaining responsive, so the soundtrack feels composed for the moment rather than playing independently of the visuals.
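One way to get gradual rather than abrupt adaptation is to smooth a visual signal before it drives a musical parameter. The sketch below assumes a hypothetical normalized `motion` score per analyzed frame (0 = static, 1 = very fast) and maps it to a target tempo. It then applies an exponential moving average, so a sudden pan nudges the tempo up over several updates instead of jumping. The BPM range and smoothing factor are illustrative choices, not values taken from Lyria Camera.

```python
def motion_to_bpm(motion: float, min_bpm: float = 70.0, max_bpm: float = 140.0) -> float:
    """Linearly map a motion score in [0, 1] to a target tempo (illustrative range)."""
    motion = min(max(motion, 0.0), 1.0)  # clamp out-of-range scores
    return min_bpm + motion * (max_bpm - min_bpm)


def smooth_tempo(motions: list[float], alpha: float = 0.3, start_bpm: float = 70.0) -> list[float]:
    """Exponential moving average: each update moves `alpha` of the way
    from the current tempo toward the target tempo for that frame."""
    bpm = start_bpm
    history = []
    for m in motions:
        target = motion_to_bpm(m)
        bpm = bpm + alpha * (target - bpm)
        history.append(round(bpm, 1))
    return history


if __name__ == "__main__":
    # A calm scene, then a sudden pan to a busy street.
    print(smooth_tempo([0.0, 0.0, 1.0, 1.0, 1.0, 1.0]))
```

A smaller `alpha` gives smoother but slower reactions; a larger one tracks the camera more tightly at the cost of more abrupt tempo changes.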

Multi-Genre Instrumental Generation: Because Lyria 2 was trained on diverse musical styles, it can produce genre-appropriate music for each environment:

- classical orchestration for museums or historic architecture;
- electronic ambient for modern minimalist spaces;
- acoustic folk for natural outdoor settings;
- jazz for urban cafes or nightlife;
- experimental soundscapes for abstract or unusual visuals.

Genre selection follows automatically from the prompts Gemini generates, so users never specify a genre themselves. All output is instrumental. The music serves as atmospheric support, and lyrics would distract from, or clash with, arbitrary visual content.

Immersive Audiovisual Feedback Loop: Vision shapes the audio, and the audio in turn colors how the scene is perceived, producing a synesthetic, multisensory experience. A responsive soundtrack can draw attention to environmental details: color patterns that produce harmonic changes, movement rhythms reflected in tempo, and lighting shifts that make the music brighter or darker. This loop turns passive camera use into an active performance. Deliberate camera movements such as panning, zooming, and tilting become musical gestures, letting users shape the soundtrack through framing choices.

Browser-Based Accessibility Without Installation: Lyria Camera runs as a progressive web application in mobile and desktop browsers. It needs no app store download, installation, or platform-specific build. Users navigate to the URL, grant camera permission, and immediately begin generating music. This web-first approach removes friction, which matters for experimental demos where installation barriers discourage people from trying them. It works on iOS, Android, Windows, macOS, and ChromeOS devices whose modern browsers support the web APIs needed for camera access and audio playback.

Developer Access Through Google AI Studio: Developers can reach the underlying technology through Google AI Studio’s documentation and API access and build custom applications that combine Lyria RealTime with Gemini vision. They can remix Lyria Camera’s approach in several ways: different musical styles, alternative visual-analysis parameters, customized prompt-generation logic, or integration into larger applications that need real-time adaptive music. This open API approach encourages experimentation and derivative works, extending exploration of multimodal AI beyond Google’s own demos.

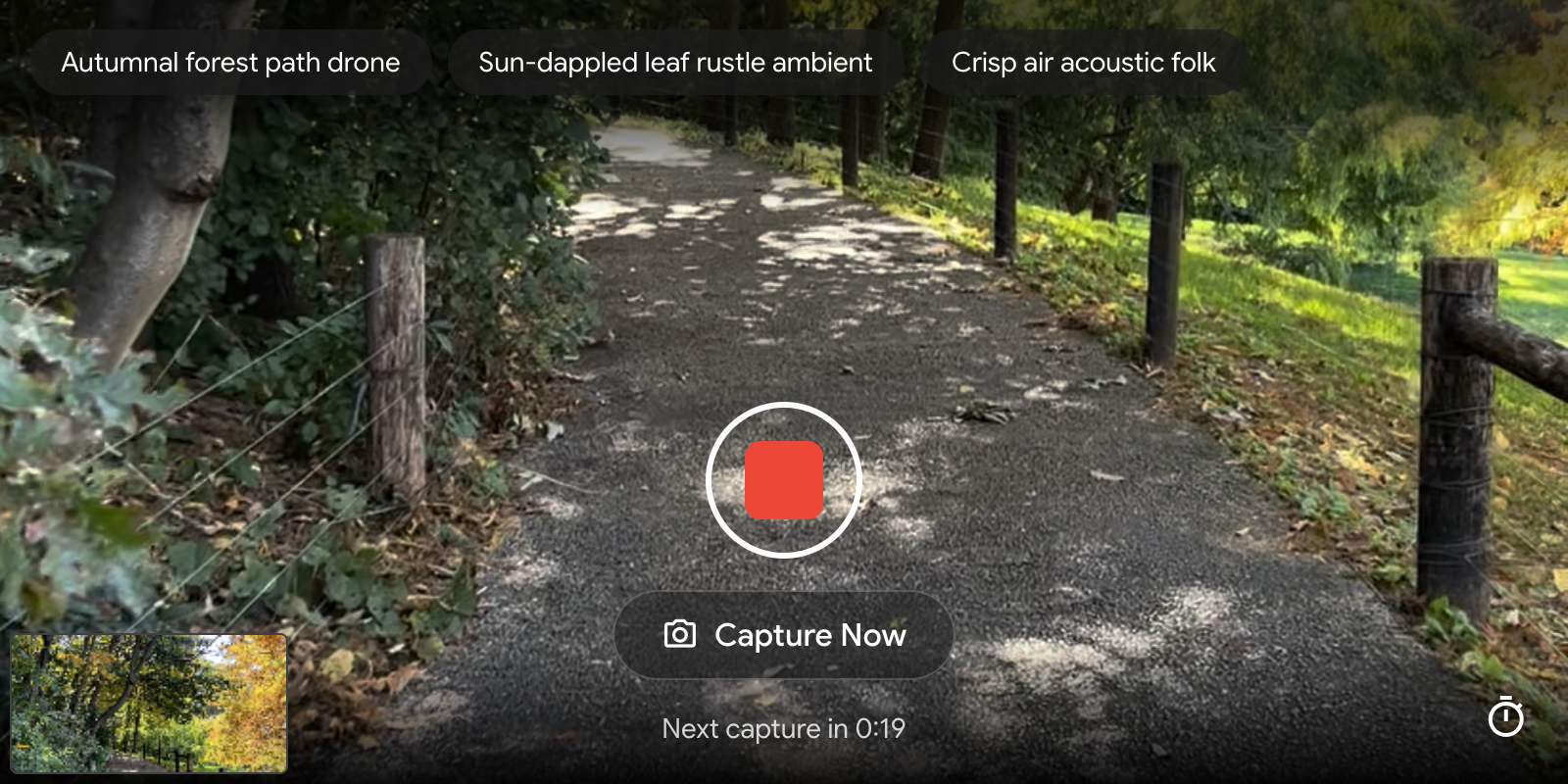

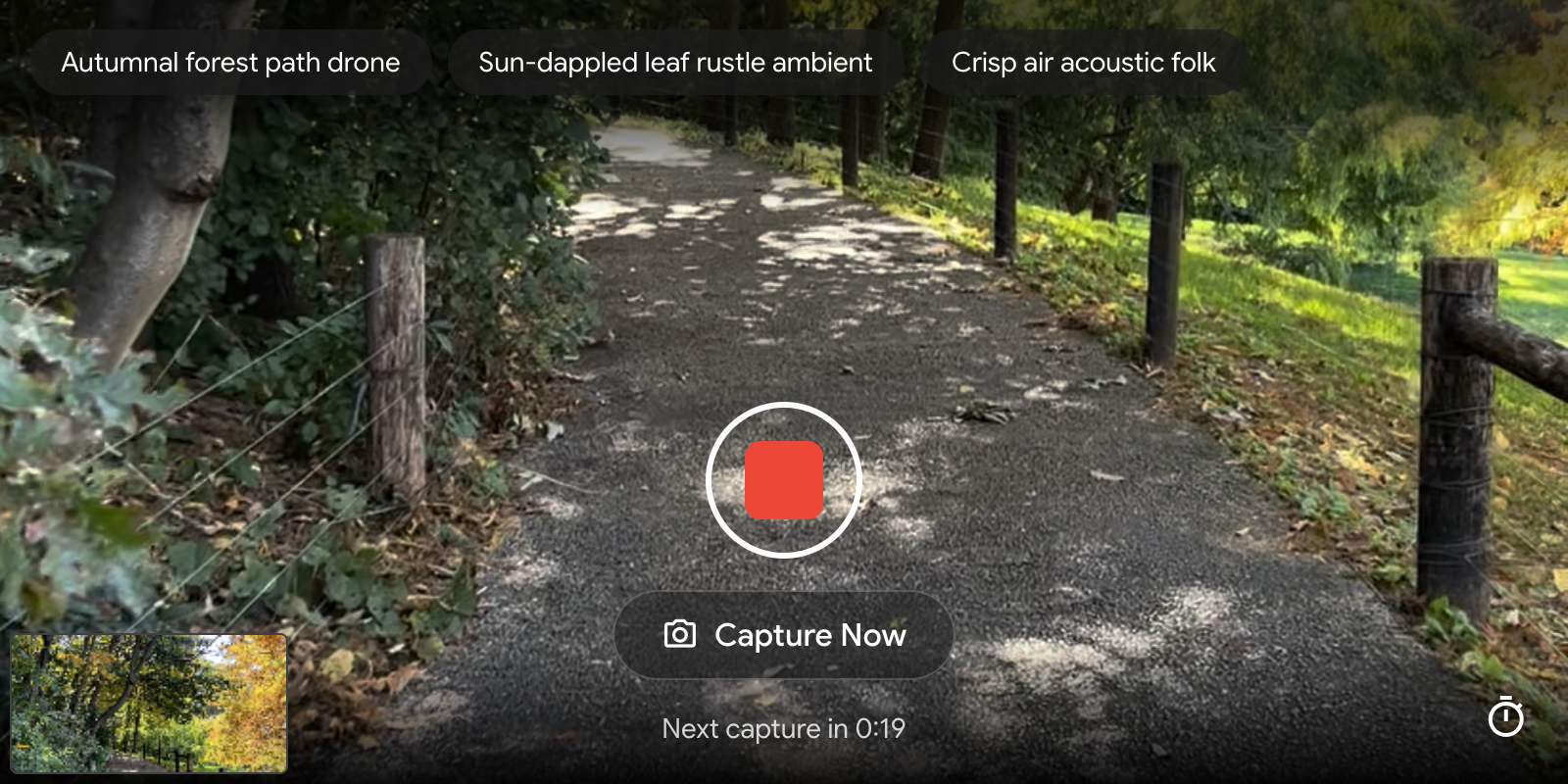

Minimal User Interface Focusing on Experience: The interface is deliberately simple, with no complex controls, parameter sliders, or configuration options, so attention stays on the environment and the resulting music. The screen shows the camera feed and can optionally overlay the generated musical prompts for transparency about the AI’s decisions. Otherwise the view of the surroundings is unobstructed. This minimalist design favors immediate experience over technical control. It fits the app’s experimental, artistic positioning; it is not meant as a professional music production tool.

SynthID Watermarking for AI-Generated Content: Audio output carries an imperceptible digital watermark from Google’s SynthID technology. The watermark marks the content as AI-generated while remaining inaudible to listeners. This responsible-AI practice supports transparency and attribution as AI-generated audio becomes more realistic and widespread, making it harder to tell apart from human-created content. The watermark is embedded in the frequency domain and is designed to survive common transformations such as compression and format conversion, so it remains detectable across distribution channels.

How It Works

Lyria Camera integrates computer vision, natural language generation, and real-time audio synthesis in the following pipeline:

Step 1: Camera Feed Acquisition and Processing

The application requests camera permission from the browser and then opens a video stream from the device’s camera. The rear-facing camera is preferred for capturing the environment, though the front-facing camera is supported. Frames flow continuously into the processing pipeline, typically at 15-30 frames per second. Depending on computational requirements, frames may be analyzed at a different rate than they are captured. Each frame is resized and preprocessed to meet Gemini’s input requirements, preserving aspect ratio while balancing visual detail against the processing speed that real-time operation requires.
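The gap between capture rate and analysis rate, and the aspect-preserving resize, can be sketched as follows. The analysis rate and the 768-pixel longest side are hypothetical values chosen for illustration. The text above does not state Lyria Camera’s actual analysis rate or Gemini input size.

```python
import math


def frames_to_analyze(capture_fps: float, analysis_hz: float, duration_s: float) -> list[int]:
    """Indices of captured frames to send for analysis when analysis runs
    slower than capture: keep every k-th frame, where k = capture/analysis."""
    step = max(1, round(capture_fps / analysis_hz))
    total = int(capture_fps * duration_s)
    return list(range(0, total, step))


def fit_within(width: int, height: int, max_side: int = 768) -> tuple[int, int]:
    """Scale (width, height) so the longer side is at most max_side,
    preserving aspect ratio and never upscaling small frames."""
    scale = min(1.0, max_side / max(width, height))
    return max(1, math.floor(width * scale)), max(1, math.floor(height * scale))


if __name__ == "__main__":
    print(frames_to_analyze(capture_fps=30, analysis_hz=1, duration_s=3))
    print(fit_within(1536, 1024))
```

Decoupling the two rates lets the camera preview stay smooth at full frame rate while only a small fraction of frames incur the cost of a vision-model call.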

Step 2: Gemini Visual Analysis and Scene Understanding

Processed frames are sent to the Gemini 3 model, which extracts high-level semantic meaning:

- scene classification (urban/rural, indoor/outdoor, natural/artificial);
- primary objects and subjects (people, buildings, vehicles, flora);
- environmental conditions (weather, lighting, and time of day inferred from visual cues);
- aesthetic qualities (color palette, composition, visual rhythm);
- motion characteristics (camera speed, direction, and stability, plus subject motion).

Rather than simply listing detected objects, Gemini synthesizes a holistic description of the scene’s context, atmosphere, and character. The result resembles how a person might describe a place aloud, combining subjective impressions with objective observations.
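Asking the vision model for a structured answer makes the next step easier to control. The sketch below assumes a hypothetical JSON schema (`setting`, `objects`, `lighting`, `motion`, `mood`) that the vision model is instructed to return. It only parses and validates such a reply; it does not call any Gemini API, and the schema is not Lyria Camera’s.

```python
import json
from dataclasses import dataclass, field


@dataclass
class SceneDescription:
    setting: str                                 # e.g. "urban outdoor"
    objects: list[str] = field(default_factory=list)
    lighting: str = "unknown"                    # e.g. "warm dusk"
    motion: str = "static"                       # camera/subject motion summary
    mood: str = "neutral"                        # holistic impression


REQUIRED_FIELDS = ("setting",)


def parse_scene(reply: str) -> SceneDescription:
    """Parse a JSON reply from the vision model into a SceneDescription.
    Raises ValueError on malformed or incomplete replies, so the caller can
    keep using the previous description instead of interrupting the music."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in REQUIRED_FIELDS if k not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return SceneDescription(
        setting=str(data["setting"]),
        objects=[str(o) for o in data.get("objects", [])],
        lighting=str(data.get("lighting", "unknown")),
        motion=str(data.get("motion", "static")),
        mood=str(data.get("mood", "neutral")),
    )
```

Falling back to the last valid description on a bad reply is one way to keep a live stream stable when a single analysis call fails.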

Step 3: Musical Descriptor Generation via Gemini

From this visual understanding, Gemini generates text descriptors that serve as prompts for Lyria RealTime. Each descriptor combines four elements:

- instruments (piano, strings, drums, synthesizer);
- mood or character adjectives (reflective, energetic, melancholic, vibrant);
- genre or style indicators (ambient, jazz, electronic, classical);
- environmental context (cityscape, nature, interior, motion).

Example outputs might be “reflective piano, cityscape calm, slow tempo” for a quiet urban scene, “energetic drums, nature vibrant, fast-paced” for a forest with movement, or “ethereal synthesizer, abstract ambient, spacious” for a minimalist modern interior. The prompts aim to be specific enough to give clear musical direction while leaving Lyria room for creative interpretation, since overly prescriptive instructions would limit musical variety.
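Once the pieces are chosen, a descriptor of this form (instrument and mood, context, optional hint) can be assembled mechanically. This is a minimal sketch built around a hypothetical `MusicalDescriptor` structure. The comma-separated output mirrors the example prompts in the text, but the field names and ordering are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class MusicalDescriptor:
    instrument: str     # e.g. "piano", "drums", "synthesizer"
    mood: str           # e.g. "reflective", "energetic"
    context: str        # e.g. "cityscape", "nature"
    character: str = "" # optional adjective attached to the context, e.g. "calm"
    extra: str = ""     # optional tempo or texture hint, e.g. "slow tempo", "spacious"

    def to_prompt(self) -> str:
        """Render as a short comma-separated text prompt, skipping empty parts."""
        parts = [
            f"{self.mood} {self.instrument}",
            f"{self.context} {self.character}".strip(),
            self.extra,
        ]
        return ", ".join(p for p in parts if p)


if __name__ == "__main__":
    print(MusicalDescriptor("piano", "reflective", "cityscape", "calm", "slow tempo").to_prompt())
```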

Step 4: Lyria RealTime Music Generation

The musical descriptors are sent over the WebSocket connection to the Lyria RealTime API, which generates audio chunks with the described characteristics. The model operates autoregressively. It predicts the next audio segment from recent audio history, to maintain rhythmic and harmonic continuity, and from the current prompts, to adapt to new environmental information. Generation proceeds in 2-second chunks with a context window of approximately 10 seconds of recent audio. This lets the model sustain a consistent groove, melodic development, and harmonic progression while transitioning smoothly as prompts update. Audio is generated as 48kHz stereo, a professional-grade format suited to playback through smartphone speakers or headphones.
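The stated format makes the raw data rate easy to estimate. The calculation assumes uncompressed 16-bit PCM samples; this is an assumption, since the text above specifies only 48kHz stereo in 2-second chunks.

```latex
\begin{aligned}
\text{bytes per chunk} &= 48{,}000\ \tfrac{\text{samples}}{\text{s}} \times 2\ \text{channels} \times 2\ \tfrac{\text{bytes}}{\text{sample}} \times 2\ \text{s} = 384{,}000\ \text{bytes} \\
\text{stream bitrate} &= \frac{384{,}000 \times 8\ \text{bits}}{2\ \text{s}} = 1{,}536{,}000\ \text{bit/s} \approx 1.5\ \text{Mbit/s}
\end{aligned}
```

Under that assumption, the downstream audio alone needs roughly 1.5 Mbit/s before any compression or protocol overhead, on top of the upstream camera frames.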

Step 5: Smooth Transitions and Musical Coherence

As the camera moves and Gemini issues updated descriptors, Lyria RealTime transitions between musical states gradually rather than switching styles abruptly. The model supports gradual morphing: instrumentation, tempo, harmonic complexity, and mood shift progressively across several 2-second chunks, so the music parallels the physical movement through space. Transitions prioritize coherence and avoid nonsensical jumps such as sudden genre shifts, rhythmic discontinuities, or harmonic clashes. They still allow more dramatic shifts when the environment changes significantly. This balance between continuity and responsiveness distinguishes real-time adaptive music from a concatenation of unrelated musical fragments.
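Google’s Lyria RealTime documentation describes steering the stream with weighted text prompts. A client could approximate gradual morphing by crossfading those weights over several chunks. The linear schedule below is a hypothetical illustration of that idea, not Lyria Camera’s actual transition logic. It only computes the weights and makes no API calls.

```python
def crossfade_weights(old: str, new: str, steps: int) -> list[dict[str, float]]:
    """Return one {prompt: weight} mapping per chunk, moving linearly from
    the old prompt to the new one over `steps` chunks. Weights sum to 1."""
    if steps < 1:
        raise ValueError("steps must be >= 1")
    if old == new:
        return [{new: 1.0} for _ in range(steps)]  # nothing to blend
    schedule = []
    for i in range(1, steps + 1):
        w_new = i / steps
        weights = {old: round(1.0 - w_new, 3), new: round(w_new, 3)}
        # Drop a prompt once its weight reaches zero.
        schedule.append({p: w for p, w in weights.items() if w > 0})
    return schedule


if __name__ == "__main__":
    for chunk, weights in enumerate(crossfade_weights(
            "reflective piano, cityscape calm", "energetic drums, nature vibrant", 4)):
        print(chunk, weights)
```

With 2-second chunks, four steps spread the change over about 8 seconds. A large, sudden scene change could use fewer steps to respond more dramatically.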

Step 6: Audio Streaming and Playback

Generated audio chunks stream back over the WebSocket connection to the Lyria Camera application, which buffers them and plays them through the device speakers or connected headphones. Buffer management balances two goals. Low latency minimizes the delay between camera movement and musical response. Continuity prevents the clicks, pops, or silent gaps caused by buffer underruns. Offline music AI typically produces fixed-length output, but this stream has no set duration. The soundtrack continues for as long as the application is open and the camera is active, creating a truly endless generative music experience.
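The latency/continuity trade-off can be sketched with a simple jitter buffer. Playback waits until a target number of chunks is queued, and an underrun is counted whenever the queue runs dry. `PlaybackBuffer` and `target_chunks` are hypothetical names for illustration. A larger target survives network hiccups better but delays the musical response to camera movement.

```python
from collections import deque
from typing import Optional


class PlaybackBuffer:
    """Minimal jitter buffer for fixed-length audio chunks."""

    def __init__(self, target_chunks: int = 2):
        self.queue: deque[bytes] = deque()
        self.target_chunks = target_chunks
        self.playing = False
        self.underruns = 0

    def push(self, chunk: bytes) -> None:
        """Called when a chunk arrives from the network."""
        self.queue.append(chunk)
        if not self.playing and len(self.queue) >= self.target_chunks:
            self.playing = True  # enough audio buffered to start or resume

    def pull(self) -> Optional[bytes]:
        """Called by the audio output whenever it needs the next chunk.
        Returns None (play silence) while buffering or after an underrun."""
        if not self.playing:
            return None
        if not self.queue:
            self.underruns += 1
            self.playing = False  # rebuffer up to target before resuming
            return None
        return self.queue.popleft()
```

Pausing to rebuffer after an underrun trades one audible gap for a stable stream afterwards, instead of stuttering chunk by chunk.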

Step 7: Optional Prompt Display and Transparency

The application can optionally display the generated musical prompts on screen, showing users how visual information is translated into musical instructions. This helps users understand the relationship between what the camera sees and the resulting music. It also lets them use the camera more intentionally if they want to explore how specific visual patterns influence the outcome. This transparency aligns with responsible AI principles: users can see how the system operates instead of treating it as a magic black box.

Step 8: Continuous Loop and Interaction

The process repeats continuously while the application is active, forming a persistent audiovisual feedback loop. Users participate by choosing:

- where to point the camera;
- how quickly to move through the environment;
- how to frame and compose each view;
- how long to linger on each scene.

These choices act as performative gestures that shape the music. Even though users never control musical parameters directly, they become active co-creators rather than passive consumers. The interaction model encourages exploration, experimentation, and play, favoring artistic and creative use over utilitarian, task-oriented use.
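Taken together, the steps above can be sketched as two concurrent tasks. One task periodically turns the latest frame into a prompt; the other keeps consuming audio using whatever prompt is current. Everything here is a hypothetical stub (`describe_frame`, the prompts, the timings). It illustrates the control flow only and is neither Google’s implementation nor its API.

```python
import asyncio


async def describe_frame(frame_id: int) -> str:
    """Stub for vision analysis plus descriptor generation (Steps 2-3)."""
    await asyncio.sleep(0)  # stands in for a network round trip
    if frame_id < 2:
        return "reflective piano, cityscape calm"
    return "energetic drums, nature vibrant"


async def analysis_loop(state: dict, n_frames: int, interval_s: float) -> None:
    """Periodically refresh the shared prompt from the camera (Steps 1-3)."""
    for frame_id in range(n_frames):
        state["prompt"] = await describe_frame(frame_id)
        await asyncio.sleep(interval_s)


async def audio_loop(state: dict, n_chunks: int, chunk_s: float, played: list) -> None:
    """Produce one chunk at a time with the current prompt (Steps 4-6).
    A real client would stream and play audio here; we just record the prompt."""
    for _ in range(n_chunks):
        played.append(state["prompt"])
        await asyncio.sleep(chunk_s)


async def run(n_frames: int = 4, n_chunks: int = 6) -> list:
    state = {"prompt": "ambient pads, neutral"}  # used until the first analysis lands
    played: list = []
    await asyncio.gather(
        analysis_loop(state, n_frames, interval_s=0.02),
        audio_loop(state, n_chunks, chunk_s=0.01, played=played),
    )
    return played


if __name__ == "__main__":
    print(asyncio.run(run()))
```

Keeping analysis and playback in separate tasks means a slow vision call never stalls the audio. The music simply continues with the previous prompt until a new one arrives.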

Use Cases

Given its specialization in real-time, camera-responsive music generation, Lyria Camera fits scenarios where synchronized audiovisual experiences or environmental music creation add value:

Generative Art and Creative Installations:

Artists and creative technologists can use Lyria Camera in interactive installations where visitors’ movements and interactions generate unique soundscapes, turning exhibition spaces into participatory musical environments. Projected on large screens with accompanying audio, the application creates installations in which physical exploration directly shapes the sound. Artists need not compose music manually, yet they retain artistic control through environmental staging, lighting design, and visitor interaction patterns. Installations could incorporate multiple cameras to generate polyphonic soundscapes from different spatial perspectives. They could also stage collaborative performances in which several visitors’ camera positions together influence a single composition.

Content Creation for Vlogs and Social Media:

Video creators, vloggers, and social media influencers can use Lyria Camera to generate unique soundtracks as an alternative to licensed music libraries or generic royalty-free tracks. Tight synchronization between visuals and audio produces a cohesive aesthetic in which the soundtrack feels composed for the footage rather than added as generic background music. Creators documenting travel, urban exploration, nature, or lifestyle content while walking through locations benefit from music that follows environmental transitions, energy shifts, and visual pacing. The audio is AI-generated and SynthID-watermarked, which avoids traditional music-licensing complications for monetization and distribution. Platform policies may still require disclosing AI-generated audio.

Experimental Music Performance and Composition:

Forward-thinking musicians and composers can incorporate Lyria Camera into live performances, using the camera as an unconventional instrument that generates music through visual improvisation. A performance might take several forms:

- projecting the camera feed on stage while walking through prepared visual environments;
- interacting with props, artwork, or lighting to trigger musical changes;
- pairing musicians on traditional instruments with an AI soundtrack that responds to a visual conductor’s gestures or to environmental staging.

The approach opens up experimental practices that explore the relationships between vision, movement, and sound. It also challenges traditional notions of musical authorship, composition, and performance.

Mood Enhancement and Accessibility Applications:

Researchers and developers working on accessibility technologies may investigate Lyria Camera’s potential for mood enhancement, emotional regulation, or assistive applications. People with visual processing differences might experience environments more fully through an added auditory dimension that highlights visual features. Therapeutic applications might pair carefully designed visual environments with calming or stimulating soundscapes to support emotional regulation, stress reduction, or focus. The technology could also help people with synesthesia, who experience cross-modal perception naturally, by creating visual-auditory associations that others can experience and understand.

Augmented and Virtual Reality Soundscape Design:

AR/VR developers can prototype immersive experiences in which virtual or augmented visual elements generate adaptive soundtracks that enhance presence and immersion. Instead of pre-composing fixed scores for VR environments, Lyria Camera’s approach enables procedural soundtracks that respond to viewing behavior, interaction patterns, and changes within the virtual space. Real-time responsiveness is especially valuable in open-world VR, where unpredictable user paths make fixed linear soundtracks inadequate. Developers then must choose between simplistic ambient loops and complex interactive music systems, and Lyria RealTime could simplify the latter through AI-driven adaptation.

Urban Soundwalking and Environmental Awareness:

Urban explorers, psychogeographers, and environmental-awareness practitioners can use Lyria Camera for soundwalks. In these walks, city exploration gains a musical dimension that highlights visual patterns, architectural rhythms, social dynamics, and environmental changes. The practice turns routine commutes or neighborhood walks into creative experiences that foster mindfulness and present-moment awareness through heightened sensory engagement. In education, Lyria Camera could help teach urban design, environmental perception, or multimodal awareness. By making visual patterns audible, it can reveal spatial relationships, movement flows, or aesthetic qualities that purely visual observation overlooks.

Creative Exploration and Play:

Users without specific creative goals can enjoy Lyria Camera purely for delight and play, discovering how different environments, objects, movements, and compositions produce different musical responses. Its low-stakes, experimental nature encourages creative risk-taking without requiring musical knowledge, technical skill, or artistic training. Users can share their experiences on social media, compare how different locations sound, and discover surprising correlations between visual and musical patterns. They can also simply enjoy everyday activities with an added musical layer.

Pros & Cons

Advantages

Highly Immersive and Creatively Inspiring Experience: The tight integration of vision and audio creates a uniquely engaging multisensory experience in which music transforms familiar environments. Experiencing the world through a combined visual-auditory lens can heighten awareness, encourage mindfulness, and spark creative inspiration. The result goes beyond typical app engagement: it is a memorable artistic encounter that invites continued exploration and sharing.

State-of-the-Art AI Technology Demonstration: Lyria Camera showcases Google’s Gemini vision model and Lyria music generation working together. It is a practical multimodal application rather than an isolated single-modality demo. It shows that a real-time vision-to-audio pipeline can achieve latency, quality, and coherence sufficient for interactive use, opening the way for future commercial applications built on the same technology.

Transforms Everyday Scenes Into Art: Layering an AI-generated soundtrack over routine activities such as commuting, walking, or waiting turns them into creative experiences. This democratizes artistic experience: anyone with a smartphone can create personalized generative art without specialized skills, equipment, or training. The artistic value comes from the AI’s interpretation and the user’s camera work rather than from technical musical ability, lowering the barrier to creative expression.

No Musical Knowledge Required: With no musical interface, parameters, or terminology, the app is accessible regardless of musical training or technical background. Users focus entirely on visual exploration while the AI handles the complexity of musical translation. Traditional music creation tools require an understanding of musical concepts (tempo, key, instrumentation) or production techniques (mixing, effects, arrangement). Lyria Camera, by contrast, offers a gentler entry point into creative technology.

Browser-Based Instant Access: The web application model eliminates installation, storage requirements, platform restrictions, and update management, allowing instant experimentation from any device with a modern browser. This matters for experimental applications, where asking users to install a dedicated app discourages casual trial. Cross-platform browser support ensures broad device compatibility and maximizes the potential user base.

Developer-Friendly API Access: Documentation and API access through Google AI Studio encourage developers to experiment, remix, and build derivative applications on the core concept. This openness accelerates innovation beyond Google’s own implementations. It enables community-driven exploration of use cases Google did not anticipate and fosters an ecosystem around multimodal music generation.

Responsible AI with SynthID Watermarking: Embedding imperceptible watermarks that identify AI-generated audio addresses growing concerns about distinguishing synthetic media from human-created content. This deployment reflects Google’s stated commitment to AI transparency and attribution. It also sets a precedent for generative audio applications as the technology proliferates.

Disadvantages

Niche Creative Use Case with Limited Practical Utility: Although artistically compelling and technologically impressive, Lyria Camera lacks a clear practical application beyond creative exploration, entertainment, and demonstration. Users may try it once or twice, enjoy the novelty, and then abandon it because it does not fit into daily workflows or solve a concrete problem. Its experimental positioning limits adoption compared with applications that address specific pain points or offer a tangible value proposition.

Requires Capable Hardware and Stable Connectivity: Real-time processing through cloud-based Gemini and Lyria APIs demands a reliable, high-bandwidth, low-latency internet connection. On devices with slow processors, limited memory, or poor connectivity, users may experience stuttering audio, delayed responses, or outright failures, which make the experience frustrating rather than immersive. These requirements exclude some users entirely: those in bandwidth-constrained environments, in regions with limited connectivity, or without reliable internet (remote outdoor locations, international travel without data). This limits where the technology is usable.

Limited User Control Over Musical Output: The design deliberately omits musical parameters and controls. Users therefore cannot choose genres, adjust tempo, select instrumentation, or otherwise steer the music, except indirectly through camera framing. Power users and musicians who want specific outcomes may be frustrated when the AI produces unwanted styles, inappropriate genres, or musically uninteresting results for a given scene. Without manual override or refinement options, the application is a take-it-or-leave-it experience rather than a controllable creative tool.

Music Quality Varies Based on Visual Input: Output quality depends heavily on how rich, clear, and interpretable the visual content is. Ambiguous, poorly lit, or chaotic scenes produce confused music without coherent direction, while very simple or static scenes produce monotonous results. For the best results, users must actively seek visually interesting, well-lit, well-composed subjects. This introduces a skill ceiling and an effort requirement that contradict the app’s effortless positioning.

Privacy Concerns with Continuous Camera Upload: Real-time processing requires streaming the camera feed to Google’s servers. This raises questions about data retention and use, and about potential exposure of sensitive visual information such as private spaces, identifiable individuals, or confidential documents. Although Google states that processing follows its privacy policies, continuous camera upload carries greater privacy risk than offline processing or one-time image uploads. Users in sensitive environments (workplaces, private homes, around children) may justifiably hesitate to use the application despite assurances about data handling.

Experimental Status Without Long-Term Support Guarantee: As a research demonstration rather than a committed product, Lyria Camera carries no guarantee of continued availability, maintenance, API stability, or feature development. Google has frequently discontinued experimental projects after their initial launch period, leaving early adopters without migration paths or alternatives. Users who build significant workflows or experiences around Lyria Camera risk losing them if Google ends support.

Limited Commercial Viability or Monetization Path: There is no clear business model or revenue source, such as subscriptions, licensing, or advertising, which raises doubts about long-term support. No target customer segment has emerged that is willing to pay for the service. Continued investment is therefore uncertain, and the project could be abandoned once the research is published or the technology validated.

Musical Clichés and Predictable Mappings: The AI’s interpretations risk becoming clichéd or predictable, with similar scenes producing similar music across users. Nature scenes defaulting to ambient piano, urban environments triggering electronic beats, and fast movement producing uptempo rhythms all yield formulaic results that limit surprise and a distinctive artistic voice. The system offers no user controls for randomness or stylistic variation, and no intentional creative rule-breaking. Over extended use, it may therefore produce technically competent but artistically dull results.

How Does It Compare?

Lyria Camera vs Suno AI (Leading Text-to-Music Generator)

Suno AI is widely regarded as a leading AI music generation platform. Users create complete songs with vocals, lyrics, instrumentation, and production from text prompts, with support for custom lyrics, style specifications, and genre selection. A $10/month plan generates about 500 songs, and a mobile app and custom mode offer more detailed control. Millions of users create shareable original music with it.

Input Modality:

- Lyria Camera: Visual camera feed as music generation interface; no text prompts or manual controls

- Suno AI: Text prompts describing desired song including genre, mood, instruments, and optional custom lyrics

Output Type:

- Lyria Camera: Continuous streaming instrumental soundtrack adapting to camera in real-time; indefinite duration

- Suno AI: Fixed-length complete songs (typically 2-4 minutes) with vocals, lyrics, verses, choruses, structured composition

Real-Time Capability:

- Lyria Camera: Live generation responding immediately to camera movements and environmental changes

- Suno AI: Offline generation that takes roughly 30-60 seconds to create a fixed song before playback

Vocal/Lyrics:

- Lyria Camera: Instrumental only, without vocals, suited to an environmental soundtrack

- Suno AI: AI-generated vocals with generated or custom lyrics, central to song generation

Use Case:

- Lyria Camera: Interactive audiovisual experiences, creative installations, experimental performance

- Suno AI: Song creation for music production, content soundtracks, personal enjoyment, commercial release

When to Choose Lyria Camera: For experimental real-time audiovisual experiences, interactive installations, camera-driven music generation, or exploring vision-audio AI integration.

When to Choose Suno AI: For creating complete shareable songs, traditional music composition with vocals and lyrics, controlled music production, or commercial music creation needs.

Lyria Camera vs Endlesss (Collaborative Multiplayer Music Creation)

Endlesss was an innovative online multiplayer loop station for collaborative music creation, with built-in drums, synths, and effects that let users jam together online and build evolving musical pieces; its workflow was well suited to generating ideas. The platform ceased operations in May 2024 and was then revived under HabLab London, an organization founded by Imogen Heap, with a sustainable future still under development.

Interaction Model:

- Lyria Camera: Solo camera-based AI generation without direct musical control or collaboration

- Endlesss: Multiplayer collaborative human performance with loops, instruments, real-time jamming

Music Creation:

- Lyria Camera: AI-generated instrumental music from visual interpretation; no manual playing

- Endlesss: Human performance using virtual instruments, loops, samples; active musical playing

Collaboration:

- Lyria Camera: Single-user experience without multi-user interaction or collaboration features

- Endlesss: Core multiplayer focus enabling remote collaborative jamming with other musicians

Control Level:

- Lyria Camera: Indirect influence through camera framing; minimal musical parameter control

- Endlesss: Direct musical control over instruments, loops, effects, timing, mixing

Accessibility:

- Lyria Camera: No musical knowledge required; music generation is hands-off beyond pointing and framing the camera

- Endlesss: Requires some musical sense and rhythm for meaningful participation

When to Choose Lyria Camera: For zero-skill AI music generation, visual-driven composition, solo experimental experiences, or demonstrating multimodal AI capabilities.

When to Choose Endlesss: For collaborative music making with other musicians (if platform remains available), loop-based composition, social musical interaction, or active performance-based creation.

Lyria Camera vs RJDJ Reactive Music (Pioneer Reactive iOS App)

RJDJ (Reality Jockey) was a groundbreaking iPhone app that detected ambient sounds, sampled or mutated them into harmonies, and mashed them with music to create augmented audio experiences. Built on Pure Data and libpd for iOS, it achieved 300,000+ downloads before being discontinued, having pioneered reactive music concepts that laid the foundation for environment-responsive audio applications.

Input Source:

- Lyria Camera: Visual camera feed interpreted by AI to generate music from images

- RJDJ: Microphone input; ambient sounds processed into reactive music built from existing audio

Technology Approach:

- Lyria Camera: Modern generative AI with neural networks (Gemini vision, Lyria music generation)

- RJDJ: Procedural audio processing through Pure Data patches and algorithmic composition

Music Generation:

- Lyria Camera: Complete musical compositions generated from scratch matching visual interpretation

- RJDJ: Audio manipulation and augmentation building on existing environmental sounds

Platform:

- Lyria Camera: Browser-based cross-platform web application

- RJDJ: iOS-only native app (now discontinued)

Legacy Status:

- Lyria Camera: Active December 2024 release representing the current state of the art

- RJDJ: Historical pioneer from the 2008-2014 era that established reactive music concepts; no longer operational

When to Choose Lyria Camera: Since RJDJ is discontinued, choose Lyria Camera for modern AI-powered reactive music built on the latest generative models, visual-based music generation, or cross-platform browser access.

When to Choose RJDJ: No longer available; historically significant as the pioneer that established the reactive music category and inspired subsequent applications.

Lyria Camera vs RipX Reactive 3D Music (AR Spatial Audio App)

RipX is an AR-enabled music app that aims to transform listening, creating, and performing through 3D spatial audio: users walk around instruments placed in augmented reality and hear them through immersive spatial audio. It offers a novel way to interact with music, but it focuses on playback and manipulation of existing music rather than generative creation.

Core Function:

- Lyria Camera: Generates new AI music from camera visuals creating original compositions

- RipX: Spatializes and manipulates existing music through 3D AR visualization

Creation vs Playback:

- Lyria Camera: Generative creation producing original music matching environment

- RipX: Enhanced playback and visualization of pre-existing music tracks

AR Implementation:

- Lyria Camera: Visual input drives music generation but not AR display; standard camera view

- RipX: Full AR mode with 3D instrument visualization overlaid on physical environment

Music Source:

- Lyria Camera: AI-generated instrumental music created from scratch

- RipX: User’s existing music library or uploaded tracks

Target Use:

- Lyria Camera: Creative generation, experimental composition, audiovisual experiences

- RipX: Enhanced music listening, spatial audio production, interactive performance with existing tracks

When to Choose Lyria Camera: For AI music generation from visual environments, creating original adaptive soundtracks, or exploring generative multimodal AI.

When to Choose RipX: For spatial audio experiences with existing music, AR visualization of familiar tracks, 3D instrument placement, or enhanced music listening rather than creation.

Lyria Camera vs Udio (Text-to-Music with Audio Extension)

Udio is Suno's main competitor, offering text-to-music generation plus an audio-to-audio extension feature that stays closer to the initial audio files, which makes it helpful for musicians seeking a co-production tool. It supports custom lyrics, style specifications, and iterative refinement, generates complete songs with professional quality, and is priced competitively with Suno's subscription model.

Input Method:

- Lyria Camera: Visual camera feed automatically interpreted to generate music without text prompts

- Udio: Text prompts describing desired music plus optional audio files for extension/variation

Real-Time vs Batch:

- Lyria Camera: Real-time streaming generation adapting continuously to camera movement

- Udio: Batch generation creating fixed-length tracks requiring processing time before playback

Music Structure:

- Lyria Camera: Continuous evolving instrumental without verse/chorus/bridge structure

- Udio: Structured complete songs with traditional musical form, vocals, production

Use Case:

- Lyria Camera: Interactive experiences, installations, experimental audiovisual art

- Udio: Music production, song creation, content soundtracks with structured compositions

When to Choose Lyria Camera: For camera-driven generative experiences, real-time adaptive music, visual-audio exploration, or multimodal AI demonstrations.

When to Choose Udio: For traditional song creation with structure and vocals, music production workflows, co-creation with AI for commercial tracks, or controlled composition process.

Final Thoughts

Lyria Camera represents a fascinating convergence of two of Google's leading AI capabilities, Gemini's multimodal vision understanding and Lyria 2's professional-grade music generation, creating a novel interaction paradigm in which the physical environment becomes a musical interface through the smartphone camera. The December 2024 release demonstrates the technical feasibility and creative potential of real-time vision-to-audio translation, showing that latency, quality, and coherence are sufficient for immersive interactive experiences, and it points toward future multimodal AI applications that extend beyond creative demonstrations into entertainment, accessibility, education, and commerce.

By replacing explicit musical controls and parameters with the camera as the interface, Lyria Camera is unusually accessible: users without musical training, audio production knowledge, or technical skills can generate personalized adaptive soundtracks simply by exploring and framing their surroundings. This radical simplification democratizes generative music while preserving artistic value through the user's compositional choices (what to frame, how to move, and how long to linger), giving indirect but meaningful creative agency over the musical outcome. The multimodal integration, in which Gemini's semantic scene understanding generates musically appropriate text prompts that feed Lyria's continuous streaming generation, creates the illusion that the AI directly hears the visual world, even though a complex multi-stage pipeline operates behind the scenes.
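The multi-stage pipeline described above can be sketched as follows. This is a hypothetical illustration, not Google's implementation: the `SceneReading` fields stand in for whatever Gemini actually returns, the descriptor format copies the article's examples ("reflective piano, cityscape calm"), and `PromptMixer` is an invented crossfading step that assumes the streaming generator accepts a set of text prompts with weights. No real Gemini or Lyria RealTime API calls are made.

```python
from dataclasses import dataclass, field


@dataclass
class SceneReading:
    """Stage 1 (hypothetical): a vision model's reading of one camera frame."""
    setting: str     # e.g. "cityscape"
    mood: str        # e.g. "calm"
    instrument: str  # e.g. "reflective piano"


def to_descriptor(reading: SceneReading) -> str:
    """Stage 2: turn a scene reading into a text prompt such as
    'reflective piano, cityscape calm' (format taken from the article)."""
    return f"{reading.instrument}, {reading.setting} {reading.mood}"


@dataclass
class PromptMixer:
    """Stage 3 (hypothetical): keeps one weight per prompt and moves the
    weights gradually toward the newest descriptor, so a streaming music
    model would receive a crossfade instead of an abrupt switch."""
    smoothing: float = 0.3  # fraction of the remaining gap closed per update
    weights: dict[str, float] = field(default_factory=dict)

    def update(self, descriptor: str) -> dict[str, float]:
        self.weights.setdefault(descriptor, 0.0)
        # Iterate over a copy of the keys so faded prompts can be deleted.
        for prompt in list(self.weights):
            target = 1.0 if prompt == descriptor else 0.0
            w = self.weights[prompt]
            self.weights[prompt] = w + self.smoothing * (target - w)
            # Drop older prompts whose influence has faded out.
            if prompt != descriptor and self.weights[prompt] < 0.01:
                del self.weights[prompt]
        return dict(self.weights)


if __name__ == "__main__":
    mixer = PromptMixer()
    frames = [
        SceneReading("cityscape", "calm", "reflective piano"),
        SceneReading("cityscape", "calm", "reflective piano"),
        SceneReading("nature", "vibrant", "energetic drums"),
    ]
    for reading in frames:
        # In a real system these weights would be sent to the music model.
        print(mixer.update(to_descriptor(reading)))
```

Smoothing the weights rather than swapping prompts outright is one plausible way to keep a continuous stream coherent when the camera pans between scenes; the 0.3 rate and the 0.01 cutoff are arbitrary choices.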

The application is particularly well suited to artists creating interactive installations, content creators seeking unique synchronized soundtracks for visual media, experimental musicians exploring unconventional performance interfaces, accessibility researchers developing mood-enhancing technologies, AR/VR developers prototyping adaptive soundscape systems, and general users who enjoy playful creative exploration that turns routine activities into artistic experiences by adding music to familiar environments.

For users who need traditional composition control with vocals and structured songs, Suno AI and Udio provide comprehensive text-to-music platforms that generate shareable, commercial-quality tracks. For collaborative real-time performance with other musicians, Endlesss (if the platform stabilizes under its new HabLab London stewardship) offers multiplayer jamming, in contrast to Lyria Camera's solo AI-driven generation. For spatial audio experiences with existing music rather than generative creation, RipX delivers AR-enhanced listening. For historical context, the pioneering RJDJ established reactive, environment-responsive audio concepts, though the platform is no longer operational.

But for the specific intersection of real-time camera-driven AI music generation, seamless vision-audio multimodal integration, zero-skill accessibility, and state-of-the-art generative AI demonstration, Lyria Camera provides a capability that no established alternative fully replicates. Its primary limitations (niche creative positioning without clear practical utility, hardware and connectivity requirements, limited user control over musical parameters, quality that varies with the visual input, privacy concerns from continuous camera upload, experimental status without long-term support guarantees, an unclear monetization or sustainability model, and the risk of predictable, clichéd musical mappings) reflect the expected constraints of early-stage experimental technology that demonstrates possibilities rather than delivering a production-ready commercial product.

The core value proposition is experiential delight and creative inspiration. If the goal is exploring cutting-edge multimodal AI capabilities, if transforming familiar environments through musical augmentation creates value, if the camera-as-instrument interaction model proves intriguing, if zero-barrier creative tools open up artistic expression that was previously inaccessible, or if the aim is demonstrating state-of-the-art AI integration for educational, research, or promotional purposes, Lyria Camera is a compelling experimental platform worth exploring, despite limitations that rule out serious production use or commercial application.

The technology's future depends on community adoption that inspires derivative applications, on its demonstration value attracting developer interest in the Lyria RealTime API, on Google's continued investment beyond the initial research publication, on the discovery of compelling use cases that justify sustained development, and on potential integration into Google products (Google Lens, YouTube, Photos), where camera-responsive features could enhance existing applications rather than remain standalone experimental demos. For early adopters who value experimental creative technology and accept the ephemeral nature of research demonstrations, Lyria Camera delivers on its promise: it turns smartphone cameras into musical instruments that generate adaptive soundtracks matching the visual world, demonstrating the sophistication of multimodal AI integration and offering a glimpse of a future in which AI bridges human sensory modalities, creating rich multisensory experiences from single-modality inputs.