Overview

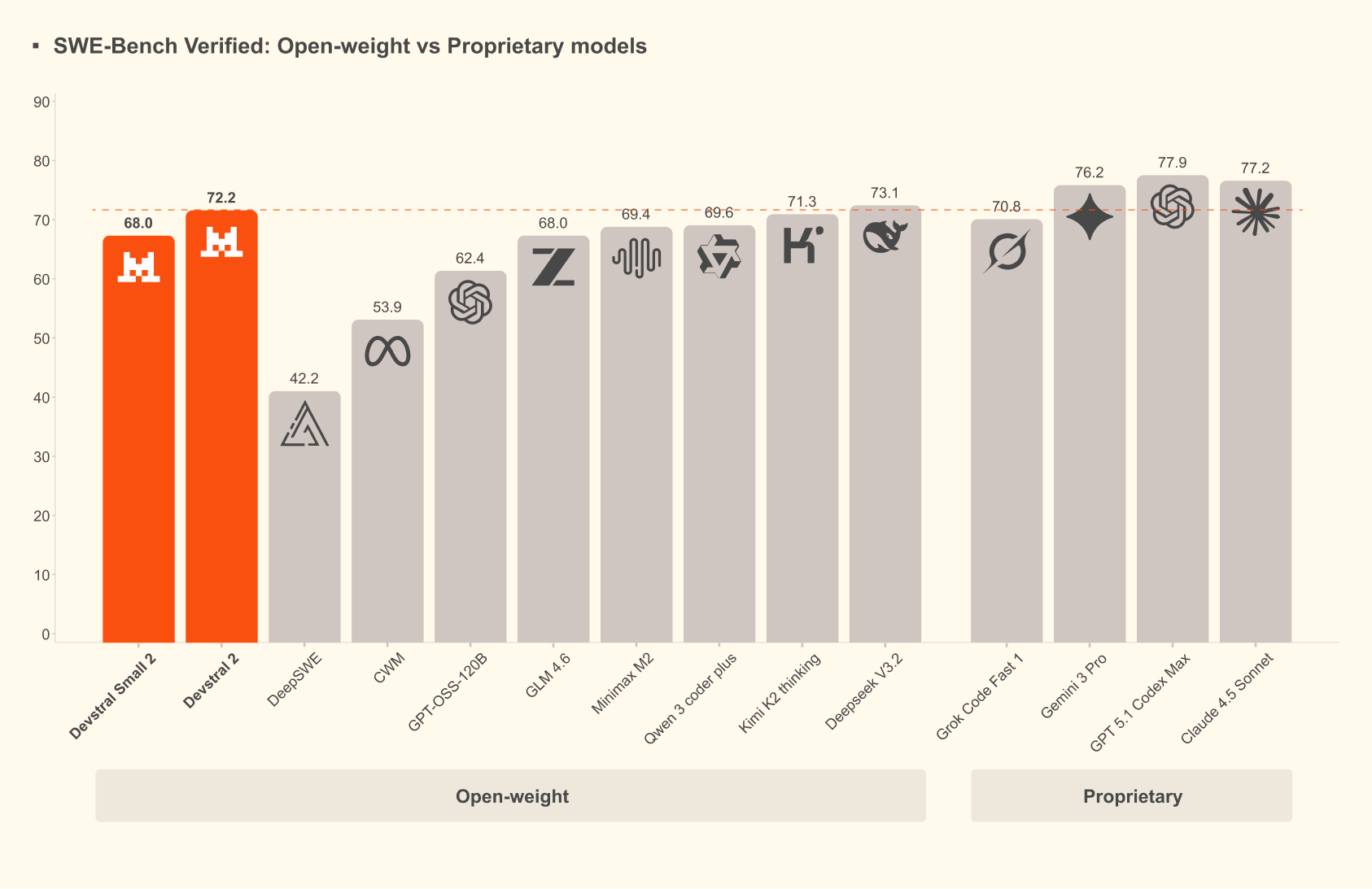

Devstral 2 is Mistral AI’s open-weight coding model family, launched in December 2025 and designed for agentic software engineering: autonomous code generation, modification, and refactoring. The family comes in two sizes. Devstral 2 (123B parameters) scores 72.2% on SWE-bench Verified, and Devstral Small 2 (24B parameters) scores 68.0% on the same benchmark. Both support a 256K-token context window, which is large enough to analyze sizable codebases in one request. Rather than aiming only to match general-purpose language models on code tasks, Devstral 2 is optimized for workflows in which the model navigates a codebase on its own, proposes multi-file modifications, and reasons about architecture-level changes.

Devstral 2 shipped alongside Mistral Vibe CLI, an open-source command-line agent for end-to-end code automation released under the Apache 2.0 license, which lets developers work with coding models directly from the terminal. The December 2025 announcement positioned Devstral 2 as roughly 5x smaller than DeepSeek V3.2 and 8x smaller than Kimi K2 while matching or exceeding their performance on key software reasoning benchmarks. At the time of writing, the API is free during a limited launch period, with token-based pricing planned afterwards (\$0.40 per million input tokens and \$1.20 per million output tokens). Devstral 2 targets development teams, coding infrastructure providers, and organizations automating code-related workflows that want an open-weight alternative to proprietary models.
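
To make the planned pricing concrete, the arithmetic can be sketched in a few lines of Python. The two prices are the ones quoted in this article; the function name and the example workload are illustrative assumptions, not part of any Mistral SDK.

```python
# Planned post-launch prices quoted in this article (USD per million tokens).
INPUT_PRICE_PER_M = 0.40
OUTPUT_PRICE_PER_M = 1.20


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in USD for one workload."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical agent session: 2M tokens read, 250K tokens generated.
    print(f"${estimate_cost_usd(2_000_000, 250_000):.2f}")
```

For the hypothetical session above, the input side costs \$0.80 and the output side \$0.30, so the script prints \$1.10.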

Key Features

SOTA Open-Weight Code Model: Scores 72.2% on SWE-bench Verified, reported as the highest among open-weight code models and close to closed-source leaders. Because the weights are open, teams can deploy locally, fine-tune, and use the model commercially without depending on an API, which gives them ownership and deployment flexibility that closed-source alternatives do not.

Devstral Small 2 for Local Deployment: A 24B-parameter model optimized to run on consumer hardware (a single GPU or a well-equipped laptop). Its 68.0% SWE-bench Verified score places it among the strongest open-weight models in its size class. Local deployment allows offline operation, keeps code and data on the developer’s own machine, and serves latency-critical applications without cloud dependencies.

256K Token Context Window: The long context supports analysis of large codebases, long project histories, and multi-file dependencies in a single request. With smaller windows a model often sees only isolated files, which limits how well it understands the code around a change.
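
A rough way to check whether a project fits in that window is to estimate its token count from file sizes. The sketch below assumes about four characters per token, which is a common rule of thumb and not a property of Devstral’s tokenizer; the function names, the 256,000 constant, and the output reserve are likewise illustrative.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 256_000  # "256K" as stated in this article (approximate)
CHARS_PER_TOKEN = 4              # assumption: rough average, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, suffixes: tuple[str, ...] = (".py",)) -> int:
    """Sum the estimated tokens of all files under root with a matching suffix."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            total += estimate_tokens(text)
    return total


def fits_in_context(token_count: int, reserve_for_output: int = 16_000) -> bool:
    """Check the estimate against the window, leaving room for the reply."""
    return token_count + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

Calling `fits_in_context(estimate_repo_tokens("."))` from a project root gives a quick yes/no answer; an exact count would require the model’s actual tokenizer.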

Multi-File Orchestration: Reasons about codebase architecture so that it can propose and implement changes across multiple files at once while tracking dependencies and keeping the result consistent. This reduces the risk that an isolated change introduces bugs through unintended side effects.

Agentic Code Workflows: Designed for agent-driven software engineering tasks in which the model explores a repository, identifies issues, proposes solutions, and carries out modifications end to end without human intervention at each step. This differs from pure code completion by emphasizing iterative problem-solving.

Mistral Vibe CLI Integration: A purpose-built command-line agent that lets developers use Devstral from the terminal with interactive chat, file references using the @ symbol, shell command execution with !, and slash commands for configuration. The CLI offers an accessible entry point for code automation that requires no API integration work.
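
The three input conventions (@ for file references, ! for shell commands, / for slash commands) can be illustrated with a small classifier. This is a hypothetical sketch of how such prefixes might be told apart, not Vibe CLI’s actual parser; `ParsedInput` and `parse_input` are invented names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParsedInput:
    kind: str                       # "shell", "slash", or "chat"
    text: str                       # command or message body
    file_refs: tuple[str, ...] = ()  # paths mentioned with @ in a chat message


def parse_input(line: str) -> ParsedInput:
    """Classify one line of terminal input by its leading symbol."""
    stripped = line.strip()
    if stripped.startswith("!"):
        return ParsedInput("shell", stripped[1:].strip())
    if stripped.startswith("/"):
        return ParsedInput("slash", stripped[1:].strip())
    # Simplification: a file reference is any whitespace-delimited word
    # that starts with "@" and has at least one character after it.
    refs = tuple(
        word[1:] for word in stripped.split()
        if word.startswith("@") and len(word) > 1
    )
    return ParsedInput("chat", stripped, refs)
```

For example, `parse_input("Explain @src/app.py please")` yields a chat message with `file_refs == ("src/app.py",)`, while `parse_input("!git status")` is classified as a shell command.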

Project-Aware Context Scanning: Automatically analyzes the file structure and Git status to supply relevant project context without manual specification. Knowing the project’s state and organization helps the model produce suggestions that fit existing patterns and conventions.
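
As an illustration of what reading Git status can involve, the sketch below groups changed paths by the two-character status code that `git status --porcelain` prints at the start of each line. How Vibe CLI gathers this context internally is not documented here, so `parse_porcelain` and `git_changes` are assumed helper names.

```python
import subprocess


def parse_porcelain(output: str) -> dict[str, list[str]]:
    """Group paths by the two-character code from `git status --porcelain`."""
    changes: dict[str, list[str]] = {}
    for line in output.splitlines():
        if len(line) < 4:
            continue  # too short to hold "XY <path>"
        status, path = line[:2], line[3:]
        changes.setdefault(status, []).append(path)
    return changes


def git_changes(repo_root: str) -> dict[str, list[str]]:
    """Run git in repo_root and return its pending changes by status code."""
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_root,
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_porcelain(result.stdout)
```

A summary such as `{" M": ["src/app.py"], "??": ["notes.txt"]}` (one modified file, one untracked file) is compact enough to include in a prompt as project context.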

Tool-Augmented Intelligence: Equipped with tools for file manipulation (reading, editing, creating), code search (grep, semantic search), version control operations (git), and command execution. With these tools the agent can act directly on the codebase rather than only make suggestions.
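
A tool set of this kind is often exposed to a model as a registry of named functions. The following is a minimal sketch under that assumption, with a file reader and a grep-style search restricted to `.py` files for brevity; the tool names and the `call_tool` dispatcher are hypothetical and do not describe Devstral’s real tool schema.

```python
import re
from pathlib import Path
from typing import Callable


def read_file(root: Path, path: str) -> str:
    """Return the text of one file, addressed relative to the project root."""
    return (root / path).read_text(encoding="utf-8")


def search_code(root: Path, pattern: str) -> list[tuple[str, int, str]]:
    """Grep-style search: (relative path, line number, line) for each match."""
    regex = re.compile(pattern)
    hits = []
    for file in sorted(root.rglob("*.py")):
        text = file.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                hits.append((file.relative_to(root).as_posix(), number, line.strip()))
    return hits


# Registry mapping tool names to implementations (names are illustrative).
TOOLS: dict[str, Callable] = {"read_file": read_file, "search_code": search_code}


def call_tool(root: Path, name: str, **kwargs):
    """Dispatch a named tool call, rejecting names that are not registered."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](root, **kwargs)
```

Keeping every tool behind a single dispatcher makes it straightforward to log calls or to ask for confirmation before anything that modifies files or runs commands.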

Apache 2.0 License: Permissive licensing that allows commercial deployment, redistribution, fine-tuning, and incorporation into products without royalties. This avoids much of the legal complexity that comes with more restrictive model licenses.

Fine-Tuning and Customization Support: Supports task-specific fine-tuning so that organizations can adapt the model to proprietary patterns, internal standards, and specialized domains beyond what the base model covers.

How It Works

Developers run Vibe CLI in the project root so that the agent can scan the codebase structure, read the Git history, and pick up project conventions. Users then describe the desired change in natural language (“Fix this memory leak,” “Add type hints,” “Refactor for performance”). Devstral analyzes the codebase context, proposes multi-file modifications, checks them for compatibility, and applies the edits in a coordinated way across the affected files. Developers review the changes through their standard Git workflow, test locally, and commit. Continued dialogue drives the agentic loop, so each round can refine the previous result.
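
The propose, apply, and check cycle described above can be sketched as a small loop. This is a conceptual illustration only: the model and the compatibility check are passed in as plain callables, and `run_agent_loop`, `propose`, and `check` are hypothetical names rather than parts of Vibe CLI.

```python
from typing import Callable

Edit = tuple[str, str]  # (file path, new file content)


def run_agent_loop(
    request: str,
    files: dict[str, str],
    propose: Callable[[str, dict[str, str], str], list[Edit]],
    check: Callable[[dict[str, str]], str],
    max_rounds: int = 3,
) -> tuple[dict[str, str], bool]:
    """Iterate propose -> apply -> check until the check passes or rounds run out.

    `propose` stands in for the model: it receives the request, the current
    files, and feedback from the last failed check. `check` returns an empty
    string on success or an error message to feed back into the next round.
    """
    feedback = ""
    for _ in range(max_rounds):
        candidate = dict(files)  # work on a copy so the input is not mutated
        for path, content in propose(request, files, feedback):
            candidate[path] = content
        feedback = check(candidate)
        if not feedback:
            return candidate, True
        files = candidate  # the next round builds on the failed attempt
    return files, False
```

In practice `check` might run a test suite or a linter, and the returned boolean tells the caller whether the result is ready for human review or still failing after the round limit.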

Use Cases

Large-Scale Code Refactoring: Teams with large legacy codebases can use Devstral to modernize patterns, update dependencies, and improve code quality systematically across thousands of files.

Automated Bug Fixing: Organizations can run Devstral against issue databases and bug-tracker integrations to generate fixes for reported bugs with minimal human intervention, shortening bug resolution times.

Code Quality Enforcement: Teams can configure Devstral to enforce company standards (linting, formatting, type annotations, security patterns) and update codebases automatically, keeping them consistent without manual effort.

Rapid Feature Implementation: Development teams describe feature requirements, and Devstral generates an implementation across multiple files that fits the existing architecture and matches established code patterns.

Multi-Language Code Migration: Organizations migrating between languages or frameworks can rely on Devstral’s grasp of source-code semantics and target-language idioms to reduce the manual translation burden.

On-Premise and Privacy-Sensitive Deployment: Organizations in regulated industries (finance, healthcare, defense) can deploy Devstral Small 2 on-premises, maintaining data sovereignty and keeping sensitive code out of cloud storage.

Pros & Cons

Advantages

State-of-the-Art Open Performance: At 72.2% on SWE-bench Verified, Devstral 2 posts the highest reported score for an open-weight model, approaching proprietary leaders while preserving model ownership and deployment flexibility.

7x Cost-Efficient vs Closed-Source: The headline 7x figure is a claimed cost advantage over closed-source models such as Claude Sonnet and GPT-4 on equivalent tasks. At that ratio, large-scale automation that would be prohibitively expensive through proprietary APIs becomes feasible.

Open-Weight with Unrestricted Licensing: Apache 2.0 licensing removes proprietary restrictions and commercial limitations, so the model can be deployed in any context without vendor lock-in.

Specialized Agentic Capabilities: Purpose-built for autonomous code workflows, with an understanding of multi-file systems, architecture patterns, and iterative refinement. The specialization is aimed at complex, multi-step code reasoning rather than single-shot completion.

Local Deployment Option: Devstral Small 2 runs offline on consumer hardware, providing privacy, data sovereignty, and independence from cloud infrastructure that closed-source, API-only alternatives cannot offer.

Comprehensive Tooling: The native command-line agent bundles file manipulation, code search, and version control, enabling complete agentic workflows without external integration.

Disadvantages

Human Evaluation Preference Gap: Independent evaluations show Claude Sonnet 4.5 preferred in 53.1% of comparisons versus 42.9% for Devstral 2, so a gap remains despite strong benchmark scores. This suggests that the day-to-day user experience lags behind what the benchmark numbers imply.

Recent Launch with Limited Production Data: The December 2025 release means long-term stability is unproven and production deployment examples are scarce, which adds risk for mission-critical use.

Complex Local Deployment Requirements: Devstral 2 requires four or more H100 GPUs (or equivalent), which limits self-hosting to organizations with significant infrastructure. Devstral Small 2 still needs a capable consumer GPU for usable performance.
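
The four-GPU figure is consistent with a back-of-the-envelope estimate of weight memory. The sketch below counts only the weights (no KV cache, activations, or runtime overhead, all of which add to the total) and assumes 80 GB per H100; the byte sizes per parameter are standard for each precision, while the function names are illustrative.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 10^9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


def min_gpus_for_weights(
    params_billion: float, dtype: str, gpu_memory_gb: float = 80.0
) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(weight_memory_gb(params_billion, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    print(weight_memory_gb(123, "bf16"), min_gpus_for_weights(123, "bf16"))
    print(weight_memory_gb(24, "bf16"), weight_memory_gb(24, "int4"))
```

At bf16 the 123B model needs about 246 GB for weights, which already exceeds three 80 GB cards. The 24B model needs about 48 GB at bf16 and about 12 GB at 4-bit quantization, which is why it is the variant aimed at single-GPU machines.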

Context Window Still Limited vs Largest Codebases: 256K tokens covers most codebases, but very large monorepos or projects with extensive history may exceed it and require chunking or iterative analysis.
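
One simple form of chunking is to pack files greedily into batches that each stay under a token budget. The sketch below assumes per-file token counts are already known (for example from a tokenizer or a character-based estimate); `pack_into_chunks` is a hypothetical helper, and real pipelines would usually also group files by dependency rather than by path alone.

```python
def pack_into_chunks(file_tokens: dict[str, int], budget: int) -> list[list[str]]:
    """Greedily group files, in path order, so each group fits the token budget."""
    chunks: list[list[str]] = []
    current: list[str] = []
    used = 0
    # Sorting by path keeps files from the same directory close together.
    for path, tokens in sorted(file_tokens.items()):
        if tokens > budget:
            raise ValueError(f"{path} alone exceeds the budget of {budget} tokens")
        if current and used + tokens > budget:
            chunks.append(current)
            current, used = [], 0
        current.append(path)
        used += tokens
    if current:
        chunks.append(current)
    return chunks
```

With a budget of 256 and files of 100, 150, and 120 tokens named `a.py`, `b.py`, and `c.py`, the first two share a chunk (250 tokens) and the third starts a new one.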

Fine-Tuning Requires Expertise: Although supported, effective fine-tuning demands knowledge of model internals, data preparation, and evaluation, making it less accessible than API services that hide this complexity.

Tool Accuracy Variability: Code generation quality varies with complexity, clarity of specifications, and codebase idiosyncrasies. Complex architectural changes may require human refinement.

How Does It Compare?

Devstral 2 vs Code Llama (Meta)

Code Llama is Meta’s code-specialized foundation model family (7B to 70B parameters), first released in August 2023. It supports infilling, instruction following, and long context (trained on 16K-token sequences, with inputs of up to 100K tokens), and is distributed under Meta’s Llama 2 Community License rather than Apache 2.0.

Model Size:

- Devstral 2: 123B parameters

- Code Llama: Up to 70B parameters

Benchmark Performance:

- Devstral 2: 72.2% SWE-bench Verified

- Code Llama 70B: Older benchmarks; not directly comparable on SWE-bench Verified

Context Window:

- Devstral 2: 256K tokens

- Code Llama: 100K effective context

Agentic Capabilities:

- Devstral 2: Purpose-built for autonomous agent workflows

- Code Llama: Code completion and generation focused

CLI Tooling:

- Devstral 2: Native Vibe CLI with integrated tooling

- Code Llama: No native CLI; requires integration

Release Date:

- Devstral 2: December 2025

- Code Llama: August 2023 (mature, established)

When to Choose Devstral 2: For the latest agentic capabilities, a larger context window, and integrated CLI tooling.

When to Choose Code Llama: For an established model with proven production deployments and broader ecosystem integration.

Devstral 2 vs GPT-4.1 (OpenAI)

GPT-4.1 is OpenAI’s API-only, coding-optimized model family (GPT-4.1, GPT-4.1 mini, GPT-4.1 nano) with a 1-million-token context window, released in April 2025. It scores 52-54.6% on SWE-bench Verified and focuses on frontend coding and instruction reliability.

Model Access:

- Devstral 2: Open-weight, fully deployable locally

- GPT-4.1: API-only, vendor-dependent

Performance:

- Devstral 2: 72.2% SWE-bench Verified

- GPT-4.1: 52-54.6% SWE-bench Verified (lower score)

Context Window:

- Devstral 2: 256K tokens

- GPT-4.1: 1 million tokens

Cost:

- Devstral 2: \$0.40/\$1.20 per million tokens (post-beta)

- GPT-4.1: Proprietary pricing; 26% lower than GPT-4o

Licensing:

- Devstral 2: Apache 2.0; unrestricted commercial use

- GPT-4.1: Proprietary; API-dependent vendor lock-in

Human Evaluation:

- Devstral 2: Preferred over DeepSeek V3.2; trails Claude Sonnet 4.5

- GPT-4.1: Balanced performance; accuracy degrades with larger context

When to Choose Devstral 2: For an open-weight model, stronger SWE-bench performance, and local deployment.

When to Choose GPT-4.1: For a larger context window (1M tokens), API convenience, and the established OpenAI ecosystem.

Devstral 2 vs DeepSeek Coder V2

DeepSeek Coder V2 is a code-specialized model from the Chinese AI company DeepSeek. It scores 43.4% on the LiveCodeBench competitive programming benchmark and has 236B total parameters (21B active, mixture-of-experts), a 128K context window, and support for 338 programming languages.

Model Architecture:

- Devstral 2: Dense Transformer 123B

- DeepSeek Coder V2: Sparse MoE 236B (21B active)

SWE-bench Performance:

- Devstral 2: 72.2% Verified

- DeepSeek Coder V2: Competitive but not directly comparable SWE-bench scoring

Inference Efficiency:

- Devstral 2: Dense model; straightforward inference

- DeepSeek Coder V2: MoE reduces active computation; more efficient inference

Language Support:

- Devstral 2: Major languages

- DeepSeek Coder V2: 338 programming languages (broader support)

Human Preference:

- Devstral 2: 42.8% win rate vs 28.6% loss rate against DeepSeek V3.2 (a different DeepSeek model from Coder V2)

- DeepSeek Coder V2: Strong performance but human evaluation comparison unclear

Licensing:

- Devstral 2: Apache 2.0; unrestricted

- DeepSeek Coder V2: Open weights under DeepSeek’s own model license, which permits commercial use but adds use-based restrictions

When to Choose Devstral 2: For a simpler dense architecture, Apache 2.0 licensing, and purpose-built agentic tooling.

When to Choose DeepSeek Coder V2: For broader language support and potentially more efficient inference through MoE.

Devstral 2 vs Claude 3.7 Sonnet (Anthropic)

Claude 3.7 Sonnet is an Anthropic model released in February 2025, focused on balanced performance, long-context understanding, and safety, with a 200K-token context window and support for multiple input modalities.

Model Access:

- Devstral 2: Open-weight, fully deployable

- Claude 3.7 Sonnet: API-only, vendor-dependent

Coding Performance:

- Devstral 2: 72.2% SWE-bench Verified

- Claude 3.7 Sonnet: Strong general performance; note that the 53.1% vs 42.9% human-preference result reported for Devstral 2 was measured against Claude Sonnet 4.5, not 3.7

Context Window:

- Devstral 2: 256K tokens

- Claude 3.7 Sonnet: 200K tokens

Cost:

- Devstral 2: \$0.40/\$1.20 per million tokens (post-beta)

- Claude 3.7 Sonnet: Proprietary pricing; typically higher per-token

Licensing and Ownership:

- Devstral 2: Complete ownership; local deployment

- Claude 3.7 Sonnet: Vendor lock-in; API-dependent

User Preference:

- Devstral 2: Stronger benchmark scores

- Claude 3.7 Sonnet: No direct preference comparison available; the human evaluation cited in this article favors the newer Claude Sonnet 4.5 over Devstral 2

When to Choose Devstral 2: For open-weight access, cost efficiency at scale, and local deployment.

When to Choose Claude 3.7 Sonnet: For multimodal support, a managed API, and Anthropic’s established track record. The user-preference advantage cited in this article belongs to Claude Sonnet 4.5.

Final Thoughts

Devstral 2 represents a significant step forward for open-weight coding models, combining specialized agentic capabilities, strong benchmark performance, and permissive licensing to meet growing developer demand for open alternatives to closed-source coding models. Its 72.2% SWE-bench Verified score places it among the strongest open-weight models and close to closed-source leaders, while its agentic design targets real-world software engineering workflows rather than pure code completion.

The pairing of Devstral 2 (enterprise-grade) and Devstral Small 2 (local deployment) covers different deployment requirements, from large organizations with dedicated infrastructure to individual developers who value privacy and independence. The native Vibe CLI integration shows attention to developer experience in terminal-native workflows.

However, human evaluation results showing Claude Sonnet 4.5 preferred in 53.1% of comparisons versus 42.9% for Devstral 2 suggest that qualitative gaps persist despite strong benchmark scores. The December 2025 launch also means long-term stability is unproven and production examples are limited, a legitimate risk for mission-critical systems. Devstral 2’s hardware requirements (four or more H100-class GPUs) restrict self-hosting to organizations with significant infrastructure.

For development teams that value open-source principles, cost efficiency at scale, and control over local deployment, Devstral 2 is a compelling foundation for autonomous code automation. For organizations that accept vendor lock-in and higher costs, Claude Sonnet 4.5 remains the stronger choice on human preference, and GPT-4.1 offers a much larger context window.

For coding infrastructure providers, AI-powered development tools, and organizations automating code workflows at enterprise scale, Devstral 2 is a milestone in making agentic coding capabilities available through open-weight models. It opens up automation that previously depended on proprietary APIs while keeping performance close to closed-source leaders.