
Mastra

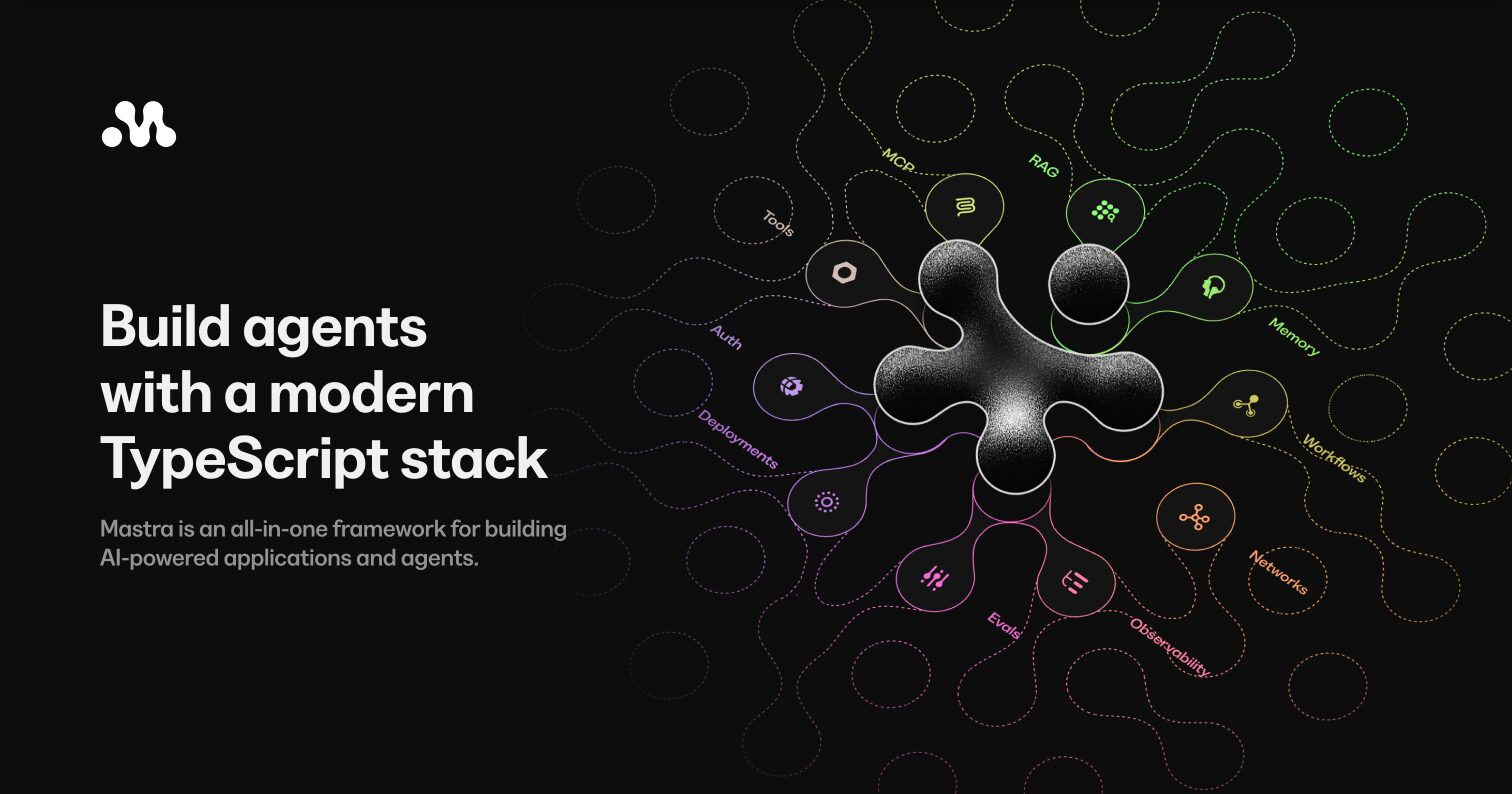

From the team behind Gatsby, Mastra is a new, opinionated framework for building AI-powered applications and agents with a modern TypeScript stack. It provides a complete set of primitives (workflows, memory, streaming, evaluations, and tracing) along with a local “Studio” UI for visual debugging. To start building immediately, run `npm create mastra@latest`.

Features

- Model Routing: Connect to 40+ LLM providers (OpenAI, Anthropic, Gemini, etc.) through a single standard interface.

- Strictly Typed Workflows: Build deterministic, graph-based agent workflows in which each step declares its input and output schemas (with Zod), so the data passed between steps is type-checked by TypeScript.

- Local Dev Studio: A built-in interactive GUI (running on localhost) to visualize agent behavior, inspect traces, and debug steps in real time.

- Built-in Evals & Tracing: Native tools to measure agent performance (for example, accuracy and recall) and track token usage, without requiring an external SaaS tool to get started.

- Memory Management: Persistent conversation history plus “semantic memory” for building long-running, context-aware agents.
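To make the “Strictly Typed Workflows” idea concrete, here is a hypothetical, self-contained TypeScript sketch. It is not Mastra's API (Mastra ships its own helpers, such as `createWorkflow` and `createStep` with Zod schemas); the names `Step`, `runStep`, `chain`, the validator helpers, and the stubbed weather step are illustrative assumptions. It shows the two kinds of checking such a design combines: the compiler rejects step chains whose types don't line up, and each step validates its data at runtime.

```typescript
// Hypothetical sketch, NOT Mastra's API: each step pairs runtime validators
// with static types, so mismatched step chains fail to compile.

type Validator<T> = (value: unknown) => T;

interface Step<I, O> {
  id: string;
  input: Validator<I>; // checks data entering the step
  output: Validator<O>; // checks data leaving the step
  execute: (input: I) => Promise<O>;
}

// Validate the input, run the step, then validate its result.
async function runStep<I, O>(step: Step<I, O>, raw: unknown): Promise<O> {
  const input = step.input(raw);
  return step.output(await step.execute(input));
}

// Chain two steps; the call only type-checks if the first step's output
// type matches the second step's input type.
function chain<A, B, C>(first: Step<A, B>, second: Step<B, C>): Step<A, C> {
  return {
    id: `${first.id}->${second.id}`,
    input: first.input,
    output: second.output,
    execute: async (a) => runStep(second, await runStep(first, a)),
  };
}

// Read one property from an unknown value (undefined if it is not an object).
function field(value: unknown, key: string): unknown {
  return typeof value === "object" && value !== null
    ? (value as Record<string, unknown>)[key]
    : undefined;
}

function requireString(value: unknown, key: string): string {
  const v = field(value, key);
  if (typeof v !== "string") throw new TypeError(`${key} must be a string`);
  return v;
}

function requireNumber(value: unknown, key: string): number {
  const v = field(value, key);
  if (typeof v !== "number") throw new TypeError(`${key} must be a number`);
  return v;
}

type CityInput = { city: string };
type Reading = { city: string; tempC: number };
type Report = { report: string };

const readingValidator: Validator<Reading> = (v) => ({
  city: requireString(v, "city"),
  tempC: requireNumber(v, "tempC"),
});

const fetchTemp: Step<CityInput, Reading> = {
  id: "fetch-temp",
  input: (v) => ({ city: requireString(v, "city") }),
  output: readingValidator,
  // Stubbed lookup standing in for a real weather API call.
  execute: async ({ city }) => ({ city, tempC: city === "Oslo" ? 4 : 21 }),
};

const formatReport: Step<Reading, Report> = {
  id: "format-report",
  input: readingValidator,
  output: (v) => ({ report: requireString(v, "report") }),
  execute: async ({ city, tempC }) => ({ report: `${city}: ${tempC}°C` }),
};

// Compiles because fetchTemp outputs a Reading and formatReport accepts one;
// chain(formatReport, fetchTemp) would be a type error.
const weatherFlow = chain(fetchTemp, formatReport);
```

Running `runStep(weatherFlow, { city: "Oslo" })` resolves to `{ report: "Oslo: 4°C" }`, while `{ city: 42 }` is rejected by the first step's input validator. In a real project a schema library such as Zod would replace the hand-written validators.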

How It Works

- Install: Run `npm create mastra@latest` to scaffold a new project.

- Define: Write your agents, tools, and workflows in standard TypeScript files.

- Debug: Boot up the Mastra Studio locally to visually test your agents, run evaluations, and tweak prompts.

- Deploy: Ship your agents as standard Node.js/Next.js applications to your preferred infrastructure (Vercel, AWS, etc.).
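The “Define” step comes down to ordinary TypeScript modules. As a hypothetical sketch (not Mastra's own `createTool` helper, whose options are covered in the project's documentation), a tool can be modeled as a described, validated function, together with a small dispatcher that executes a tool call emitted by a model. The `Tool`, `ToolCall`, `register`, and `dispatch` names and the stubbed order store are assumptions for illustration.

```typescript
// Hypothetical sketch, NOT Mastra's createTool API: a tool is a named,
// described function that an agent runtime can look up and call by name.

interface Tool<I, O> {
  id: string;
  description: string; // shown to the model so it can decide when to call the tool
  parse: (args: unknown) => I; // validates model-supplied arguments
  execute: (input: I) => Promise<O>;
}

// A tool call as a model might emit it: a tool name plus JSON-encoded arguments.
interface ToolCall {
  name: string;
  argsJson: string;
}

// Stubbed order store standing in for a real company API.
const orders: Record<string, string> = { A100: "shipped", A101: "processing" };

const orderStatus: Tool<{ orderId: string }, { status: string }> = {
  id: "order-status",
  description: "Look up the fulfillment status of an order by its ID.",
  parse: (args) => {
    const orderId =
      typeof args === "object" && args !== null
        ? (args as Record<string, unknown>)["orderId"]
        : undefined;
    if (typeof orderId !== "string") throw new TypeError("orderId must be a string");
    return { orderId };
  },
  execute: async ({ orderId }) => ({ status: orders[orderId] ?? "unknown" }),
};

// Type-erased form so tools with different input/output types share one registry.
interface RegisteredTool {
  description: string;
  run: (args: unknown) => Promise<unknown>;
}

function register<I, O>(tool: Tool<I, O>): [string, RegisteredTool] {
  return [
    tool.id,
    { description: tool.description, run: async (args) => tool.execute(tool.parse(args)) },
  ];
}

const registry = new Map<string, RegisteredTool>([register(orderStatus)]);

// Resolve a model's tool call: find the tool, decode the JSON, validate, execute.
async function dispatch(call: ToolCall): Promise<unknown> {
  const tool = registry.get(call.name);
  if (!tool) throw new Error(`unknown tool: ${call.name}`);
  return tool.run(JSON.parse(call.argsJson));
}
```

For example, `dispatch({ name: "order-status", argsJson: '{"orderId":"A100"}' })` resolves to `{ status: "shipped" }`. Keeping validation inside the tool means a malformed call from the model fails loudly instead of reaching the company API.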

Use Cases

- Custom AI Assistants: Building specialized support bots that have access to your company’s API and documentation.

- Complex RAG Applications: Orchestrating multi-step retrieval workflows where data is fetched, filtered, and synthesized from multiple sources.

- Agent Reliability Engineering: Using the built-in evaluations to catch regressions, such as an agent that starts to “hallucinate,” before pushing updates to production.
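The multi-step RAG use case (fetch, filter, and synthesize from multiple sources) can be sketched in plain TypeScript. This is a hypothetical illustration, not Mastra's RAG API: the two sources are in-memory stubs, and a simple keyword-overlap score stands in for the embedding similarity a production pipeline would typically compute against a vector store. The names `retrieve`, `score`, and `buildContext` are assumptions.

```typescript
// Hypothetical sketch of a fetch -> filter -> synthesize retrieval pipeline.
// Keyword overlap stands in for embedding similarity; both sources are stubs.

interface Doc {
  source: string;
  text: string;
}

type Source = (query: string) => Promise<Doc[]>;

// Stub sources standing in for, e.g., a documentation index and a ticket archive.
const docsSource: Source = async () => [
  { source: "docs", text: "Reset your password from the account settings page." },
  { source: "docs", text: "Billing runs on the first day of each month." },
];
const ticketSource: Source = async () => [
  { source: "tickets", text: "Password reset emails can take five minutes to arrive." },
  { source: "tickets", text: "Reset your password from the account settings page." },
];

// Lowercased word set used for crude lexical matching.
function terms(text: string): Set<string> {
  return new Set(text.toLowerCase().match(/[a-z0-9]+/g) ?? []);
}

// Fraction of query terms that also appear in the document (0 to 1).
function score(query: string, doc: Doc): number {
  const q = Array.from(terms(query));
  const d = terms(doc.text);
  if (q.length === 0) return 0;
  return q.filter((t) => d.has(t)).length / q.length;
}

async function retrieve(query: string, sources: Source[], minScore = 0.5, topK = 3): Promise<Doc[]> {
  // 1. Fetch: query every source in parallel.
  const batches = await Promise.all(sources.map((source) => source(query)));
  // 2. Filter: drop exact duplicates and anything below the relevance threshold.
  const seen = new Set<string>();
  const kept = ([] as Doc[]).concat(...batches).filter((doc) => {
    if (seen.has(doc.text)) return false;
    seen.add(doc.text);
    return score(query, doc) >= minScore;
  });
  // 3. Rank: highest score first, keep the top K.
  return kept.sort((a, b) => score(query, b) - score(query, a)).slice(0, topK);
}

// 4. Synthesize: number each passage so the model's answer can cite its sources.
function buildContext(docs: Doc[]): string {
  return docs.map((doc, i) => `[${i + 1}] (${doc.source}) ${doc.text}`).join("\n");
}
```

Calling `retrieve("reset password", [docsSource, ticketSource])` keeps the two password passages, drops the billing passage and the duplicate settings-page entry, and `buildContext` turns the survivors into a numbered block to prepend to the model's prompt.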

Pros & Cons

- Pros: TypeScript-first (not a Python port); “batteries-included” (everything from memory to GUI is in the box); open source; strong developer experience (DX) from the Gatsby team.

- Cons: Requires coding knowledge (not a low-code tool); focused heavily on the JavaScript/TypeScript ecosystem (less relevant for Python AI teams); newer ecosystem compared to LangChain.

Pricing

- Free (Open Source): The core framework and local Studio are free and open source (MIT).

- Mastra Cloud (Optional): A managed platform for deployment and hosted observability is available for teams who don’t want to self-host.

How Does It Compare?

- vs. LangChain: LangChain is Python-first and extremely broad, often feeling “glued together” when used in JavaScript. Mastra is TypeScript-native from the ground up, offering better type safety and a more cohesive experience for web developers.

- vs. LlamaIndex: LlamaIndex specializes deeply in data retrieval (RAG) and ingestion pipelines. Mastra is a broader application framework that includes RAG but focuses more on the end-to-end “app building” lifecycle (workflows, UI, evaluations).

- vs. Vercel AI SDK: The Vercel AI SDK is a lower-level toolkit: its Core library handles model calls, streaming, and tool calling, and its UI library provides hooks (such as `useChat`) for building chat interfaces. Mastra is a higher-level framework for agent logic and orchestration. They are often used together: Mastra handles the complex agent logic on the server, while the AI SDK's UI hooks stream the chat into the browser.

Final Thoughts

Mastra does for AI agents what Gatsby did for React websites: it bundles the best practices into an opinionated, developer-friendly framework. If you are a TypeScript developer tired of wrestling with Python-centric tools or hacking together disparate libraries to get a production-ready agent, Mastra feels like a breath of fresh air. It is the “Web Developer’s AI Framework.”