
Trails

The Debugger for AI Agents

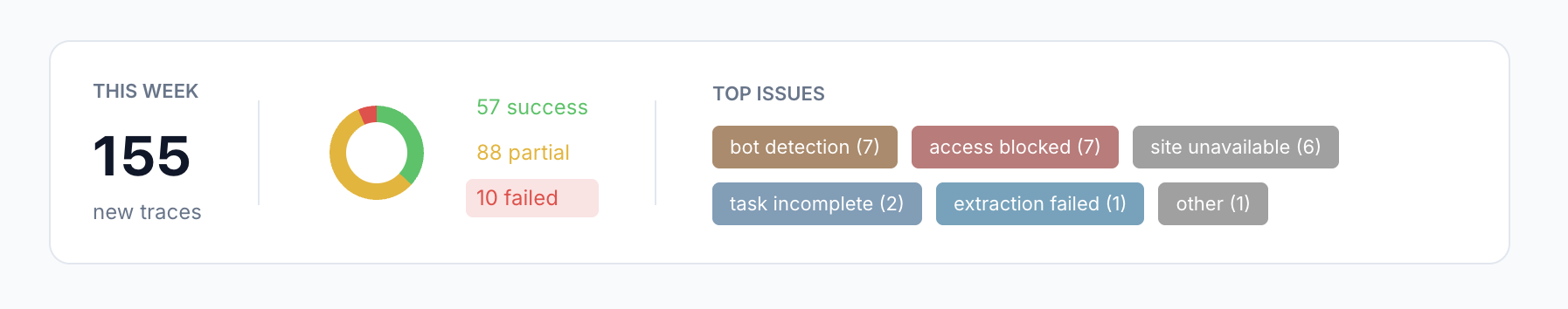

Trails is a specialized observability tool designed to make sense of the chaos in AI agent development. While most tools just list your logs, Trails automatically analyzes thousands of agent runs to surface aggregate insights and failure patterns. It is particularly optimized for agents using the browser-use library, allowing developers to visualize exactly where an agent got stuck, view the browser state at the moment of failure, and tag recurring bugs. Instead of reading JSON traces one by one, you can see the “big picture” of your agent’s reliability.

Key Features

- Aggregate Pattern Analysis: Scans thousands of runs to identify common failure modes (e.g., “Agent fails at checkout 40% of the time”).

- Visual Replay: Walk through every step of your agent’s execution with synchronized screenshots and reasoning logs.

- Browser-Use Native: Out-of-the-box parsing for browser-use agent formats, so setup is nearly instant for developers already using that framework.

- Error Categorization: Automatically tags and groups runs by error type, allowing you to filter noise and prioritize the most critical bugs.
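At its core, aggregate pattern analysis with error categorization means counting failures per step and grouping runs by error type. The Python sketch below illustrates that idea. It is not Trails’ implementation: the `Run` record, its fields, and the helpers `failure_rate_by_step` and `group_by_error` are hypothetical stand-ins, not Trails’ or browser-use’s actual log format or API.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    """Hypothetical, simplified record of one agent run (not a real Trails format)."""
    run_id: str
    steps: list[str]            # steps the agent reached, in order
    failed_step: Optional[str]  # step where the run failed; None if it succeeded
    error_type: Optional[str]   # hypothetical error label; None if it succeeded


def failure_rate_by_step(runs: list[Run]) -> dict[str, float]:
    """For each step, the share of runs that reached it and then failed there."""
    reached: Counter = Counter()
    failed: Counter = Counter()
    for run in runs:
        reached.update(set(run.steps))  # count each step at most once per run
        if run.failed_step is not None:
            failed[run.failed_step] += 1
    return {step: failed[step] / reached[step] for step in reached}


def group_by_error(runs: list[Run]) -> dict[str, list[str]]:
    """Group failed run IDs by their error label."""
    groups: defaultdict = defaultdict(list)
    for run in runs:
        if run.error_type is not None:
            groups[run.error_type].append(run.run_id)
    return dict(groups)


# Illustrative data: five shopping runs, two of which fail at checkout.
flow = ["search", "cart", "checkout"]
runs = [
    Run("r1", flow, None, None),
    Run("r2", flow, "checkout", "ElementNotFound"),
    Run("r3", flow, None, None),
    Run("r4", flow, "checkout", "Timeout"),
    Run("r5", flow, None, None),
]

for step, rate in sorted(failure_rate_by_step(runs).items()):
    print(f"{step}: fails in {rate:.0%} of runs that reach it")
print(group_by_error(runs))
```

The sketch divides by the number of runs that reached a step, not by all runs. That keeps a statement like “fails at checkout 40% of the time” meaningful even when many runs never get that far.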

User Workflow

- Ingest: Drop in your agent’s execution logs (or connect via SDK).

- Analyze: The dashboard highlights red flags, showing which steps commonly lead to failure.

- Debug: Drill down into a specific failed run to see the screenshot and the agent’s internal reasoning, then fix the issue in your code.

Use Cases

- Debugging Browser Agents: Visualizing why an autonomous web-browsing agent failed to click a specific button.

- Performance Monitoring: Tracking success rates across different versions of your agent’s prompts.

- Regression Testing: Ensuring that a “fix” for one bug didn’t break a different part of the agent’s workflow.
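Tracking success rates across prompt versions and catching regressions can be reduced to a per-step comparison between two versions. In the minimal Python sketch below, the `(prompt_version, step, succeeded)` tuples, the version labels, and the `tolerance` threshold are all illustrative assumptions, not anything Trails is documented to expose.

```python
from collections import defaultdict

# Hypothetical outcome record: (prompt_version, step_name, succeeded)
Outcome = tuple[str, str, bool]


def step_success_rates(outcomes: list[Outcome], version: str) -> dict[str, float]:
    """Per-step success rate for one prompt version."""
    totals: dict = defaultdict(int)
    passes: dict = defaultdict(int)
    for v, step, ok in outcomes:
        if v != version:
            continue
        totals[step] += 1
        if ok:
            passes[step] += 1
    return {step: passes[step] / totals[step] for step in totals}


def find_regressions(
    outcomes: list[Outcome], baseline: str, candidate: str, tolerance: float = 0.05
) -> dict[str, tuple[float, float]]:
    """Steps whose success rate dropped by more than `tolerance` between versions."""
    before = step_success_rates(outcomes, baseline)
    after = step_success_rates(outcomes, candidate)
    regressions = {}
    for step, old_rate in before.items():
        new_rate = after.get(step)  # None if the candidate never reached this step
        if new_rate is not None and new_rate < old_rate - tolerance:
            regressions[step] = (old_rate, new_rate)
    return regressions


# Illustrative data: v2 fixes checkout but breaks login.
outcomes: list[Outcome] = (
    [("v1", "login", True)] * 4
    + [("v1", "checkout", ok) for ok in (True, False, True, False)]
    + [("v2", "login", ok) for ok in (True, False, True, False)]
    + [("v2", "checkout", True)] * 4
)

print(step_success_rates(outcomes, "v1"))
print(step_success_rates(outcomes, "v2"))
print(find_regressions(outcomes, "v1", "v2"))
```

In this example the new prompt raises checkout from 50% to 100% but drops login from 100% to 50%. That side effect is exactly what regression testing is meant to surface. The tolerance keeps small, noisy fluctuations from being flagged. A fuller version might also treat a step the candidate never reaches as a regression.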

Pros & Cons

- Pros: Specialized for the unique pain points of agentic workflows (loops, browser state); much faster than digging through raw text logs; free and lightweight.

- Cons: Very early stage (beta); primarily focused on the browser-use ecosystem (less general-purpose than LangSmith); access is gated behind a “Get in Touch” request.

Pricing

- Early Access: Currently free during the beta; access requires contacting the team.

How Does It Compare?

vs. LangSmith

LangSmith is the enterprise standard for general LLM tracing, and it is excellent for inspecting “Input vs. Output.” However, Trails is better suited to agentic loops. If you need to debug a 50-step browser automation agent whose page state changes at every step, Trails’ visual replay is more intuitive than LangSmith’s text-heavy tree view.

vs. Arize Phoenix

Phoenix is a powerful open-source ML observability platform that focuses heavily on evaluations and embeddings. Trails is more focused on the developer experience of debugging (specifically, “Why did my agent fail this task?”) than on high-level metric drift.

vs. AgentOps

AgentOps is a direct competitor that offers session replays and cost tracking for agents. Trails distinguishes itself through deep integration with the browser automation workflow, displaying the state of the DOM/browser alongside the logs, which is critical for web-navigating agents.

Final Thoughts

Trails addresses the “Black Box” problem of autonomous agents. When an agent runs for 20 minutes and fails, finding the error is usually a nightmare. By visualizing the “trail” of thought and action, this tool turns hours of log-digging into minutes of fixing. It is an essential utility for any developer building agents that interact with the web.