Table of Contents

- 1. Executive Snapshot

- 2. Impact and Evidence

- 3. Technical Blueprint

- 4. Trust and Governance

- 5. Unique Capabilities

- 6. Adoption Pathways

- 7. Use Case Portfolio

- 8. Balanced Analysis

- 9. Transparent Pricing

- 10. Market Positioning

- 11. Leadership Profile

- 12. Community and Endorsements

- 13. Strategic Outlook

- Final Thoughts

1. Executive Snapshot

Core Offering Overview

Claude Opus 4.6 is the most advanced artificial intelligence model in Anthropic’s Claude family, released on February 5, 2026. It represents a significant capability leap from its predecessor, Opus 4.5 (released in November 2025), while maintaining identical base pricing at $5 per million input tokens and $25 per million output tokens. The model introduces three headline features: a 1 million token context window (in beta), native “Agent Teams” functionality enabling multiple specialized sub-agents to collaborate on complex tasks, and “Adaptive Thinking” that dynamically adjusts computational effort across four levels (Low, Medium, High, Max) based on task complexity.

Anthropic positions Opus 4.6 as the transition from “vibe coding” to “vibe working”—shifting AI utility beyond code generation into sustained, autonomous professional knowledge work across finance, legal, product management, and enterprise operations. The model is accessible through Claude.ai (consumer interface), Claude Code (developer CLI), the Claude API, AWS Bedrock, and Google Cloud Vertex AI.

Scott White, who leads product at Anthropic, told TechCrunch that Opus has evolved from a model highly capable in one particular domain—software development—into a program that is “really useful for a broader set” of knowledge workers, including product managers, financial analysts, and professionals across diverse industries.

Key Achievements and Milestones

- February 5, 2026: Official release of Claude Opus 4.6, Anthropic’s first major model launch of 2026.

- Terminal-Bench 2.0 Record: Achieved 65.4% success rate, surpassing GPT-5.2 (64.7%) to become the top-performing model for agentic coding, system administration, and terminal task completion.

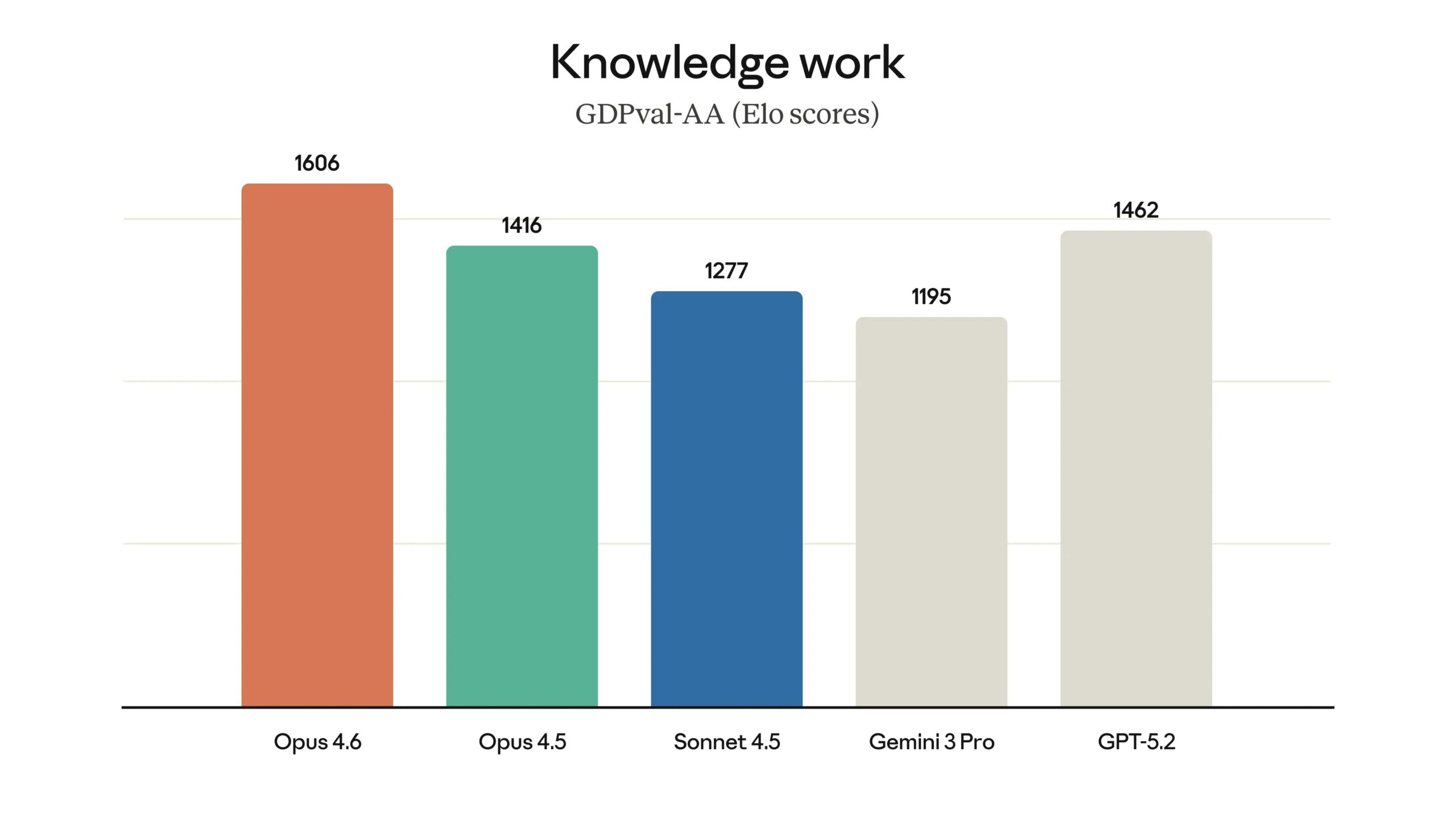

- GDPval-AA Dominance: Scored 1,606 Elo on the GDPval-AA benchmark (economically valuable knowledge work across finance, legal, and professional domains), outperforming GPT-5.2 by 144 Elo points and its own predecessor Opus 4.5 by 190 Elo points.

- Humanity’s Last Exam: Currently holds the leading position on this complex multidisciplinary reasoning test.

- Finance Agent Benchmark: Ranks number one among all frontier models on assessments of essential financial analyst tasks.

- 128K Output Tokens: Doubled the maximum output from the previous 64K limit, enabling generation of entire code modules and comprehensive analyses in a single pass.

- PowerPoint Integration: Claude is now directly accessible as a side panel within Microsoft PowerPoint, allowing presentations to be crafted within the application with real-time AI assistance—a step up from the previous workflow that required file transfers between Claude and PowerPoint.

Adoption Statistics

Anthropic reached $1 billion in annualized revenue as of December 2024, representing a tenfold increase year-over-year. Enterprise clients constitute approximately 80% of the company’s customer base, as confirmed by CEO Dario Amodei in a discussion with CNBC. The company’s valuation has grown dramatically, reaching $61.5 billion in early 2025, with a tender offer in February 2026 suggesting a valuation approaching $350 billion. Opus 4.6 was deployed simultaneously across Claude.ai, the Claude API, AWS Bedrock, and Google Cloud Vertex AI at launch, ensuring immediate global enterprise availability.

The model’s primary adoption driver is Claude Code, Anthropic’s command-line developer tool, which has attracted users beyond professional software engineers—including product managers, financial analysts, and other knowledge workers who use it as a general-purpose task engine.

2. Impact and Evidence

Client Success Stories

While Anthropic has not published named case studies specifically for Opus 4.6 (given its recency), the model’s predecessor Opus 4.5 and the broader Claude platform serve enterprise customers across multiple sectors:

- Software development teams: Companies use Claude Code for autonomous code refactoring, debugging, and test generation across large codebases. Opus 4.6’s improved agentic coding capabilities extend Claude Code’s ability to handle multi-file operations and sustained development tasks.

- Financial services: The model’s number one ranking on the Finance Agent benchmark validates its applicability for financial analysis, risk assessment, and regulatory document processing. The 1 million token context window enables ingestion of entire fiscal year datasets in a single session.

- Legal technology: Law firms leverage the expanded context window to review extensive case archives, cross-reference testimony, and synthesize findings across thousands of pages.

- Knowledge workers broadly: CNBC reporting confirms that Anthropic has observed growing adoption among non-engineers who use Claude Code as a versatile task execution engine, reflecting the “vibe working” positioning.

Performance Metrics and Benchmarks

Opus 4.6 achieves state-of-the-art results across multiple critical evaluations:

| Benchmark | Opus 4.6 Score | GPT-5.2 Score | Opus 4.5 Score | Significance |
|---|---|---|---|---|
| Terminal-Bench 2.0 | 65.4% | 64.7% | n/a | Premier agentic coding and terminal task benchmark |
| GDPval-AA | 1,606 Elo | 1,462 Elo | 1,416 Elo | Economically valuable professional knowledge work |
| Humanity’s Last Exam | Leading | n/a | n/a | Complex multidisciplinary reasoning |
| Finance Agent | #1 rank | n/a | n/a | Financial analyst task completion |

The Terminal-Bench 2.0 benchmark measures real-world terminal task completion across coding, system administration, and file manipulation—the tasks developers actually perform in their working environments. GDPval-AA assesses performance on tasks with direct economic value across finance, legal, and other professional domains.
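To give the Elo gaps some intuition, the sketch below converts a rating difference into an expected head-to-head score. It assumes GDPval-AA ratings follow the standard Elo logistic model with a 400-point scale, which the source does not state; the helper name `expected_score` is illustrative.

```python
def expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


# Ratings quoted in the benchmark table above.
OPUS_4_6, GPT_5_2, OPUS_4_5 = 1606, 1462, 1416

vs_gpt = expected_score(OPUS_4_6, GPT_5_2)    # 144-point gap
vs_prev = expected_score(OPUS_4_6, OPUS_4_5)  # 190-point gap
print(f"vs GPT-5.2: {vs_gpt:.2f}, vs Opus 4.5: {vs_prev:.2f}")
```

Under that assumption, a 144-point lead corresponds to an expected score of roughly 0.70 and a 190-point lead to roughly 0.75, so the gaps are meaningful but far from a guaranteed win on any single task.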

Third-Party Validations

- CNBC: Dedicated coverage characterizing the release as marking the transition from “vibe coding” to “vibe working,” with direct quotes from Anthropic leadership.

- TechCrunch: Published analysis of the Agent Teams feature and Opus 4.6’s broadened applicability beyond software engineering.

- Laravel News: Highlighted the Adaptive Thinking feature, 128K output tokens, and the new Compaction API as significant developer-facing improvements.

- Artificial Analysis: Published detailed benchmarking analysis confirming Opus 4.6’s pricing parity with Opus 4.5 while delivering substantially greater capability.

- Multiple independent reviewers: YouTube creators, Zenn, Qiita, and technical blog communities published extensive evaluations corroborating Anthropic’s benchmark claims.

3. Technical Blueprint

System Architecture Overview

Claude Opus 4.6 introduces three architectural innovations:

Adaptive Thinking: Unlike previous models with binary “thinking on/off” toggles, Opus 4.6 evaluates the complexity of each prompt and assigns one of four computational effort levels—Low, Medium, High, or Max. This allows the model to conserve tokens and reduce latency on simple requests while deploying deep reasoning chains for complex problems. The effort parameter is now generally available (GA) and no longer requires a beta header.

Agent Teams: A hierarchical multi-agent system where a “Team Lead” session decomposes complex tasks and spawns independent “Teammate” sessions. Each teammate operates with its own context window and specialized role, executing sub-tasks in parallel. Results are synthesized by the Team Lead into a coherent output. This productizes the multi-agent orchestration pattern that developers previously had to build from scratch using external frameworks.
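The Team Lead and Teammate pattern described above can be sketched in plain Python. This is a toy illustration of the decompose, run in parallel, and synthesize flow, not Anthropic's implementation: `decompose`, `run_teammate`, and `team_lead` are hypothetical names, and the teammate is a stub where a real system would call a model with its own context.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class SubTask:
    role: str
    instructions: str


def decompose(task: str) -> list[SubTask]:
    """Hypothetical Team Lead step: split a task into role-specific sub-tasks."""
    roles = ["researcher", "implementer", "reviewer"]
    return [SubTask(role, f"As {role}: {task}") for role in roles]


def run_teammate(sub: SubTask) -> str:
    """Stand-in for a Teammate session; a real one would call a model here."""
    return f"[{sub.role}] done: {sub.instructions}"


def team_lead(task: str) -> str:
    """Fan sub-tasks out to parallel workers, then merge their results."""
    subtasks = decompose(task)
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:
        results = list(pool.map(run_teammate, subtasks))  # keeps sub-task order
    return "\n".join(results)  # synthesis step, here a simple join


report = team_lead("audit the billing module")
print(report)
```

Each worker sees only its own `SubTask`, which mirrors the idea of separate context windows per teammate.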

Context Compaction API (Beta): For long-running conversations that approach the context window limit, Compaction summarizes and compresses conversation history, allowing the model to maintain coherence over extended interactions without losing critical context. This is essential for sustaining multi-hour autonomous workflows.
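The idea behind compaction can be illustrated client-side with a minimal sketch. It does not call or reproduce the Compaction API; `estimate_tokens`, `summarize`, and `compact` are hypothetical helpers, the four-characters-per-token estimate is a rough assumption, and the summarizer is a placeholder for a model call.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate (about 4 characters per token); not a real tokenizer."""
    return max(1, len(text) // 4)


def summarize(turns: list[str]) -> str:
    """Placeholder summarizer; a real system would ask a model for a summary."""
    return f"[summary of {len(turns)} earlier turns]"


def compact(history: list[str], budget: int, keep_recent: int = 2) -> list[str]:
    """Replace older turns with one summary once the history exceeds the budget."""
    total = sum(estimate_tokens(turn) for turn in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


history = ["x" * 400, "y" * 400, "z" * 400, "latest question"]
compacted = compact(history, budget=200)
print(compacted[0], len(compacted))
```

The most recent turns are kept verbatim because they usually carry the instructions the next response depends on; only older material is compressed.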

API and SDK Integrations

Key API specifications for Opus 4.6:

- Model ID: `claude-opus-4-6`

- Context Window: 200K tokens (standard), 1M tokens (beta, requires the `anthropic-beta: context-1m-2026-02-05` header)

- Maximum Output: 128,000 tokens (SDKs require streaming for large `max_tokens` values to avoid HTTP timeouts)

- Effort Parameter (GA): `thinking` parameter with four levels (Low, Medium, High, Max), combinable with Adaptive Thinking for cost-quality tradeoffs

- Fine-Grained Tool Streaming (GA): Now generally available on all models and platforms without a beta header

- Inference Geography: New `inference_geo` parameter allows specification of US-only inference at 1.1x pricing for data residency compliance

- Prefilling: Removed for Opus 4.6 (returns 400 error); supported in Opus 4.5

- Availability: Claude.ai, Claude API, AWS Bedrock, Google Cloud Vertex AI
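A request that combines these settings might be assembled as in the sketch below. It only builds dictionaries and sends nothing. The model ID, beta header, `max_tokens`, and `inference_geo` names come from the list above; the nested shape of the `thinking` value and the `build_request` helper are assumptions for illustration and should be checked against the official API reference before use.

```python
import json

VALID_EFFORT = {"low", "medium", "high", "max"}
MAX_OUTPUT_TOKENS = 128_000  # limit quoted in the list above


def build_request(prompt: str, *, effort: str = "medium", long_context: bool = False,
                  us_only: bool = False, max_tokens: int = 4096) -> tuple[dict, dict]:
    """Return (headers, body) for a hypothetical Opus 4.6 request."""
    if effort not in VALID_EFFORT:
        raise ValueError(f"effort must be one of {sorted(VALID_EFFORT)}")
    if not 0 < max_tokens <= MAX_OUTPUT_TOKENS:
        raise ValueError("max_tokens must be between 1 and 128,000")

    headers = {"content-type": "application/json"}
    if long_context:
        headers["anthropic-beta"] = "context-1m-2026-02-05"  # 1M context beta

    body = {
        "model": "claude-opus-4-6",
        "max_tokens": max_tokens,
        "stream": True,  # the source notes SDKs require streaming for large outputs
        "messages": [{"role": "user", "content": prompt}],
        "thinking": {"effort": effort},  # ASSUMED shape, not confirmed by the source
    }
    if us_only:
        body["inference_geo"] = "us"  # 1.1x pricing applies per the source
    return headers, body


headers, body = build_request("Summarize this filing.", effort="max",
                              long_context=True, us_only=True, max_tokens=128_000)
print(json.dumps(body, indent=2))
```

Note that the message list ends with a user turn; a trailing assistant turn would be a prefill, which the list above says Opus 4.6 rejects with a 400 error.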

Scalability and Reliability Data

The model supports enterprise-scale deployment through multiple vectors:

- Batch Processing API: 50% cost reduction ($2.50/$12.50 per million tokens) for asynchronous workloads, ideal for nightly batch processing of code reviews, test generation, and document analysis.

- Prompt Caching: Up to 90% cost savings for repeated prompt patterns, critical for high-volume enterprise deployments.

- Context Compaction: Prevents degradation of response quality during extremely long sessions—a common failure point in competing models during sustained agentic workflows.

- 128K Output Tokens: Enables generation of complete code modules, comprehensive reports, and detailed analyses without splitting into multiple requests.

Anthropic has not published formal SLA uptime percentages for Opus 4.6 specifically. Enterprise customers access guaranteed throughput and lower latency through priority tier arrangements.

4. Trust and Governance

Security Certifications

Anthropic maintains a comprehensive compliance portfolio through its Trust Center:

- SOC 2 Type II: Verified controls for security, availability, and confidentiality of customer data.

- ISO 27001: Certified Information Security Management System.

- ISO 42001: Certified AI Management System—one of the first major AI companies to achieve this standard.

- HIPAA Eligibility: HIPAA-ready for enterprise customers requiring processing of protected health information (PHI) under a Business Associate Agreement (BAA).

- CSA STAR Level 1: Cloud Security Alliance self-assessment for cloud security.

Data Privacy Measures

Anthropic enforces strict data handling policies:

- Non-training policy: API inputs and outputs are not used to train future models. Enterprise customer data is segregated from training pipelines.

- Data residency controls: The new `inference_geo` parameter in Opus 4.6 allows per-request specification of US-only processing at 1.1x pricing, addressing data sovereignty requirements.

- Agent Teams compartmentalization: Sub-agents in the Agent Teams architecture access only the specific context slices necessary for their assigned sub-tasks, minimizing data exposure during complex workflows.

- Trust Center: Publicly accessible at trust.anthropic.com with detailed documentation of security practices, compliance certifications, and data handling policies.

Regulatory Compliance Details

Opus 4.6 aligns with emerging global AI regulatory frameworks:

- EU AI Act: Anthropic publishes system cards and model documentation that address transparency requirements for general-purpose AI systems.

- Lowest over-refusal rates: Anthropic describes Opus 4.6 as having the lowest over-refusal rates among recent Claude models—meaning it more accurately distinguishes between harmful requests (which it refuses) and legitimate professional queries (which it handles appropriately). This addresses a common enterprise complaint about AI models being overly cautious.

- Usage policies: Anthropic maintains detailed acceptable use policies and safety evaluations, with the model undergoing extensive red-teaming before release.

5. Unique Capabilities

- Adaptive Thinking: The signature innovation of Opus 4.6. Rather than applying uniform computational effort to every prompt, the model dynamically assesses task complexity and allocates one of four effort levels. Simple factual questions receive “Low” effort (fast, cheap), while complex multi-step reasoning problems trigger “High” or “Max” effort (thorough, more expensive). This creates a built-in cost-intelligence optimizer that no competitor currently offers at the model architecture level.

- Agent Teams: A native multi-agent orchestration system where a Team Lead decomposes complex tasks into sub-tasks, spawns independent Teammate sessions to execute them in parallel, and synthesizes results. This eliminates the need for external orchestration frameworks (LangGraph, CrewAI, AutoGen) for many use cases, providing a first-party multi-agent solution integrated directly into the model.

- 1 Million Token Context Window (Beta): The largest context window available on an Opus-class model. At 1M tokens, developers can load entire codebases, legal archives, or financial datasets into a single conversation. Premium pricing ($10/$37.50 per million tokens) applies for requests exceeding 200K input tokens.

- 128K Output Tokens: Doubled from the previous 64K limit. This enables the model to generate complete code modules, entire documentation sets, or comprehensive analytical reports without requiring multiple sequential requests, a significant productivity improvement for batch generation workflows.

- Context Compaction (Beta): A new API capability that summarizes and compresses conversation history for long-running tasks. This prevents the “context cliff” problem where models lose coherence as conversations approach the context window limit, enabling truly sustained multi-hour autonomous workflows.

- PowerPoint Side Panel Integration: Claude is now embedded directly within Microsoft PowerPoint as an interactive side panel, allowing users to craft and edit presentations with real-time AI assistance without switching between applications. This represents Anthropic’s expansion beyond developer tools into mainstream productivity software.

6. Adoption Pathways

Integration Workflow

Migrating to Opus 4.6 from previous Claude models requires minimal technical effort:

- Update the `model` parameter in API calls from `claude-opus-4-5` to `claude-opus-4-6`.

- Optionally add the `thinking` parameter to control effort levels (Low, Medium, High, Max) for cost optimization.

- For 1M context, add the beta header `anthropic-beta: context-1m-2026-02-05`.

- For US-only inference, add `inference_geo: "us"` (1.1x pricing applies).

- For large outputs, update `max_tokens` to leverage the new 128K limit (streaming required in SDKs).

- Agent Teams are accessible through Claude Code and the API’s multi-agent orchestration endpoints.

For existing Opus 4.5 users managing costs within budget, the upgrade delivers pure capability improvements at identical pricing—no additional budget allocation required.
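The core of the migration steps above can be sketched as a small transformation on a request body. `migrate_request` is a hypothetical helper, not part of any SDK. It swaps the model ID and drops a trailing assistant message, since that is how a prefill is expressed in a message list and the source states Opus 4.6 rejects prefilling with a 400 error.

```python
import copy


def migrate_request(old_body: dict) -> dict:
    """Return a copy of an Opus 4.5 request body adjusted for Opus 4.6."""
    body = copy.deepcopy(old_body)  # leave the caller's dict untouched
    body["model"] = "claude-opus-4-6"

    messages = body.get("messages", [])
    # A trailing assistant turn is a prefill; remove it to avoid a 400 error.
    if messages and messages[-1].get("role") == "assistant":
        messages.pop()
    return body


old = {
    "model": "claude-opus-4-5",
    "max_tokens": 1024,
    "messages": [
        {"role": "user", "content": "List three risks as JSON."},
        {"role": "assistant", "content": "{"},  # prefill used with Opus 4.5
    ],
}
new = migrate_request(old)
print(new["model"], len(new["messages"]))
```

Dropping the prefill changes how output is constrained, so any formatting it enforced would need to move into the system prompt or a structured-output instruction.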

Customization Options

- Effort level selection: Manual control over Low/Medium/High/Max thinking effort for precise cost-quality tradeoffs.

- Context window sizing: Standard 200K or beta 1M, with pricing differentiation allowing budget-conscious users to stay within the standard tier.

- Batch processing: 50% cost reduction for asynchronous workloads.

- Prompt caching: Up to 90% savings for repeated prompt patterns.

- Data residency: Per-request US-only inference for compliance requirements.

- Agent Teams configuration: Programmatic control over Team Lead behavior, teammate spawning, and task decomposition strategies.

Onboarding and Support Channels

- Claude.ai: Browser-based interface with Pro ($20/month) and Max ($100/month) subscription tiers.

- Claude API: Direct programmatic access with comprehensive documentation.

- Claude Code: Command-line interface for developer workflows.

- Anthropic Cookbooks: Open-source code repositories with example implementations and integration patterns.

- Developer Discord: Community support and feature discussion.

- Enterprise support: White-glove onboarding, solution architecture guidance, and priority throughput for enterprise customers.

- AWS Bedrock and Google Cloud Vertex AI: Enterprise cloud marketplace deployment with existing billing and access management.

7. Use Case Portfolio

Enterprise Implementations

Opus 4.6 targets several high-value enterprise verticals:

- Software engineering: Agentic coding via Claude Code for autonomous multi-file refactoring, test generation, debugging, and code review. The Terminal-Bench 2.0 leadership validates this as the model’s strongest domain.

- Financial services: Number one rank on the Finance Agent benchmark. The 1M context window enables processing of complete fiscal year datasets, audit trails, and regulatory filings in a single session.

- Legal technology: Extended context and improved reasoning support comprehensive case review, contract analysis, and regulatory compliance assessment across thousands of pages.

- Product management: Agent Teams enable decomposition of product strategy tasks—competitive analysis, feature specification, user story generation, and roadmap planning—into parallel sub-tasks.

- Enterprise productivity: PowerPoint integration signals expansion into mainstream office productivity, targeting the enterprise clients that make up roughly 80% of Anthropic’s customer base.

Academic and Research Deployments

- Literature review acceleration: The 1M context window allows researchers to load hundreds of papers simultaneously and synthesize findings across the full corpus.

- Cybersecurity research: In internal testing, the model autonomously identified over 500 zero-day vulnerabilities, demonstrating capabilities relevant to academic security research.

- AI safety research: Anthropic’s public commitment to interpretability research (led by Chris Olah) and Constitutional AI provides a research framework that academic institutions can build upon.

ROI Assessments

Formal third-party ROI studies have not been published for Opus 4.6 specifically. However, the economic case is supported by several data points:

- Pricing parity: Identical base pricing to Opus 4.5 ($5/$25) with substantially greater capability means existing users receive a free capability upgrade.

- Adaptive Thinking cost savings: The ability to route simple queries to “Low” effort (fewer tokens consumed) while reserving “High/Max” for complex tasks can reduce API costs by an estimated 30–40% for mixed workloads.

- Agent Teams parallelism: Independent reports indicate 2–3x reduction in turnaround time for complex multi-step tasks compared to sequential single-agent execution.

- Batch API discount: 50% cost reduction for asynchronous processing enables significant savings for nightly batch operations.

8. Balanced Analysis

Strengths with Evidential Support

- Benchmark leadership: Top positions on Terminal-Bench 2.0 (65.4%), GDPval-AA (1,606 Elo), Humanity’s Last Exam, and Finance Agent provide concrete, measurable evidence of superiority over GPT-5.2 and Gemini 3 Pro in agentic coding and professional knowledge work.

- Price-performance improvement: Delivering substantially more capability (1M context, 128K output, Agent Teams, Adaptive Thinking) at identical pricing to Opus 4.5 represents exceptional value for existing customers.

- Enterprise compliance maturity: SOC 2 Type II, ISO 27001, ISO 42001, HIPAA eligibility, and the new data residency controls address the compliance requirements that block adoption in regulated industries.

- Architectural innovation: Adaptive Thinking’s four-tier effort system is a genuine first-in-class feature that no competitor currently replicates, enabling built-in cost optimization without sacrificing capability on complex tasks.

- Multi-platform availability: Simultaneous launch across Claude.ai, the API, AWS Bedrock, and Google Cloud Vertex AI ensures enterprise customers can adopt through their existing cloud procurement channels.

- Massive financial backing: Approximately $12 billion+ in total investment from Amazon ($8B), Google ($3B+), and other investors provides Anthropic with the compute resources and financial runway to sustain frontier model development.

Limitations and Mitigation Strategies

- Premium context pricing: Requests exceeding 200K input tokens are billed at $10/$37.50 per million tokens (2x the standard input rate and 1.5x the standard output rate), making sustained large-context usage expensive. Mitigation: Use Context Compaction to summarize conversation history before crossing the 200K threshold; leverage prompt caching (up to 90% savings) for repeated context patterns.

- Latency in Max mode: The “Max” effort Adaptive Thinking setting generates extensive internal reasoning chains, resulting in significantly higher latency. Mitigation: Reserve Max effort for asynchronous background tasks; use Low or Medium for interactive, latency-sensitive applications.

- Higher base pricing than competitors: At $5/$25 per million tokens, Opus 4.6 costs more per token than Gemini 3 Pro (~$3.50/$10.50) and more per output token than GPT-5.2 (~$5/$15). Mitigation: The Adaptive Thinking effort system allows cost reduction on simple tasks; Batch API provides 50% savings for asynchronous workloads.

- No video understanding: While enhanced image and document analysis is supported, video comprehension is not yet available (expected in a future release). Mitigation: Pre-process video into transcripts and key frame images for analysis.

- Agent Teams maturity: As a newly launched feature, Agent Teams may exhibit edge cases and reliability issues in complex production workflows. Mitigation: Use Agent Teams for well-defined, decomposable tasks and maintain human oversight for critical workflows during the early adoption period.

- Prefilling removed: Opus 4.6 removes support for response prefilling (returns a 400 error), which some developers relied on for constrained generation. Mitigation: Use system prompts and structured output formatting as alternatives; prefilling remains available on Opus 4.5 if needed.
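The latency mitigation in the list above (reserve Max for background work, use Low or Medium for interactive paths) amounts to a small routing rule. The sketch below is one illustrative policy; the `Effort` enum and `choose_effort` function are hypothetical, and using High for simple background jobs is an assumption rather than guidance from the source.

```python
from enum import Enum


class Effort(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    MAX = "max"


def choose_effort(interactive: bool, complex_task: bool) -> Effort:
    """Illustrative policy that keeps Max effort off the interactive path."""
    if interactive:
        return Effort.MEDIUM if complex_task else Effort.LOW
    # Background work can tolerate the extra latency of deeper reasoning.
    return Effort.MAX if complex_task else Effort.HIGH


print(choose_effort(interactive=True, complex_task=True).value)
print(choose_effort(interactive=False, complex_task=True).value)
```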

9. Transparent Pricing

Plan Tiers and Cost Breakdown

API Pricing:

| Tier | Input Cost (per M tokens) | Output Cost (per M tokens) | Conditions |
|---|---|---|---|
| Standard | $5.00 | $25.00 | Context up to 200K tokens |
| Long Context (Beta) | $10.00 | $37.50 | Context exceeding 200K tokens (all tokens charged at premium rate) |
| US-Only Inference | $5.50 | $27.50 | 1.1x standard pricing for data residency |
| Batch Processing | $2.50 | $12.50 | 50% discount, asynchronous processing |
| Prompt Caching | Up to 90% savings | n/a | For repeated prompt patterns |

Consumer Subscriptions:

| Plan | Monthly Cost | Features |
|---|---|---|
| Free | $0 | Limited access to Claude models |
| Pro | $20/month | Full Opus 4.6 access, higher usage limits |
| Max | $100/month | Maximum usage limits, priority access, advanced features |

Total Cost of Ownership Projections

For a standard enterprise coding workflow processing 1 billion input tokens and 1 billion output tokens annually:

- Naive approach (all standard pricing): $5,000 input + $25,000 output = $30,000/year

- Optimized approach (Batch API for nightly jobs, Low effort for simple queries, prompt caching): Estimated $12,000–$18,000/year (40–60% savings through feature optimization)

- High-context workflows (frequent 1M token usage): Premium pricing applies, potentially doubling costs for context-heavy applications. Context Compaction mitigates this by reducing unnecessary context accumulation.

The pricing parity with Opus 4.5 means that the upgrade itself carries zero incremental cost for existing users—all new capabilities are delivered at the same price point.
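The projections above can be reproduced with a small calculator built from the API pricing table. The rates are taken from that table; the `cost_usd` helper and the tier keys are illustrative names. The $30,000 figure corresponds to 1 billion input tokens plus 1 billion output tokens at standard rates.

```python
# USD per million tokens as (input, output), from the API pricing table.
RATES = {
    "standard": (5.00, 25.00),
    "long_context": (10.00, 37.50),
    "us_only": (5.50, 27.50),
    "batch": (2.50, 12.50),
}


def cost_usd(input_tokens: int, output_tokens: int, tier: str = "standard") -> float:
    """Cost of a workload at the given tier's per-million-token rates."""
    in_rate, out_rate = RATES[tier]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


BILLION = 1_000_000_000
naive = cost_usd(BILLION, BILLION)               # all standard pricing
all_batch = cost_usd(BILLION, BILLION, "batch")  # everything moved to batch

# One large request: 300K input tokens and 10K output tokens.
big_standard = cost_usd(300_000, 10_000)
big_premium = cost_usd(300_000, 10_000, "long_context")

print(naive, all_batch, big_standard, big_premium)
```

Moving the whole workload to batch halves the bill to $15,000, which sits inside the $12,000 to $18,000 optimized range. The single 300K-token request costs $1.75 at standard rates and $3.375 at long-context rates, a little under double because the output rate rises by 1.5x rather than 2x.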

10. Market Positioning

Competitor Comparison Table

| Feature | Claude Opus 4.6 | GPT-5.2 (OpenAI) | Gemini 3 Pro (Google) |
|---|---|---|---|
| Context Window | 200K standard / 1M beta | ~128K–200K | Up to 2M |
| Max Output | 128K tokens | Varies by tier | Varies by tier |
| Standard Pricing (Input/Output) | $5 / $25 per M tokens | ~$5 / $15 per M tokens | ~$3.50 / $10.50 per M tokens |
| Terminal-Bench 2.0 | 65.4% (1st) | 64.7% (2nd) | ~60% |
| GDPval-AA Elo | 1,606 (1st) | 1,462 | 1,195 |
| Multi-Agent | Native Agent Teams | Single agent focus | Integrated app ecosystem |
| Effort Control | Adaptive Thinking (4 levels) | Chain-of-thought modes | Deep Think mode |
| Data Residency | US-only inference option | Available | Available |
| Compliance | SOC 2 II, ISO 27001, ISO 42001, HIPAA | SOC 2 II, various | SOC 2, ISO, various |
| Batch Discount | 50% | Varies | Varies |

Unique Differentiators

Opus 4.6 differentiates through three primary axes:

- Adaptive Thinking: The only frontier model with a four-tier, built-in effort control system that automatically optimizes the cost-intelligence tradeoff at the architectural level. Competitors offer binary thinking modes but lack granular self-regulation.

- Agent Teams (native): While competitors require external orchestration frameworks (LangChain, CrewAI, AutoGen) for multi-agent workflows, Opus 4.6 productizes this pattern natively, reducing integration complexity and improving reliability.

- Reasoning quality per token: Despite higher per-token pricing than Gemini 3 Pro, Opus 4.6 delivers stronger reasoning per token, as evidenced by its 144 Elo point lead over GPT-5.2 on GDPval-AA and its Terminal-Bench 2.0 leadership. Organizations that optimize for output quality rather than token cost typically find Opus 4.6 more cost-effective on a per-task basis.

11. Leadership Profile

Dario Amodei — CEO and Co-Founder

Dario Amodei co-founded Anthropic in 2021 after serving as VP of Research at OpenAI, where he led the team that developed GPT-2 and GPT-3. His academic background includes a Ph.D. in computational neuroscience from Princeton University. Named to Time Magazine’s 100 Most Influential People list, Amodei has steered Anthropic’s focus on developing “steerable” AI systems with rigorous safety properties. He co-authored the influential “Scaling Laws for Neural Language Models” paper, which correctly predicted the performance improvements seen in subsequent model generations. In a CNBC interview in January 2026, Amodei confirmed that enterprise clients constitute approximately 80% of Anthropic’s revenue base.

Daniela Amodei — President and Co-Founder

Daniela Amodei serves as President of Anthropic, overseeing business operations, people, and go-to-market strategy. Prior to Anthropic, she held senior roles at OpenAI, including VP of People and VP of Operations. Her leadership has been instrumental in scaling Anthropic from a research lab to a company generating over $1 billion in annualized revenue while maintaining its safety-first culture.

Chris Olah — Lead Researcher, Mechanistic Interpretability

Chris Olah is one of the world’s foremost researchers in neural network interpretability. His work at Anthropic on mechanistic interpretability—visualizing and understanding how large language models process information internally—provides the scientific foundation for the transparency and safety claims that distinguish Claude from competitors. His research has been extensively cited across the AI safety community.

Scott White — Product Lead

Scott White leads product development for Claude and provided the key public commentary around the Opus 4.6 launch. In interviews with TechCrunch and CNBC, White articulated the “vibe working” thesis—positioning Opus 4.6 as a tool for sustained professional work rather than short interactive chats—and described the model’s evolution toward serving a broader set of knowledge workers beyond software engineers.

Patent Filings and Publications

Anthropic’s intellectual contributions are primarily expressed through academic publications and open-source research:

- Constitutional AI: The foundational paper describing Anthropic’s approach to training AI systems using a set of principles (a “constitution”) rather than relying exclusively on human feedback, enabling more predictable and transparent AI behavior.

- Scaling Laws for Neural Language Models: Co-authored by Dario Amodei, this paper established the mathematical relationships between model size, training data, and performance that guide frontier model development across the industry.

- Mechanistic Interpretability: Chris Olah’s ongoing body of work on understanding the internal representations and circuits of large language models, providing a scientific basis for AI safety and transparency.

- Numerous safety and alignment publications: Anthropic regularly publishes research on red-teaming, model evaluation, and AI safety methodology through its research blog and academic venues.

12. Community and Endorsements

Industry Partnerships

- Amazon Web Services (AWS): Amazon has invested up to $8 billion in Anthropic, making AWS the “primary cloud and training partner.” Claude Opus 4.6 is deeply integrated into AWS Bedrock for enterprise distribution.

- Google Cloud: Google has invested over $3 billion in Anthropic and owns approximately 14% equity. Opus 4.6 is available through Google Cloud Vertex AI.

- Microsoft PowerPoint: Direct integration as a side panel within PowerPoint marks Anthropic’s expansion into the Microsoft productivity ecosystem.

- Sequoia Capital and GIC: Leading a $20 billion fundraising round in February 2026, with a tender offer suggesting a company valuation approaching $350 billion.

Media Mentions and Awards

- CNBC: Dedicated article on the “vibe working” paradigm shift, with direct quotes from CEO Dario Amodei and product lead Scott White.

- TechCrunch: In-depth coverage of Agent Teams and the broadening of Opus beyond software engineering.

- Time Magazine: Dario Amodei named to 100 Most Influential People list.

- Laravel News: Technical analysis of Adaptive Thinking, 128K output, and the Compaction API.

- Artificial Analysis: Independent benchmarking confirmation of performance claims.

- Multiple international outlets: Coverage in Japanese tech media (Qiita, Zenn, Nikkei), Economic Times, Digital Applied, and numerous AI-focused publications.

- Developer communities: Extensive analysis and discussion across Reddit, YouTube, Dev.to, and LinkedIn, with broadly positive reception focused on the benchmark leadership and pricing parity.

13. Strategic Outlook

Future Roadmap and Innovations

Based on observable trends, public statements, and product trajectory:

- Claude Cowork expansion: Anthropic is developing proactive collaboration features where Claude transitions from a reactive chatbot to an active teammate that monitors projects, identifies issues, and initiates tasks autonomously. Opus 4.6’s Agent Teams and PowerPoint integration are early manifestations of this vision.

- Video understanding: The next capability frontier. Opus 4.6 enhanced image and document analysis but does not yet support video comprehension. Industry expectations suggest video will be included in a near-term release.

- Context Compaction maturation: Currently in beta, the Compaction API is expected to graduate to general availability, making sustained 1M token workflows more cost-effective and reliable.

- Enterprise verticalization: Given the Finance Agent benchmark leadership and broadening beyond software engineering, expect specialized fine-tuning and tooling for specific enterprise verticals (financial services, legal, healthcare).

- Claude 5.0: While Anthropic chose the incremental “4.6” designation for this release, the next generational leap (presumably Claude 5.0) would represent a full architecture transition. The 4.6 naming signals that Anthropic views this as a major capability upgrade within the current architecture rather than a generational shift.

Market Trends and Recommendations

The AI model market in 2026 is defined by several key trends that Opus 4.6 addresses directly:

- Shift from chat to work: The market is moving from conversational AI toward autonomous task execution. Opus 4.6’s Agent Teams and sustained agentic capabilities position it at the leading edge of this transition.

- Cost-quality optimization: As AI usage scales, enterprises need granular control over the cost-intelligence tradeoff. Adaptive Thinking addresses this inside the model itself, adjusting effort to task complexity without external orchestration.

- Multi-agent maturation: The multi-agent pattern is becoming standard for complex enterprise workflows. Opus 4.6’s native Agent Teams reduce the need for external frameworks, simplifying deployment and improving reliability.

- Compliance as a differentiator: With AI regulation accelerating globally (EU AI Act, proposed US regulations), Anthropic’s SOC 2, ISO 27001, and ISO 42001 certifications and HIPAA eligibility, combined with the new data residency controls, provide a compliance advantage that can shorten enterprise procurement cycles.

- Revenue-driven competition: With $1 billion+ in annualized revenue and a valuation approaching $350 billion, Anthropic is now competing at the scale of major technology companies. Investment from Amazon, Google, and Sequoia helps secure sustained access to frontier compute resources.

Recommendations:

- For developers: Adopt Opus 4.6 for CI/CD pipelines, legacy code refactoring, and autonomous development workflows via Claude Code and Agent Teams. Leverage the Batch API for nightly processing to reduce costs by 50%.

- For enterprise decision-makers: Evaluate Opus 4.6’s data residency controls and compliance certifications against internal requirements. The combination of SOC 2 Type II, ISO 27001/42001, and HIPAA eligibility addresses the most common enterprise procurement barriers.

- For financial and legal teams: The 1M context window and GDPval-AA leadership make Opus 4.6 the strongest current option for reasoning over large document collections in economically valuable knowledge work.

- For cost-conscious organizations: Use Adaptive Thinking’s effort levels strategically (“Low” for routing and classification, “High” or “Max” for analysis and decision-making) to optimize the cost-quality frontier across mixed workloads.
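
The developer and cost-conscious recommendations above can be made concrete with a small sketch. It is illustrative only: the effort level names and the $5/$25 per-million-token prices come from this report, the 50% Batch API discount is the figure quoted in the developer recommendation, and everything else (the task categories, the "medium" default, the split of analysis to "high" and decision-making to "max", and the helper names pick_effort and job_cost_usd) is a hypothetical assumption, not part of any Anthropic API.

```python
# Hypothetical helpers for planning mixed workloads; not an Anthropic API.

# Effort names follow the four levels described in this report.
# The task-to-effort mapping itself is an assumption for illustration.
EFFORT_BY_TASK = {
    "routing": "low",
    "classification": "low",
    "analysis": "high",
    "decision_making": "max",
}

# Base prices quoted in this report (USD per million tokens).
INPUT_USD_PER_MTOK = 5.0
OUTPUT_USD_PER_MTOK = 25.0
# Batch discount as stated in the developer recommendation (50%).
BATCH_DISCOUNT = 0.5


def pick_effort(task_type: str, default: str = "medium") -> str:
    """Return the effort level for a task type, falling back to a default."""
    return EFFORT_BY_TASK.get(task_type, default)


def job_cost_usd(input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """Estimate the cost of a job at base prices, optionally batch-discounted."""
    cost = (input_tokens / 1_000_000) * INPUT_USD_PER_MTOK
    cost += (output_tokens / 1_000_000) * OUTPUT_USD_PER_MTOK
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


if __name__ == "__main__":
    # A hypothetical nightly job: 20M input tokens, 4M output tokens.
    print(pick_effort("classification"))
    print(job_cost_usd(20_000_000, 4_000_000))
    print(job_cost_usd(20_000_000, 4_000_000, batch=True))
```

Under these assumptions the hypothetical nightly job costs $200 at base prices and $100 when batched. The sketch does not model how effort levels change token consumption, since this report gives no figures for that.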

Final Thoughts

Claude Opus 4.6 represents a meaningful maturation of Anthropic’s model portfolio. By maintaining pricing parity with Opus 4.5 while delivering 1M token context, 128K output, Agent Teams, and Adaptive Thinking, Anthropic has executed one of the most capability-dense upgrades in frontier AI history without increasing costs—a rare combination that undercuts the common objection that cutting-edge AI is prohibitively expensive.

The model’s benchmark leadership tells a clear story: Opus 4.6 is the strongest available model for agentic coding (Terminal-Bench 2.0, 65.4%) and economically valuable professional knowledge work (GDPval-AA, 1,606 Elo). The 144 Elo point lead over GPT-5.2 on GDPval-AA is not incremental—it represents a significant gap in performance on the tasks that enterprise customers care about most.
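
To give a sense of scale for a 144-point gap, the sketch below converts an Elo difference into an expected head-to-head preference rate. It assumes GDPval-AA ratings follow the conventional logistic Elo formula with a 400-point scale; this report does not confirm the benchmark's exact rating method, so the result should be read as a rough guide. The function name is hypothetical.

```python
def elo_expected_score(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score of the higher-rated side under the standard Elo model.

    Assumes the conventional logistic form 1 / (1 + 10 ** (-gap / scale)).
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    # The 144-point lead cited above; prints roughly 0.70.
    print(round(elo_expected_score(144), 2))
```

Under that assumption, a 144-point lead corresponds to the higher-rated model being preferred in roughly 70% of pairwise comparisons.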

Adaptive Thinking is the standout architectural innovation. By building cost-intelligence optimization directly into the model rather than leaving it to external orchestration, Anthropic has addressed one of the most persistent friction points in enterprise AI deployment: the inability to balance quality and cost without complex prompt engineering or routing logic. This feature alone changes the economics of deploying frontier models at scale.

Opus 4.6 faces legitimate challenges on per-token cost relative to Gemini 3 Pro and competing OpenAI offerings. Organizations that optimize primarily for token throughput rather than output quality may find better economics elsewhere. The 1M context window’s premium pricing (2x standard) also limits its accessibility for sustained large-context usage, though Context Compaction and prompt caching provide meaningful mitigation paths.
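
The effect of the long-context premium can be sketched with simple arithmetic. The example assumes the 2x multiplier quoted above applies uniformly to both input and output tokens, which this report does not spell out; the function name and the request sizes are hypothetical, and the base prices are the $5/$25 per-million-token figures cited earlier in the report.

```python
def request_cost_usd(
    input_tokens: int,
    output_tokens: int,
    long_context: bool = False,
    premium: float = 2.0,  # assumed uniform multiplier, per the "2x standard" figure
) -> float:
    """Estimate one request's cost, optionally at the long-context premium."""
    base = (input_tokens / 1_000_000) * 5.0    # $5 per million input tokens
    base += (output_tokens / 1_000_000) * 25.0  # $25 per million output tokens
    return base * premium if long_context else base


if __name__ == "__main__":
    # Hypothetical request: 800K input tokens, 20K output tokens.
    print(request_cost_usd(800_000, 20_000))                     # base-rate figure
    print(request_cost_usd(800_000, 20_000, long_context=True))  # with premium
```

With these assumptions the hypothetical request comes to about $4.50 at base rates and about $9.00 with the premium, which is why repeated large-context calls add up quickly unless compaction or caching reduces the tokens billed.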

The broader strategic picture is equally compelling. Anthropic’s trajectory—from research lab to $1 billion in annualized revenue, backed by $12 billion+ from Amazon, Google, Sequoia, and others, with a valuation approaching $350 billion—positions the company as one of the three defining players in frontier AI alongside OpenAI and Google DeepMind. Opus 4.6’s combination of raw capability, enterprise compliance, and developer experience makes it the most viable candidate for organizations seeking to deploy AI for high-value professional work in 2026.

It is no longer just a chatbot. With Agent Teams and Adaptive Thinking, Claude Opus 4.6 is a scalable, intelligent workforce—one that adjusts its own effort and cost to match the task at hand.