Overview

Meet QwQ-32B, a 32-billion-parameter open-source large language model (LLM) developed by Alibaba’s Qwen team. It is designed for complex reasoning: it is trained with reinforcement learning and uses a ‘thinking mode’, in which it works through a problem step by step before answering, to tackle multi-step tasks. Let’s dive into what makes QwQ-32B a noteworthy addition to the AI toolkit.

Key Features

QwQ-32B boasts a compelling set of features that set it apart from other LLMs:

- 32B parameter LLM: A substantial model size allows for a deeper understanding of complex patterns and relationships in data, leading to improved performance.

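- Reinforcement learning fine-tuning: The model is fine-tuned with large-scale reinforcement learning. As described by the Qwen team, the rewards for math and coding come mainly from outcome checks (verifying the final answer, executing the generated code) rather than from human feedback (RLHF) alone, with a further reinforcement learning stage for general capabilities.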

- ‘Thinking mode’ for complex tasks: This innovative approach simulates a deliberation process, allowing the model to carefully consider each step in a multi-step task, resulting in more accurate outputs.

- Open-source under Apache 2.0: This permissive license allows for broad use, modification, and distribution, fostering collaboration and innovation within the AI community.

- Strong reasoning performance: QwQ-32B excels in tasks requiring logical deduction, inference, and problem-solving.

How It Works

QwQ-32B’s design centers on enhancing reasoning capabilities. The model applies scaled reinforcement learning to fine-tune its abilities, particularly on multi-step reasoning challenges; the Qwen team describes outcome-based rewards, such as checking final answers for math and executing generated code, rather than human feedback (RLHF) alone. A key element is its ‘thinking mode.’ In this mode the model writes out its intermediate reasoning before providing an answer. This deliberation step helps QwQ-32B navigate complex tasks and improves overall accuracy.
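
To make the ‘thinking mode’ concrete, here is a minimal sketch of how an application might separate the model’s reasoning from its final answer. It assumes the reasoning is wrapped in `<think>...</think>` tags and that the opening tag may be absent when the chat template has already emitted it; the function name `split_reasoning` and the sample response are hypothetical, not part of any official API.

```python
# Assumption: reasoning is delimited by <think>...</think>. The opening tag may be
# missing if the chat template already placed it in the prompt.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"


def split_reasoning(response: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model response."""
    if THINK_CLOSE not in response:
        # No reasoning block found: treat the whole response as the answer.
        return "", response.strip()
    reasoning, _, answer = response.partition(THINK_CLOSE)
    reasoning = reasoning.strip()
    if reasoning.startswith(THINK_OPEN):
        # Drop the opening tag when the model did emit it.
        reasoning = reasoning[len(THINK_OPEN):]
    return reasoning.strip(), answer.strip()


# Hypothetical response text, used only for illustration.
raw = "<think>\n17 * 3 = 51, then 51 + 4 = 55.\n</think>\n\nThe result is 55."
reasoning, answer = split_reasoning(raw)
print(answer)
```

Keeping the two parts separate lets an application log or hide the reasoning while showing users only the final answer.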

Use Cases

QwQ-32B’s capabilities make it well-suited for a variety of applications:

- Complex reasoning tasks: Ideal for scenarios requiring logical deduction, inference, and problem-solving.

- Coding assistance: Can assist developers with code generation, debugging, and understanding complex code structures.

- Math problem-solving: Capable of tackling mathematical problems requiring multi-step reasoning and calculations.

- Research and writing support: Can aid researchers and writers with literature reviews, idea generation, and content creation.

- AI agent integration: Can be integrated into AI agents to enhance their reasoning and decision-making abilities.
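
As a rough illustration of agent integration, the sketch below builds a request body in the widely used chat-completions format. It assumes the model is served behind an OpenAI-compatible endpoint and registered under the name `Qwen/QwQ-32B`; the helper `build_chat_request` and the `max_tokens` value are hypothetical choices, and no network call is made.

```python
import json


def build_chat_request(question: str, model: str = "Qwen/QwQ-32B",
                       max_tokens: int = 4096) -> dict:
    """Build a chat-completions style request body (assumed OpenAI-compatible server)."""
    return {
        "model": model,  # assumed name; use whatever your server registers
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,  # illustrative budget that leaves room for reasoning
    }


body = build_chat_request("Is 91 prime? Explain briefly.")
print(json.dumps(body, indent=2))
```

An agent would send this body to its inference server and then hand the returned text to whatever planning or tool-calling logic it uses.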

Pros & Cons

Like any technology, QwQ-32B has its strengths and weaknesses. Let’s take a look:

Advantages

- High reasoning accuracy, particularly in multi-step tasks.

- Open-source accessibility promotes collaboration and innovation.

- Innovative ‘thinking mode’ enhances task handling.

- Strong coding and mathematical capabilities.

Disadvantages

- Large computational requirements may limit accessibility for some users.

- Still under development, meaning it may be subject to changes and improvements.

- Limited real-world testing data available.
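
The computational requirements can be made concrete with a back-of-the-envelope estimate of the memory needed just to hold the weights. The sketch below assumes exactly 32 billion parameters (the real count may differ slightly) and ignores the KV cache, activations, and framework overhead, so actual requirements are higher. Under those assumptions, 16-bit weights need roughly 60 GiB.

```python
# Rough weight-memory estimate; excludes KV cache, activations, and overhead.
PARAMS = 32e9  # assumption: exactly 32 billion parameters

# Bytes used per parameter at common precisions.
BYTES_PER_PARAM = {
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


for name, width in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_memory_gib(PARAMS, width):.1f} GiB")
```

Quantizing to 8-bit or 4-bit halves or quarters that figure, which is why quantized builds are the usual route to running a model of this size on a single GPU.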

How Does It Compare?

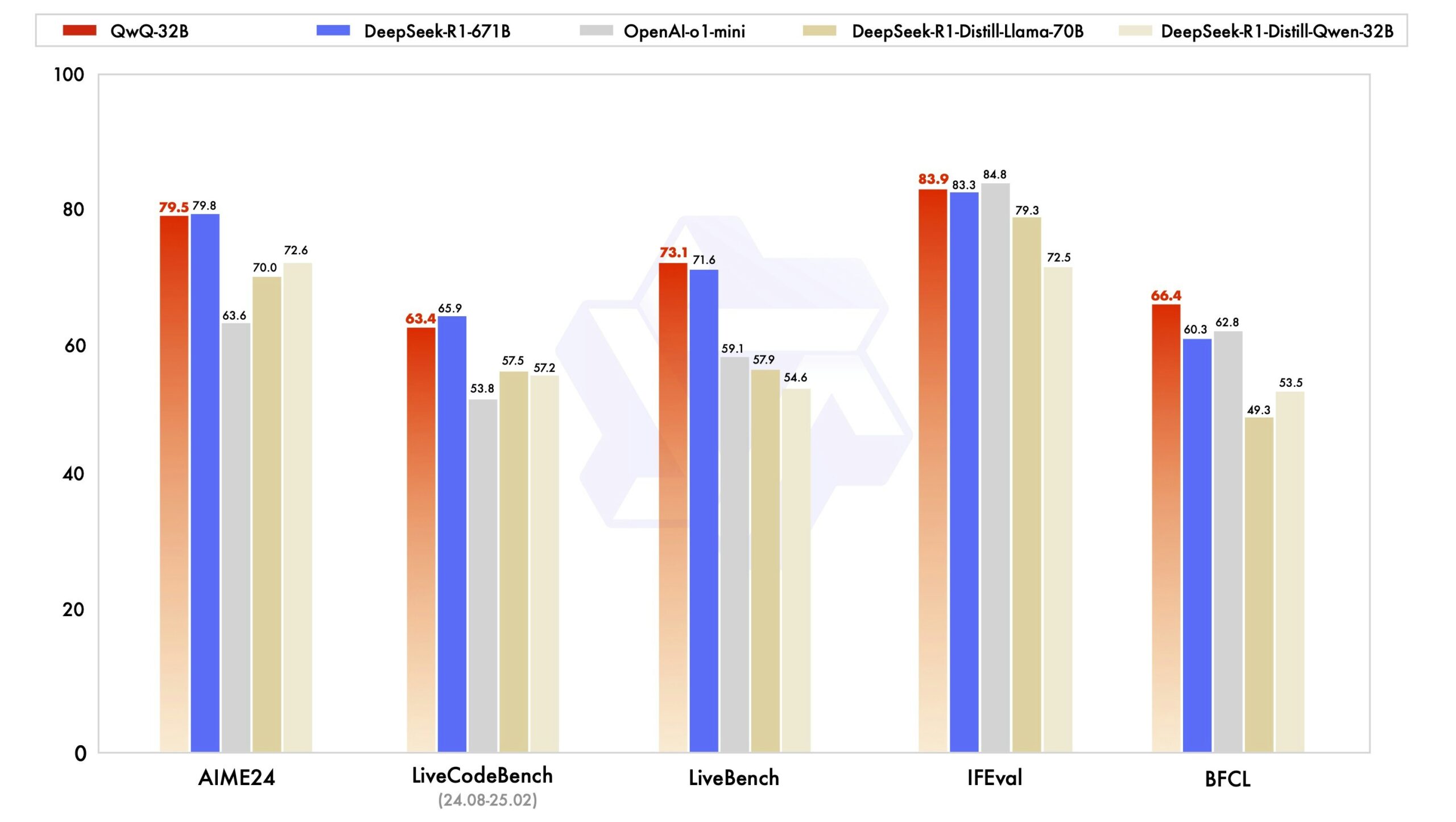

When considering QwQ-32B, it’s natural to compare it to other prominent LLMs. A key comparison is with GPT-4. While GPT-4 is a powerful model, it is closed-source and available only as a commercial service. QwQ-32B, on the other hand, is open-source under Apache 2.0 and free to use. Another comparable model is DeepSeek-R1, which also targets reasoning tasks. The two differ sharply in scale: DeepSeek-R1 is a mixture-of-experts model with 671 billion total parameters (about 37 billion active per token), while QwQ-32B is a dense 32-billion-parameter model. They also differ in their training methodologies.

Final Thoughts

QwQ-32B represents a significant step forward in the development of open-source LLMs. Its focus on complex reasoning, coupled with its innovative ‘thinking mode,’ makes it a promising tool for a wide range of applications. While it’s still under development and requires substantial computational resources, its open-source nature and strong performance make it a valuable asset for researchers, developers, and anyone interested in exploring the potential of AI.