Overview

In the ever-evolving landscape of AI, a new contender has emerged that promises both strong performance and accessibility. Meet Mistral Small 3.1, a 24B-parameter, open-source multimodal language model from Mistral AI, built to perform well across a variety of tasks, including multilingual text processing and vision-language understanding. Released under the permissive Apache 2.0 license, it aims to democratize advanced AI capabilities by putting them in the hands of a wider audience. Let’s dive deeper into what makes Mistral Small 3.1 a noteworthy addition to the AI toolkit.

Key Features

Mistral Small 3.1 boasts a compelling set of features that make it a powerful and versatile tool:

- 24B parameters: Large enough for nuanced language understanding and complex pattern recognition, yet compact enough to deploy on a single machine rather than a server cluster.

- Multimodal (text + image): The ability to process both text and image inputs opens doors to a wide range of applications, bridging the gap between visual and textual data.

- 128K-token context window: The model can take in long documents or extended conversations in a single prompt, which helps it stay coherent and track long-range dependencies across the whole input.

- Apache 2.0 open-source license: This permissive license allows free use, modification, and distribution, including for commercial purposes, fostering innovation and collaboration within the AI community.

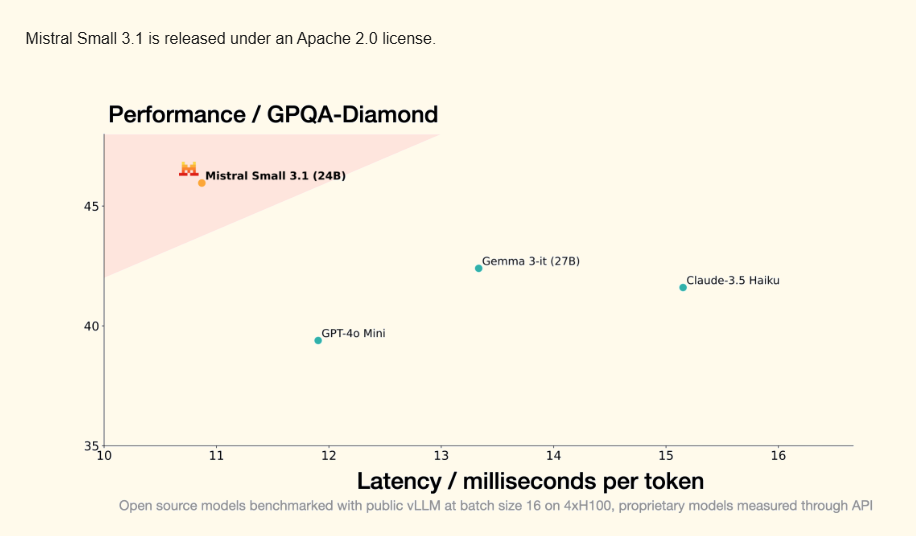

- Outperforms Gemma 3 and GPT-4o Mini: According to benchmarks published by Mistral AI, the model beats both on several benchmarks, making it competitive with other popular models in its class.

- Optimized for consumer GPUs: Mistral AI states that the model can run on a single RTX 4090 or a Mac with 32 GB of RAM when quantized, putting it within reach of individual developers and small teams.

- Multilingual support: Its multilingual capabilities allow for processing and generating text in various languages, expanding its applicability to global audiences.
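To see why quantization matters for the consumer-GPU claim, a back-of-the-envelope estimate helps. The sketch below assumes a nominal 24 billion parameters (the exact count differs slightly) and counts only the memory needed to hold the weights; the KV cache, activations, and runtime overhead add more on top, especially with long contexts. The 24 GiB budget stands in for a single high-end consumer card and is an assumption, not a published requirement.

```python
# Back-of-the-envelope memory estimate for the weights of a 24B-parameter model.
# Counts weights only: KV cache, activations, and runtime overhead are excluded.

PARAMS = 24_000_000_000  # nominal count implied by "24B"; the exact figure differs slightly
VRAM_GIB = 24            # assumption: a single high-end consumer GPU

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gib(params: int, bytes_per_param: float) -> float:
    """Memory needed to hold the weights, in GiB (2**30 bytes)."""
    return params * bytes_per_param / 2**30


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        gib = weight_memory_gib(PARAMS, nbytes)
        verdict = "fits" if gib < VRAM_GIB else "does not fit"
        print(f"{precision}: {gib:5.1f} GiB of weights ({verdict} in {VRAM_GIB} GiB)")
```

At 16-bit precision the weights alone need roughly 45 GiB, so running on a single 24 GB card implies 8-bit or, with more headroom for the KV cache, 4-bit quantization.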

How It Works

Mistral Small 3.1 uses a transformer architecture that handles both text and image inputs within a single model. It reads the entire prompt, uses attention to relate its parts (words, image content, earlier turns of a conversation), and generates a response one token at a time based on patterns learned during training. The 128K-token context window lets it work with long, complex inputs while keeping earlier material in view. The model also supports fine-tuning, so users can adapt it to specific tasks or domains, and its relatively compact size keeps it runnable on standard hardware rather than only on the data-center infrastructure that larger models need.
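For the multimodal input described above, a request to a hosted endpoint mixes text and image parts inside a single user message. The sketch below builds such a request for Mistral's chat completions API using only the Python standard library. The model identifier `mistral-small-2503` is an assumption about how Mistral Small 3.1 is named on the API, and the image URL is a placeholder; check Mistral's current API reference before relying on either. Sending the request needs an API key, read here from a `MISTRAL_API_KEY` environment variable (a name chosen for this example).

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-small-2503"  # assumption: API name for Mistral Small 3.1; verify in Mistral's model list


def build_vision_request(question: str, image_url: str, model: str = MODEL) -> dict:
    """Build a chat request whose single user message combines text and an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    # The image is passed by URL; a base64 data URL is another option.
                    {"type": "image_url", "image_url": image_url},
                ],
            }
        ],
    }


def send(payload: dict) -> dict:
    """POST the payload and return the decoded JSON response."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['MISTRAL_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    payload = build_vision_request(
        "What does this chart show?", "https://example.com/chart.png"  # placeholder image
    )
    print(json.dumps(payload, indent=2))
    # With an API key set, the reply text would be read from:
    # send(payload)["choices"][0]["message"]["content"]
```

Keeping payload construction separate from the network call makes the message structure easy to inspect and test without an API key.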

Use Cases

Mistral Small 3.1’s capabilities make it suitable for a diverse range of applications:

- Multimodal AI assistants: Creating AI assistants that can understand and respond to both text and image inputs, enhancing user interaction and problem-solving.

- Document analysis: Extracting key information, summarizing content, and identifying patterns within large volumes of text and images.

- Visual question answering: Answering questions based on the content of images, enabling more intuitive and informative interactions with visual data.

- Long-form text generation: Generating coherent and engaging long-form content, such as articles, stories, and reports.

- Research and education tools: Providing researchers and educators with a powerful tool for exploring and analyzing complex datasets.
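For document analysis and other long inputs, it helps to check whether a document fits in the 128K-token window before sending it, and to split it when it does not. The sketch below is a rough planner under stated assumptions: it estimates tokens with a crude four-characters-per-token heuristic instead of the model's real tokenizer, and it reserves a hypothetical 4,000 tokens for the reply. Accurate counts require the model's own tokenizer.

```python
# Rough context-window planner for long documents.
# Token counts are ESTIMATED with a character heuristic, not the model's tokenizer.

CONTEXT_TOKENS = 128_000     # advertised context window (approximate)
CHARS_PER_TOKEN = 4          # assumption: crude average for English text
RESERVED_FOR_OUTPUT = 4_000  # hypothetical budget kept free for the model's reply


def estimate_tokens(text: str) -> int:
    """Approximate token count: characters / CHARS_PER_TOKEN, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk_paragraphs(text: str, budget: int = CONTEXT_TOKENS - RESERVED_FOR_OUTPUT) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of about `budget`
    estimated tokens. A single paragraph larger than the budget becomes its own
    (oversized) chunk, and separators between paragraphs are not counted."""
    chunks: list[str] = []
    current: list[str] = []
    used = 0
    for para in text.split("\n\n"):
        cost = estimate_tokens(para)
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and used + cost > budget:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    doc = "\n\n".join(f"Paragraph {i}: " + "lorem ipsum " * 50 for i in range(2_000))
    print(f"~{estimate_tokens(doc):,} tokens estimated")
    for i, chunk in enumerate(chunk_paragraphs(doc)):
        print(f"chunk {i}: ~{estimate_tokens(chunk):,} tokens")
```

Splitting on paragraph boundaries keeps each chunk readable on its own, which matters when the model is asked to summarize or extract information chunk by chunk.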

Pros & Cons

Like any AI tool, Mistral Small 3.1 has its strengths and weaknesses. Understanding these can help you determine if it’s the right choice for your needs.

Advantages

- Open-source and accessible, promoting transparency and community-driven development.

- Multimodal capability, allowing for processing of both text and image data.

- Efficient hardware usage, enabling operation on consumer-grade GPUs.

- Competitive performance, rivaling or surpassing other popular models in certain tasks.

Disadvantages

- Requires tuning for optimal results on specific tasks, which can take expertise and effort.

- Limited public documentation compared to larger models, which may present a learning curve for some users.
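Because task-specific tuning is one of the main costs of adopting the model, here is what preparing training data can look like. The sketch writes prompt/answer pairs as chat-style JSONL, one `{"messages": [...]}` object per line, which is the general layout used by Mistral's fine-tuning tooling; the exact schema a given trainer expects is an assumption to verify against its documentation, and the example pairs are made up.

```python
import json


def to_chat_record(prompt: str, answer: str) -> dict:
    """One training example: a user turn followed by the desired assistant reply."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize (prompt, answer) pairs as JSON Lines: one JSON object per line."""
    return "".join(
        json.dumps(to_chat_record(p, a), ensure_ascii=False) + "\n" for p, a in pairs
    )


if __name__ == "__main__":
    # Made-up examples for a hypothetical invoice-extraction task.
    examples = [
        ("Extract the invoice total: 'Total due: EUR 1,250.00'", "1250.00 EUR"),
        ("Extract the invoice total: 'Amount payable $87.10'", "87.10 USD"),
    ]
    with open("train.jsonl", "w", encoding="utf-8") as f:
        f.write(to_jsonl(examples))
```

Most of the effort in tuning goes into collecting enough consistent, high-quality pairs like these; the file format itself is the easy part.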

How Does It Compare?

When considering Mistral Small 3.1, it’s important to understand how it stacks up against its competitors. Gemma 3 offers strong performance and openly available weights, but it is released under Google’s Gemma Terms of Use rather than a standard open-source license, which restricts some uses. GPT-4o Mini benefits from OpenAI’s broad ecosystem, but it is available only as a hosted service, so its weights cannot be downloaded, self-hosted, or modified. Mistral Small 3.1 distinguishes itself by combining an open-source license with competitive performance and multimodal capabilities, offering a compelling alternative for users seeking a balance of power and accessibility.

Final Thoughts

Mistral Small 3.1 represents a significant step forward in the democratization of AI. Its open-source nature, multimodal capabilities, and efficient hardware requirements make it an attractive option for a wide range of users. While it may require some tuning to achieve optimal results, its potential applications are vast and its competitive performance makes it a model worth exploring. As the AI landscape continues to evolve, Mistral Small 3.1 stands out as a promising tool for innovation and discovery.