Table of Contents

Overview

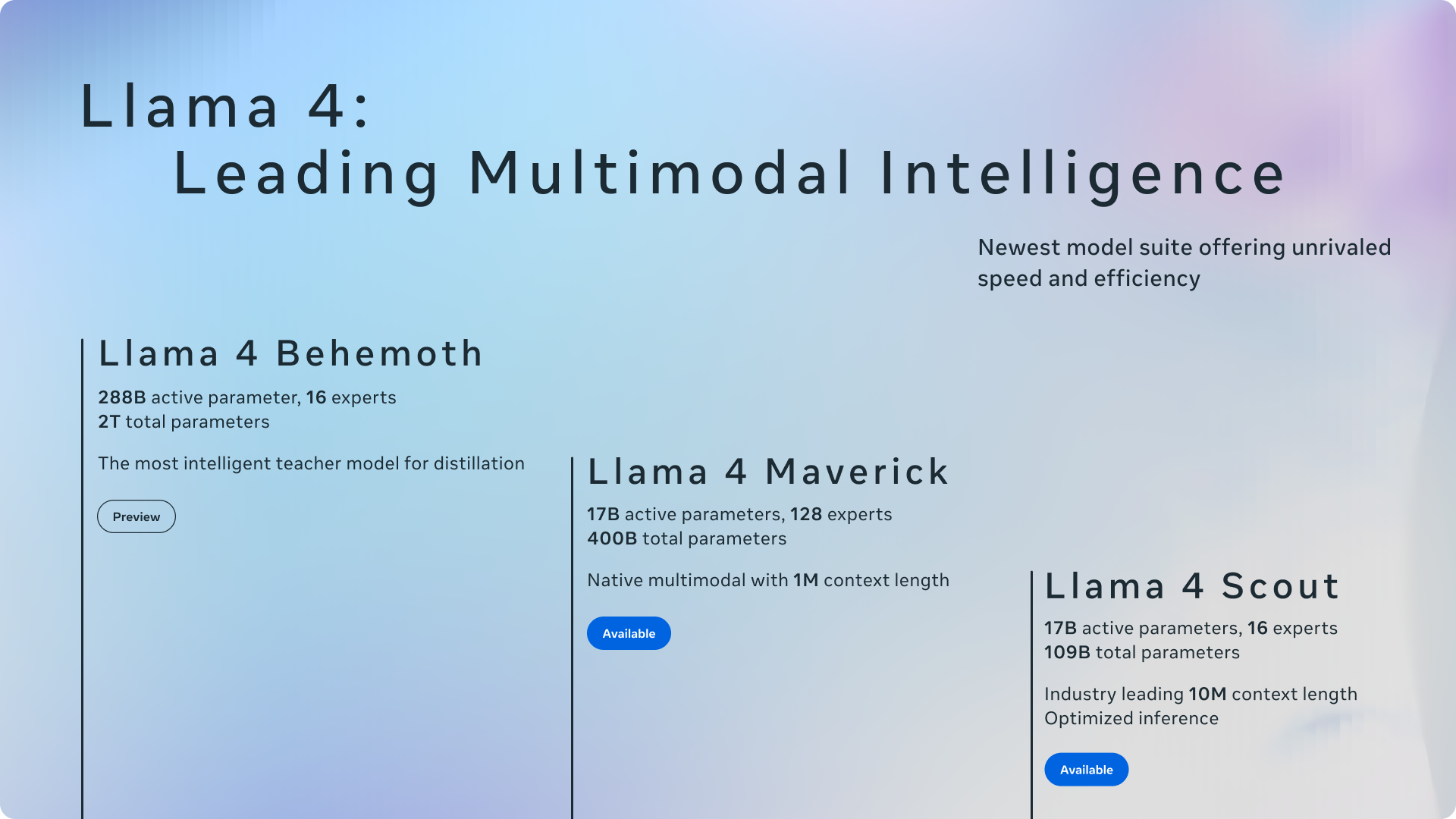

The world of AI is constantly evolving, and Meta continues to push the boundaries with its latest offering: Llama 4. This family of multimodal AI models is designed to understand both text and images, opening up a world of possibilities for developers and users alike. Built with a sophisticated mixture-of-experts architecture, Llama 4 promises high performance across a range of natural language and visual comprehension tasks. Let’s dive deeper into what makes Llama 4 a noteworthy contender in the AI landscape.

Key Features

Llama 4 boasts a powerful set of features designed to handle complex multimodal tasks:

- Multimodal Input (Text + Images): Llama 4 can process and understand both text and image inputs simultaneously, enabling more nuanced and context-aware interactions.

- Mixture-of-Experts Architecture: This innovative architecture utilizes multiple specialized expert subnetworks, dynamically activated based on the input, leading to improved scaling and performance.

- High-Performance Benchmarks: Llama 4 is designed to deliver strong performance across various benchmarks, indicating its capabilities in handling complex AI tasks.

- Open and Scalable: The architecture is built to be scalable, allowing for future growth and adaptation. The potential for open access makes it even more appealing for developers.

- Native Support for Vision-Language Tasks: Llama 4 is specifically designed to excel in tasks that require understanding the relationship between visual and textual information.

How It Works

The magic behind Llama 4 lies in its mixture-of-experts architecture. Instead of relying on a single, monolithic model, Llama 4 utilizes multiple specialized subnetworks, each trained to handle specific types of data or tasks. When an input is received, the system dynamically activates the most relevant expert subnetworks to process it. This approach allows for better scaling and performance compared to traditional models. Furthermore, Llama 4 is trained on diverse multimodal datasets, ensuring a coherent understanding of combined text and visual inputs. This comprehensive training enables the model to effectively interpret and respond to complex prompts involving both images and text.

Use Cases

Llama 4’s multimodal capabilities unlock a wide range of potential applications:

- Image Captioning: Generate descriptive and accurate captions for images, providing context and understanding.

- Visual Question Answering: Answer questions about images, demonstrating a deep understanding of visual content.

- Text Generation: Create coherent and relevant text based on image inputs, enabling creative content generation.

- Language Translation with Visual Cues: Translate languages while considering visual context, leading to more accurate and nuanced translations.

- Interactive Assistants with Image Inputs: Develop interactive assistants that can understand and respond to image-based queries, enhancing user experience.

Pros & Cons

Like any powerful tool, Llama 4 has its strengths and weaknesses. Understanding these will help you determine if it’s the right choice for your needs.

Advantages

- Strong performance on vision-language tasks: Excels at tasks requiring understanding of both visual and textual information.

- Scalable architecture: Designed to handle increasing data and complexity.

- Open-access potential: The possibility of open access makes it attractive for developers and researchers.

Disadvantages

- High computational demands: Requires significant computing resources to run effectively.

- May require fine-tuning for specific domains: Might need additional training to perform optimally in specialized areas.

How Does It Compare?

The AI landscape is competitive, and Llama 4 faces strong rivals. Here’s a brief comparison:

- GPT-4V: Similar in its strong image + text integration capabilities.

- Gemini: Benefits from a tight integration within the Google ecosystem.

- Claude 3 Opus: Excels in text-based tasks but lacks native multimodal capabilities.

Each model has its own strengths and weaknesses, so the best choice depends on your specific requirements and priorities.

Final Thoughts

Llama 4 represents a significant step forward in multimodal AI. Its ability to understand and process both text and images opens up exciting possibilities for a wide range of applications. While it may have some limitations in terms of computational demands and the need for fine-tuning, its potential for open access and strong performance on vision-language tasks make it a compelling option for developers and researchers looking to push the boundaries of AI. As the technology continues to evolve, Llama 4 is definitely a model to watch.