Overview

MiMo is an open-source LLM suite developed by Xiaomi and built for complex reasoning, with a particular focus on math and code-related tasks. With its transparent model releases and Apache 2.0 license, MiMo offers an alternative for researchers and developers who need a model for these specialized applications. Let’s dive into what makes MiMo tick and how it stacks up against the competition.

Key Features

MiMo boasts a range of features that make it a noteworthy addition to the open-source LLM community:

- Open-Source (Apache 2.0): MiMo’s open license permits use, modification, and distribution, including commercial use, as long as the license’s attribution and notice terms are followed, fostering collaboration and innovation.

- Specialized for Math and Code: Unlike general-purpose LLMs, MiMo is specifically optimized for tasks requiring mathematical reasoning and coding proficiency.

- Supports Pretraining, SFT, and RL Versions: The suite includes base (pretrained) models, supervised fine-tuned (SFT) variants, and reinforcement learning (RL) versions, providing flexibility for different use cases.

- High Performance at 7B Scale: MiMo achieves impressive performance while maintaining a relatively small 7 billion parameter size, making it more accessible to researchers with limited computational resources.

- Transparent Model Releases: Xiaomi provides clear and transparent releases of the MiMo models, ensuring reproducibility and trust within the community.
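
To make the accessibility point about the 7B scale concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. It assumes a nominal 7 billion parameters (the exact count may differ) and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The function name `weight_memory_gib` is illustrative, not part of any MiMo tooling.

```python
# Rough weight-memory estimate for a dense model (weights only).
# Assumption: a nominal 7e9 parameters; the real count may differ.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate size of the model weights in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gib(7e9, precision)
        print(f"{precision}: {size:.1f} GiB")
```

At 16-bit precision this works out to roughly 13 GiB for the weights, which is why a model of this size can fit on a single high-memory GPU.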

How It Works

MiMo models are built upon a foundation of extensive pretraining on diverse datasets. This initial phase equips the models with a broad understanding of language and concepts. To further refine their capabilities, the models undergo supervised fine-tuning (SFT), where they are trained on specific datasets tailored to math and code-related tasks. Finally, reinforcement learning (RL) is applied to further improve the models’ performance on these reasoning tasks. The models are publicly available through the project’s GitHub repository, allowing developers to deploy them for custom applications or utilize them for academic research.
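
As a minimal sketch of what deployment might look like, the snippet below loads a checkpoint with the Hugging Face `transformers` library and generates one answer. Several things here are assumptions rather than details from this article: the model ID `XiaomiMiMo/MiMo-7B-RL`, the need for `trust_remote_code=True`, and the availability of `torch` and `accelerate` in your environment. Check the official MiMo repository for the exact checkpoint names and recommended settings before relying on it.

```python
from typing import Dict, List

# Assumption: Hugging Face model ID; verify the exact name in the MiMo repo.
MODEL_ID = "XiaomiMiMo/MiMo-7B-RL"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a user question in the message format chat templates expect."""
    return [{"role": "user", "content": question}]


def generate_answer(
    question: str, model_id: str = MODEL_ID, max_new_tokens: int = 512
) -> str:
    """Load the model and generate one answer (downloads weights on first use)."""
    # Imported lazily so build_messages works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights to reduce memory use
        device_map="auto",  # requires the `accelerate` package
        trust_remote_code=True,  # assumption: the checkpoint ships custom code
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)
```

Calling `generate_answer("What is the derivative of x**3?")` would download the weights on first use, so expect a long initial run and a GPU with enough memory for a 7B model.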

Use Cases

MiMo’s specialized capabilities make it well-suited for a variety of applications:

- Mathematical Reasoning Tasks: MiMo can be used to solve complex mathematical problems, assist with equation solving, and provide explanations for mathematical concepts.

- Coding Assistants: The models can aid in code generation, debugging, and understanding complex code structures.

- Academic NLP Research: MiMo provides a valuable resource for researchers exploring new techniques in natural language processing, particularly in the areas of reasoning and code understanding.

- Base for Custom AI Agents: Developers can leverage MiMo as a foundation for building custom AI agents tailored to specific domains and tasks.

- LLM Performance Benchmarking: MiMo can serve as a benchmark for evaluating the performance of other LLMs in math and code-related tasks.
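
For the math and benchmarking use cases above, a simple evaluation loop could look like the sketch below. It scores any model exposed as a `generate(question) -> str` callable by comparing the last number in each answer with a reference value. The helper names and the tiny stub model are hypothetical and exist only for illustration; real benchmarks use larger datasets and more careful answer parsing.

```python
import re
from typing import Callable, Iterable, Optional, Tuple


def extract_final_number(text: str) -> Optional[str]:
    """Return the last number that appears in the text, or None."""
    # Commas are stripped so "1,000" parses as 1000 (a simplification).
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return matches[-1] if matches else None


def exact_match_accuracy(
    generate: Callable[[str], str], problems: Iterable[Tuple[str, str]]
) -> float:
    """Fraction of problems whose final number equals the reference answer."""
    problems = list(problems)
    if not problems:
        return 0.0
    correct = 0
    for question, reference in problems:
        predicted = extract_final_number(generate(question))
        if predicted is not None and float(predicted) == float(reference):
            correct += 1
    return correct / len(problems)


if __name__ == "__main__":
    # Hypothetical stand-in for a real model call.
    canned = {
        "What is 2 + 2?": "2 + 2 = 4, so the answer is 4.",
        "What is 7 * 6?": "I think it is 41",
    }
    problems = [("What is 2 + 2?", "4"), ("What is 7 * 6?", "42")]
    print(exact_match_accuracy(lambda q: canned[q], problems))
```

Because the scorer only needs a callable, the same loop can be pointed at MiMo or at any other model to compare them on an identical problem set.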

Pros & Cons

Like any tool, MiMo has its strengths and weaknesses. Let’s break them down:

Advantages

- Open License: The Apache 2.0 license promotes accessibility, collaboration, and innovation.

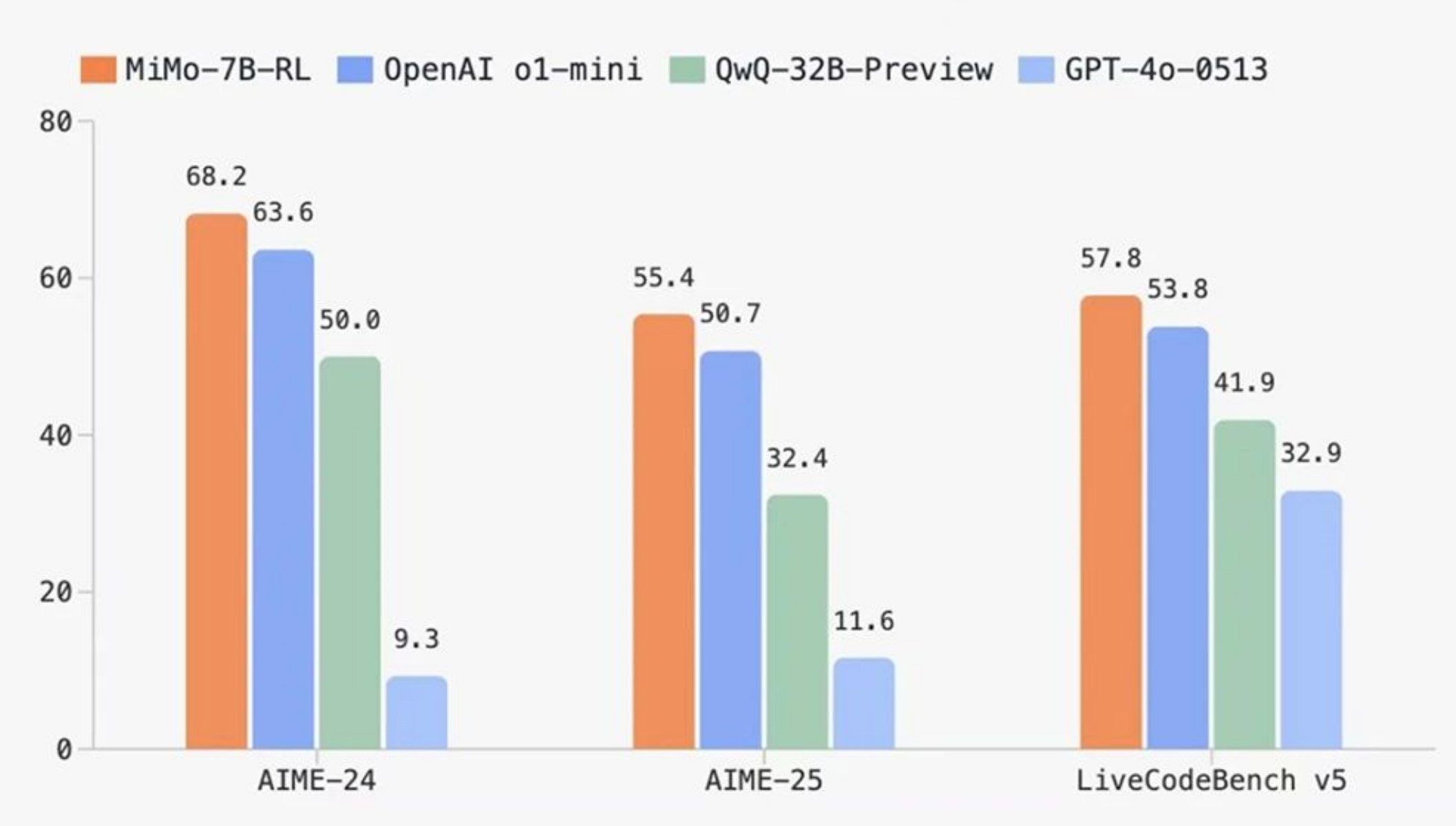

- Strong Math/Code Performance: MiMo excels in tasks requiring mathematical reasoning and coding proficiency.

- Community Access: The open-source nature of MiMo encourages community contributions and support.

Disadvantages

- Requires ML Expertise to Deploy: Deploying and fine-tuning MiMo models requires a certain level of machine learning expertise.

- Limited Documentation: The documentation for MiMo may be less extensive compared to more established LLMs.

- Smaller Ecosystem than GPT-based Models: MiMo’s ecosystem is still developing, meaning fewer pre-built tools and resources may be available compared to GPT-based alternatives.

How Does It Compare?

When considering MiMo, it’s important to understand how it stacks up against other popular LLMs:

- LLaMA: While LLaMA is a strong open-source LLM, it is less optimized for complex reasoning tasks compared to MiMo.

- Mistral: Mistral is known for its performance, but it doesn’t have the same focus on math and code as MiMo.

- GPT-4: GPT-4 offers superior overall performance, but it is a closed-source model, limiting customization and transparency.

Final Thoughts

MiMo is a notable step in the development of specialized, open-source LLMs. Its focus on math and code, combined with its transparent model releases and Apache 2.0 license, makes it a useful tool for researchers and developers. While it requires some expertise to deploy and has a smaller ecosystem than some of its competitors, MiMo’s open nature and strong math and code performance make it a compelling option for complex reasoning tasks.