Overview

Teams that build and deploy Large Language Model (LLM) applications need to understand how those applications behave before they can optimize them. Langfuse Custom Dashboards are a component of Langfuse, an open-source LLM observability platform. They give you tailored views of your application’s behavior so that you can debug, analyze, and optimize it. This review covers the features, typical use cases, trade-offs, and alternatives.

Key Features

Langfuse provides the following features for visibility into your LLM applications:

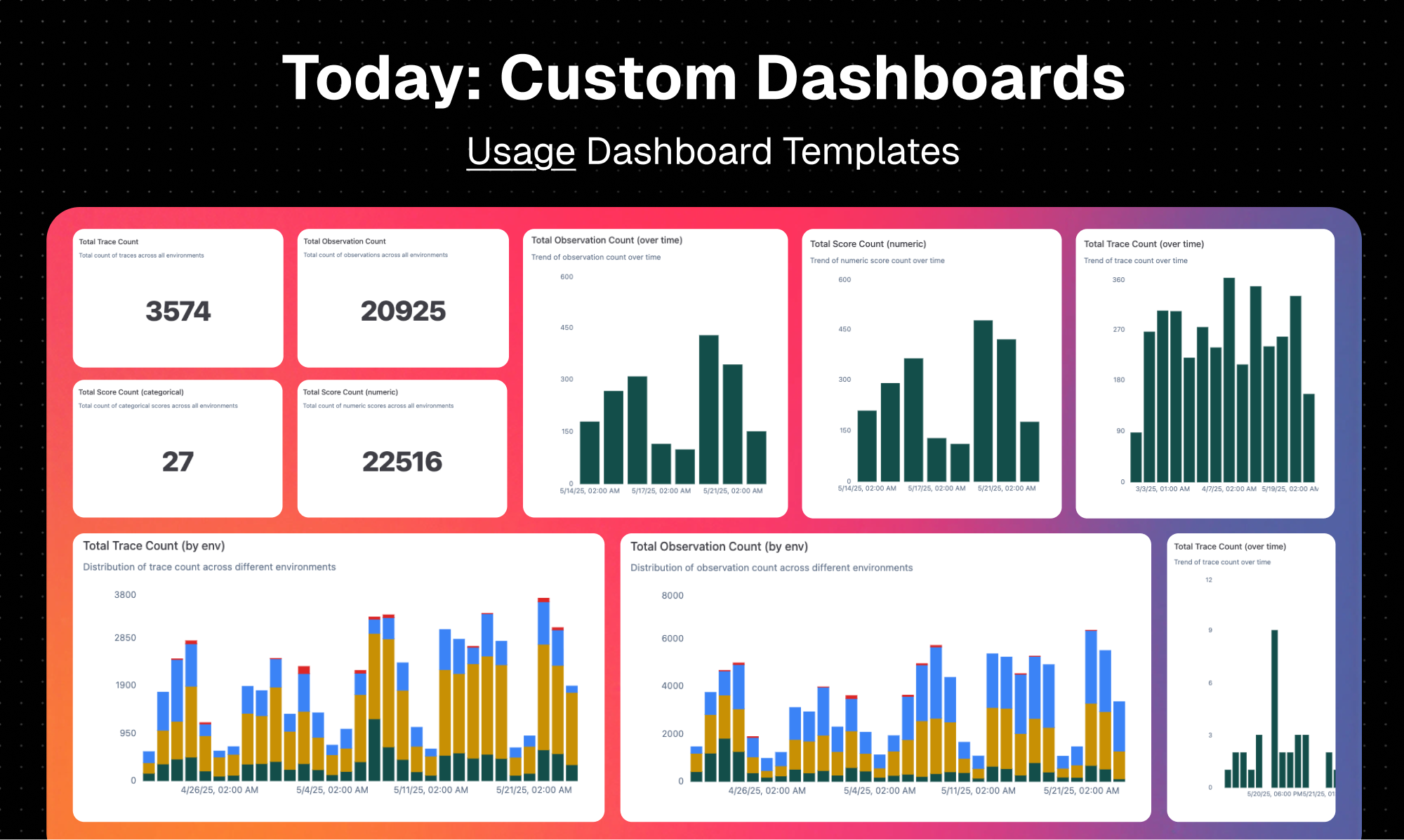

- Custom dashboards: Create tailored views into your LLM application’s performance, focusing on the metrics that matter most to your specific use case.

- Real-time tracing: Track requests and responses in real time to see application behavior and potential bottlenecks as they occur.

- Integrated observability: Seamlessly integrates with your existing LLM stack, providing a unified view of your application’s performance.

- Debugging tools: Identify and resolve issues quickly with powerful debugging tools that allow you to drill down into individual requests and responses.

- Analytics and monitoring: Track key metrics over time to identify trends and proactively address potential problems.

- Open-source: Benefit from the flexibility and transparency of an open-source platform, allowing you to customize and extend Langfuse to meet your specific needs.

How It Works

Langfuse captures the interactions between your LLM application and its users. It records the input and output of your LLM, along with relevant metadata, and then organizes and visualizes this data in customizable dashboards. Developers can use these dashboards to monitor application performance, trace errors back to their source, and iterate on their models and prompts based on the recorded data.
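The capture step described above can be illustrated with a minimal, library-free Python sketch. It does not use the Langfuse SDK: `TraceRecord`, `TRACES`, `traced`, and `fake_llm` are hypothetical names chosen for illustration, and the "model" is a stand-in function. The sketch only shows the general idea of recording input, output, latency, and metadata for each call.

```python
import time
from dataclasses import dataclass, field
from functools import wraps
from typing import Any, Callable


@dataclass
class TraceRecord:
    """One captured call: what went in, what came out, and context."""
    name: str
    input: str
    output: str
    latency_ms: float
    metadata: dict[str, Any] = field(default_factory=dict)


# Hypothetical in-memory store; a real platform would ship records to a backend.
TRACES: list[TraceRecord] = []


def traced(name: str, **metadata: Any) -> Callable:
    """Decorator that records input, output, latency, and metadata per call."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        @wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            output = fn(prompt)
            latency_ms = (time.perf_counter() - start) * 1000
            TRACES.append(
                TraceRecord(name, prompt, output, latency_ms, dict(metadata))
            )
            return output
        return wrapper
    return decorator


# Stand-in for a real LLM call (assumption: any str -> str function works here).
@traced("summarize", model="fake-model", user_id="user-123")
def fake_llm(prompt: str) -> str:
    return prompt.upper()


result = fake_llm("hello")
```

After the call, `TRACES` holds one record with the prompt, the response, the measured latency, and the metadata passed to the decorator. Records of this shape are the kind of raw material a dashboard can aggregate.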

Use Cases

Langfuse’s versatility makes it suitable for a variety of LLM application development scenarios:

- Debugging LLM pipelines: Pinpoint the source of errors in complex LLM pipelines by tracing requests and responses through each step.

- Monitoring model behavior: Track key metrics like latency, accuracy, and cost to ensure your models are performing as expected.

- Custom analytics for AI apps: Create custom dashboards to track the metrics that are most relevant to your specific AI application.

- Team-based development analysis: Facilitate collaboration by providing a shared view of application performance and behavior.

- Visualization of production LLM metrics: Gain real-time visibility into the performance of your LLM applications in production.
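To make the monitoring use case concrete, here is a small Python sketch of the kind of aggregation a dashboard panel might perform over captured records. The record fields (`model`, `latency_ms`, `cost_usd`), the sample values, and the `summarize` function are assumptions made for illustration; they are not Langfuse's data model or API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical captured records; the values are made up for the example.
records = [
    {"model": "model-a", "latency_ms": 120.0, "cost_usd": 0.002},
    {"model": "model-a", "latency_ms": 180.0, "cost_usd": 0.003},
    {"model": "model-b", "latency_ms": 400.0, "cost_usd": 0.010},
]


def summarize(rows):
    """Group records by model and compute call count, mean latency, total cost."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["model"]].append(row)

    summary = {}
    for model, model_rows in grouped.items():
        summary[model] = {
            "calls": len(model_rows),
            "avg_latency_ms": mean(r["latency_ms"] for r in model_rows),
            "total_cost_usd": sum(r["cost_usd"] for r in model_rows),
        }
    return summary


summary = summarize(records)
for model, stats in summary.items():
    print(model, stats)
```

Grouping by a different key, such as a user ID or a prompt version, follows the same pattern and corresponds to choosing a different dimension in a custom dashboard view.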

Pros & Cons

Like any tool, Langfuse has its strengths and weaknesses. Understanding these can help you determine if it’s the right fit for your needs.

Advantages

- Open-source: Offers flexibility, transparency, and community support.

- Tailored dashboard views: Allows you to focus on the metrics that matter most to your specific use case.

- Deep LLM analytics: Provides granular insights into the performance and behavior of your LLM applications.

- Collaborative debugging: Facilitates teamwork by providing a shared view of application performance.

Disadvantages

- Requires setup: Setting up and configuring Langfuse can require some technical expertise.

- Geared toward technical users: The platform is primarily designed for developers and engineers.

- Limited integrations outside LLM stacks: Integrations are primarily focused on LLM-related tools and services.

How Does It Compare?

When evaluating LLM observability platforms, it’s important to consider the alternatives. PromptLayer offers similar functionality, but its dashboards are less customizable than Langfuse’s. Arize AI provides broader ML observability capabilities, but it’s not as specifically tailored to LLM applications as Langfuse. This makes Langfuse a strong choice for teams that need deep, customizable insights into their LLM applications.

Final Thoughts

Langfuse Custom Dashboards offer a flexible solution for teams building and deploying LLM-powered applications. The open-source codebase, deep LLM analytics, and customizable dashboards make it a compelling choice for teams that want to optimize the performance and behavior of their AI applications. It requires some initial setup and is geared toward technical users, but the insights it provides can be valuable for improving your LLM projects.