Overview

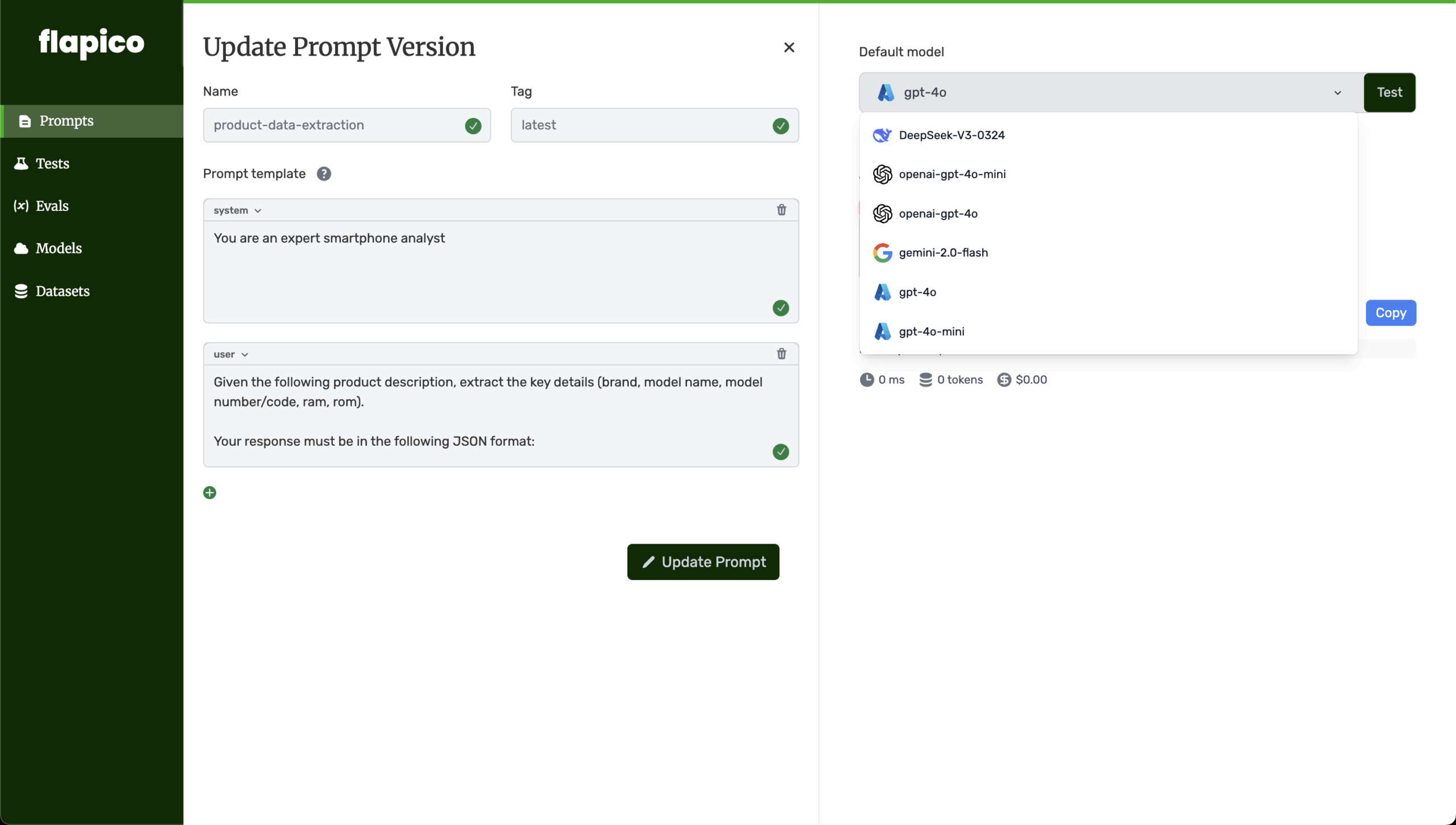

Managing and optimizing prompts is central to getting reliable results from Large Language Models (LLMs). Flapico is a collaborative platform that covers the prompt engineering lifecycle: it helps teams manage, version, test, and evaluate prompts, with the goal of making LLM applications more reliable in production. Here is what makes Flapico a contender in the prompt engineering space.

Key Features

Flapico offers the following features for prompt management:

- Prompt Versioning: Track changes and revert to previous versions of your prompts, ensuring you never lose valuable work.

- Quantitative Evaluation Metrics: Measure the performance of your prompts using a variety of metrics, allowing for data-driven optimization.

- Collaborative Editing and Testing: Work together with your team to create, test, and refine prompts in a shared environment.

- GitHub Integration: Connect Flapico to your existing GitHub workflows for version control and deployment.

- Test Suite Management: Organize and manage your prompt testing efforts with comprehensive test suite management tools.

How It Works

Flapico handles prompt engineering through a web interface where users create, version, and test prompts directly within the platform. Users define custom test cases, and the system uses them to run structured evaluations and comparisons. The results are presented through analytics, so decisions about which prompt to ship can rest on measured performance rather than impressions. Flapico also supports team collaboration, so multiple users can contribute to the same prompt engineering process.
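
To make the idea of custom test cases more concrete, here is a minimal Python sketch of what a structured evaluation could look like. It is not Flapico's API: `PromptCase`, `evaluate`, `pass_rate`, and `fake_model` are hypothetical names invented for illustration, and the substring check is just one simple way a test expectation might be expressed.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PromptCase:
    """One hypothetical test case: template variables plus a simple expectation."""
    name: str
    variables: Dict[str, str]
    expected_substring: str


def evaluate(template: str, cases: List[PromptCase],
             model: Callable[[str], str]) -> Dict[str, bool]:
    """Fill the template for each case, call the model, and record pass/fail."""
    results = {}
    for case in cases:
        prompt = template.format(**case.variables)
        output = model(prompt)
        # Pass when the expected text appears anywhere in the output (case-insensitive).
        results[case.name] = case.expected_substring.lower() in output.lower()
    return results


def pass_rate(results: Dict[str, bool]) -> float:
    """Fraction of cases that passed; 0.0 for an empty run."""
    return sum(results.values()) / len(results) if results else 0.0


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline (an assumption)."""
    return "Paris" if "France" in prompt else "I am not sure."


TEMPLATE = "What is the capital of {country}? Answer in one word."
CASES = [
    PromptCase("france", {"country": "France"}, "Paris"),
    PromptCase("japan", {"country": "Japan"}, "Tokyo"),
]

results = evaluate(TEMPLATE, CASES, fake_model)
print(results, pass_rate(results))
```

In practice the `model` argument would wrap a real LLM client, and the per-case results would feed whatever analytics view the team relies on.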

Use Cases

Flapico offers a range of practical applications for teams working with LLMs:

- Improving Prompt Quality for LLM Apps: Refine your prompts to achieve better accuracy, relevance, and overall performance in your LLM applications.

- Collaborative Testing by AI Teams: Enable seamless collaboration among team members to test and optimize prompts more effectively.

- Streamlining Prompt Management in Production: Simplify the process of managing prompts in a production environment, ensuring consistency and reliability.

- Ensuring Output Consistency: Maintain consistent output from your LLM applications by carefully managing and versioning your prompts.
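
Output consistency can be put into numbers in many ways. As one rough illustration, the Python sketch below scores a batch of outputs collected from repeated runs of the same prompt. The function name `consistency_score` and the normalization rule (lowercasing and collapsing whitespace) are assumptions made for this example, not a metric Flapico is known to provide.

```python
from collections import Counter
from typing import List


def consistency_score(outputs: List[str]) -> float:
    """Share of outputs that match the most common answer after normalization."""
    if not outputs:
        raise ValueError("at least one output is required")
    # Normalize so that differences in case or spacing do not count as disagreement.
    normalized = [" ".join(text.lower().split()) for text in outputs]
    most_common_count = Counter(normalized).most_common(1)[0][1]
    return most_common_count / len(normalized)


# Four hypothetical outputs from the same prompt; the trailing period in the
# third one is treated as a different answer by this simple rule.
runs = ["Paris", "paris ", "Paris.", "Paris"]
print(consistency_score(runs))
```

A score of 1.0 means every run agreed. Stricter or looser normalization (for example, stripping punctuation) would change the number, so the rule should match what "consistent" means for the application.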

Pros & Cons

Like any tool, Flapico has its strengths and weaknesses. Understanding these can help you determine if it’s the right fit for your needs.

Advantages

- Decouples prompts from code: This separation allows for independent iteration and management of prompts without requiring code changes.

- Enables quantitative prompt testing: Provides data-driven insights into prompt performance, leading to more effective optimization.

- Facilitates team collaboration: Makes it easy for teams to work together on prompt engineering projects.
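
What "decoupling prompts from code" means in practice can be sketched in a few lines of Python. The `PromptStore` class below is a hypothetical in-memory stand-in, not Flapico's client or storage model: application code asks for a prompt by name, while edits and reverts happen in the store without touching that code.

```python
from typing import Dict, List, Optional


class PromptStore:
    """Hypothetical in-memory store that keeps every version of each prompt."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}

    def save(self, name: str, template: str) -> int:
        """Append a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest when no version is given."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]

    def revert(self, name: str, version: int) -> int:
        """Re-save an old version as the newest one, keeping the full history."""
        return self.save(name, self.get(name, version))


store = PromptStore()
store.save("summarize", "Summarize the text: {text}")
store.save("summarize", "Summarize the text in three bullet points: {text}")

# Application code only knows the prompt's name, not its wording.
prompt = store.get("summarize").format(text="Flapico is a prompt platform.")
print(prompt)

# Rolling back is a store operation, not a code change.
store.revert("summarize", 1)
```

Reverting by re-saving, rather than deleting newer versions, is a design choice in this sketch so that the history stays complete.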

Disadvantages

- Requires LLM knowledge: Users need a solid understanding of LLMs and prompt engineering principles to effectively use the platform.

- May need integration setup for CI/CD workflows: Integrating Flapico into existing CI/CD pipelines may require some initial setup and configuration.
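
As an idea of what that CI/CD setup might involve, the Python sketch below shows a simple quality gate a pipeline step could run after prompt tests finish. Everything here is an assumption for illustration: the `ci_gate` function, the 90% default threshold, and the shape of the `pass_rates` input are hypothetical, and how results would actually be exported from Flapico is not covered by this review.

```python
from typing import Dict


def ci_gate(pass_rates: Dict[str, float], threshold: float = 0.9) -> int:
    """Return a process exit code: 0 if every prompt meets the threshold, else 1."""
    failing = {name: rate for name, rate in pass_rates.items() if rate < threshold}
    for name, rate in sorted(failing.items()):
        print(f"FAIL {name}: pass rate {rate:.0%} is below {threshold:.0%}")
    return 1 if failing else 0


# Hypothetical pass rates per prompt, as a test run might report them.
exit_code = ci_gate({"summarize": 0.95, "classify": 0.80})
print("exit code:", exit_code)
```

A CI job would typically pass the returned value to `sys.exit` so that a failing prompt blocks the deployment step.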

How Does It Compare?

The prompt engineering landscape is growing, and Flapico faces competition from other platforms. Here’s how it stacks up against two alternatives:

- PromptLayer: PromptLayer offers strong logging and monitoring capabilities, but it is less focused on collaborative testing than Flapico.

- ChainForge: ChainForge provides robust evaluation tools, but it lacks the comprehensive version control features found in Flapico.

Final Thoughts

Flapico is a strong option for teams looking to streamline their prompt engineering workflows. Its focus on collaboration, versioning, and quantitative testing makes it a useful tool for improving the reliability and performance of LLM applications. It requires some LLM expertise and may need integration work for CI/CD, but the benefits of decoupled prompts and data-driven optimization can be significant.