Overview

Managing and optimizing large language model (LLM) requests across multiple providers is operationally difficult. LLM Gateway addresses this with a unified API through which organizations route, manage, and analyze their LLM requests. It aims to balance performance, cost, and reliability across the chosen models while maintaining full compatibility with OpenAI’s API format.

Key Features

LLM Gateway provides the following features for running LLM workloads in enterprise environments:

Unified API for Multiple LLMs: A single, OpenAI-compatible interface covers 56 models across 13 AI providers, so teams do not have to manage separate provider APIs and can integrate the gateway into existing workflows.

Intelligent Request Routing: Routing rules direct each request to a provider based on configurable criteria such as performance metrics, cost, and model-specific capabilities.

Response Caching System: Built-in caching reduces latency and cost by serving identical or frequently repeated queries from cache instead of making redundant API calls to the underlying providers.

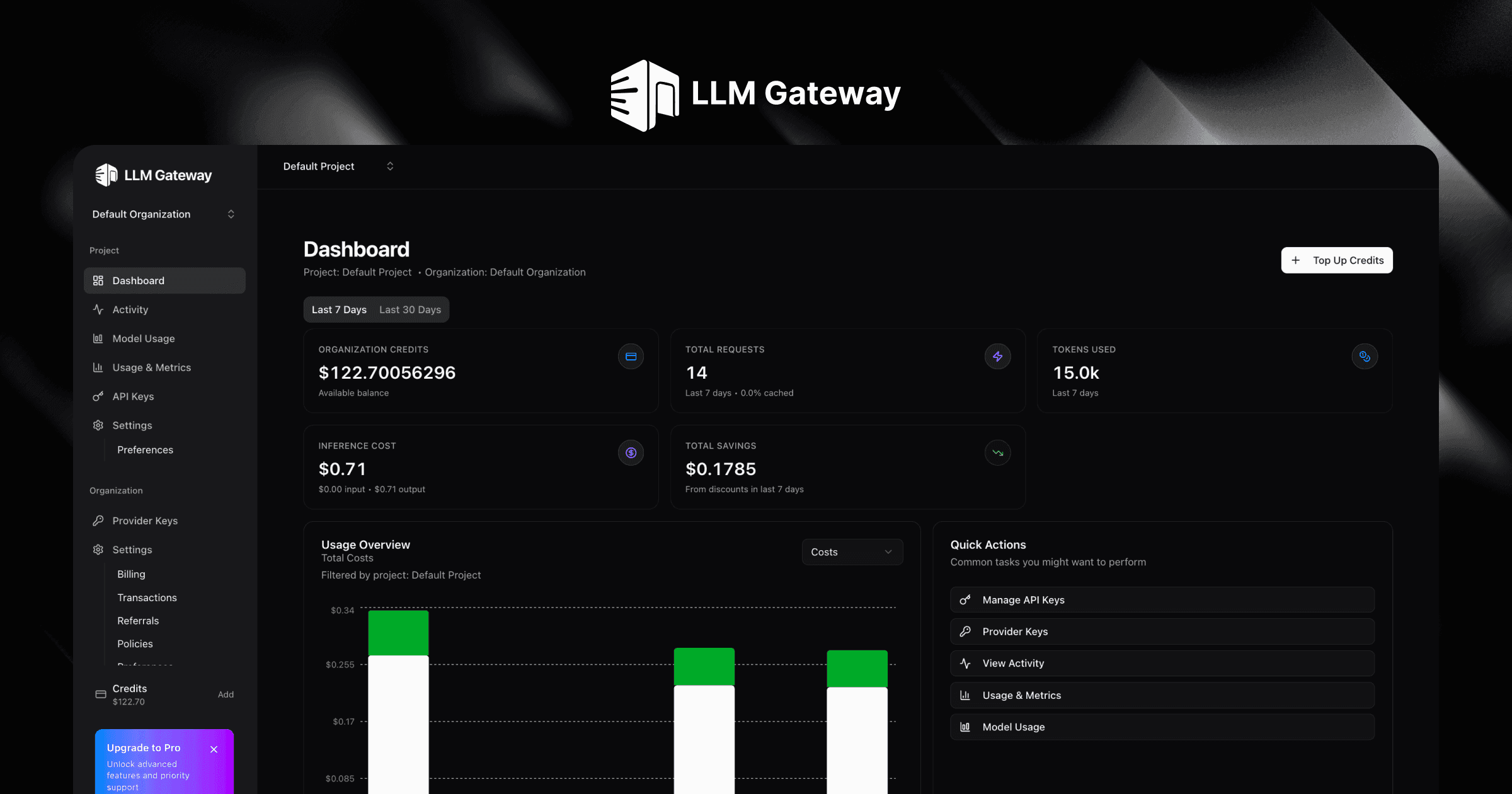

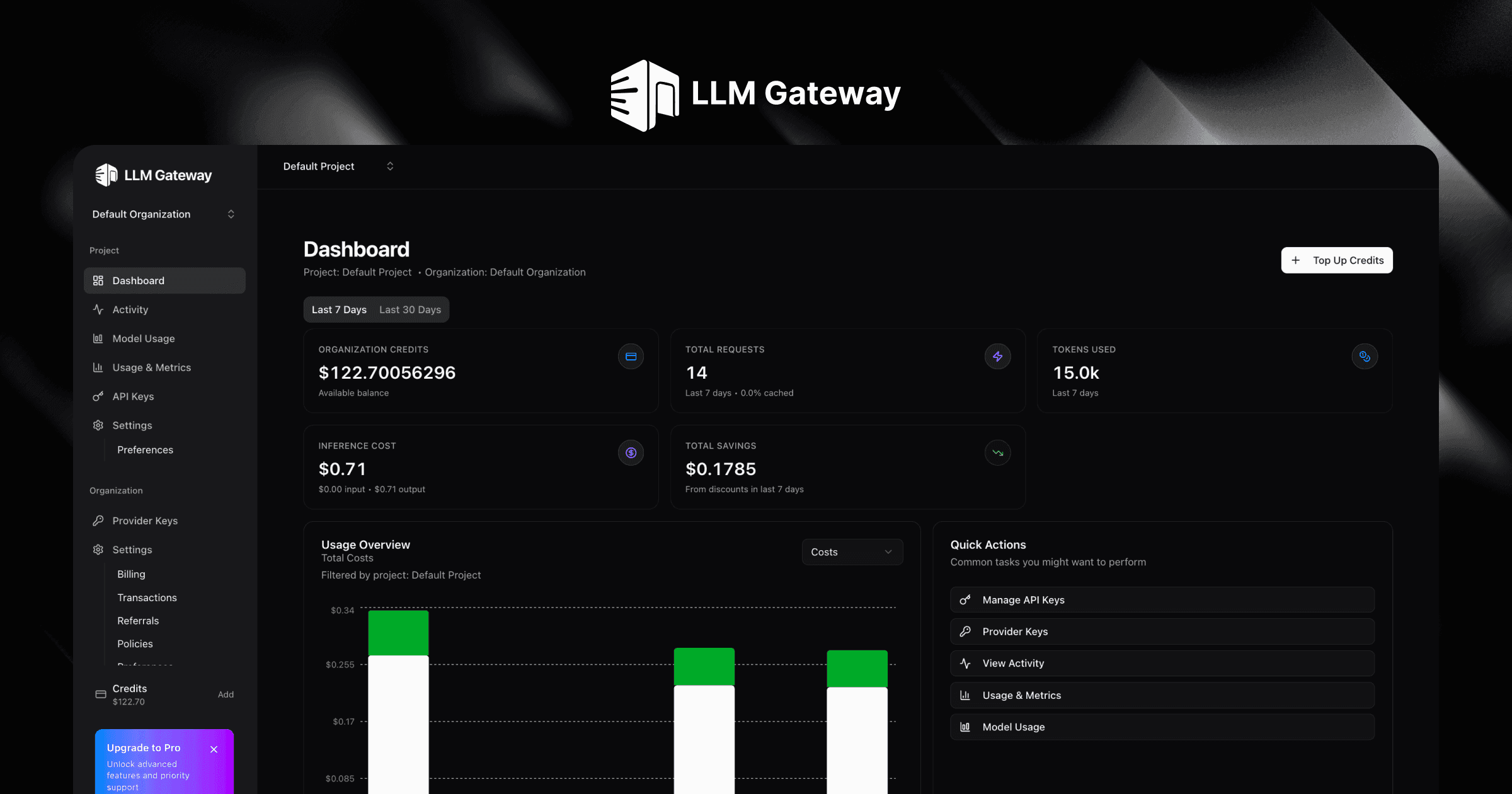

Comprehensive Analytics Dashboard: Real-time monitoring tracks request volumes, response latencies, cost distribution, and error rates across all connected providers, giving teams the data they need to tune their usage.

Advanced Load Balancing: Customizable rules distribute requests across multiple providers to prevent bottlenecks and maintain availability during peak demand.

Automated Provider Fallback: Configurable backup providers keep the service running by automatically rerouting requests when a primary provider is down or degraded.
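To make the caching and fallback features more concrete, here is a minimal sketch of how the two can interact. It is not LLM Gateway's actual implementation: the `FallbackGateway` class, the `ProviderError` exception, and the stub providers are all hypothetical names invented for illustration, and a real gateway would call remote APIs rather than local functions.

```python
import hashlib
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical provider type: takes a prompt, returns text, raises on failure.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a provider stub to simulate downtime."""


def cache_key(model: str, prompt: str) -> str:
    # Identical (model, prompt) pairs always map to the same key.
    raw = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class FallbackGateway:
    """Illustrative only: in-memory cache plus ordered provider fallback."""

    def __init__(self, providers: List[Tuple[str, Provider]]) -> None:
        self.providers = providers  # ordered, primary first
        self.cache: Dict[str, str] = {}
        self.upstream_calls = 0  # counts attempts made against providers

    def complete(self, model: str, prompt: str) -> Tuple[str, str]:
        """Return (text, source), where source is a provider name or 'cache'."""
        key = cache_key(model, prompt)
        if key in self.cache:
            return self.cache[key], "cache"

        errors = []
        for name, provider in self.providers:
            self.upstream_calls += 1
            try:
                text = provider(prompt)
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")
                continue  # try the next provider in the list
            self.cache[key] = text
            return text, name
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise ProviderError("simulated outage")


def healthy_backup(prompt: str) -> str:
    return f"echo: {prompt}"


gateway = FallbackGateway([("primary", flaky_primary), ("backup", healthy_backup)])
first = gateway.complete("demo-model", "hello")   # primary fails, backup answers
second = gateway.complete("demo-model", "hello")  # served from cache
```

In this sketch the first call costs two upstream attempts (the failed primary and the backup), while the repeated call costs none, which is the saving the caching feature describes.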

How It Works

Integration happens through the gateway’s OpenAI-compatible API, so existing implementations need only minimal code changes. Administrators configure routing rules and provider preferences that define how requests are handled in different scenarios. When a request arrives, the gateway makes a routing decision in real time against the configured criteria: performance thresholds, cost parameters, and reliability indicators. It also records analytics for every transaction, which supports ongoing tuning of LLM strategy and cost.
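The routing step described above can be sketched as a filter followed by a ranking. This is an illustration under stated assumptions, not the gateway's real algorithm: `ProviderStats`, `RoutingPolicy`, `choose_provider`, the provider and model names, and all numbers are hypothetical. The only part taken from a real format is the request body shape (`model` plus a `messages` list of `role`/`content` entries), which follows OpenAI's chat completions format.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ProviderStats:
    """Hypothetical per-provider metrics a gateway might track."""
    name: str
    model: str
    p95_latency_ms: float
    cost_per_1k_tokens: float
    success_rate: float


@dataclass(frozen=True)
class RoutingPolicy:
    """Hypothetical administrator-configured thresholds."""
    max_latency_ms: float
    max_cost_per_1k_tokens: float
    min_success_rate: float


def choose_provider(
    stats: List[ProviderStats], policy: RoutingPolicy
) -> Optional[ProviderStats]:
    # Keep only providers that satisfy every threshold.
    eligible = [
        s for s in stats
        if s.p95_latency_ms <= policy.max_latency_ms
        and s.cost_per_1k_tokens <= policy.max_cost_per_1k_tokens
        and s.success_rate >= policy.min_success_rate
    ]
    if not eligible:
        return None
    # Among eligible providers, prefer the cheapest, then the fastest.
    return min(eligible, key=lambda s: (s.cost_per_1k_tokens, s.p95_latency_ms))


def build_chat_request(model: str, user_message: str) -> Dict[str, object]:
    # Request body in OpenAI chat completions format.
    return {"model": model, "messages": [{"role": "user", "content": user_message}]}


stats = [
    ProviderStats("provider-a", "model-a", 900.0, 0.50, 0.999),
    ProviderStats("provider-b", "model-b", 400.0, 0.80, 0.995),
    ProviderStats("provider-c", "model-c", 300.0, 0.20, 0.900),  # too unreliable
]

relaxed = RoutingPolicy(max_latency_ms=1000.0, max_cost_per_1k_tokens=1.0, min_success_rate=0.99)
strict = RoutingPolicy(max_latency_ms=500.0, max_cost_per_1k_tokens=1.0, min_success_rate=0.99)

chosen_relaxed = choose_provider(stats, relaxed)  # cheapest eligible provider
chosen_strict = choose_provider(stats, strict)    # only one meets the latency cap
request_body = build_chat_request(chosen_relaxed.model, "Summarize this ticket.")
```

Filtering on hard thresholds before ranking keeps the policy easy to reason about: a provider that violates any limit is never chosen, however cheap it is.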

Use Cases

The platform fits several kinds of teams:

Enterprise AI Development Teams: Development teams use routing and automated fallback to keep applications performant and cost-effective as provider offerings and service availability change.

Organizations with a Multi-Provider AI Strategy: Large enterprises centralize LLM management, reduce vendor dependencies, and maintain consistent service levels across business units and applications under a single governance framework.

Research and Analytics Teams: Research groups run A/B tests across different LLMs, collect performance metrics, and use the results to select the best model for a given task or workflow.
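For the A/B testing use case, one common technique is deterministic, hash-based assignment, so that the same request or user always lands on the same model. The sketch below shows that general technique only; the source does not describe how LLM Gateway splits traffic, and `assign_variant`, the model names, and the weights are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Dict


def assign_variant(request_id: str, weights: Dict[str, int]) -> str:
    """Map an ID to a variant, in proportion to integer weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive integer")
    # Hash the ID so assignment is stable across runs and processes.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total
    cumulative = 0
    for variant, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return variant
    raise AssertionError("unreachable: bucket is always below total")


# Hypothetical 50/50 split between two models.
weights = {"model-a": 50, "model-b": 50}
counts = Counter(assign_variant(f"req-{i}", weights) for i in range(1000))
```

Because the assignment depends only on the ID and the weights, results can be joined back to variants later without storing the assignment anywhere.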

Pros & Cons

Advantages

Streamlined Multi-Provider Integration: A single interface for multiple LLM APIs reduces development and maintenance overhead.

Performance and Cost Optimization: Routing combined with analytics lets organizations tune LLM usage for both cost and performance.

Centralized Operational Control: One dashboard covers monitoring and management of all LLM requests, performance metrics, and costs across providers.

Vendor Independence: Organizations can switch or combine LLM providers without rewriting their integration, which avoids vendor lock-in and preserves operational continuity.

Disadvantages

Added Latency: Routing requests through an extra gateway layer adds some latency compared to direct API calls, though caching and routing gains typically offset it.

Configuration Management Requirements: Setting up routing rules, provider preferences, and monitoring takes planning up front and ongoing maintenance afterward.

Gateway Dependency: LLM operations depend on the gateway’s reliability and uptime, so the gateway itself needs redundancy and monitoring.

How Does It Compare?

The LLM gateway landscape in 2025 includes several specialized products, each aimed at different organizational priorities and technical requirements.

Portkey positions itself as an enterprise-focused platform with an emphasis on security features, guardrails, and prompt management. Portkey is strong in governance and compliance scenarios, while LLM Gateway offers simpler routing with lower operational overhead and more transparent pricing.

OpenRouter operates as a model marketplace offering access to 300+ models with automatic failover, but it charges a 5% markup on all requests and has no self-hosting option. LLM Gateway differs in being MIT-licensed open source, charging zero gateway fees on Pro plans when you use your own API keys, and supporting full self-hosting.

Helicone specializes in high-performance observability with proxy-based integration and is particularly strong in cost tracking and caching. LLM Gateway offers more extensive routing logic and multi-provider fallback while remaining competitive on performance.

TensorZero is an open-source alternative that provides inference optimization with built-in experimentation features. LLM Gateway focuses on routing and provider management rather than inference optimization, so the two can be complementary in complex architectures.

Overall, LLM Gateway’s strength lies in combining capable routing with operational simplicity. It offers both cloud-hosted and self-hosted deployment while keeping costs transparent and preserving vendor independence.
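The fee difference mentioned in the OpenRouter comparison is simple arithmetic, worked through below. The 5% rate is the figure quoted above; the $1,000 monthly provider spend is a hypothetical input, `total_with_markup` is an illustrative helper, and the calculation ignores any plan subscription price, which the source does not state.

```python
from decimal import Decimal


def total_with_markup(provider_spend: Decimal, markup_rate: Decimal) -> Decimal:
    """Total cost when a percentage markup is applied on top of provider spend."""
    total = provider_spend * (Decimal("1") + markup_rate)
    return total.quantize(Decimal("0.01"))  # round to cents


monthly_spend = Decimal("1000")  # hypothetical provider spend in dollars

with_markup = total_with_markup(monthly_spend, Decimal("0.05"))  # 5% markup
no_gateway_fee = total_with_markup(monthly_spend, Decimal("0"))  # own API keys
difference = with_markup - no_gateway_fee
```

Since the markup scales linearly with spend, the gap grows in proportion to usage, so it matters more at high request volumes than at low ones.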

Final Thoughts

LLM Gateway addresses the main problems of managing LLMs across multiple providers. Its multi-provider integration, routing, and analytics help development teams and enterprises build more resilient, cost-effective, and performant AI applications. It does add a management layer, but the gains in optimization, vendor independence, and operational visibility make it a useful infrastructure component for organizations scaling their LLM operations. Its open-source availability and flexible deployment options also suit organizations that need full control over their AI infrastructure while staying compatible with existing OpenAI-based implementations.