Overview

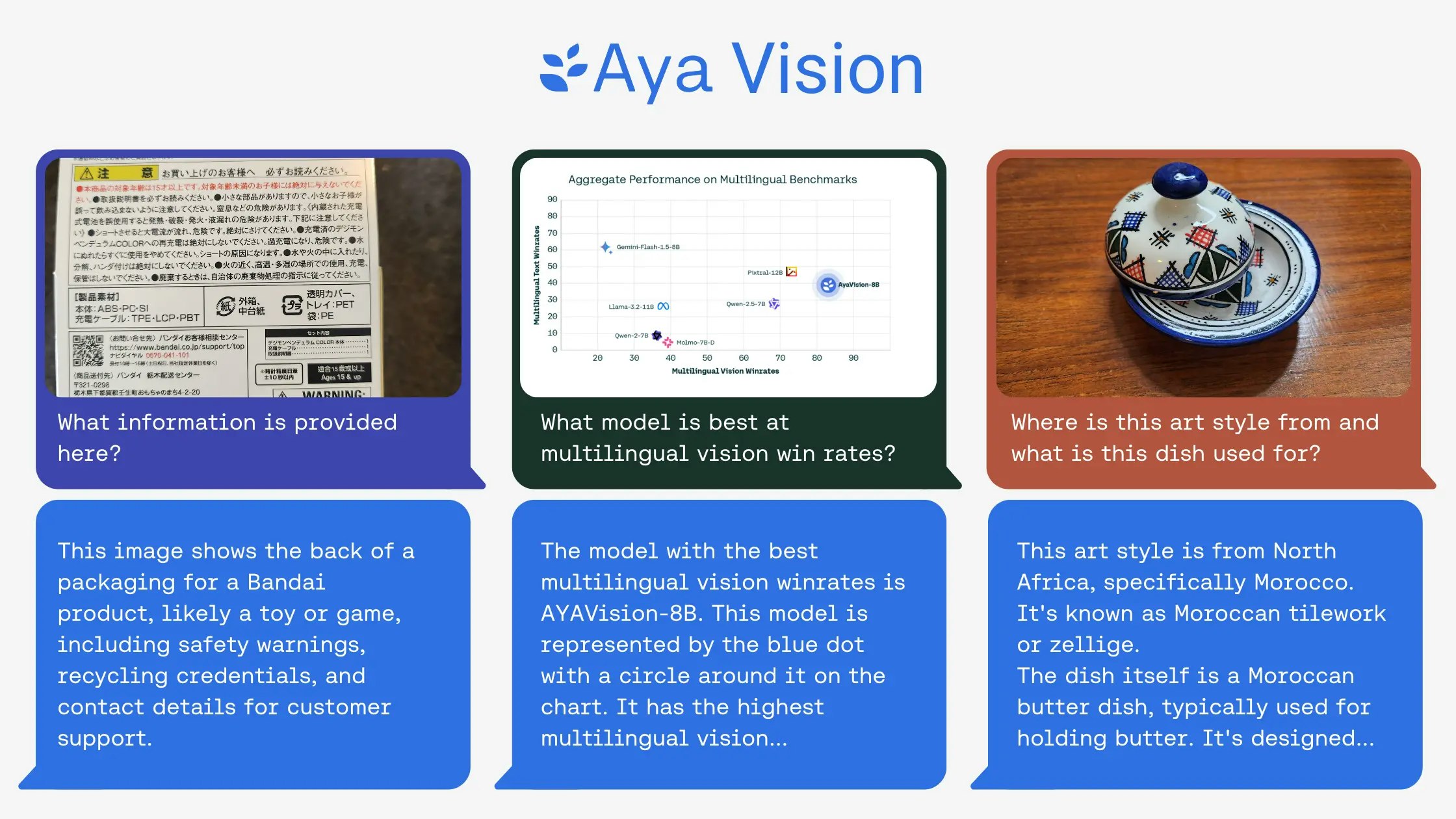

In the rapidly evolving world of AI, multilingual capabilities are becoming increasingly vital. Enter Aya Vision, a set of open-weight, multilingual, and multimodal AI models developed by Cohere For AI. The models are designed for vision-language tasks across many languages, and Cohere reports that they outperform several larger, more resource-intensive models on multilingual benchmarks. Let’s dive into what makes Aya Vision a noteworthy contender in the AI landscape.

Key Features

Aya Vision boasts a compelling set of features that make it a powerful tool for various applications:

- Multilingual vision-language capabilities: Aya Vision is built to understand and process both images and text in a wide range of languages, making it ideal for global applications.

- Open-weight models: Unlike many proprietary models, Aya Vision’s open weights allow for greater transparency, customization, and community contribution.

- Available in 8B- and 32B-parameter sizes: This offers flexibility in computational requirements, allowing users to choose the model size that best suits their needs.

- High performance in benchmarks: Cohere reports strong results on multilingual vision-language benchmarks, often against considerably larger models.

- Access via Hugging Face and Kaggle: This provides easy access and integration for developers and researchers, fostering collaboration and innovation.
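Because the weights are published on Hugging Face, the models can be run with the `transformers` library. The sketch below follows the usage pattern shown on the model card as we understand it, and assumes `transformers` 4.50 or newer (the release that added Aya Vision support), `torch`, `accelerate` (for `device_map="auto"`), and the repository ID `CohereForAI/aya-vision-8b`, which may since have moved to a different organization name on the Hub. The helper names `build_messages` and `describe_image` are hypothetical.

```python
def build_messages(image_url: str, prompt: str) -> list:
    """Build a single-turn chat in the multimodal format used by transformers chat templates."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": prompt},
            ],
        }
    ]


def describe_image(image_url: str, prompt: str,
                   model_id: str = "CohereForAI/aya-vision-8b",  # assumed Hub ID
                   max_new_tokens: int = 300) -> str:
    # Heavy imports are deferred so build_messages works without torch installed.
    import torch
    from transformers import AutoModelForImageTextToText, AutoProcessor

    processor = AutoProcessor.from_pretrained(model_id)
    model = AutoModelForImageTextToText.from_pretrained(
        model_id, device_map="auto", torch_dtype=torch.float16
    )
    # The processor's chat template inserts the image placeholder tokens and tokenizes the text.
    inputs = processor.apply_chat_template(
        build_messages(image_url, prompt),
        padding=True,
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens,
                            do_sample=True, temperature=0.3)
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return processor.tokenizer.decode(new_tokens, skip_special_tokens=True)
```

For example, `describe_image("https://example.com/photo.jpg", "¿Qué muestra esta imagen?")` would ask, in Spanish, what the image shows. Swapping `model_id` for the 32B repository trades more memory for higher quality.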

How It Works

Aya Vision follows the transformer-based design common to modern vision-language models: a vision encoder turns an image into a sequence of feature vectors, a connector projects those features into the embedding space of a multilingual language model, and the language model then reads the image and text tokens together to generate a response. The models are trained on diverse multilingual data that aligns images with text, which lets them connect what they see with what they read and write across many languages.
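To make that general pattern concrete, here is a deliberately tiny, framework-free sketch: split the image into patches, project each patch into the language model's embedding size, and place those vectors in front of the text token embeddings. The dimensions, random weights, and helper names (`patchify`, `project`, `fuse`) are illustrative assumptions; this is not Aya Vision's actual encoder or connector, which use learned neural networks rather than a single random matrix.

```python
import random


def patchify(image, patch):
    """Split a 2-D grayscale image (list of rows) into flattened patch x patch blocks.

    Leftover rows/columns that don't fill a whole patch are dropped.
    """
    h, w = len(image), len(image[0])
    patches = []
    for r in range(0, h - h % patch, patch):
        for c in range(0, w - w % patch, patch):
            patches.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return patches


def project(vectors, weights):
    """Linear projection: multiply each length-k vector by a k x d weight matrix."""
    return [[sum(v[i] * weights[i][j] for i in range(len(v))) for j in range(len(weights[0]))]
            for v in vectors]


def fuse(image, token_ids, embed_table, weights, patch=2):
    """Return one sequence: projected image patches followed by text token embeddings."""
    image_tokens = project(patchify(image, patch), weights)
    text_tokens = [embed_table[t] for t in token_ids]
    return image_tokens + text_tokens


random.seed(0)
d_model = 4  # toy hidden size shared by image and text tokens
image = [[random.random() for _ in range(4)] for _ in range(4)]  # toy 4x4 "image"
weights = [[random.gauss(0, 0.1) for _ in range(d_model)] for _ in range(2 * 2)]  # patch size 4 -> d_model
embed_table = {tok: [random.gauss(0, 1) for _ in range(d_model)] for tok in range(10)}
seq = fuse(image, [3, 7, 1], embed_table, weights)
print(len(seq), len(seq[0]))  # 4 image patches + 3 text tokens, each of width 4
```

Running it prints `7 4`: the language model would see one sequence of seven vectors, four derived from the image and three from the text, which is the sense in which image and text are processed "together".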

Use Cases

Aya Vision’s unique capabilities open doors to a variety of exciting applications:

- Multilingual image captioning: Generate accurate and contextually relevant captions for images in multiple languages.

- Visual question answering: Answer questions about images asked in different languages, drawing on both the visual and the textual information.

- Cross-lingual image search: Search for images with a text query in one language and retrieve relevant results based on their visual content, even when the images' existing descriptions are in another language.

- Educational tools: Develop interactive learning tools that can explain visual concepts in multiple languages, making education more accessible.

- Research in AI fairness and inclusivity: Study and mitigate biases in AI models across different languages and cultures, promoting fairness and inclusivity.
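Multilingual captioning and visual question answering largely come down to sending the same image with prompts in different languages. The sketch below only prepares such requests in the chat-message format accepted by Hugging Face `transformers` multimodal chat templates; the prompt wording, language codes, and the `captioning_requests` helper are illustrative assumptions, and each request would still need to go through a processor and model to produce a caption. For question answering, the caption prompt would simply be replaced by a question.

```python
# Hand-written prompts asking for a one-sentence description (illustrative choices).
CAPTION_PROMPTS = {
    "en": "Describe this image in one sentence.",
    "fr": "Décris cette image en une phrase.",
    "es": "Describe esta imagen en una frase.",
    "de": "Beschreibe dieses Bild in einem Satz.",
    "ja": "この画像を一文で説明してください。",
}


def captioning_requests(image_url, languages):
    """Return {language code: chat messages} asking for a caption in that language."""
    requests = {}
    for lang in languages:
        if lang not in CAPTION_PROMPTS:
            raise KeyError(f"no prompt for language {lang!r}")
        requests[lang] = [{
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": CAPTION_PROMPTS[lang]},
            ],
        }]
    return requests
```

Prompting in the target language, rather than asking in English for output in another language, keeps each request closer to how a native speaker would actually use the model.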

Pros & Cons

Like any technology, Aya Vision has its strengths and weaknesses. Let’s examine the advantages and disadvantages:

Advantages

- Broad multilingual support: Its core strength is handling a wide range of languages effectively.

- Open weights: This fosters transparency, customization, and community collaboration.

- Strong performance: According to Cohere's benchmarks, both model sizes compare well against larger models.

- Community-supported: Being open-weight, it benefits from the collective knowledge and contributions of the AI community.

Disadvantages

- Large model size requires significant compute: Even the 8B model needs a GPU with plenty of memory, and the 32B model needs substantially more.

- Still under academic evaluation: The models are recent, so independent academic evaluation of their strengths and limitations is still ongoing.
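A back-of-the-envelope calculation shows why compute is a concern. Storing the weights alone takes roughly the number of parameters times the bytes per parameter; the sketch below applies that to both sizes at common precisions. It treats "8B" and "32B" as exact counts and ignores activations, the KV cache, and framework overhead, so real memory use will be higher. The function name `weight_memory_gb` is hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in [("Aya Vision 8B", 8e9), ("Aya Vision 32B", 32e9)]:
    sizes = ", ".join(f"{p}: {weight_memory_gb(params, p):.0f} GB" for p in BYTES_PER_PARAM)
    print(f"{name}: {sizes}")
```

In 16-bit precision that comes to about 16 GB of weights for the 8B model and about 64 GB for the 32B model, which is more than a single consumer GPU holds unless the weights are quantized.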

How Does It Compare?

When comparing Aya Vision to other vision-language models, several key differences emerge. OpenAI's CLIP is a contrastive model that matches images with text rather than generating text, and its original release was trained mainly on English data, so its multilingual support is limited. DeepMind's Flamingo was never released with public weights, which rules out customization and community contribution. Google's PaLI-X shares similar goals with Aya Vision but is likewise not openly available to the broader AI community. Aya Vision distinguishes itself through its combination of strong multilingual capabilities, open weights, and accessibility.

Final Thoughts

Aya Vision represents a significant step forward in the development of multilingual and multimodal AI. Its open weights, strong performance, and accessibility make it a valuable tool for researchers, developers, and organizations seeking to apply AI across diverse languages and cultures. While the compute requirements and ongoing academic evaluation are factors to consider, the potential benefits of Aya Vision are substantial.