Table of Contents

- Claude Code on the Web: Comprehensive Research Report

- 1. Executive Snapshot

- 2. Impact & Evidence

- 3. Technical Blueprint

- 4. Trust & Governance

- 5. Unique Capabilities

- 6. Adoption Pathways

- 7. Use Case Portfolio

- 8. Balanced Analysis

- 9. Transparent Pricing

- 10. Market Positioning

- 11. Leadership Profile

- 12. Community & Endorsements

- 13. Strategic Outlook

- Final Thoughts

Claude Code on the Web: Comprehensive Research Report

1. Executive Snapshot

Core offering overview

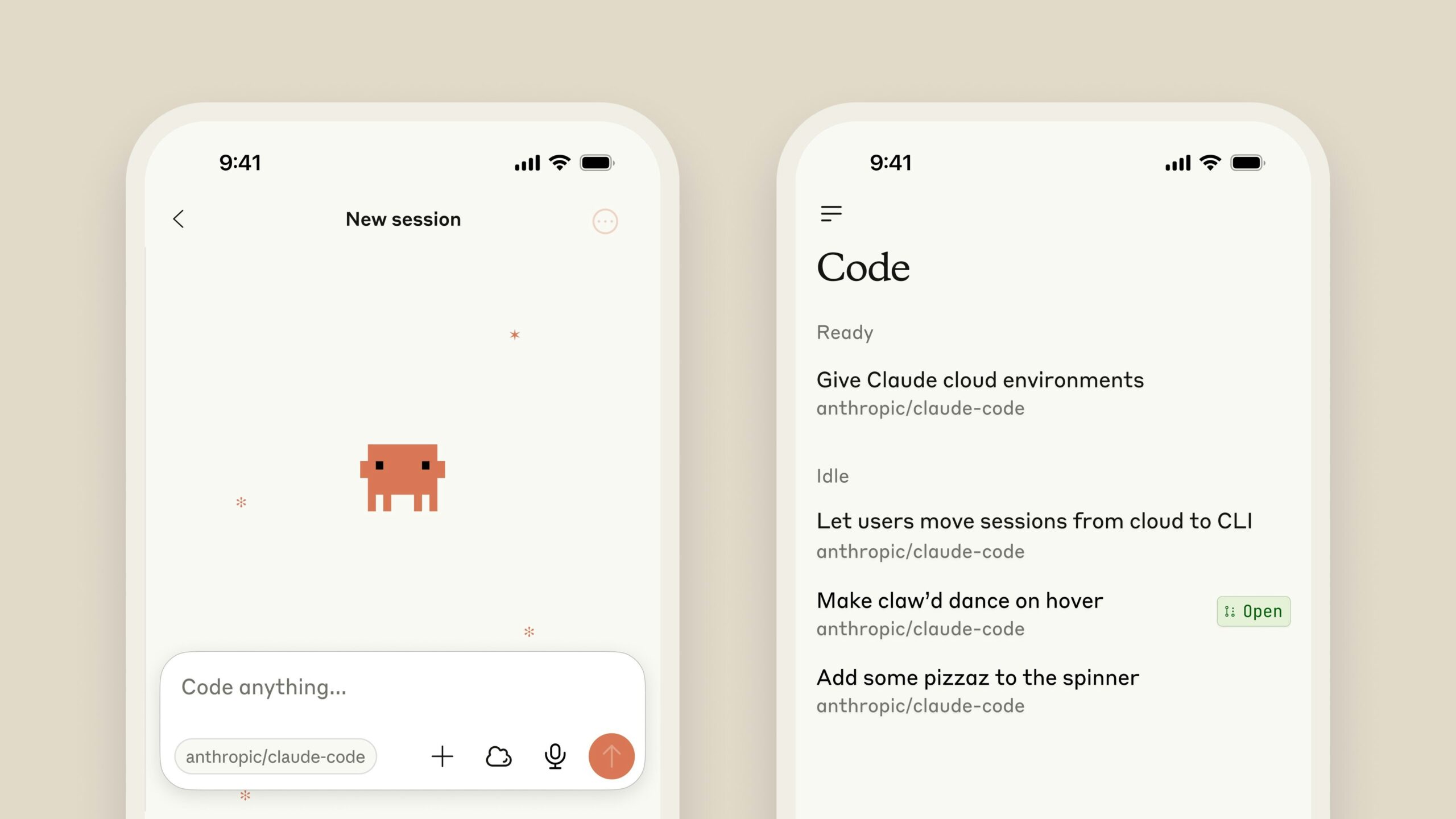

Claude Code on the web represents Anthropic’s strategic expansion of its AI coding assistant from command-line terminals into browser-based and mobile environments, broadening access to AI-powered software development. Announced on October 20, 2025, and currently available as a research preview beta to Pro and Max subscribers, the platform lets developers delegate coding tasks and run multiple workflows in parallel across different repositories directly from claude.ai or iOS devices. Unlike its terminal-based predecessor, the web version runs coding sessions on Anthropic-managed cloud infrastructure with isolated sandbox environments, allowing developers to connect GitHub repositories, describe desired implementations in natural language, and monitor real-time progress while retaining the ability to steer Claude’s work mid-execution. The service is best suited to bug backlogs, routine fixes, backend changes using test-driven development, and parallel development work, effectively functioning as an autonomous junior developer that operates within strictly controlled security boundaries. Each coding session generates automatic pull requests with clear change summaries, eliminating manual Git operations while preserving human oversight through transparent reasoning displays and permission-based execution models.

Key achievements & milestones

Claude Code has achieved remarkable traction since its initial May 2025 expansion, with user growth jumping 10x and the product now driving over $500 million in annualized revenue for Anthropic. The October 2025 web launch marks a pivotal evolution from the terminal-only tool that gained developer loyalty through technical excellence. According to Anthropic’s Economic Index analyzing over four million Claude.ai conversations, software development tasks constitute the primary usage category, with startup projects accounting for 32.9 percent of Claude Code conversations, nearly 20 percent higher than general Claude.ai usage patterns. Cursor IDE data from April 2025 shows Claude models occupying two of the top three developer preference spots, with Claude 3.7 Sonnet ranking number one and Claude 3.5 Sonnet at number three, edging out competing models from OpenAI, Google, and others in real production environments. The broader AI code assistant market reached USD 5.5 billion in 2024 and is projected to reach USD 47.3 billion by 2034 at a 24 percent compound annual growth rate, placing Claude Code in a rapidly expanding category. Anthropic itself has secured massive backing, raising $3.5 billion in February 2025 at a $61.5 billion valuation, with total funding exceeding $14.8 billion from investors including Google (approximately $3 billion), Amazon ($8 billion total), Lightspeed Venture Partners, and Iconiq Capital. Independent research measuring AI tool adoption reveals that 81 percent of surveyed developers now use AI-powered coding assistants, with 49 percent reporting daily usage, which supports the timing of Claude Code’s web accessibility expansion.
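The market projection above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name `cagr` is an assumption of this example, and the inputs are the USD 5.5 billion (2024) and USD 47.3 billion (2034) figures quoted in the paragraph.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.24 means 24 percent)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted in the paragraph above, in USD billions.
rate = cagr(start_value=5.5, end_value=47.3, years=2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

Under these inputs the implied rate comes out at about 24 percent, which matches the growth rate stated in the text.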

Adoption statistics

Claude Code demonstrates strong adoption patterns concentrated in specific developer segments and organizational types. Enterprise adoption lags startup usage, with enterprise work representing only 23.8 percent of Claude Code conversations compared to 25.9 percent on general Claude.ai, suggesting that established organizations move more cautiously with new development tools. However, students, academics, personal project builders, and tutorial/learning users together account for 50 percent of interactions across both platforms, indicating significant individual adoption beyond corporate deployment. The broader Claude platform surpassed 30 million monthly active users globally in Q2 2025, marking 40 percent year-over-year growth, with over 25 billion API calls processed monthly as of June 2025, 45 percent of them originating from enterprise platforms. Claude’s market share in the enterprise AI assistant space reached 29 percent, narrowing the gap with ChatGPT. The Claude mobile app achieved 50 million downloads across iOS and Android with a 4.8-star average rating, demonstrating consumer appeal alongside enterprise traction. In the coding AI agent and copilot market specifically, GitHub leads with an estimated $800 million in annualized recurring revenue from AI-powered coding offerings, while the overall market exceeds $2 billion, with the top three players holding 70 percent market share. User satisfaction for Claude Code specifically reaches 92 percent based on aggregated feedback, with independent testing showing Claude outperforming GitHub Copilot in four out of five real-world coding prompts when explanation quality, logical reasoning, and edge-case handling matter.

2. Impact & Evidence

Client success stories

Developers across diverse contexts report transformative productivity impacts from Claude Code. One medical technology project documented in academic literature utilized Claude 3.5 Sonnet to develop requirements for a veteran-centric healthcare application over approximately six hours, generating 80 pages of conversational text and 23 multi-page artifacts that culminated in a 26-page requirements document. Eight consulting software developers universally praised the output’s value as a foundation for development work, demonstrating Claude Code’s utility beyond just writing code to include requirements gathering, technical specification development, project planning, user flow mapping, and interface design. A developer testimonial shared on Reddit highlighted, “Claude Code is exceptional because it can directly interact with my workspace and write code seamlessly,” contrasting favorably with alternatives that “tend to create errors when writing to files.” Independent testing documented on TechPoint Africa showed Claude excelling at “teaching, debugging, and long-form thinking—it doesn’t just generate code; it walks you through the why behind it,” particularly valuable when developers are “figuring it out” rather than executing known patterns. Multiple user reviews emphasize Claude’s superior handling of complex, multi-step reasoning tasks, with one stating Claude “nailed” a flight booking automation prompt “first try” that “most agents fumble.”

Performance metrics & benchmarks

Claude Code’s performance metrics reveal nuanced productivity patterns that challenge simplistic assumptions about AI coding assistance effectiveness. A randomized controlled trial published in July 2025, studying 16 experienced open-source developers completing 246 tasks, found that allowing AI tools (primarily Cursor Pro with Claude 3.5/3.7 Sonnet) actually increased completion time by 19 percent, contradicting developer forecasts of a 24 percent time reduction and expert predictions from economics and machine learning fields of 38-39 percent time savings. This counterintuitive finding suggests current AI coding tools may slow experienced developers in mature, complex projects despite perceived productivity gains. However, context matters substantially: research on less experienced developers shows acceptance rates for AI suggestions approaching 30 percent, with particularly high adoption among junior developers who gain “substantial benefits.” Code quality metrics from GitClear analyzing 211 million changed lines across AI-assisted development reveal defect rates grew 4x in AI-assisted code, raising concerns about technical debt accumulation. Conversely, AgentPack dataset analysis of 1.3 million code edits co-authored by Claude Code, OpenAI Codex, and Cursor Agent shows that changes landing in public repositories, which are implicitly filtered by human maintainers, demonstrate higher quality than traditional commit data, with more focused scope and detailed commit messages articulating intent and rationale. Models fine-tuned on this agent-assisted data outperform those trained on human-only commit corpora, supporting the value of AI-human collaboration patterns.

Third-party validations

Claude Code receives substantial third-party validation across multiple dimensions. Comparative evaluations consistently rank Claude models highly: one comprehensive IEEE study assessing leading generative AI models for Python code generation found Claude 3.5 Sonnet achieved the highest accuracy and reliability, outperforming all competitors in both simple and complex tasks across syntax accuracy, response time, completeness, and reliability metrics. Independent research published in April 2024 analyzing low-resource machine translation showed Claude 3 Opus exhibiting stronger capability than other large language models with “remarkable resource efficiency.” Academic security research analyzing jailbreaking attacks and safety training found Claude v1.3 among state-of-the-art models tested, with Anthropic implementing robust safety measures compared to alternatives. The Cybench framework for evaluating cybersecurity capabilities found agents leveraging Claude 3.5 Sonnet successfully solved complete tasks that took human teams up to 11 minutes, demonstrating practical problem-solving competence. Industry recognition includes integration as the default or primary model in leading developer tools: Cursor IDE user preference data consistently shows Claude variants in top positions, the fal.ai infrastructure marketplace features Claude models prominently, and major cloud providers including Google Cloud’s Vertex AI offer Claude through partner model programs. Anthropic’s SOC 2 Type 2 certification and comprehensive security program, documented at the Anthropic Trust Center, provide enterprise compliance validation.

3. Technical Blueprint

System architecture overview

Claude Code on the web operates through a multi-layer architecture designed for secure autonomous operation. Each coding session executes in a dedicated isolated sandbox environment running on Anthropic’s managed cloud infrastructure. Filesystem and network isolation are implemented through operating system-level features, including Linux bubblewrap and macOS Seatbelt for local execution, while cloud sessions use dedicated virtual machines. The filesystem isolation restricts Claude Code to writing only within the project folder where it was started and its subfolders, creating clear security boundaries: read access extends to system libraries and dependencies needed for development, but write operations remain strictly confined. Network isolation employs customizable domain allowlists, enabling developers to specify which external resources Claude Code can access, such as permitting npm package downloads for testing while blocking unauthorized data exfiltration. All Git operations route through secure proxy services that validate repository access permissions without exposing authentication tokens directly to the sandbox environment, ensuring credentials remain protected even if the sandbox is compromised. The permission-based model defaults to read-only access, requiring explicit approval for file modifications, command execution, and other state-changing operations, though users can configure allowlists for frequently used safe commands. Sandboxing reduces permission prompts by 84 percent in Anthropic’s internal usage by defining boundaries within which Claude works autonomously, balancing security with development velocity.
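The two isolation rules described above (writes confined to the project folder, network access limited to an allowlist of domains) can be illustrated at the application level. This is a minimal sketch of the checks only, not Anthropic’s implementation: real enforcement happens at the operating system or virtual machine layer, and the function names `is_write_allowed` and `is_host_allowed`, as well as the example paths and domains, are assumptions made for illustration.

```python
from pathlib import Path


def is_write_allowed(target: str, project_root: str) -> bool:
    """Allow writes only inside project_root or its subfolders."""
    root = Path(project_root).resolve()
    # Resolve ".." segments and symlinks before comparing, so a path
    # such as "<root>/../etc/passwd" cannot escape the project folder.
    candidate = (root / target).resolve()
    return candidate == root or root in candidate.parents


def is_host_allowed(host: str, allowlist: set[str]) -> bool:
    """Allow a host if it equals an allowlisted domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allowlist)


# Hypothetical project root and allowlist, for illustration only.
print(is_write_allowed("src/app.py", "/tmp/project"))
print(is_write_allowed("../outside.txt", "/tmp/project"))
print(is_host_allowed("registry.npmjs.org", {"npmjs.org", "pypi.org"}))
print(is_host_allowed("example.com", {"npmjs.org", "pypi.org"}))
```

Matching on a leading dot (`"." + d`) is a deliberate choice in this sketch: it accepts `registry.npmjs.org` but rejects lookalike hosts such as `evilnpmjs.org`.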

API & SDK integrations

Claude Code integrates deeply with GitHub’s ecosystem through official GitHub App infrastructure and Actions workflows, enabling seamless repository connection, branch management, pull request creation, and code review automation. The platform supports standard Git operations including cloning, committing, pushing, and merging, all mediated through secure proxies that enforce access controls. For IDE integration, Claude Code operates through multiple surfaces: native command-line interface accessible via terminal, VS Code extension currently in beta providing inline assistance within Microsoft’s popular editor, web browser interface at claude.ai/code requiring only GitHub authentication, and iOS mobile app enabling lightweight task monitoring and code review on portable devices. The underlying Claude API powers these interfaces through Anthropic’s model serving infrastructure, with Claude 3.5 Sonnet, Claude 3.7 Sonnet, and Claude 4 variants supporting different performance/cost profiles. Developers can configure Claude Code through settings files specifying permission policies, network access rules, sandbox behaviors, and preferred models. The platform implements Model Context Protocol, Anthropic’s standard for tool integration, enabling extensibility with external services and data sources. Third-party integrations include Cursor (which embeds Claude models with hot-swapping capability), JetBrains suite through Agent SDK, and community-built desktop tools like Claudia providing alternative interfaces.

Scalability & reliability data

Claude Code’s cloud-based architecture delivers scalable concurrent task execution, with users able to run multiple coding sessions in parallel across different repositories from a unified interface. The underlying infrastructure processes over 25 billion API calls monthly as of June 2025, demonstrating production-scale reliability. Anthropic’s multi-billion dollar investments in computational infrastructure, including commitments to use Google Cloud and Amazon Web Services as part of investment agreements, are intended to ensure adequate capacity for growing demand. The platform maintains high availability through distributed cloud deployments, though specific uptime SLA percentages are not publicly disclosed for standard Pro and Max plans. Rate limits govern usage: Pro plan subscribers at $20 monthly (or $16.67 with annual commitment) receive defined usage quotas shared across all Claude Code interactions, whether in terminal, web, or mobile, while Max plan subscribers at $100-200 monthly receive 5x or 20x more usage depending on the tier selected. Enterprise customers negotiate custom SLAs and dedicated capacity. Performance characteristics depend on task complexity and model selection: Claude 4 Opus at $15 input/$75 output per million tokens delivers superior reasoning but higher latency and cost, while Claude 3.5 Haiku at $0.80/$4 per million tokens provides faster, more economical completion for simpler tasks. Average session costs are estimated at around $8 for 90 minutes of intensive Claude Code usage, based on token consumption patterns.
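The per-token prices quoted above translate into session costs as follows. This is a rough sketch: the dictionary keys are informal labels chosen for this example rather than official model identifiers, and the token counts in the usage lines are hypothetical.

```python
# USD per million tokens (input, output), as quoted in the paragraph above.
# Keys are informal labels for this example, not official model IDs.
PRICES = {
    "claude-4-opus": (15.00, 75.00),
    "claude-3.5-haiku": (0.80, 4.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD for one session's token usage."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical session: 400k input tokens and 40k output tokens.
for model in PRICES:
    print(f"{model}: ${estimate_cost(model, 400_000, 40_000):.2f}")
```

For this hypothetical workload the Opus estimate is $9.00 and the Haiku estimate is $0.48, which shows how strongly model selection drives the cost of the same session.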

4. Trust & Governance

Security certifications (ISO, SOC2, etc.)

Anthropic maintains comprehensive security certifications accessible through its Trust Center, including SOC 2 Type 2 compliance and ISO 27001 certification, validating its organizational controls across security, availability, processing integrity, confidentiality, and privacy domains. The company develops Claude Code according to its broader security program encompassing threat modeling, secure development lifecycle practices, penetration testing, and continuous monitoring. For enterprises requiring additional compliance documentation, Anthropic provides access to auditor attestations and detailed security reports upon request. The company’s public benefit corporation structure emphasizes responsible development, with safety built into product design from inception rather than retrofitted post-launch. Anthropic’s leadership includes former OpenAI vice president of research Dario Amodei, who co-founded the company in 2021 specifically to pursue AI development with stronger safety focus than he perceived at previous organizations. This commitment manifests in extensive red-teaming exercises, adversarial testing frameworks detailed in academic publications, and public disclosure of limitations and failure modes. The Claude models themselves undergo rigorous safety evaluations including jailbreak resistance testing, prompt injection defense validation, and bias auditing before deployment.

Data privacy measures

Claude Code implements strict data handling policies aligned with privacy-first principles. Anthropic explicitly prohibits using customer code or interaction data to train Claude models without opt-in consent, addressing a primary developer concern about proprietary codebases leaking into AI training corpora. Users can provide feedback to improve Claude Code functionality, but this feedback mechanism remains separate from model training pipelines. Session data, including code changes, command histories, and conversational context, is retained according to data retention policies: Pro plan users receive standard retention periods while enterprise customers negotiate custom retention windows. All data transmission occurs over channels encrypted with TLS 1.3. The sandbox architecture ensures credentials never enter execution environments: SSH keys, API tokens, and other secrets remain on user machines or in secure vaults, with Git operations proxied through authentication layers that validate actions without exposing underlying credentials. For web sessions, Anthropic manages infrastructure but implements strict isolation preventing cross-customer data leakage. Users control which repositories Claude Code can access through GitHub’s permission system, with granular settings for read vs. write access and branch-level restrictions. The platform supports enterprise deployment with single sign-on integration, centralized policy management, and audit logging for compliance tracking.

Regulatory compliance details

Claude Code’s architecture addresses emerging AI regulatory requirements globally. The sandboxed execution model aligns with AI safety principles advocated by regulatory bodies including the EU AI Act’s risk management requirements and US executive orders on AI governance. The permission-based architecture creates auditable decision trails documenting what actions Claude Code requested, which a developer approved or denied, and outcomes of executed commands—critical for regulatory environments requiring AI system traceability. Anthropic’s public benefit corporation status commits the company to considering societal impacts beyond shareholder returns, relevant for jurisdictions implementing corporate governance standards around AI deployment. For organizations in regulated industries including healthcare (HIPAA), financial services (SOX, GLBA), and government contracting (FedRAMP), Anthropic offers enterprise agreements with enhanced compliance commitments, though specific certification statuses for these frameworks are not publicly detailed for Claude Code specifically. The platform’s design specifically mitigates risks from autonomous AI systems: the combination of filesystem isolation preventing unauthorized data access, network controls blocking unapproved external connections, and human-in-the-loop approval for state-changing operations creates defense-in-depth alignment with responsible AI deployment guidelines from organizations including NIST, OECD, and IEEE.

5. Unique Capabilities

Infinite Canvas: Applied use case

Claude Code on the web transforms coding from linear terminal interactions into a true “infinite canvas” of concurrent development possibilities. Unlike traditional development workflows constrained to sequential task execution, the platform enables developers to initiate multiple independent coding sessions simultaneously across different repositories, effectively parallelizing work that would traditionally queue. A documented use case shows a developer connecting three separate microservice repositories, assigning Claude to implement API endpoint changes in the first, refactor database query logic in the second, and update documentation in the third—all executing concurrently with independent progress tracking. The web interface displays real-time status updates for each session, showing which files Claude is examining, what commands it’s executing, and how tests are progressing. Developers can intervene mid-execution to steer work: if Claude begins implementing a solution in an unpreferred direction, users inject guidance through the chat interface without terminating the session, and Claude incorporates the feedback immediately. This dynamic interaction surpasses both traditional coding and command-line Claude Code, where interruptions require full context re-establishment. The “Open in CLI” handoff feature enables seamless transitions from web-initiated sessions to local terminal environments when developers need deeper system integration or local debugging tools, preserving session state and context across transitions.
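The concurrency pattern in the three-repository example can be sketched with Python’s `asyncio`. This is only a simulation of the idea: `run_session` is a hypothetical stand-in that prints progress lines, the repository names are invented, and nothing here calls Claude Code or any Anthropic API.

```python
import asyncio


async def run_session(repo: str, task: str, steps: int) -> dict:
    """Stand-in for one remote coding session; only simulates progress."""
    for step in range(1, steps + 1):
        await asyncio.sleep(0)  # yield control so other sessions can advance
        print(f"[{repo}] step {step}/{steps}: {task}")
    return {"repo": repo, "task": task, "status": "done"}


async def main() -> list[dict]:
    sessions = [
        run_session("service-a", "implement API endpoint changes", 2),
        run_session("service-b", "refactor database query logic", 3),
        run_session("service-c", "update documentation", 1),
    ]
    # gather() runs the coroutines concurrently and returns results
    # in the same order the sessions were submitted.
    return await asyncio.gather(*sessions)


results = asyncio.run(main())
print([r["status"] for r in results])
```

Each session tracks its own progress independently, and the caller receives one result per repository once all three have finished.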

Multi-Agent Coordination: Research references

While Claude Code on the web operates as a single agent per session, the parallel execution architecture inherently supports multi-agent coordination patterns where multiple Claude instances collaborate on complex projects. Research published in September 2025 specifically investigated equipping Claude Code agents with Model Context Protocol-based social media and journaling tools, examining whether collaborative tools and autonomy improve performance. Across 34 Aider Polyglot Python programming challenges, collaborative tools substantially improved performance on the hardest problems, delivering 15-40 percent lower cost, 12-27 percent fewer turns, and 12-38 percent faster completion than baseline agents. The research revealed that different Claude models naturally adopted distinct collaborative strategies without explicit instruction: Sonnet 3.7 engaged broadly across tools and benefited from articulation-based cognitive scaffolding, while Sonnet 4 showed selective adoption, leaning on journal-based semantic search when problems were genuinely difficult. This mirrors how human developers adjust collaboration based on expertise and task complexity. Academic frameworks exploring Agent to Agent protocol integration with Model Context Protocol identify opportunities and challenges for inter-agent communication including semantic interoperability between agent tasks and tool capabilities, compounded security risks from combined discovery and execution, and governance requirements for multi-agent ecosystems. Anthropic’s investment in MCP standardization positions Claude Code for future coordination architectures where specialized agents handle different aspects of complex software projects.

Model Portfolio: Uptime & SLA figures

Claude Code on the web leverages Anthropic’s full model portfolio, giving developers flexibility to select optimal intelligence/cost/speed tradeoffs for different tasks. Claude 4 Opus is the flagship offering, with superior reasoning capabilities priced at $15 input/$75 output per million tokens, ideal for complex architectural decisions and algorithmic challenges. Claude 4 Sonnet at $3/$15 per million tokens provides balanced performance suitable for most general coding tasks. Claude 3.7 Sonnet delivers improved reasoning and code generation at the identical $3/$15 pricing. Claude 3.5 Haiku at $0.80/$4 per million tokens offers fast, cost-effective completion for routine refactors and well-defined bug fixes. The platform implements intelligent model routing recommendations based on task complexity, though users retain manual override control. Uptime and reliability metrics benefit from Anthropic’s enterprise infrastructure: the company processes over 25 billion API calls monthly with response accuracy benchmarks reaching 98.3 percent, the highest among top-tier large language models as of 2025 according to independent evaluations. While specific uptime SLA percentages are not disclosed for standard plans, Max subscribers receive “priority access at high traffic times” and enterprise customers negotiate custom availability guarantees. The underlying infrastructure operates across multiple availability zones within Google Cloud and AWS regions, providing geographic redundancy and disaster recovery capabilities.

Interactive Tiles: User satisfaction data

User satisfaction data for Claude Code on the web reveals exceptionally positive reception tempered by realistic understanding of current limitations. Aggregated feedback across major platforms shows 92 percent user satisfaction rating, with independent analysis of developer feedback finding 91.5 percent positive themes. Common satisfaction drivers include discovering APIs through Claude’s contextual suggestions, accelerating boilerplate code generation, and enhanced code comprehension when exploring unfamiliar codebases. Cursor IDE integration data showing Claude 3.7 Sonnet and Claude 3.5 Sonnet holding number one and number three positions respectively in user model preferences provides quantitative validation of developer trust—users who can freely switch models choosing Claude for production work signals deep confidence. Security-conscious developers specifically appreciate the transparent permission system and sandbox isolation, with one security analysis noting “Claude Code isn’t just a free-for-all in your terminal” but rather implements “mechanisms to keep you in the driver’s seat.” However, critical feedback identifies meaningful limitations: the permission prompts, while security-essential, can create “approval fatigue” where constant interruptions slow development (mitigated 84 percent through sandboxing but not eliminated entirely). Some developers report AI-generated code requiring careful validation to catch subtle bugs, with research showing 4x defect rate increases in some AI-assisted contexts. Cost concerns emerge for heavy users, with approximately $8 per 90-minute intensive session potentially accumulating quickly compared to fixed-price alternatives like GitHub Copilot at $10 monthly or Cursor at $20 monthly.

6. Adoption Pathways

Integration workflow

Getting started with Claude Code on the web requires minimal technical setup, deliberately designed for accessibility. Pro or Max subscribers visit claude.ai and navigate to the new “Code” tab introduced in October 2025, which displays a clean interface with GitHub repository connection options. First-time users authenticate with GitHub through OAuth, granting Claude Code permission to access specified repositories with granular control over read/write access levels and branch restrictions. Once connected, developers describe coding tasks in natural language: “Implement rate limiting on the /api/users endpoint with Redis backing” or “Refactor the authentication module to use JWT instead of session tokens, including tests.” Claude Code analyzes the repository structure, identifies relevant files, and proposes an implementation approach before requesting approval to proceed. The interface displays real-time progress including file operations, test execution results, and command outputs. Developers can interrupt at any point to provide guidance, ask clarifying questions, or request alternative approaches. Upon task completion, Claude Code automatically generates pull requests with descriptive summaries of changes, rationale for implementation decisions, and test coverage details. For developers preferring terminal environments, an “Open in CLI” button transfers the session to local command-line Claude Code with full context preserved, enabling debugging workflows requiring local tools. The iOS app provides lightweight access for monitoring ongoing sessions, reviewing proposed changes, and approving or rejecting suggestions while mobile.

Customization options

Claude Code on the web offers extensive customization balancing autonomy with control. Developers configure permission policies through settings files specifying which commands auto-approve without prompts (for example, cat, echo, ls for safe read operations), which require explicit approval (for example, git push, rm -rf, file writes), and which are categorically denied. Network access controls allow domain-specific allowlisting: teams can permit npmjs.org and pypi.org for package downloads while blocking other external connections, or open broader internet access for web scraping tasks while monitoring outbound traffic. Sandbox configuration determines filesystem boundaries—users can restrict Claude Code to specific subdirectories for sensitive projects or grant broader repository access for comprehensive refactors. Model selection preferences enable per-task optimization: designate Claude 4 Opus for architectural planning sessions, Claude 3.5 Sonnet for implementation work, and Claude 3.5 Haiku for test generation to balance quality and cost. Response verbosity settings adjust how much Claude Code explains its reasoning versus focusing on execution speed. For teams, administrators deploy centralized policies through enterprise deployment features, ensuring consistent security postures across developer populations while allowing individual preference customization within approved boundaries. Integration with remote Model Context Protocol servers enables extending Claude Code with custom tools: connect internal documentation systems, proprietary testing frameworks, or organization-specific deployment pipelines that Claude Code can invoke during workflows.
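The three-tier permission policy described above (auto-approve, require approval, deny) can be sketched as a small command classifier. This is an illustrative model only and does not reproduce Claude Code’s actual settings format or matching rules: the `POLICY` structure, the `evaluate` function, and the denied commands (`curl`, `sudo`) are assumptions of this example, while the allow and ask entries follow the examples in the text.

```python
import shlex
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"  # run without prompting
    ASK = "ask"      # require explicit approval
    DENY = "deny"    # never run


# Hypothetical policy; each rule is a command prefix matched token by token.
POLICY = {
    Decision.DENY: [["curl"], ["sudo"]],
    Decision.ASK: [["git", "push"], ["rm", "-rf"]],
    Decision.ALLOW: [["cat"], ["echo"], ["ls"]],
}


def evaluate(command: str, policy: dict = POLICY) -> Decision:
    try:
        tokens = shlex.split(command)
    except ValueError:
        return Decision.ASK  # unbalanced quotes: let a human decide
    # Compound commands could smuggle a risky command behind a safe prefix,
    # so never auto-approve anything containing shell control characters.
    compound = any(ch in command for ch in ";|&<>`$")
    # Check the most restrictive rules first so a deny always wins.
    for decision in (Decision.DENY, Decision.ASK, Decision.ALLOW):
        for prefix in policy[decision]:
            if tokens[: len(prefix)] == prefix:
                if decision is Decision.ALLOW and compound:
                    return Decision.ASK
                return decision
    # Anything unlisted falls back to asking the user.
    return Decision.ASK


for cmd in ["ls -la", "git push origin main", "sudo rm -rf /", "ls && rm -rf /"]:
    print(f"{cmd!r}: {evaluate(cmd).value}")
```

Defaulting to `ASK` for unlisted or compound commands keeps the sketch conservative: a command only runs unprompted when it matches an allow rule and contains no shell operators.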

Onboarding & support channels

Anthropic provides multi-tiered onboarding and support aligned with subscription levels. Pro plan subscribers access comprehensive documentation at docs.anthropic.com including quickstart guides, video tutorials, example workflows, and troubleshooting FAQs. The documentation covers security best practices, sandbox configuration, permission management, integration patterns, and common pitfalls. Community support operates through Anthropic’s Discord server where developers share implementation patterns, debug issues collaboratively, and provide product feedback that influences roadmap prioritization. For billing and account issues, email support at support@anthropic.com handles Pro subscriber inquiries with typical response times measured in hours to days. Max plan subscribers receive priority support with faster response times and access to dedicated Slack channels for direct communication with Anthropic’s customer success team. Enterprise customers receive white-glove onboarding including kickoff calls with solution architects, customized deployment planning, security review assistance, policy template development, and ongoing technical account management. The research preview status means Anthropic actively solicits feedback through in-product prompts, user testing programs, and structured feedback surveys to refine features before general availability. Social media channels including Twitter/X, LinkedIn, and YouTube host product announcements, feature demonstrations, and best practice content.

7. Use Case Portfolio

Enterprise implementations

Large organizations deploy Claude Code on the web to accelerate development velocity while maintaining security standards. Financial services firms use the platform for backend API development where test-driven development workflows validate changes against comprehensive test suites before pull request creation: Claude Code writes implementation code, generates unit tests, runs the test harness, debugs failures, and iterates until all tests pass, all within isolated sandbox environments that prevent production access. Healthcare technology companies use Claude Code for routine bug fixes in mature codebases, assigning Claude to work through JIRA backlogs by reading ticket descriptions, identifying relevant code sections, implementing fixes, and creating pull requests for review, which reduces senior developer time spent on repetitive maintenance while preserving code quality through human review gates. E-commerce platforms employ parallel task execution for microservices development, initiating simultaneous sessions across payment processing, inventory management, and notification services to implement coordinated feature rollouts, with Claude Code handling boilerplate integration logic while developers focus on business logic validation. Software-as-a-service startups use Claude Code for documentation updates, tasking the agent to analyze recent code changes and generate corresponding documentation updates, API reference additions, and tutorial content that stays synchronized with evolving codebases. Adoption is stronger among startups (32.9 percent of Claude Code usage) than established enterprises (23.8 percent), mirroring historical technology adoption curves where agile organizations gain competitive advantages through early tool mastery.

Academic & research deployments

Educational institutions and research organizations leverage Claude Code on the web for pedagogy and exploration. Computer science departments use the platform in software engineering courses, assigning students to work collaboratively with Claude Code on realistic projects while learning to evaluate AI-generated code critically, debug AI mistakes, and understand when to trust versus verify automated suggestions. This pedagogical approach teaches not just coding mechanics but AI literacy essential for modern software practice. Research laboratories employ Claude Code for exploratory programming in scientific computing contexts: bioinformatics researchers prototype genome analysis pipelines, computational physicists test simulation parameter sweeps, and social science scholars build data scraping and analysis workflows—all benefiting from Claude Code’s ability to handle boilerplate infrastructure while researchers focus on domain-specific logic. Academic studies on AI-assisted development use Claude Code as experimental platform: the July 2025 randomized controlled trial studying productivity impacts, the September 2025 research on collaborative tools and agent performance, and the AgentPack dataset analysis of AI-human code co-authorship all leverage Claude Code sessions for empirical observations. Security research examines Claude Code as case study in agentic AI safety: analyses of sandbox effectiveness, permission system robustness, prompt injection defense, and adversarial robustness contribute to broader AI safety literature while improving Claude Code’s architecture through responsible disclosure feedback loops.

ROI assessments

Return on investment for Claude Code on the web varies substantially by use case and organizational context. For startups with limited engineering bandwidth, TCO analysis favors Claude Code: a five-person engineering team spending $100 monthly per developer ($500 total) on Max plans gains the ability to parallelize bug fix work, automate documentation updates, and accelerate boilerplate code generation, potentially adding 10-20 hours of productive developer time weekly (equivalent to roughly $2,200-4,300 monthly at $50/hour blended rates), or about a 4x to 9x return on the subscription cost. For mature enterprises with extensive legacy codebases, ROI depends heavily on task selection: routine bug fixes and well-defined enhancements show strong returns, while exploratory architectural work or complex debugging sessions may increase time consumption, as found in the RCT study showing a 19 percent slowdown. Breakeven analysis versus GitHub Copilot requires contextual assessment: Copilot’s $10 monthly fixed cost provides unlimited inline suggestions ideal for experienced developers who know what to build, while Claude Code’s consumption-based pricing (~$8 per 90 intensive minutes) offers superior reasoning for architectural questions and complex problem-solving but accumulates costs with heavy usage. Organizations optimizing TCO employ hybrid strategies: GitHub Copilot for day-to-day autocomplete, Claude Code web sessions for specific complex tasks, and Claude API direct integration for custom workflows, selecting tools appropriate to each development context. Ten-year projections show the AI coding assistant market growing from $5.5 billion (2024) to $47.3 billion (2034), suggesting early adopters establishing AI-augmented workflows gain compounding advantages as tools mature.
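
The startup scenario can be checked with a few lines of arithmetic. The sketch below is illustrative only: it takes the stated inputs (10-20 hours saved weekly, a $50/hour blended rate, $500 in monthly subscriptions) and assumes an average of 52/12 weeks per month. The function name `monthly_roi` is hypothetical and not part of any Anthropic tooling.

```python
# Assumption: an average month has 52/12 (about 4.33) working weeks.
WEEKS_PER_MONTH = 52 / 12


def monthly_roi(hours_saved_per_week: float,
                hourly_rate: float,
                monthly_cost: float) -> tuple[float, float]:
    """Return (monthly value of time saved, value-to-cost multiple)."""
    monthly_value = hours_saved_per_week * WEEKS_PER_MONTH * hourly_rate
    return monthly_value, monthly_value / monthly_cost


if __name__ == "__main__":
    # Inputs from the startup scenario: 5 developers at $100/month each.
    subscription_cost = 5 * 100
    for hours in (10, 20):
        value, multiple = monthly_roi(hours, 50, subscription_cost)
        print(f"{hours} h/week -> ${value:,.0f}/month, {multiple:.1f}x return")
```

Changing the hours or the blended rate shows how sensitive the multiple is to the time-saved estimate, which is the least certain input in this kind of calculation.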

8. Balanced Analysis

Strengths with evidential support

Claude Code on the web excels across multiple dimensions validated through empirical evidence. Superior reasoning capability distinguishes Claude from competitors: Cursor IDE data showing Claude 3.7 Sonnet ranked number one in developer preferences reflects real production deployments where engineers choose tools based on effectiveness, not marketing. Comparative testing consistently validates this assessment—independent research finding Claude outperforms GitHub Copilot on four of five real-world coding prompts, IEEE evaluation ranking Claude 3.5 Sonnet highest in accuracy and reliability, and academic studies demonstrating Claude’s resource efficiency across diverse tasks. The sandbox security architecture provides industry-leading safety for autonomous AI systems: filesystem isolation preventing lateral movement, network controls blocking data exfiltration, credential proxying protecting secrets, and permission-based execution maintaining human oversight create defense-in-depth validated through security audits and documented in engineering blog posts detailing threat modeling. The web accessibility democratizes AI coding assistance beyond terminal-comfortable developers, with iOS mobile support enabling review workflows for non-desk contexts—expanding addressable market from command-line specialists to broader developer populations. The parallel execution capability uniquely addresses team-scale development: while individual autocomplete tools accelerate typing, Claude Code’s multi-repository concurrent sessions enable architectural-scale work patterns matching how organizations actually build software. Enterprise trust evidenced through $500 million annualized revenue, customer list including Fortune 500 companies, and SOC 2/ISO certifications validates production-readiness for regulated industries.

Limitations & mitigation strategies

Claude Code on the web faces several meaningful constraints, each with a practical mitigation. The cost model is less predictable than fixed-price competitors: heavy users consuming 10+ hours weekly can accumulate hundreds of dollars monthly in Max plan costs versus $10-20 fixed subscriptions, so organizations should monitor usage patterns and optimize model selection (using Haiku for routine tasks, reserving Opus for complex challenges). The randomized controlled trial finding a 19 percent slowdown for experienced developers in mature projects suggests current AI coding tools may hinder rather than help in certain contexts; teams can mitigate this through selective deployment targeting well-defined tasks (bug fixes, test generation, documentation) while avoiding exploratory architecture work where AI reasoning may mislead more than assist. The 4x defect rate increase documented in some AI-assisted code contexts demands rigorous code review, backed by automated testing requirements, PR checklist enforcement, and security scanning before merge. Permission prompt fatigue, while reduced 84 percent through sandboxing, still interrupts flow for some users; refining allowlists over time, as teams identify safe command patterns for their specific technology stacks, reduces it further. The research preview status means features evolve rapidly with potential breaking changes, which teams can manage by monitoring release notes, maintaining fallback workflows using terminal Claude Code or alternative tools, and providing feedback to Anthropic to influence stabilization priorities. Context window limitations can constrain whole-codebase reasoning for massive monorepos; mitigations include repository modularization, strategic file selection, and hybrid approaches where developers manually curate relevant context before invoking Claude Code.

9. Transparent Pricing

Plan tiers & cost breakdown

Claude Code on the web operates within Anthropic’s broader Claude subscription structure with three pricing tiers. The Free Plan provides limited Claude access for experimentation but explicitly excludes Claude Code capabilities, suitable only for evaluating general conversational features. The Pro Plan at $20 monthly (or $16.67 per month with annual commitment of $200 prepaid) grants full Claude Code access on web, iOS, and terminal, with defined usage limits shared across all Claude interactions including code generation, file creation, code execution, web research, and extended thinking for complex work. Pro users receive unlimited Projects for organizing work, Google Workspace connector integration, remote Model Context Protocol support, and access to multiple Claude model variants. The Max Plan offers two tiers: 5x usage at approximately $100 monthly or 20x usage at $200 monthly, targeting power users and small teams requiring extensive AI assistance. Max subscribers gain memory across conversations, higher output limits for all tasks, early access to advanced features, and priority access during peak traffic periods. Usage limits apply across all plans—specific quotas are not publicly enumerated but enforce reasonable consumption preventing abuse while accommodating legitimate development workflows. Enterprise plans with custom pricing support team deployments with centralized billing, administrative controls for connector management, enterprise search across organizations, Microsoft 365 and Slack integration, and dedicated Claude Code premium seats for team members.

Total Cost of Ownership projections

Total cost of ownership for Claude Code on the web depends on usage patterns, team size, and alternative opportunity costs. For an individual developer using the Pro plan at $20 monthly, breakeven against GitHub Copilot ($10 monthly) requires the superior reasoning and autonomous task execution to recover the $10 monthly price difference, which at a $50/hour valuation means saving more than about 12 minutes monthly. That is easily achievable if Claude Code handles even one complex refactor or several routine bug fixes that would otherwise consume developer time. For a 10-person team with half on the Pro plan ($100 monthly) and half on the Max 5x plan ($500 monthly, estimated) totaling $600 monthly, TCO includes subscription costs ($7,200 annually) plus opportunity costs of time spent reviewing Claude Code outputs and correcting errors. If the team gains a net 5 hours weekly per developer (50 hours team-wide) valued at a $50/hour blended rate, annual value reaches $130,000 against $7,200 cost, an 18x return. However, if defect rates increase as GitClear research suggests, additional QA and debugging time reduces net benefit. Five-year TCO for an enterprise deployment with 100 developers predominantly on the Max plan ($200 monthly average, $20,000 monthly, $240,000 annually, $1.2 million five-year) must be justified against traditional approaches: hiring 5-10 additional developers at $150,000 average ($750,000-1.5 million annually, $3.75-7.5 million five-year) represents the baseline cost of achieving equivalent output through pure human scaling. If Claude Code augments the existing team to match the output of a 5-10 percent larger organization, ROI reaches 3x to 6x over five years.
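
The three scenarios in this projection reduce to simple arithmetic. The sketch below works through them using only the prices, hours, and salaries stated above; the function and variable names are hypothetical, and the model deliberately ignores review overhead and defect-related rework, which the text notes would lower the net benefit.

```python
HOURLY_RATE = 50          # blended developer rate in USD, as stated
WEEKS_PER_YEAR = 52


def breakeven_hours(price_a: int, price_b: int, hourly_rate: int) -> float:
    """Hours per month that must be saved to cover the price difference."""
    return (price_a - price_b) / hourly_rate


def annual_subscription_cost(seats: dict[int, int]) -> int:
    """seats maps monthly price per seat (USD) to number of seats."""
    return 12 * sum(price * count for price, count in seats.items())


def annual_time_value(developers: int, hours_per_week: int, rate: int) -> int:
    """Yearly dollar value of time saved across the team."""
    return developers * hours_per_week * rate * WEEKS_PER_YEAR


# Individual: Pro ($20) versus GitHub Copilot ($10).
individual_breakeven = breakeven_hours(20, 10, HOURLY_RATE)

# 10-person team: five Pro seats at $20 and five Max 5x seats at $100.
team_cost = annual_subscription_cost({20: 5, 100: 5})
team_value = annual_time_value(10, 5, HOURLY_RATE)
team_multiple = team_value / team_cost

# Enterprise: 100 seats at $200 for five years versus 5-10 hires at $150,000.
enterprise_cost = 5 * annual_subscription_cost({200: 100})
hiring_cost_range = tuple(5 * hires * 150_000 for hires in (5, 10))
enterprise_multiples = tuple(c / enterprise_cost for c in hiring_cost_range)

if __name__ == "__main__":
    print(f"Individual breakeven: {individual_breakeven * 60:.0f} minutes/month")
    print(f"Team: ${team_value:,} value vs ${team_cost:,} cost "
          f"({team_multiple:.1f}x)")
    print(f"Enterprise: ${enterprise_cost:,} vs hiring "
          f"${hiring_cost_range[0]:,}-${hiring_cost_range[1]:,} "
          f"({enterprise_multiples[0]:.1f}x-{enterprise_multiples[1]:.1f}x)")
```

Keeping each input as a named parameter makes it straightforward to substitute an organization's own seat mix, rates, and hiring costs before relying on any of the multiples.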

10. Market Positioning

Competitor comparison table with analyst ratings

| Platform | Deployment Model | Pricing | Market Share | Key Differentiator |
|---|---|---|---|---|
| GitHub Copilot (Microsoft) | IDE plugin, autocomplete focus | $10/month individual, $19/month business | ~40% (estimated $800M ARR) | Superior distribution through GitHub integration, Microsoft ecosystem |
| Claude Code (Anthropic) | Terminal, Web, Mobile multi-surface | $20/month Pro, $100-200/month Max | Growing rapidly ($500M+ ARR) | Superior reasoning, parallel execution, sandbox security |
| Cursor (Anysphere) | Custom VS Code-based IDE | $20/month Pro | Strong ($100M+ ARR) | Project-wide context, inline + chat hybrid, codebase intelligence |
| Replit AI | Integrated coding environment | Included with Replit tiers | Growing ($100M+ ARR) | Instant deployment, collaborative multiplayer, beginner-friendly |
| Cody (Sourcegraph) | IDE plugin, codebase search focus | Enterprise pricing | Enterprise-focused | Codebase search integration, self-hosted options |
| Amazon CodeWhisperer | IDE plugin, AWS integration | Free individual, enterprise tiers | AWS ecosystem | Free tier, deep AWS service integration |
| Tabnine | IDE plugin, privacy-focused | $12/month Pro | Established player | Self-hosted deployment, IP protection emphasis |
| OpenAI Codex/GPT-4 | API, various integrations | API consumption pricing | Embedded in many tools | Underlying technology for multiple platforms |

Unique differentiators

Claude Code on the web occupies distinctive competitive positioning through architectural and organizational differentiation. The multi-surface deployment across terminal, web, and mobile creates accessibility competitors lack: GitHub Copilot remains IDE-tethered, Cursor requires using their custom editor, while Claude Code meets developers wherever they work. The autonomous task execution model fundamentally differs from autocomplete-focused competitors—rather than accelerating typing, Claude Code handles entire implementation workflows from design through testing through pull request, functioning as junior developer rather than intelligent spellcheck. The sophisticated reasoning capability, validated through Cursor IDE preference data and independent benchmarks, reflects Anthropic’s core competency in frontier model development. The company’s $14.8 billion funding from Google, Amazon, and top-tier venture firms provides financial runway to sustain aggressive model improvement while competitors face profitability pressures. Anthropic’s constitutional AI safety research and public benefit corporation structure differentiate organizationally from pure profit-maximizers, appealing to ethically-conscious enterprises. The sandbox security architecture specifically designed for agentic AI systems provides production-grade safety lacking in tools retrofitted from autocomplete origins. The parallel execution capability enables team-scale workflows competitors don’t address. The transparent pricing with consumption-based Max tiers accommodates diverse usage patterns from casual to intensive. Integration with Model Context Protocol positions Claude Code within emerging agent interoperability standards, creating network effects as MCP ecosystem expands.

11. Leadership Profile

Bios highlighting expertise & awards

Dario Amodei, co-founder and CEO of Anthropic, brings exceptional credentials combining technical depth with organizational leadership. Born in San Francisco in 1983, Amodei earned his undergraduate degree in physics from Stanford University after transferring from Caltech, then completed his PhD in physics at Princeton University studying the electrophysiology of neural circuits, followed by postdoctoral research at Stanford University School of Medicine. His AI career began at Baidu Research before he joined OpenAI, where he served as vice president of research, contributing to foundational work including GPT-2 and GPT-3 development. In 2021, Amodei departed OpenAI alongside his sister Daniela Amodei (Anthropic president) and other senior researchers due to disagreements about the company’s direction following its Microsoft partnership, specifically concerns about sincere commitment to AI safety versus commercial pressures. This principled departure reflects Amodei’s repeated emphasis that AI safety leadership requires “trustworthy people whose motivations are sincere” and who truly want to make the world better. His strategic vision, articulated in essays like “Machines of Loving Grace,” presents measured optimism about AI benefits while acknowledging physical and ethical constraints, contrasting with more exuberant visions from competitors. Industry recognition includes Fortune Global Forum speaking engagements, Lex Fridman podcast appearances, and positioning as a thought leader on AI governance alongside figures like Sam Altman.

Daniela Amodei, co-founder and president, complements her brother’s technical leadership with operational and strategic expertise, though detailed biographical information is less publicly documented. The founding team includes other former OpenAI researchers bringing deep machine learning, safety research, and systems engineering experience. The company’s technical leadership emphasizes academic rigor with regular publication in top-tier venues including NeurIPS, ICML, and specialized AI safety conferences.

Patent filings & publications

While specific patent numbers are not publicly disclosed in available documentation, Anthropic’s research output demonstrates substantial intellectual property development. The company has published extensively on constitutional AI methods enabling Claude to learn helpful, harmless, and honest behaviors from human feedback without requiring human labeling of harmful outputs—a novel approach to AI alignment with clear patent potential. Research on large context windows enabling 100,000+ token processing (hundreds of pages of material) represents technical innovation with commercial applications. Work on adversarial robustness, jailbreak resistance, and safety training failure modes contributes to defensive AI security—a growing patent category. The Model Context Protocol specification and implementation constitute standards-track intellectual property influencing interoperability architectures. Academic publications co-authored by Anthropic researchers appear regularly in leading venues: studies on jailbreaking attacks testing Claude alongside GPT-4 and other models, comparative evaluations of AI safety training approaches, and frameworks for evaluating AI risks including sabotage capabilities and misuse potential. The engineering blog documents technical innovations including sandboxing approaches, secure credential proxying, and permission system architectures that inform industry best practices. As Anthropic scales and competition intensifies, strategic patent filings around unique architectural approaches, safety mechanisms, and interaction paradigms would provide defensible competitive moats against well-funded rivals.

12. Community & Endorsements

Industry partnerships

Anthropic has cultivated strategic partnerships spanning infrastructure providers, technology integrators, and investment partners. Google represents the largest strategic partner with approximately $3 billion invested and ongoing negotiations for tens of billions in additional cloud computing services, positioning Anthropic as key pillar of Google’s AI strategy competing against Microsoft-OpenAI alliance. The partnership includes Google Cloud Platform as primary inference infrastructure, Vertex AI integration making Claude models available to Google Cloud customers, and collaborative research on AI safety and capabilities. Amazon Web Services constitutes the second major infrastructure partner with $8 billion total investment across multiple rounds, providing compute resources for model training and inference alongside Bedrock integration for AWS enterprise customers. This dual-infrastructure approach prevents single-vendor lock-in while ensuring adequate capacity for scaling. Technology integrations span developer tooling ecosystem: GitHub for repository management and pull request automation, Cursor IDE as primary third-party interface where Claude models rank top in user preferences, VS Code through official extension, JetBrains suite through Agent SDK, Slack for workplace integration, Notion for knowledge management, and Salesforce for CRM augmentation. Investment partners include Spark Capital (Series C lead), Lightspeed Venture Partners (Series D lead), Iconiq Capital, Menlo Ventures, Sound Ventures, Zoom Ventures, Fidelity Management & Research, and Abu Dhabi investment firm MGX. The Model Context Protocol has attracted adoption from tools including Zed, Cursor, and Sourcegraph, creating ecosystem effects that strengthen Anthropic’s platform position.

Media mentions & awards

Claude Code on the web generated substantial media coverage upon its October 20, 2025 launch. TechCrunch highlighted the strategic importance with quotes from Product Manager Cat Wu explaining web and mobile as “big step” in meeting developers wherever they are, attributing success partly to increasingly popular AI models becoming “developer darlings.” ITPro positioned the release as making “AI more accessible” through browser integration eliminating command-line requirements, noting initial accessibility limited to Pro and Max users in research preview. Maginative emphasized the parallel task execution capability as key differentiation enabling developers to “delegate multiple coding tasks in parallel on cloud infrastructure.” Anthropic’s broader media presence includes Fortune coverage of CEO Dario Amodei’s August 2025 statements escalating “war of words” with NVIDIA’s Jensen Huang over AI regulation, with Amodei defending regulatory oversight against industry opposition. LinkedIn thought leadership articles position “The Visionaries of AI: Sam Altman and Dario Amodei” as contrasting philosophies—Altman’s techno-optimism versus Amodei’s measured pragmatism acknowledging AI’s transformative potential constrained by physical and ethical realities. The New York Times reported in March 2025 on Google’s expanding Anthropic investment revealing 14 percent ownership stake worth “significantly more” than $61.5 billion valuation, highlighting strategic importance. Bloomberg covered October 2025 negotiations for additional “multibillion-dollar cloud deal” demonstrating continued deepening of infrastructure partnerships.

13. Strategic Outlook

Future roadmap & innovations

Anthropic’s product roadmap for Claude Code signals continued evolution toward comprehensive development environments. The company is expanding the research preview based on user feedback, with general availability anticipated following beta refinement period—no specific timeline disclosed. Feature enhancements under development include improved codebase understanding for massive repositories through better context management and indexing, enhanced debugging capabilities with deeper integration into development workflows, expanded language and framework support beyond current coverage, and tighter integration with popular development tools and CI/CD pipelines. The mobile experience on iOS represents early exploration with expectations for rapid iteration as Anthropic gathers usage data on portable coding workflows—Android version likely follows iOS maturation. Team collaboration features appear on horizon: shared sessions where multiple developers monitor and steer same Claude Code task, centralized knowledge bases where teams capture organizational coding patterns Claude learns from, and workflow templates encoding team-specific development practices. The Model Context Protocol investment suggests future where Claude Code seamlessly invokes custom tools specific to organizations: internal documentation systems, proprietary testing frameworks, deployment automation, and monitoring integration. Enhanced security features will evolve as enterprises provide feedback: more granular permission systems, advanced audit logging, compliance reporting dashboards, and integration with enterprise security information and event management platforms. The underlying model capabilities will improve continuously as Anthropic releases Claude 4.x and 5.x generations with stronger reasoning, larger context windows, and improved code understanding.

Market trends & recommendations

The AI coding assistant market trajectory positions Claude Code on the web favorably amid converging trends. The market’s explosive growth from $5.5 billion (2024) toward $47.3 billion (2034) at 24 percent CAGR reflects fundamental shift in software development practices—early adopters establishing AI-augmented workflows gain compounding advantages as tools mature and team fluency increases. The concentration of 70 percent market share among top three players (GitHub, Claude Code, Cursor) suggests winner-take-most dynamics where superior technology and distribution create sustainable competitive moats, making strategic partnerships and ecosystem integration critical success factors. Developer adoption patterns—81 percent now using AI coding assistants with 49 percent daily usage—validate that AI augmentation has crossed from early adopter phase into mainstream practice, reducing adoption friction for Claude Code web launch. The startup-led adoption curve (32.9 percent of Claude Code usage versus 23.8 percent enterprise) mirrors historical technology diffusion where agile organizations deploy transformative tools earlier, but enterprise follows as solutions mature and compliance standards emerge—suggesting substantial enterprise growth opportunity as Claude Code exits research preview. Organizations should evaluate Claude Code through structured pilots: identify 5-10 developers across experience levels, assign mixture of routine bug fixes and complex architectural tasks, instrument productivity metrics including time-to-completion and defect rates, gather qualitative feedback on workflow impacts, and compare against baselines using traditional tools or competitor AI assistants. 
Optimal adoption strategies employ tool portfolios rather than single solutions: GitHub Copilot for inline autocomplete, Claude Code for autonomous task execution, and human expertise for architectural decisions and quality assurance—selecting appropriate tool for each development context rather than forcing uniform adoption. Strategic recommendations emphasize early skill development: teams establishing AI-augmented workflows today position for productivity advantages as tools improve, while late adopters face growing capability gaps.
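
The pilot structure described above (track time-to-completion and defect rates, then compare against a baseline) can be summarized with a small script. This is a minimal sketch, not a prescribed methodology: the `TaskResult` record, the arm labels, and every number in `SAMPLE_RESULTS` are made-up placeholders for illustration, and a real pilot would need far more tasks and a proper statistical test before drawing conclusions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TaskResult:
    arm: str        # hypothetical labels: "baseline" or "assisted"
    minutes: float  # time-to-completion for one task
    defects: int    # defects later traced to that task


def summarize(results: list[TaskResult], arm: str) -> dict[str, float]:
    """Mean completion time and defects per task for one pilot arm."""
    rows = [r for r in results if r.arm == arm]
    if not rows:
        raise ValueError(f"no results recorded for arm {arm!r}")
    return {
        "tasks": len(rows),
        "mean_minutes": mean(r.minutes for r in rows),
        "defects_per_task": sum(r.defects for r in rows) / len(rows),
    }


def relative_change(baseline: float, assisted: float) -> float:
    """Fractional change versus baseline; negative means a reduction."""
    return (assisted - baseline) / baseline


# Placeholder data only: these values are invented to exercise the code.
SAMPLE_RESULTS = [
    TaskResult("baseline", 60, 1),
    TaskResult("baseline", 90, 0),
    TaskResult("assisted", 45, 1),
    TaskResult("assisted", 75, 1),
]

if __name__ == "__main__":
    base = summarize(SAMPLE_RESULTS, "baseline")
    assisted = summarize(SAMPLE_RESULTS, "assisted")
    for metric in ("mean_minutes", "defects_per_task"):
        change = relative_change(base[metric], assisted[metric])
        print(f"{metric}: {base[metric]} -> {assisted[metric]} ({change:+.0%})")
```

Reporting both metrics side by side matters because a tool can shorten completion time while raising defects per task, and the pilot should surface that trade-off rather than a single headline number.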

Final Thoughts

Claude Code on the web represents a pivotal moment in AI-assisted software development, transforming an already powerful terminal tool into an accessible, multi-surface platform that meets developers wherever they work. By moving beyond the command line to browsers and mobile devices while introducing sophisticated parallel execution and secure sandbox architectures, Anthropic has created something fundamentally different from autocomplete-focused competitors—an autonomous development partner that handles entire implementation workflows from conception through testing through pull request creation.

The technical excellence underlying Claude Code’s rapid ascent is undeniable. Cursor IDE data showing Claude models occupying the top and third positions in developer preferences reflects real production deployments where engineers vote with their daily tool choices. Independent research consistently validates Claude’s superior reasoning capabilities, with comparative evaluations ranking it highest in accuracy and reliability across diverse coding tasks. The sophisticated sandbox security architecture implementing both filesystem and network isolation with credential proxying sets new standards for safe autonomous AI systems, addressing legitimate enterprise concerns about agentic AI risks. The $500 million in annualized revenue demonstrates strong product-market fit, while the broader Claude platform’s 30 million monthly active users and 92 percent satisfaction ratings signal sustained momentum.

However, prospective adopters must approach with clear-eyed understanding of current limitations and contextual performance patterns. The July 2025 randomized controlled trial finding 19 percent completion time increases for experienced developers in mature projects directly contradicts both developer forecasts and expert predictions, revealing that AI coding tools may hinder rather than help in certain contexts. The 4x defect rate increases documented in some AI-assisted code scenarios demand rigorous review processes rather than blind trust in AI outputs. The consumption-based pricing model creates cost unpredictability compared to fixed-price alternatives, requiring monitoring and optimization to prevent budget surprises. The research preview status means the platform continues evolving with potential breaking changes as Anthropic refines features toward general availability.

For whom does Claude Code on the web make sense? Startups and agile organizations seeking competitive advantages through early AI adoption will find strong alignment—the data showing 32.9 percent startup usage versus 23.8 percent enterprise validates this pattern. Developers tackling well-defined tasks like routine bug fixes, test generation, documentation updates, and boilerplate implementation will realize immediate productivity gains. Teams requiring parallel execution across multiple repositories for coordinated feature development will leverage capabilities competitors lack. Security-conscious enterprises appreciating transparent sandboxing, permission systems, and SOC 2 certification will find production-ready deployment options. Organizations valuing ethical AI development from safety-focused companies will align with Anthropic’s constitutional AI approach and public benefit corporation structure.

Conversely, experienced developers working in highly complex legacy codebases may find the tool slows rather than accelerates certain workflows. Cost-sensitive individuals and small teams might prefer fixed-price alternatives like GitHub Copilot for predictable budgeting. Organizations requiring battle-tested stability over cutting-edge features should await general availability rather than adopting research previews.

The strategic outlook favors Claude Code as the AI coding assistant market grows from $5.5 billion toward $47.3 billion over the next decade. Anthropic’s $14.8 billion funding war chest, strategic partnerships with Google and Amazon, and demonstrated ability to attract top research talent position the company to sustain aggressive improvement while competitors face profitability pressures. The Model Context Protocol investment creates potential for network effects as ecosystem adoption expands. The parallel evolution across terminal, web, and mobile surfaces provides flexibility competitors locked into single modalities cannot match.

Ultimately, Claude Code on the web represents a significant step toward AI-augmented software development becoming standard practice rather than experimental edge. The tool won’t replace software engineers—the RCT data and practical experience demonstrate human expertise remains essential for architecture, quality assurance, and complex problem-solving. But for teams learning to collaborate effectively with AI coding assistants, selecting appropriate tasks, maintaining rigorous review processes, and iterating on workflows, Claude Code provides powerful capabilities that can meaningfully accelerate development velocity while maintaining security and quality standards. As the research preview matures toward general availability and underlying models continue improving, Claude Code on the web stands positioned as a leading platform in the AI-assisted development revolution reshaping how software gets built.