Overview

Large language models have transformed task automation, but they often struggle to orchestrate complex, multi-step tool calls through traditional function calling. Code Mode addresses this limitation by changing the interface: instead of teaching models to follow rigid tool-calling schemas, it lets them use their strongest capability, which is writing code. The model receives a single tool, a sandboxed code execution environment, and a multi-tool workflow becomes one script execution. Cloudflare introduced the approach in September 2025, and Anthropic’s engineering team subsequently validated it. It can dramatically reduce token consumption and API round-trips in certain scenarios, though results vary by use case.

Key Features

Code Mode’s effectiveness stems from core features that change how LLMs interact with external tools:

- Unified Code Execution Tool: Instead of presenting the LLM with dozens of individual tool definitions, Code Mode provides a single execute_code tool. The model generates TypeScript or similar code that can call your entire toolkit from one script, consolidating what would otherwise require multiple sequential tool calls.

- Secure Sandboxed Runtimes: Generated code runs in isolated environments with strict security controls. Cloudflare’s implementation uses V8 isolates that spin up in milliseconds with minimal memory overhead. Alternative implementations use Deno sandboxes. All executions include configurable timeouts, resource limits, and comprehensive logging.

- Automatic TypeScript Interface Generation: The system fetches MCP server schemas and automatically converts them into fully typed TypeScript interfaces with documentation comments. This gives the LLM the familiar context of real APIs rather than synthetic tool-calling formats, leveraging the vast amount of TypeScript code in its training data.

- Multi-Protocol Tool Support: Through integration with UTCP (Universal Tool Calling Protocol), Code Mode can orchestrate tools across different protocols including MCP servers, HTTP APIs, WebSocket connections, CLI commands, and file system operations, all within a single cohesive script.

- Internet Isolation with Binding Access: The sandbox is completely isolated from the internet by default. Tools are accessed through explicit bindings rather than network requests, preventing accidental data leakage and eliminating the possibility of API key exposure in generated code.
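The binding idea in the last bullet can be sketched as follows. This is a minimal illustration rather than Cloudflare's implementation: `WeatherBinding`, `makeWeatherBinding`, `generatedCode`, and the placeholder key are all hypothetical names, and the upstream call is stubbed so the example runs without a network.

```typescript
// Hypothetical binding: the trusted parent owns the credential and hands the
// sandboxed code only a callable object. All names here are illustrative.
interface WeatherBinding {
  getForecast(city: string): string;
}

// Runs on the parent (trusted) side. The key lives only in this closure.
function makeWeatherBinding(apiKey: string): WeatherBinding {
  const authorizedCall = (city: string): string => {
    // A real parent would make an authenticated request with apiKey here.
    // Stubbed so the sketch needs no network access.
    if (apiKey.length === 0) throw new Error("missing credential");
    return `Forecast for ${city}: sunny`;
  };
  // Freezing stops generated code from replacing the method.
  return Object.freeze({ getForecast: authorizedCall });
}

// Stands in for model-generated code: it receives bindings, never the key.
function generatedCode(env: { weather: WeatherBinding }): string {
  return env.weather.getForecast("Lisbon");
}

const weather = makeWeatherBinding("example-placeholder-key");
const bindingResult = generatedCode({ weather });
console.log(bindingResult);
// The binding exposes one method and no credential field.
console.log(Object.keys(weather));
```

The design point is that the credential is captured in a closure on the trusted side, so nothing the generated code can read or log contains it.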

How It Works

The Code Mode workflow consolidates what would traditionally be a conversational back-and-forth into a single execution cycle.

First, when connecting to MCP servers or other tools, the system generates TypeScript type definitions representing the available APIs. These definitions include proper interfaces, parameter types, and documentation extracted from tool schemas. The LLM receives this TypeScript API surface as context.
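As a rough sketch of this conversion step, the function below turns a simplified tool description into a documented TypeScript declaration. The `ToolSchema` shape is an assumption made for illustration (real MCP tool schemas use JSON Schema and are richer), and `toTypeScript` and `searchDocs` are hypothetical names, not part of any SDK.

```typescript
// Assumed, simplified tool description. Real MCP schemas are JSON Schema.
interface ToolParam {
  type: "string" | "number" | "boolean";
  description: string;
  required: boolean;
}

interface ToolSchema {
  name: string;
  description: string;
  params: Record<string, ToolParam>;
}

// Turn one tool description into a documented TypeScript declaration.
function toTypeScript(tool: ToolSchema): string {
  const fields = Object.entries(tool.params).map(([key, p]) => {
    const optional = p.required ? "" : "?";
    return `  /** ${p.description} */\n  ${key}${optional}: ${p.type};`;
  });
  const inputName = `${tool.name.charAt(0).toUpperCase()}${tool.name.slice(1)}Input`;
  return [
    `interface ${inputName} {`,
    ...fields,
    `}`,
    ``,
    `/** ${tool.description} */`,
    `declare function ${tool.name}(input: ${inputName}): Promise<unknown>;`,
  ].join("\n");
}

// Hypothetical tool used only to exercise the generator.
const searchTool: ToolSchema = {
  name: "searchDocs",
  description: "Search the documentation index",
  params: {
    query: { type: "string", description: "Text to search for", required: true },
    limit: { type: "number", description: "Maximum number of results", required: false },
  },
};

const declaration = toTypeScript(searchTool);
console.log(declaration);
```

The generated text is what would be placed in the model's context, so the model sees an ordinary typed API with doc comments instead of a raw schema.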

When given a task, the LLM generates a complete TypeScript program designed to accomplish the goal. This script can call any registered tool, implement branching logic based on intermediate results, loop through data collections, and perform local post-processing. The code handles all orchestration that would otherwise require multiple model invocations.
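To make this concrete, here is the kind of program a model might produce. It is a sketch under stated assumptions: the `Tools` interface, the ticket data, and `generatedScript` are hypothetical, and the tools are in-memory stubs so the example runs without real services.

```typescript
// Hypothetical tool surface the model would see as TypeScript types.
interface Ticket {
  id: number;
  priority: "low" | "high";
  assignee: string | null;
}

interface Tools {
  listOpenTickets(): Promise<Ticket[]>;
  assignTicket(id: number, assignee: string): Promise<void>;
}

// In-memory stubs standing in for real services.
function makeStubTools(): Tools {
  const tickets: Ticket[] = [
    { id: 1, priority: "high", assignee: null },
    { id: 2, priority: "low", assignee: null },
    { id: 3, priority: "high", assignee: "dana" },
  ];
  return {
    async listOpenTickets() {
      return tickets.map((t) => ({ ...t }));
    },
    async assignTicket(id, assignee) {
      const ticket = tickets.find((t) => t.id === id);
      if (!ticket) throw new Error(`unknown ticket ${id}`);
      ticket.assignee = assignee;
    },
  };
}

// Model-style script: loop, branch on intermediate results, post-process,
// then report a single summary line.
async function generatedScript(tools: Tools, log: (line: string) => void): Promise<void> {
  const tickets = await tools.listOpenTickets();
  const assigned: number[] = [];
  for (const ticket of tickets) {
    // Branching happens here, without another model invocation.
    if (ticket.priority === "high" && ticket.assignee === null) {
      await tools.assignTicket(ticket.id, "on-call");
      assigned.push(ticket.id);
    }
  }
  log(JSON.stringify({ scanned: tickets.length, assigned }));
}

const scriptOutput: string[] = [];
const scriptDone = generatedScript(makeStubTools(), (line) => scriptOutput.push(line));
scriptDone.then(() => console.log(scriptOutput.join("\n")));
```

With traditional function calling, the list call and each assignment would be separate model turns; here they are ordinary statements in one script.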

The Code Mode runtime then executes the entire program within the secure sandbox. Output returns to the LLM only through console.log() statements, so the model sees what the script chooses to log, typically a final structured result, rather than every intermediate value.
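The output channel can be illustrated with a small harness that swaps in a capturing `console`. This is only a sketch of the logging mechanism: `runAndCaptureLogs` and `ToolMap` are hypothetical names, and `new Function` is not a sandbox, since it runs code with full host privileges. Real deployments depend on V8 isolates or a comparable boundary.

```typescript
// NOT a sandbox: new Function executes with full host privileges. It is used
// here only to show how log capture works.
type ToolMap = Record<string, (...args: number[]) => number>;

function runAndCaptureLogs(code: string, tools: ToolMap): string[] {
  const logs: string[] = [];
  // Shadow the global console so the script's logs land in our array.
  const capturedConsole = {
    log: (...parts: unknown[]) => {
      logs.push(
        parts.map((p) => (typeof p === "string" ? p : JSON.stringify(p))).join(" "),
      );
    },
  };
  const script = new Function("console", "tools", code);
  script(capturedConsole, tools);
  return logs;
}

// Stands in for model-generated code. Intermediate values stay local.
const generatedSource = `
  const subtotal = tools.add(19, 23); // intermediate value, never logged
  const total = tools.multiply(subtotal, 2);
  console.log({ total });
`;

const captured = runAndCaptureLogs(generatedSource, {
  add: (a, b) => a + b,
  multiply: (a, b) => a * b,
});
console.log(captured);
```

Only the array returned by `runAndCaptureLogs` would be sent back to the model; `subtotal` never leaves the script.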

Throughout execution, strict security rules apply: the sandbox cannot access the internet directly, has no filesystem access unless explicitly provided, and operates under configurable timeouts (default 3 seconds) to prevent infinite loops. All tool invocations route through the parent agent which handles authentication and access control.
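A timeout guard of this kind might look like the sketch below. `withTimeout` and `DEFAULT_TIMEOUT_MS` are hypothetical names; the 3000 ms default mirrors the figure quoted above, and the demo uses shorter limits so it finishes quickly. One caveat is that a promise race only bounds asynchronous waits. A synchronous infinite loop blocks the event loop, so a real runtime has to enforce its limit from outside the script.

```typescript
// Mirrors the default quoted in the text; the constant name is illustrative.
const DEFAULT_TIMEOUT_MS = 3000;

// Reject if the wrapped work has not settled within limitMs.
function withTimeout<T>(work: Promise<T>, limitMs: number = DEFAULT_TIMEOUT_MS): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(
      () => reject(new Error(`execution exceeded ${limitMs} ms`)),
      limitMs,
    );
    work.then(
      (value) => {
        clearTimeout(timer);
        resolve(value);
      },
      (error) => {
        clearTimeout(timer);
        reject(error);
      },
    );
  });
}

// Two hypothetical script runs: one settles immediately, one takes 200 ms.
const fastRun = Promise.resolve("done");
const slowRun = new Promise<string>((resolve) => setTimeout(() => resolve("done"), 200));

const timeoutOutcome = Promise.all([
  withTimeout(fastRun, 50),
  withTimeout(slowRun, 50).catch((e: Error) => e.message),
]);
timeoutOutcome.then((results) => console.log(results));
```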

Use Cases

Code Mode excels in scenarios that demand sophisticated tool orchestration under tight token or latency budgets:

- Complex AI Agent Development: Build agents that need to coordinate numerous tools for intricate workflows. DevOps automation requiring multiple API calls, research data processing spanning several services, or CRM operations involving sequential updates can all be consolidated into single script executions.

- Token and Latency Optimization: When an agent would otherwise make many sequential tool calls, copying intermediate results through the model’s context at each step, Code Mode can eliminate this overhead. Anthropic reported a test case reduction from 150,000 tokens to 2,000 tokens, though independent benchmarks show results vary significantly by use case.

- Data Filtering and Privacy: Execute data transformations locally before results enter the model context. Filter a 10,000-row spreadsheet down to relevant entries within the sandbox, so the model sees only the filtered subset rather than the entire dataset. Sensitive intermediate data can flow between tools without ever entering the model’s context.

- Multi-Tool Workflows: Chain multiple tool calls with conditional logic in a single execution. For example, search for information, process the results based on specific criteria, then update multiple downstream systems, all without returning to the model between steps.
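The data filtering case above can be sketched like this. The spreadsheet is generated in memory, and `Row`, `loadSpreadsheet`, and `generatedFilterScript` are hypothetical stand-ins for a real spreadsheet tool and a model-written script.

```typescript
interface Row {
  id: number;
  region: "emea" | "apac" | "amer";
  amountUsd: number;
}

// Stand-in for a spreadsheet tool: 10,000 deterministic rows.
function loadSpreadsheet(): Row[] {
  const rows: Row[] = [];
  for (let i = 0; i < 10000; i++) {
    const region = i % 3 === 0 ? "emea" : i % 3 === 1 ? "apac" : "amer";
    rows.push({ id: i + 1, region, amountUsd: i % 1000 });
  }
  return rows;
}

// Model-style script: filter and aggregate inside the sandbox, then log
// only a compact summary.
function generatedFilterScript(log: (line: string) => void): void {
  const rows = loadSpreadsheet();
  const relevant = rows.filter((r) => r.region === "apac" && r.amountUsd >= 990);
  const totalUsd = relevant.reduce((sum, r) => sum + r.amountUsd, 0);
  log(JSON.stringify({ scanned: rows.length, matched: relevant.length, totalUsd }));
}

const filterOutput: string[] = [];
generatedFilterScript((line) => filterOutput.push(line));
console.log(filterOutput.join("\n"));
```

The model's context receives one short JSON line. The 10,000 rows, including any sensitive columns, stay inside the sandbox.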

Pros and Cons

Code Mode offers significant advantages, but its trade-offs affect how well it suits a given project.

Advantages

- Leverages LLM Strengths: Models have extensive training on real-world code but limited exposure to synthetic tool-calling formats. Letting them write actual TypeScript leverages their strongest capabilities rather than forcing them into an unfamiliar paradigm.

- Efficient Multi-Step Batching: Multiple operations, API calls, conditional logic, and data transformations can execute in a single sandbox invocation, eliminating the token overhead of passing intermediate results through the model.

- Strong Security Model: V8 isolates provide lightweight sandboxing without container overhead. Internet isolation by default prevents unintended network access, and tools are reachable only through explicit bindings.

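- Progressive Tool Loading: Rather than dumping all tool definitions into context upfront, agents can explore tool APIs dynamically and load only what they need for the current task, much as an agentic coding assistant browses documentation. Support varies by implementation: Anthropic’s filesystem-based approach works this way, while Cloudflare’s currently loads all type definitions upfront.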

- No API Keys in Generated Code: Tools are accessed through pre-authorized bindings, so the model never needs to handle or potentially leak credentials.

Disadvantages

- Added Infrastructure Complexity: Introducing a code execution layer requires deploying and maintaining sandbox infrastructure, whether through Cloudflare Workers, Deno, or other runtime environments.

- Inconsistent Efficiency Gains: While Anthropic reports dramatic token savings (up to 98.7%), independent benchmarks of UTCP Code-Mode showed 40-68% higher token consumption and more API calls than optimized traditional approaches in certain scenarios. Results depend heavily on task characteristics.

- Developer Expertise Required: Debugging LLM-generated code that orchestrates multiple tools requires developer-level skills. When the generated script fails, diagnosing whether the issue lies in the code logic, tool invocations, or data handling can be complex.

- Not Always the Right Tool: For simple single-tool calls or workflows with few steps, Code Mode adds unnecessary complexity. The approach shines with multi-step orchestration but may be overkill for straightforward tasks.

- Evolving Ecosystem: As a relatively new paradigm, tooling, documentation, and best practices are still maturing. Implementation patterns continue to evolve as the community gains experience.
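The 98.7% figure cited in the list above follows directly from the token counts Anthropic reported (150,000 before, 2,000 after). The helper below just makes that arithmetic explicit; `tokenSavingsPercent` is an illustrative name.

```typescript
// Percentage of tokens saved when moving from `before` to `after`.
function tokenSavingsPercent(before: number, after: number): number {
  if (before <= 0) throw new RangeError("before must be positive");
  return ((before - after) / before) * 100;
}

// Anthropic's reported test case: 150,000 tokens reduced to 2,000.
const reportedSavings = tokenSavingsPercent(150000, 2000);
console.log(reportedSavings.toFixed(1)); // prints "98.7"
```

Running the same calculation on token counts measured in your own workload is a quick way to see whether a gain of that size carries over.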

How Does It Compare?

Code Mode represents a fundamentally different approach to tool orchestration compared to traditional methods and competing frameworks.

Code Mode vs Traditional Function Calling

Traditional function calling uses synthetic token formats that LLMs have limited exposure to in training. Each tool call requires a round-trip through the model, consuming tokens for intermediate results. Code Mode instead lets models write real TypeScript code they have seen extensively in training, batching multiple operations into single executions and only returning final results to the model.

Code Mode vs Standard MCP Usage

Standard MCP implementations expose tool definitions directly to the LLM and require individual calls for each operation. Code Mode converts MCP tools into TypeScript APIs that the model writes code against. According to Cloudflare’s engineering team, LLMs handle complex APIs better when presented as code rather than simplified tool-calling interfaces.

Code Mode vs Anthropic’s Code Execution Approach

Both Cloudflare’s Code Mode and Anthropic’s code execution with MCP arrive at the same core insight: let LLMs write code rather than call tools directly. Cloudflare’s approach loads all TypeScript type definitions upfront (with plans for dynamic browsing), while Anthropic uses a filesystem-based progressive disclosure where the agent lists directories and reads only needed tool files on demand. Both achieve similar efficiency gains through different implementation strategies.
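The filesystem-based progressive disclosure described here can be approximated with an in-memory file table. This is a sketch, not Anthropic's implementation: the paths, file contents, and the `listDir` and `readToolFile` helpers are all hypothetical.

```typescript
// In-memory stand-in for a directory of tool definitions.
// Paths and contents are hypothetical.
const toolFiles: Record<string, string> = {
  "servers/crm/getContact.ts":
    "export declare function getContact(id: string): Promise<unknown>;",
  "servers/crm/updateContact.ts":
    "export declare function updateContact(id: string, patch: object): Promise<void>;",
  "servers/docs/search.ts":
    "export declare function search(query: string): Promise<string[]>;",
};

// List the immediate children of a directory. Names are cheap to put in context.
function listDir(dir: string): string[] {
  const prefix = dir.endsWith("/") ? dir : `${dir}/`;
  const children: string[] = [];
  for (const path of Object.keys(toolFiles)) {
    if (!path.startsWith(prefix)) continue;
    const rest = path.slice(prefix.length);
    const slash = rest.indexOf("/");
    const child = slash === -1 ? rest : rest.slice(0, slash);
    if (!children.includes(child)) children.push(child);
  }
  return children.sort();
}

// Load a single definition only when the agent decides it needs it.
function readToolFile(path: string): string {
  const source = toolFiles[path];
  if (source === undefined) throw new Error(`no such tool file: ${path}`);
  return source;
}

// Agent-side exploration: browse names first, then read one file.
const servers = listDir("servers");
const crmTools = listDir("servers/crm");
const loadedDefinition = readToolFile("servers/crm/getContact.ts");
console.log(servers, crmTools);
console.log(loadedDefinition);
```

Only the definition the agent actually reads enters the context, which is the contrast with loading every type definition upfront.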

Code Mode vs LangChain/LangGraph

LangChain and LangGraph provide comprehensive agent frameworks with graph-based orchestration, memory management, and extensive tool integrations. They use traditional tool-calling approaches by default. Code Mode is narrower in scope, focusing specifically on the tool invocation paradigm rather than providing a full agent framework. The approaches can be complementary, with Code Mode providing the execution layer within a LangGraph workflow.

Code Mode vs AutoGen

AutoGen specializes in multi-agent collaboration where multiple AI agents coordinate on tasks with human-in-the-loop support. Code Mode focuses on single-agent tool orchestration efficiency rather than multi-agent coordination. For complex workflows requiring multiple specialized agents, AutoGen provides capabilities Code Mode does not address, while Code Mode can optimize individual agent tool interactions.

Code Mode vs UTCP Direct Calling

UTCP (Universal Tool Calling Protocol) provides direct API access without MCP server overhead. Code Mode can work with UTCP tools, combining direct API access with the code execution paradigm. Using UTCP tools through Code Mode provides both the protocol efficiency of UTCP and the orchestration efficiency of code-based tool calling.

Technical Implementation

Code Mode is available through several implementation paths:

The Cloudflare Agents SDK provides native Code Mode support. Developers can wrap existing tool definitions with the codemode helper to enable code-based tool invocation:

    import { codemode } from "agents/codemode/ai";

    const { system, tools } = codemode({
      system: "You are a helpful assistant",
      tools: { /* tool definitions */ },
    });

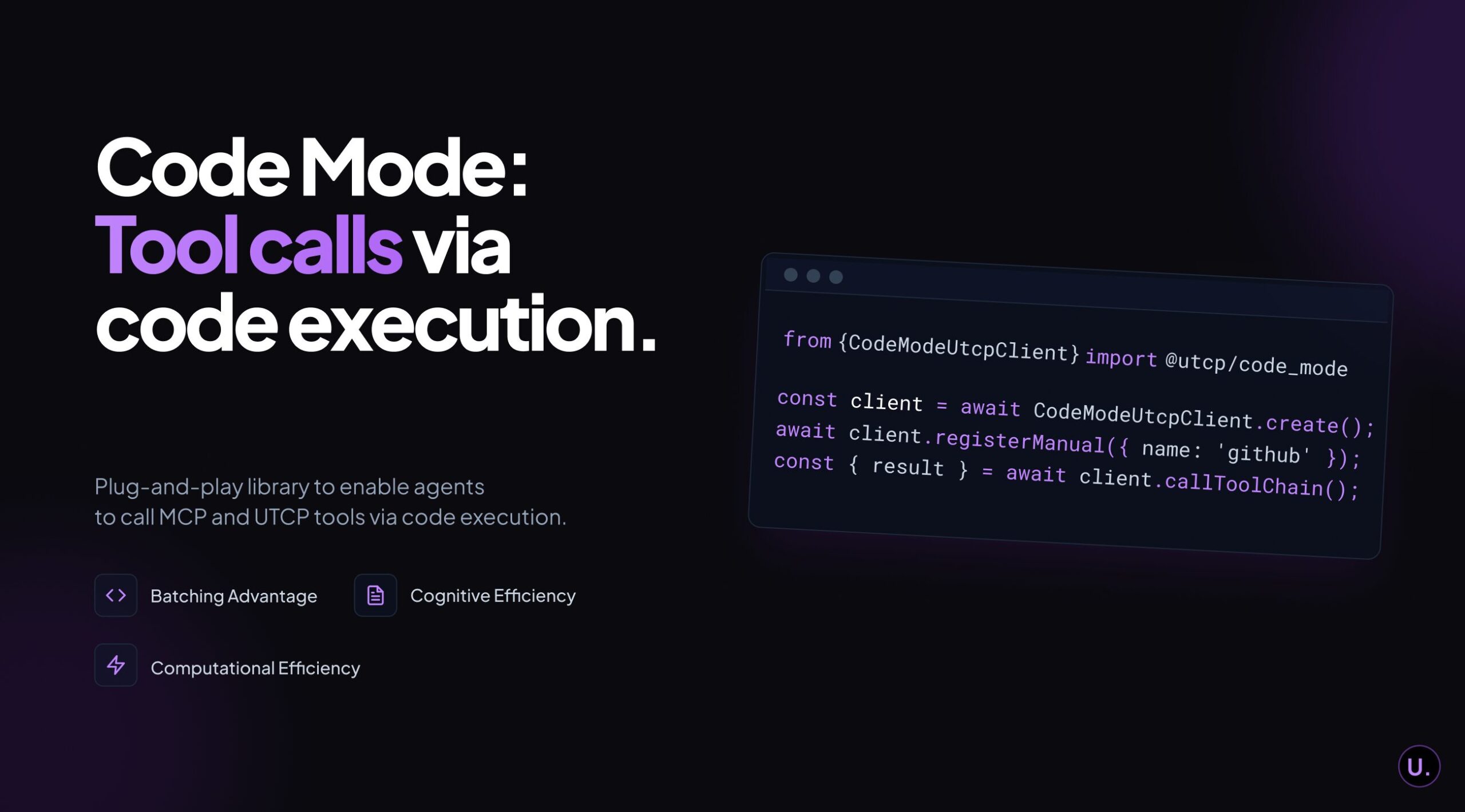

The universal-tool-calling-protocol/code-mode GitHub repository provides a plug-and-play library for enabling agents to call MCP and UTCP tools via code execution. Python, TypeScript, and Go implementations are available.

For local development, the Dynamic Worker Loader API is fully available when developing with Wrangler and workerd. Production deployment requires signing up for the beta program.

Important Considerations

Before adopting Code Mode, teams should evaluate several factors:

Benchmark your specific use case rather than relying on published efficiency claims. Performance varies significantly based on task complexity, number of tools involved, and data volumes. Independent testing showed that optimized traditional approaches (like file reference passing) achieved better efficiency than Code Mode in certain data analysis scenarios.

Consider your team’s debugging capabilities. When LLM-generated code fails mid-execution across multiple tool calls, diagnosis requires developer expertise and appropriate tooling.
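One piece of tooling that can help is a tracing wrapper around the tool bindings, so that a failed run shows which call broke and with what arguments. The sketch below assumes synchronous stub tools for brevity; `traceTools`, `TraceEntry`, and the two tools are hypothetical names.

```typescript
type ToolFn = (...args: unknown[]) => unknown;

interface TraceEntry {
  tool: string;
  args: unknown[];
  ok: boolean;
  detail: string; // JSON result on success, error message on failure
}

// Wrap every tool so each call is recorded before its result or error
// reaches the generated script.
function traceTools<T extends Record<string, ToolFn>>(tools: T, trace: TraceEntry[]): T {
  const wrapped: Record<string, ToolFn> = {};
  for (const [name, original] of Object.entries(tools)) {
    wrapped[name] = (...args: unknown[]) => {
      try {
        const result = original(...args);
        trace.push({ tool: name, args, ok: true, detail: JSON.stringify(result) ?? "undefined" });
        return result;
      } catch (error) {
        const detail = error instanceof Error ? error.message : String(error);
        trace.push({ tool: name, args, ok: false, detail });
        throw error;
      }
    };
  }
  return wrapped as T;
}

const trace: TraceEntry[] = [];
const tracedTools = traceTools(
  {
    lookupUser: (id) => ({ id, name: "Ada" }),
    chargeCard: (_user, _amountUsd) => {
      throw new Error("card declined");
    },
  },
  trace,
);

// Stands in for a generated script that fails on its second tool call.
let failure = "";
try {
  const user = tracedTools.lookupUser(7);
  tracedTools.chargeCard(user, 25);
} catch (error) {
  failure = error instanceof Error ? error.message : String(error);
}
console.log(failure);
console.log(trace.map((e) => `${e.tool}:${e.ok ? "ok" : "failed"}`).join(" -> "));
```

The trace separates the three failure sources named earlier: a tool that threw, a tool that returned unexpected data, and script logic that misused a correct result.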

Evaluate sandbox infrastructure requirements. Cloudflare Workers provide the most streamlined path for Code Mode deployment, but alternative sandbox implementations require additional operational considerations.

Final Thoughts

Code Mode represents an important evolution in AI agent tool orchestration, founded on a compelling insight validated by both Cloudflare and Anthropic: LLMs are better at writing code than using synthetic tool-calling formats. By shifting orchestration responsibility from conversational back-and-forth to single code executions, it unlocks potential efficiency gains for complex multi-tool workflows.

However, the approach is not universally superior to traditional methods. Independent benchmarks demonstrate that results vary significantly by use case, with some scenarios showing worse performance than optimized conventional approaches. Teams should evaluate Code Mode against their specific requirements rather than assuming efficiency gains will transfer to their workflows.

For developers building sophisticated AI agents that orchestrate numerous tools across multi-step workflows, Code Mode offers a fundamentally different paradigm worth serious consideration. The security model, progressive tool loading capabilities, and alignment with LLM training characteristics provide compelling advantages for the right use cases. As the ecosystem matures and best practices emerge, Code Mode may become an increasingly standard component of production AI agent architectures.