Overview

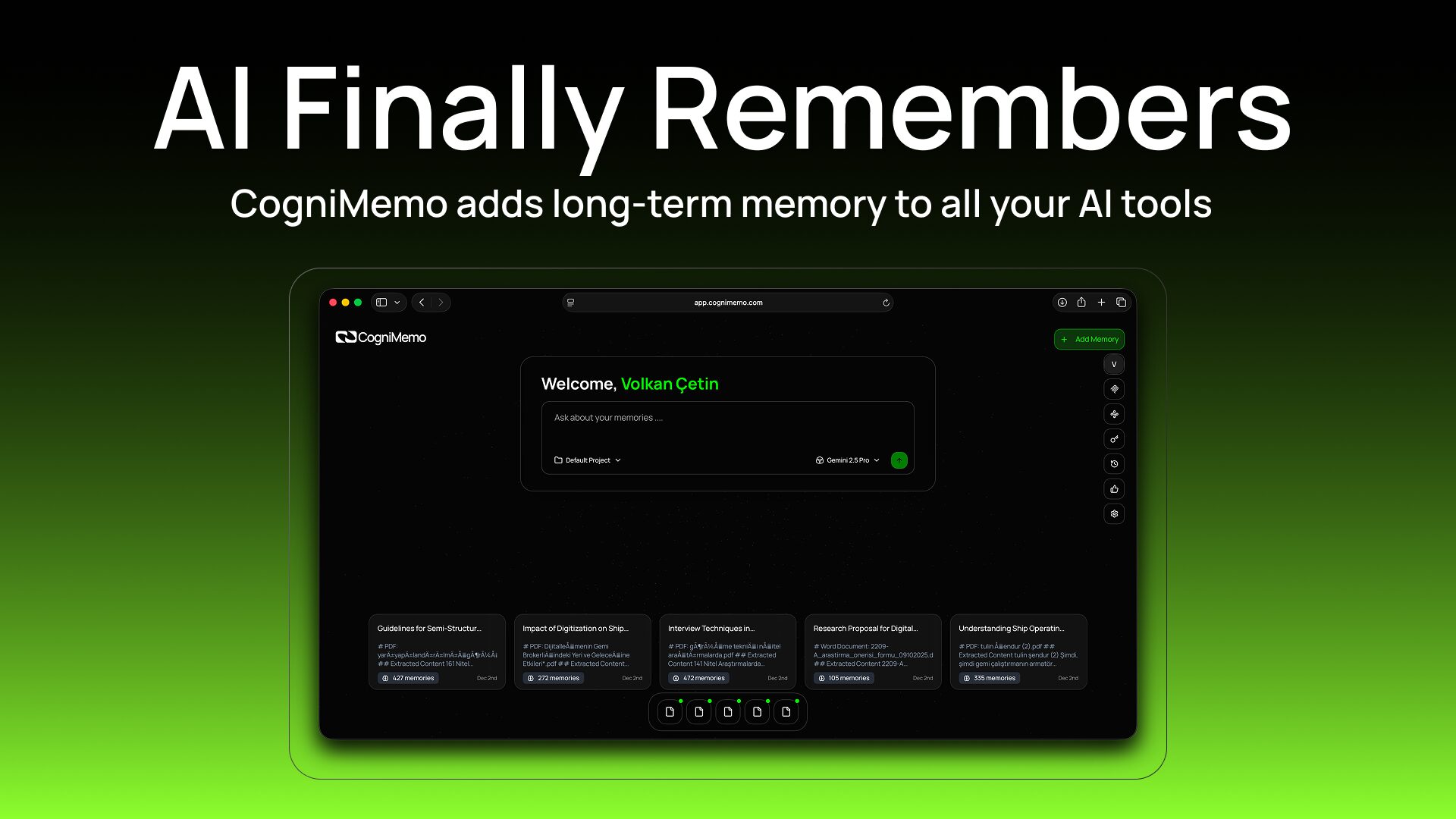

Imagine an AI that truly remembers you: not just for the current conversation, but across sessions, understanding your preferences, recalling past tasks, and learning from every interaction. This isn’t science fiction anymore. CogniMemo is here to equip your AI applications with persistent, context-aware memory, making them smarter, more personalized, and more effective. Launched on Product Hunt on December 5, 2025 (124 upvotes and 5 comments, ranking #11 for the day), CogniMemo addresses a fundamental limitation of current AI assistants: LLMs are stateless and forget everything between conversations. As a result, users must re-provide context repeatedly, computational resources are wasted re-processing it, and experiences never become more personalized over time.

Built on a hybrid memory architecture that combines vector databases for semantic search, key-value stores for fast lookups, and relational links for recognizing patterns, CogniMemo offers API-first integration that lets developers add persistent memory to an AI application within minutes. The platform is designed to work with any LLM (OpenAI, Anthropic Claude, Google Gemini, Mistral, or local models via Ollama) through unified adapters, integrates with productivity tools (Notion, Slack, Google Drive) for automatic memory synchronization, and connects to storage backends (Pinecone, Weaviate, PostgreSQL, Redis) for scalable storage. Trusted by 2000+ developers during its beta, CogniMemo aims to turn stateless AI into systems that keep learning from each user interaction. Say goodbye to stateless AI and hello to truly intelligent, evolving digital companions.

Key Features

- Persistent Context-Aware Memory Across Sessions: CogniMemo lets your AI remember users, their preferences, past tasks, decisions, and entire conversation histories, providing continuous and personalized experiences. Unlike a context window, which is limited to the current conversation (and ranges from a few thousand to over a million tokens, depending on the model), CogniMemo stores information persistently in external memory that remains accessible in all future interactions. The system maintains user-level memories (preferences, traits, and history unique to an individual), session-level memories (context specific to a particular conversation thread), and agent-level memories (patterns and knowledge accumulated by the AI system itself). This multi-level approach lets the AI recall details from interactions days, weeks, or months earlier, eliminating the repetitive reintroductions and re-established context that frustrate users and waste computational resources.

- Simple API Integration with Zero Infrastructure Management: Forget complex setup and infrastructure management. CogniMemo offers a single, straightforward API that integrates into existing AI applications without requiring developers to build vector databases, manage embeddings, or configure storage infrastructure. The platform provides REST API endpoints for standard HTTP integration, a LangChain integration for that popular AI framework, and language-specific SDKs (Python, JavaScript, and others) that let developers add memory capabilities with a few lines of code. A typical integration initializes a client with an API key, adds memories during interactions, and searches or retrieves them when needed: roughly 5 to 10 lines of code in total, versus the hundreds or thousands a self-hosted memory solution can require.

- User and Session Tracking for Tailored Interactions: Track individual users and their distinct sessions to tailor interactions and better understand user behavior. The system assigns a unique identifier to each user to isolate memories (User A’s memories never leak to User B), maintains separate conversation threads within a user account for topic-specific context, and correlates memories over time, enabling temporal reasoning about changing preferences, evolving behavior, and developing relationships. Session tracking gives granular control over memory scope: developers can scope memories to specific conversations, time periods, or interaction contexts so that retrieval returns relevant information without flooding the model with unrelated history.

- LLM-Agnostic Universal Compatibility: Works with a wide range of large language models, including OpenAI (GPT-4, GPT-3.5), Anthropic (Claude Sonnet, Opus, and Haiku), Google (Gemini Pro/Ultra), Mistral models, and local models (Ollama, Hugging Face models). Unified adapters abstract away LLM-specific differences in API formats, prompting patterns, and response structures. Developers can switch LLM providers without rewriting memory integration code, because the same CogniMemo API calls work regardless of the underlying model. This reduces vendor lock-in and makes it easier to experiment with different models while keeping memory behavior consistent.

- Learns from Every Interaction with Adaptive Memory: The more your AI interacts, the smarter it becomes. CogniMemo continuously adapts as new information arrives, refining the AI’s understanding over time. The system uses intelligent memory extraction to identify salient information worth storing (user preferences, important facts, task outcomes, relationship details), automatic memory updates to refine existing knowledge as new information arrives (updating stale facts, resolving contradictions, consolidating redundant information), and pattern recognition to discover behavioral trends, preference clusters, and predictive insights in accumulated interaction history. Unlike static knowledge bases that require manual curation, this adaptive approach is designed to make memory more accurate and useful with continued use.

- Hybrid Memory Architecture Combining Vector Search and Relational Links: CogniMemo uses a hybrid datastore that combines vector embeddings for semantic similarity search, key-value stores for fast direct lookups, and graph databases that capture relational connections between memories. Each type of information is stored in the structure best suited to it: vector search finds conceptually similar memories even when exact keywords differ, key-value stores provide fast access to specific user attributes or facts, and graph databases record how memories connect, influence each other, and form knowledge networks. The hybrid design aims to deliver both speed (critical for real-time applications) and contextual understanding beyond simple keyword matching.

- Privacy-First Design with Encrypted Storage: Security and privacy are addressed through encrypted data storage, role-based access controls that limit who can view or modify memories, and sandboxed execution that prevents unauthorized access. The platform implements encryption at rest for stored memories, encryption in transit for API communications, and granular permissions over which users, applications, or agents can access specific memory scopes. For enterprise customers, CogniMemo provides compliance-oriented features, including audit logs that track all memory operations, data residency controls that specify geographic storage locations, and retention policies that automate memory lifecycle management according to regulatory requirements.

- Developer-Ready API with Production-Scale Infrastructure: Integrate in minutes using simple SDKs, webhooks for event-driven architectures, and REST endpoints built for scale. The platform provides documentation with code examples, tutorials for common integration patterns, and an API reference covering all endpoints. Production features include rate limiting to prevent abuse, automatic retries for transient failures, webhook support for asynchronous memory updates, and monitoring dashboards showing memory usage, query performance, and system health. The infrastructure scales automatically to absorb traffic spikes without manual intervention or capacity planning.

- MCP (Model Context Protocol) Integration: CogniMemo supports the Model Context Protocol, enabling integration with AI-powered code editors and development tools including Cursor, Claude Desktop, VS Code, Windsurf, and Zed. MCP provides a standardized interface through which AI tools access external context and memory, so developers can build persistent memory layers that work across their entire AI toolkit. Example scenarios include maintaining coding context across editor sessions, remembering project-specific patterns and preferences, and sharing learned knowledge between different AI assistants working on the same project.

- Memory Visualization and Relationship Mapping: The platform provides visual interfaces showing how memories connect, relate, and form knowledge graphs. Developers and end-users can browse memory networks, see why particular memories were retrieved in a given context, and identify gaps or inconsistencies in stored knowledge. This transparency builds trust in AI systems by making memory operations explainable rather than a black box.
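To make the hybrid architecture and per-user isolation described above more concrete, here is a toy, in-process sketch. It is not CogniMemo’s implementation or API: the class and method names are hypothetical, and the two-dimensional embeddings are hand-written stand-ins for the vectors a real embedding model would produce.

```python
import math
from collections import defaultdict


class HybridMemoryStore:
    """Toy store combining key-value, vector, and graph layers.

    Hypothetical illustration only; not CogniMemo's implementation.
    """

    def __init__(self):
        # Key-value layer: (user_id, key) -> value, for fast exact lookups.
        self.facts = {}
        # Vector layer: user_id -> list of (memory_id, text, embedding).
        self.vectors = defaultdict(list)
        # Graph layer: memory_id -> set of related memory_ids.
        self.links = defaultdict(set)

    def set_fact(self, user_id, key, value):
        self.facts[(user_id, key)] = value

    def get_fact(self, user_id, key):
        return self.facts.get((user_id, key))

    def add_memory(self, user_id, memory_id, text, embedding):
        self.vectors[user_id].append((memory_id, text, embedding))

    def link(self, a, b):
        # Undirected relation between two memories.
        self.links[a].add(b)
        self.links[b].add(a)

    def search(self, user_id, query_embedding, k=3):
        # Only this user's vectors are scanned, so results never cross users.
        def cosine(u, v):
            dot = sum(x * y for x, y in zip(u, v))
            norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
            return dot / norm if norm else 0.0

        scored = [
            (cosine(query_embedding, emb), mid, text)
            for mid, text, emb in self.vectors[user_id]
        ]
        scored.sort(reverse=True)  # highest similarity first
        return scored[:k]

    def related(self, memory_id):
        return sorted(self.links[memory_id])
```

For example, after storing "Prefers window seats" for one user and "Prefers aisle seats" for another, a search scoped to the first user can only ever return the first user’s memory, which is the isolation property described under User and Session Tracking.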

How It Works

Implementing long-term memory for your AI is now remarkably simple through CogniMemo’s streamlined architecture:

Step 1: Developer API Integration

Developers integrate the CogniMemo API directly into their AI application using the provided SDKs (Python, JavaScript, and others) or plain REST HTTP requests. A basic integration involves installing the SDK with a package manager (pip, npm), initializing a client with an API key obtained from the CogniMemo dashboard, and adding memory operations at the appropriate points in the application flow (after user interactions, when facts are learned, during decision-making). A basic implementation can often be completed in under 30 minutes.
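Because this review does not document CogniMemo’s actual SDK calls, the sketch below shows the same flow over plain HTTP using only Python’s standard library. The base URL, the `/memories` path, the Bearer-token header, and the payload field names are all hypothetical placeholders; the real ones come from CogniMemo’s API reference. Building the request separately from sending it lets you inspect it without network access.

```python
import json
import urllib.request

# Hypothetical placeholders: the real base URL, endpoint paths, auth scheme,
# and payload fields must be taken from CogniMemo's own API reference.
BASE_URL = "https://api.example.com/v1"
API_KEY = "YOUR_API_KEY"  # placeholder for a key from the provider dashboard


def build_add_memory_request(user_id, content, session_id=None, metadata=None):
    """Build (but do not send) a POST request that stores one memory."""
    payload = {"user_id": user_id, "content": content}
    if session_id is not None:
        payload["session_id"] = session_id
    if metadata:
        payload["metadata"] = metadata
    return urllib.request.Request(
        url=f"{BASE_URL}/memories",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=10):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

In an application, `send(build_add_memory_request("alice", "Prefers dark mode", session_id="s1"))` would run after each interaction worth remembering; a vendor SDK would typically wrap the same steps behind a client object.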

Step 2: Memory Storage During Interactions

As users interact with your AI application, CogniMemo’s API stores conversation history and key user details. The system performs automatic memory extraction to identify information worth persisting (stated preferences, completed tasks, decisions made, personal details revealed), generates semantic embeddings (vector representations that enable similarity search), and tags each memory with metadata such as timestamps, user IDs, session identifiers, and custom application-specific tags. Developers control what gets stored through API parameters that specify the memory content, the associated user and session, and optional metadata that improves retrieval.
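A rough sketch of this storage step, assuming simple regular-expression heuristics for extraction and a feature-hashed bag-of-words vector in place of a real embedding model. All names here (`MemoryRecord`, `extract_salient`, `store_interaction`) are hypothetical; CogniMemo’s actual extraction logic is not documented in this review, and production systems commonly use an LLM to decide what is worth remembering.

```python
import hashlib
import re
import time
from dataclasses import dataclass, field

# Hypothetical heuristics standing in for LLM-based salience detection.
SALIENT_PATTERNS = [
    re.compile(r"\bI (?:prefer|like|love|hate|use)\b.*", re.IGNORECASE),
    re.compile(r"\bmy (?:name|email|timezone|budget) is\b.*", re.IGNORECASE),
]

DIM = 64  # size of the toy embedding vector


@dataclass
class MemoryRecord:
    text: str
    user_id: str
    session_id: str
    embedding: list
    created_at: float = field(default_factory=time.time)  # timestamp metadata
    tags: list = field(default_factory=list)  # application-specific tags


def extract_salient(message):
    """Return the sentences of a message that match a salient pattern."""
    sentences = re.split(r"(?<=[.!?])\s+", message.strip())
    return [s for s in sentences if any(p.search(s) for p in SALIENT_PATTERNS)]


def embed(text):
    """Feature-hashed bag of words: a stand-in for a real embedding model."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # hashlib (not built-in hash()) keeps buckets stable across processes.
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    return vec


def store_interaction(message, user_id, session_id, tags=()):
    """Extract salient sentences and wrap each as a tagged, embedded record."""
    return [
        MemoryRecord(s, user_id, session_id, embed(s), tags=list(tags))
        for s in extract_salient(message)
    ]
```

Given the message "Hi there. I prefer vegetarian food. What's the weather?", only the middle sentence is kept as a memory; the greeting and the question are discarded.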

Step 3: Intelligent Memory Retrieval

When a user queries the AI again (in the same session or days or weeks later), CogniMemo retrieves the most relevant past context. Retrieval involves semantic search, which finds memories conceptually related to the current query even without exact keyword matches; relevance scoring, which ranks memories by importance, recency, and relatedness so the most useful information comes first; and context assembly, which packages the retrieved memories in a format suited to the target LLM’s input. According to the product’s claims, retrieval typically completes in under 100 milliseconds, fast enough for real-time conversation.
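The relevance scoring described above (relatedness, recency, importance) can be sketched as a weighted sum. The weights, the one-week half-life, and the dictionary fields (`embedding`, `created_at`, `importance`) are illustrative assumptions, not CogniMemo’s published scoring formula.

```python
import math
import time

# Hypothetical weights and half-life; a real service would tune or learn these.
W_SIMILARITY, W_RECENCY, W_IMPORTANCE = 0.6, 0.25, 0.15
HALF_LIFE_SECONDS = 7 * 24 * 3600  # recency score halves every week


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def recency(created_at, now):
    """Exponential decay: 1.0 for a brand-new memory, 0.5 after one half-life."""
    age = max(0.0, now - created_at)
    return 0.5 ** (age / HALF_LIFE_SECONDS)


def score(memory, query_embedding, now):
    """Weighted combination of relatedness, recency, and importance."""
    return (
        W_SIMILARITY * cosine(memory["embedding"], query_embedding)
        + W_RECENCY * recency(memory["created_at"], now)
        + W_IMPORTANCE * memory.get("importance", 0.0)
    )


def retrieve(memories, query_embedding, k=3, now=None):
    """Return the k highest-scoring memories, best first."""
    now = time.time() if now is None else now
    ranked = sorted(memories, key=lambda m: score(m, query_embedding, now), reverse=True)
    return ranked[:k]
```

With these weights, a four-week-old memory that matches the query closely still outranks a brand-new but unrelated one; raising `W_RECENCY` shifts that balance toward freshness.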

Step 4: LLM Prompt Augmentation

Retrieved memories are injected into LLM prompts, providing historical context without developers having to manage conversation histories manually. CogniMemo formats the retrieved information for the target LLM, including relevant past interactions, learned user preferences, and contextual facts that enable personalized responses. It handles the prompt-engineering details (memory formatting, token budget management, relevance filtering) automatically, so application developers do not have to.
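Token-budgeted prompt assembly, one of the details the text says CogniMemo handles automatically, might look like the following sketch. It assumes memories arrive already ranked by relevance (as described in Step 3), approximates tokens by counting whitespace-separated words, and uses the common role/content chat-message format; none of this reflects CogniMemo’s actual formatting.

```python
def approx_tokens(text):
    # Crude estimate: real counts depend on the target model's tokenizer.
    return len(text.split())


def build_prompt(user_message, ranked_memories, budget_tokens=50):
    """Pack the best-ranked memories that fit the budget ahead of the user turn."""
    selected, used = [], 0
    for memory in ranked_memories:  # assumed sorted best-first
        cost = approx_tokens(memory)
        if used + cost > budget_tokens:
            continue  # skip it; a shorter, lower-ranked memory may still fit
        selected.append(memory)
        used += cost
    context = "\n".join(f"- {m}" for m in selected)
    system = "Relevant things you remember about this user:\n" + (context or "- (none)")
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_message},
    ]
```

The greedy "skip and continue" choice favors filling the budget over strict rank order; stopping at the first memory that does not fit would be the stricter alternative.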

Step 5: Continuous Learning and Memory Updates

As conversations progress, CogniMemo continuously refines stored knowledge through memory updates, which modify existing facts when new information supersedes old (changed preferences, updated contact information, revised goals); memory consolidation, which merges redundant or related memories to reduce storage overhead while preserving information; and memory decay, which gradually reduces the weight of outdated information so that recent interactions influence behavior more than old ones. Over time, this keeps the stored memory an increasingly accurate representation of each user.
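The three mechanisms above (updates that supersede old facts, consolidation of repeats, and decay) can be illustrated with a small keyed fact store. This is a hypothetical sketch: the reinforcement step of 0.2, the 0.98-per-day decay factor, and the rule of ignoring out-of-order updates are assumptions chosen for clarity, not CogniMemo’s documented behavior.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    value: str
    updated_at: float
    weight: float = 1.0
    history: list = field(default_factory=list)  # superseded (value, time) pairs


class FactMemory:
    """Hypothetical update, consolidation, and decay logic for keyed facts."""

    def __init__(self, decay_per_day=0.98):
        self.decay_per_day = decay_per_day
        self.facts = {}  # (user_id, key) -> Fact

    def upsert(self, user_id, key, value, at):
        current = self.facts.get((user_id, key))
        if current is None:
            self.facts[(user_id, key)] = Fact(value, at)
        elif at >= current.updated_at:
            if value == current.value:
                # Consolidation: a repeated statement reinforces, not duplicates.
                current.weight = min(1.0, current.weight + 0.2)
            else:
                # Update: keep the old value in history and adopt the new one.
                current.history.append((current.value, current.updated_at))
                current.value = value
                current.weight = 1.0
            current.updated_at = at
        # Otherwise the incoming information is older and already superseded.

    def decayed_weight(self, user_id, key, now):
        """Decay: weight shrinks geometrically with days since last update."""
        fact = self.facts[(user_id, key)]
        days = max(0.0, (now - fact.updated_at) / 86400)
        return fact.weight * self.decay_per_day ** days
```

Keeping superseded values in `history` rather than deleting them preserves the old state for auditing or temporal questions ("where did this user live last year?") while retrieval uses only the current value.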

Step 6: Cross-Application Memory Sharing (Optional)

Organizations can make memories accessible across multiple applications, creating unified user profiles that span different products. A user’s preferences learned in a mobile app become available to the web application, the chat support system remembers issues reported by email, and a personal AI assistant has access to task history from productivity tools, all without duplicate memory storage or synchronization overhead.
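A minimal sketch of cross-application sharing, assuming every product refers to a user by the same canonical ID and that access is granted per application. `SharedMemory` and its read/write permission model are hypothetical, loosely echoing the role-based access controls mentioned under Key Features.

```python
class SharedMemory:
    """Hypothetical org-wide store: one profile per user, shared by granted apps."""

    def __init__(self):
        self.profiles = {}  # canonical user_id -> {key: value}
        self.grants = {}    # app_id -> set of permissions, e.g. {"read", "write"}

    def grant(self, app_id, *permissions):
        self.grants.setdefault(app_id, set()).update(permissions)

    def _check(self, app_id, permission):
        if permission not in self.grants.get(app_id, set()):
            raise PermissionError(f"{app_id} lacks {permission} access")

    def write(self, app_id, user_id, key, value):
        self._check(app_id, "write")
        self.profiles.setdefault(user_id, {})[key] = value

    def read(self, app_id, user_id):
        self._check(app_id, "read")
        return dict(self.profiles.get(user_id, {}))  # copy, so callers can't mutate
```

In this model a mobile app granted read and write access can record `theme = "dark"`, and a web app granted only read access sees the same value immediately, because both read one shared profile rather than synchronized copies.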

Use Cases

Given its specialized persistent memory capabilities, CogniMemo addresses various scenarios where stateless AI creates user experience problems:

Personalized AI Assistants That Truly Know Users:

- Shopping assistants that learn style preferences, size information, budget constraints, and purchase history to provide increasingly relevant product recommendations

- Coaching applications that track fitness progress, dietary preferences, exercise tolerance, and motivational factors to personalize guidance over weeks or months

- Daily task managers that learn work patterns, priority preferences, delegation habits, and productivity rhythms to adapt to individual workflows

- Financial advisors that remember investment goals, risk tolerance, life circumstances, and past decisions to provide contextually appropriate recommendations

Smarter Customer Support with Historical Context:

- Support bots that recognize returning users and recall past issues, previous troubleshooting attempts, account specifics, and interaction preferences

- Faster resolution by avoiding repetitive information gathering: the system already knows product versions, configuration details, and solutions already attempted

- Proactive assistance that identifies patterns across support interactions (recurring issues, feature confusion, upgrade candidates) to enable preventive outreach

- Personalized communication that adapts tone, level of detail, and explanation style to the user’s technical sophistication and communication preferences learned over time

Engaging RPG Character Memory for Immersive Gaming:

- Game characters that remember player actions, dialogue choices, quest decisions, and relationship development, creating persistent, responsive NPCs

- Dynamic storylines that evolve with accumulated player history, with branching narratives reflecting the long-term consequences of past choices

- Relationship systems that track reputation, trust levels, favors owed, and interpersonal dynamics with each NPC, creating rich social gameplay

- A persistent world state in which NPCs recall events the player caused, locations visited, items traded, and alliances formed, maintaining continuity across gaming sessions

Seamless Long Chat Sessions Maintaining Coherence:

- Research assistants that maintain context across extended analysis sessions (multi-day projects, evolving research questions, accumulated findings)

- Collaborative writing tools that remember story details, character backgrounds, plot threads, and stylistic preferences across multiple editing sessions

- Educational tutors that track concept mastery, learning pace, areas of difficulty, and the effectiveness of teaching methods, adapting instruction over semester-long courses

- Therapeutic AI that maintains relationship context, progress notes, coping strategies discussed, and emotional patterns identified across many sessions

Enterprise Knowledge Management and Institutional Memory:

- Corporate AI assistants that build organizational knowledge graphs capturing decisions made, their documented rationale, lessons learned, and best practices identified

- Sales tools that remember client interaction history, deal stages, stakeholder preferences, and competitive intelligence across long sales cycles

- Legal research assistants that track case precedents researched, arguments developed, and legal strategies across months-long litigation

- Engineering documentation systems that maintain context about architecture decisions, technical debt, and implementation details as projects evolve

Pros & Cons

Advantages

- Ease of Implementation Without Infrastructure Overhead: CogniMemo simplifies development by removing the need to build and manage vector databases, configure embedding models, design memory storage schemas, implement retrieval logic, or handle scaling infrastructure. Developers add persistent memory with a few lines of code instead of spending weeks or months building an equivalent self-hosted system. This substantially reduces time to market for AI applications that need memory capabilities.

- LLM Provider Agnostic, Maximizing Flexibility: Use your preferred LLM, whether from a leading cloud provider (OpenAI, Anthropic, Google Gemini, Mistral) or a locally hosted model (Ollama, Hugging Face), without vendor lock-in. Thanks to the unified adapter layer, switching LLM providers requires no changes to memory integration code: the same CogniMemo API calls work regardless of the underlying model. This flexibility protects applications against shifts in the LLM market and allows experimentation without rewriting memory systems.

- Beta Adoption by 2000+ Developers: Use by 2000+ developers during beta testing offers some real-world validation beyond theoretical capabilities. This early community adoption suggests that CogniMemo works in actual applications rather than only under controlled conditions, which reduces, though does not eliminate, adoption risk for new users.

- Hybrid Memory Architecture Combining Speed and Intelligence: The combination of vector search (semantic understanding), key-value stores (fast direct access), and graph databases (relational reasoning) aims to provide both performance and sophistication. Applications get retrieval fast enough for real-time conversation while still finding relevant information when queries don’t match stored content exactly.

- Adaptive Learning for Self-Improving Systems: Unlike static knowledge bases that require manual curation, CogniMemo continuously refines stored knowledge, so it becomes a more accurate representation of users, more effective at retrieving relevant context, and better at personalizing experiences. As a result, the system’s value should grow with usage as memory quality compounds over time.

- Comprehensive Integration Ecosystem: Support for a REST API, LangChain, the Model Context Protocol, productivity tool syncing (Notion, Slack, Google Drive), and storage backends (Pinecone, Weaviate, PostgreSQL, Redis) lets CogniMemo fit diverse technology stacks and use cases without forcing architectural compromises.

Disadvantages

- Third-Party Dependency for Critical User Data: User memories and interaction histories live in a third-party service (CogniMemo), raising concerns about service availability, data portability, and vendor dependency. Organizations with strict data sovereignty requirements, or concerns about external dependencies, may hesitate to adopt a managed memory service instead of a self-hosted alternative despite its convenience.

- Potential Privacy Considerations for Sensitive Applications: As with any tool that handles user data, privacy implications and security measures need careful review, especially for sensitive information (healthcare data, financial records, legal matters, personally identifiable information). Although CogniMemo implements encryption and access controls, organizations in highly regulated industries may need additional due diligence on data handling practices, compliance certifications, and audit capabilities before adopting it.

- Pricing Structure Not Publicly Disclosed: Because CogniMemo is a recently launched product (December 2025), its pricing tiers, usage limits, and cost structure remain unclear. Without transparent pricing, developers cannot accurately forecast expenses or compare costs against self-hosted alternatives, which may slow adoption among cost-conscious organizations that need budget approval before evaluation.

- Early-Stage Product with Limited Track Record: The December 2025 Product Hunt launch indicates a very recent market entry, with no extensive production track record beyond beta testing with 2000+ developers. Early adopters face risks including undiscovered bugs, evolving APIs that may require migration effort, feature gaps that surface with broader usage, and possible service discontinuation if the business does not prove commercially viable.

- Requires Internet Connectivity for Memory Operations: As a cloud-based service, CogniMemo requires network connectivity for memory storage and retrieval. Applications that need offline functionality, or that run in connectivity-constrained environments, cannot use CogniMemo while offline. Self-hosted alternatives such as Mem0 (open source) may better suit offline-first use cases, at the cost of managing infrastructure.

- Potential Latency for Real-Time Applications: However well optimized, external API calls add network latency that can affect user experience in latency-sensitive applications (real-time conversation, interactive gaming, millisecond-critical financial trading). Applications that require sub-10 ms response times may find the API round trip unacceptable compared with local in-memory solutions, despite CogniMemo’s caching and optimization strategies.
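Client-side caching is one common way to hide part of that round-trip cost for repeated lookups. The sketch below wraps a hypothetical remote lookup (`fetch`) in a time-to-live cache; it is a generic pattern, not a description of CogniMemo’s own caching, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time


class TTLCache:
    """Client-side cache that serves repeat lookups without a network round trip."""

    def __init__(self, fetch, ttl_seconds=30.0, clock=time.monotonic):
        self.fetch = fetch       # hypothetical remote call, e.g. a memory search
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}       # key -> (expires_at, value)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]      # fresh hit: no network call
        value = self.fetch(key)  # miss or expired: pay the round trip
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key):
        # Call after writing a memory so the next read is not stale.
        self._entries.pop(key, None)
```

The tradeoff is staleness: within the TTL window a cached read can miss a memory written moments ago, which is why the sketch exposes `invalidate()` for the application’s own writes.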

How Does It Compare?

CogniMemo vs. Mem0

Mem0 (YC-backed, founded 2023) is an open-source memory layer for AI applications providing hybrid datastore architecture combining vector, key-value, and graph stores.

Deployment Model:

- CogniMemo: Managed cloud service with API-first integration; no infrastructure management required

- Mem0: Open-source solution that can be self-hosted (requiring infrastructure setup, configuration, and maintenance); also offers a managed cloud option

Architecture:

- CogniMemo: Hybrid memory (vectors + relational links); proprietary implementation

- Mem0: Hybrid datastore (vector + key-value + graph); open architecture documented in research papers

Ease of Use:

- CogniMemo: “No infra” approach with simple API integration in minutes; fully managed service

- Mem0: Self-hosting involves setting up backing stores (Qdrant/Pinecone for vectors, Neo4j for graph, Redis for key-value); more complex initial setup

Performance Benchmarks:

- CogniMemo: Performance claims not publicly benchmarked as of December 2025 launch

- Mem0: Reports a 26% relative improvement over an OpenAI baseline on the LoCoMo benchmark; performance documented in published research

Community and Adoption:

- CogniMemo: 2000+ developers during beta; recently launched (Dec 2025)

- Mem0: Large open-source community; 10,000+ GitHub stars; extensive documentation and examples

Pricing:

- CogniMemo: Pricing not publicly disclosed; likely subscription-based SaaS

- Mem0: Open-source (free self-hosted); managed cloud option with usage-based pricing

LLM Support:

- CogniMemo: Works with any LLM (OpenAI, Anthropic, Gemini, Mistral, Ollama)

- Mem0: LLM-agnostic; documented integrations with major providers

When to Choose CogniMemo: When you want a fully managed solution without infrastructure overhead, when speed of implementation matters more than cost optimization, or when avoiding DevOps burden is a priority.

When to Choose Mem0: When you prefer open source, want self-hosted control over data, want to optimize cost by managing your own infrastructure, or when published benchmark results reduce adoption risk.

CogniMemo vs. Zep

Zep is an AI memory platform that builds temporal knowledge graphs from user interactions and business data, and reports 94.8% accuracy on the Deep Memory Retrieval (DMR) benchmark.

Memory Architecture:

- CogniMemo: Hybrid memory with vectors, relational links, and pattern recognition

- Zep: Temporal knowledge graph (Graphiti engine) with bi-temporal tracking (event timeline + system timeline)

Temporal Reasoning:

- CogniMemo: Learns from interactions; adaptive memory updates over time

- Zep: Explicit temporal reasoning tracking when facts were valid, when they changed, and maintaining historical context

Performance Benchmarks:

- CogniMemo: No published benchmarks as of December 2025 launch

- Zep: 94.8% DMR benchmark accuracy (vs. MemGPT’s 93.4%); accuracy improvements of up to 18.5% on LongMemEval; roughly 90% latency reduction

Knowledge Graph:

- CogniMemo: Relational links between memories; visualization of memory networks

- Zep: Hierarchical knowledge graph with episode subgraphs (conversational data), semantic entity subgraphs, and community subgraphs (entity clusters)

Enterprise Focus:

- CogniMemo: Developer-focused with productivity tool integrations (Notion, Slack, Google Drive)

- Zep: Enterprise-focused with structured business data integration; validated on complex enterprise use cases

Latency:

- CogniMemo: Claims fast retrieval; specific metrics not publicly disclosed

- Zep: 90% latency reduction vs. full-context methods; about 1.6k context tokens per response vs. roughly 115k for full-context approaches

Pricing:

- CogniMemo: Not publicly disclosed

- Zep: Self-hosted open-source version available; managed cloud service with enterprise pricing

When to Choose CogniMemo: For simpler integration without knowledge-graph complexity, or when you need MCP support and productivity tool syncing.

When to Choose Zep: For enterprise applications that require temporal reasoning, when published benchmark results reduce risk, or when sophisticated knowledge graph capabilities justify the added complexity.

CogniMemo vs. Supermemory

Supermemory is a long-term memory platform that adds persistent, self-growing memory to AI applications and offers a free tier.

Positioning:

- CogniMemo: Universal AI memory layer for any application; API-first developer tool

- Supermemory: Memory engine + consumer app; both developer API and end-user product

Data Ingestion:

- CogniMemo: Stores memories from AI interactions; integrates with Notion/Slack/Drive

- Supermemory: Automatically ingests and processes data at scale in any format; extensive connector ecosystem

Features:

- CogniMemo: Persistent memory, context-aware retrieval, adaptive learning, memory visualization

- Supermemory: Memory API, automatic ingestion, semantic search, multimodality support, advanced filtering, reranking, query rewriting

Scalability:

- CogniMemo: Scalability claims without specific metrics

- Supermemory: Linear scaling; “incredibly fast and scalable” with affordable pricing

Pricing:

- CogniMemo: Not publicly disclosed; likely subscription-based

- Supermemory: Free tier available; affordable paid plans; transparent pricing model

Consumer vs. Developer:

- CogniMemo: Pure developer API/SDK offering; no end-user app

- Supermemory: Dual offering with both Memory API for developers and consumer “second brain” application

AI SDK Integration:

- CogniMemo: LangChain, MCP protocol, REST API, language SDKs

- Supermemory: Official Vercel AI SDK provider; memory router with direct LLM integration

When to Choose CogniMemo: For a pure API solution without a consumer app, when MCP support is required, or for LangChain ecosystem integration.

When to Choose Supermemory: To test on a free tier before committing, when transparent pricing matters, if a consumer second-brain app adds value alongside the API, or when Vercel AI SDK integration is a priority.

CogniMemo vs. Self-Hosted Solutions (Building Your Own Memory)

Self-hosted solutions involve developers building custom memory systems using vector databases (Pinecone, Weaviate, Qdrant), embedding models, and retrieval logic.

Development Time:

- CogniMemo: Minutes to integrate with a few lines of code

- Self-Hosted: Weeks to months building infrastructure, implementing retrieval logic, handling edge cases

Infrastructure Management:

- CogniMemo: Zero infrastructure management; fully managed service

- Self-Hosted: Requires database setup, scaling, monitoring, backup, security, updates

Cost Structure:

- CogniMemo: Subscription fees (amount undisclosed); predictable operational expenses

- Self-Hosted: Infrastructure costs (databases, compute, storage) plus developer time; variable based on scale

Customization:

- CogniMemo: Limited to provided API capabilities; feature requests depend on vendor roadmap

- Self-Hosted: Complete control over memory architecture, retrieval algorithms, data models, and feature set

Data Control:

- CogniMemo: Data stored in third-party service; requires trust in vendor security and privacy

- Self-Hosted: Complete data sovereignty; data never leaves organizational infrastructure

Maintenance:

- CogniMemo: Automatic updates, scaling, optimization handled by vendor

- Self-Hosted: Ongoing maintenance burden including updates, performance tuning, bug fixes

Time to Market:

- CogniMemo: Immediate; integrate and deploy within hours

- Self-Hosted: Delayed; 4-12 weeks typical development time before production-ready

When to Choose CogniMemo: For rapid prototyping, when developer time costs more than subscription fees, or when infrastructure management overhead is unacceptable.

When to Choose Self-Hosted: For maximum customization control, strict data sovereignty requirements, cost optimization at scale, or when vendor lock-in concerns outweigh convenience.

Final Thoughts

CogniMemo is a meaningful step toward making persistent AI memory accessible to developers without deep expertise in vector databases, embedding models, or memory architecture design. Its December 2025 Product Hunt launch (124 upvotes, ranking #11 for the day) suggests market interest in managed memory services that address a fundamental limitation: stateless LLMs forget everything between conversations, which prevents personalized AI experiences that improve over time.

What distinguishes CogniMemo from the alternatives is its “no infrastructure” approach, which prioritizes developer experience and rapid integration over architectural flexibility. Open-source solutions like Mem0 offer more control and published benchmark results, and specialized platforms like Zep offer sophisticated temporal knowledge graphs with published enterprise benchmarks. CogniMemo’s managed model instead removes weeks or months of setup and ongoing infrastructure management, letting developers add persistent memory in minutes rather than months.

The platform particularly excels for:

- Startups and small teams that lack the DevOps resources to manage self-hosted memory infrastructure and prefer subscription costs to infrastructure overhead

- Rapid prototyping scenarios where speed to market matters more than cost optimization and architectural control

- Non-technical founders building AI products without deep ML or database expertise who need turnkey solutions

- Developers exploring memory capabilities who want to experiment before committing to self-hosted infrastructure

- Applications requiring MCP protocol for integration with AI-powered development tools (Cursor, Claude Desktop, VS Code, Windsurf, Zed)

For organizations that require published performance benchmarks, Zep’s 94.8% DMR accuracy and up to 18.5% LongMemEval improvement provide measurable evidence. For cost-conscious teams or those prioritizing data sovereignty, Mem0’s open-source option enables self-hosting with community support and a transparent architecture. For teams with DevOps capacity that want maximum customization, building a custom solution on Pinecone, Weaviate, or Qdrant provides complete control at the cost of development overhead.

But for the specific intersection of “managed service,” “rapid integration,” and “LLM-agnostic memory,” CogniMemo offers capabilities that can save meaningful development time and infrastructure complexity. The platform’s primary limitations (third-party dependency for critical data, undisclosed pricing that creates budget uncertainty, early-stage maturity with a limited track record, and managed-only deployment that rules out self-hosting) reflect the expected constraints of a recently launched managed service that prioritizes ease of use over flexibility.

The key strategic question for developers isn’t whether AI applications need persistent memory (the limitations of stateless models make a strong case for it wherever personalization matters), but whether to build their own, adopt open source, or subscribe to a managed service. CogniMemo’s value proposition centers on eliminating infrastructure management overhead, which is worthwhile for organizations where developer time costs more than subscription fees and faster time to market justifies the tradeoff of vendor dependency.

If your AI application frustrates users by making them re-establish context repeatedly, if building memory infrastructure diverts engineering resources from core product development, if rapid prototyping requires production-ready memory within days rather than months, or if MCP integration matters for your development tool ecosystem, CogniMemo is an accessible solution worth evaluating. The 2000+ developer beta and the Product Hunt launch suggest an engaged team and a growing ecosystem, despite its early-stage status.

For early adopters who accept the managed-service tradeoffs (vendor dependency, pricing uncertainty, limited customization), CogniMemo offers persistent AI memory without infrastructure complexity, aiming to turn stateless conversations into continuous relationships in which the AI remembers, learns, and evolves with every interaction.