Table of Contents

- Cowork: Comprehensive Research Report

- 1. Executive Snapshot

- 2. Impact & Evidence

- 3. Technical Blueprint

- 4. Trust & Governance

- 5. Unique Capabilities

- 6. Adoption Pathways

- 7. Use Case Portfolio

- 8. Balanced Analysis

- 9. Transparent Pricing

- 10. Market Positioning

- 11. Leadership Profile

- 12. Community & Endorsements

- 13. Strategic Outlook

- Final Thoughts

Cowork: Comprehensive Research Report

1. Executive Snapshot

Core Offering Overview

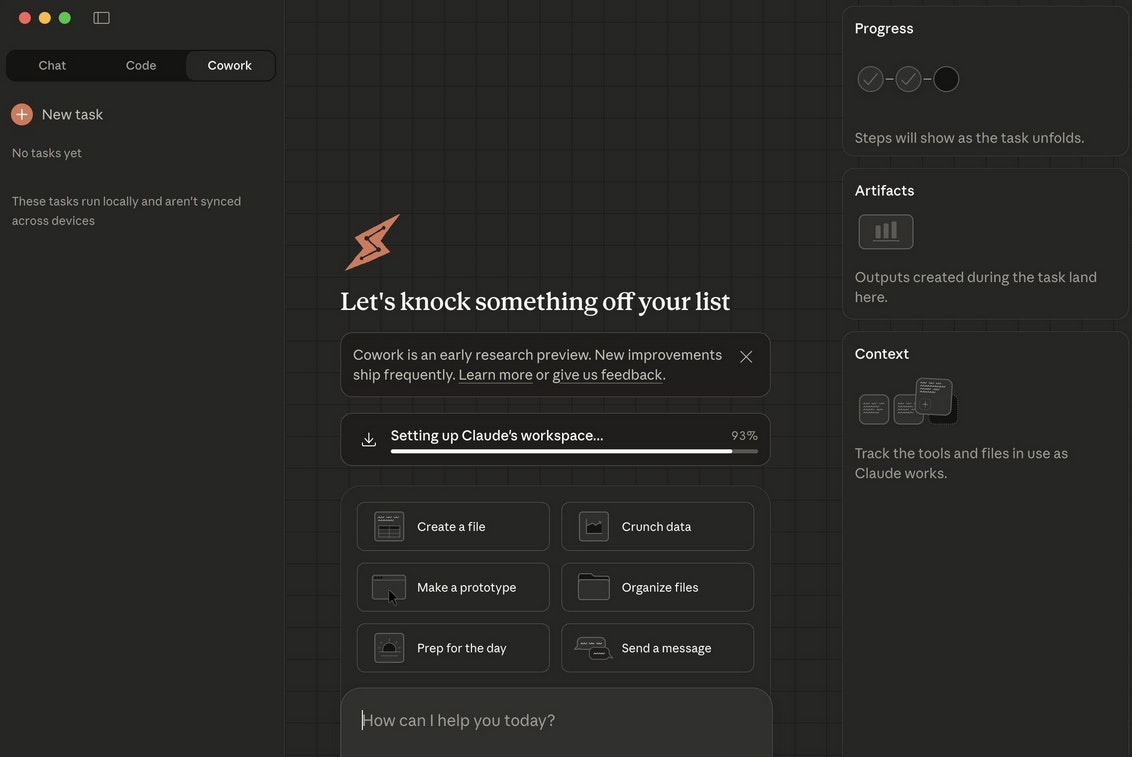

Cowork represents Anthropic’s strategic expansion of autonomous AI capabilities beyond software development into general knowledge work. Launched as a research preview on January 12, 2026, Cowork applies the same agentic architecture that powers Claude Code (which grew from research preview to billion-dollar product in six months) to non-coding workflows such as document creation, file organization, data processing, and research synthesis. Instead of answering one prompt at a time, Claude plans and carries out multi-step tasks with a meaningful degree of independence, which changes how users collaborate with it.

Built into the Claude Desktop application for macOS, Cowork grants Claude controlled access to user-designated folders where it can read, modify, create, and organize files without continuous manual intervention. Users describe desired outcomes through natural language instructions, then step away while Claude develops plans, executes multi-step workflows, and provides progress updates. This shift from conversational back-and-forth to asynchronous task delegation mirrors working relationships with human colleagues, compressing workflows that traditionally require hours of manual effort into minutes of AI-driven execution.

The platform operates on a sandboxed architecture where users explicitly define folder boundaries and connector permissions before Claude initiates work. This permission model balances autonomy with control: Claude executes complex workflows independently while requesting approval before potentially destructive actions like file deletion or major structural changes. The system leverages sub-agent coordination to decompose ambitious objectives into manageable subtasks, then parallelizes execution where dependencies permit, accelerating completion times compared to sequential processing.

Cowork extends Claude’s capabilities with an initial skills library for document creation, presentation generation, and spreadsheet building. These skills let Claude produce professional outputs, including Excel workbooks with working formulas, PowerPoint presentations with consistent formatting, and structured Word documents, without requiring separate office software. Existing Claude connectors provide access to external information sources, and compatibility with Claude in Chrome enables workflows that need browser interaction, such as web research and data extraction.

Key Achievements & Milestones

Anthropic’s announcement of Cowork coincides with significant organizational evolution reflecting the company’s confidence in agentic AI’s transformative potential. The company formalized Labs—an internal incubator focused on experimental products at the frontier of Claude’s capabilities—as a dedicated team tasked with rapid prototyping and iterative refinement based on user feedback. Mike Krieger, Instagram co-founder and Anthropic’s Chief Product Officer for two years, transitioned from executive leadership to co-lead Labs alongside Ben Mann, signaling hands-on commitment to building frontier experiences rather than traditional C-suite oversight.

Krieger’s credentials underscore Anthropic’s ambition: he scaled Instagram from startup to over eight hundred million monthly users, grew its engineering team to four hundred fifty members within Facebook, and later co-founded Artifact, a news recommendation platform acquired by Yahoo. His move from CPO to Member of Technical Staff prioritizes building over managing, reflecting a view that the pace of AI advancement demands organizational structures built for rapid experimentation. Ami Vora, who joined Anthropic in late 2025, now leads the Product organization, scaling reliable Claude experiences for millions of daily users while Labs explores frontier capabilities.

This dual-track approach, with Labs incubating experimental products while Core Product scales proven experiences, reflects lessons from Claude Code’s trajectory. Released as a research preview in February 2025, Claude Code demonstrated market appetite for agentic AI by reaching billion-dollar status within six months of general availability. The Model Context Protocol, another Labs innovation, reached one hundred million monthly downloads and emerged as an industry standard for connecting AI to tools and data. Cowork’s launch as a research preview follows the same pattern: early release to gather real-world usage data, rapid iteration based on feedback, and progressive expansion as capabilities mature.

Remarkably, Anthropic disclosed that much of Cowork’s implementation involved AI assistance, with substantial portions built in under two weeks through what industry observers termed “vibe-coding”—leveraging AI to accelerate development cycles. This meta-application of autonomous agents to build autonomous agents demonstrates confidence in the technology’s practical utility while showcasing Anthropic’s willingness to dogfood experimental capabilities internally before public release.

Adoption Statistics

Quantitative adoption metrics remain limited given Cowork’s January 2026 launch and research preview status. The service currently restricts access to Claude Max subscribers (Anthropic’s premium tier, priced at one hundred dollars monthly for Max 5x or two hundred dollars monthly for Max 20x), and the requirement for the macOS Desktop application creates an additional barrier to entry. Users on Pro, Team, and Enterprise plans can join a waitlist for future access, though expansion timelines remain undisclosed. This gated availability lets Anthropic control user growth while its technical teams iterate on safety mechanisms, performance optimizations, and cross-platform support.

The absence of free trials and standalone Cowork subscriptions appears to be deliberate friction that prioritizes quality over quantity during early stages. Prospective users must commit to full Max plan pricing without hands-on evaluation, relying instead on video demonstrations, community discussions, and published documentation to judge the value. This approach likely filters for serious adopters willing to invest substantially in cutting-edge capabilities, while reducing the support burden from casual experimenters unlikely to provide actionable feedback.

Early community reception reveals enthusiasm tempered by pragmatic concerns. Technical practitioners praise workflow automation potential, particularly for tedious file organization and document generation tasks consuming disproportionate time relative to value. Security researchers identified prompt injection vulnerabilities within days of launch, demonstrating both community engagement and the immature security posture characteristic of research previews. Users note higher token consumption compared to standard Claude conversations, with Cowork sessions potentially exhausting Max plan allowances faster than anticipated during intensive workflows.

macOS exclusivity frustrates Windows and Linux users, who make up a substantial share of the potential audience. Anthropic has promised cross-platform expansion without specific timelines, treating macOS as an initial deployment that allows rapid iteration before tackling the complexities of other platforms. The likely strategic calculus favors macOS’s robust virtualization frameworks and predictable security model over immediate market coverage, accepting a temporarily smaller addressable market in exchange for technical foundations that support safer, more reliable agent execution.

2. Impact & Evidence

Client Success Stories

Formal case studies and detailed customer testimonials remain sparse given Cowork’s recent launch and research preview status. However, early adopter accounts and demonstration materials reveal compelling use case patterns. File organization is low-hanging fruit where Cowork delivers immediate value: users report that chaotic downloads folders with hundreds of unsorted files become structured hierarchies within minutes. Claude analyzes file types, extracts metadata, identifies logical groupings, creates appropriate folder structures, and performs bulk renaming and relocation, compressing work that would take hours of manual attention into minutes of autonomous execution.
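The plan-then-execute pattern described above can be sketched in Python. This is a minimal illustration, not Cowork’s implementation: it sorts files by extension only (no metadata extraction), the category mapping is a hypothetical example, and moves are computed as a reviewable plan before anything is touched, mirroring the approval step Cowork requests before major structural changes.

```python
import shutil
from pathlib import Path

# Hypothetical mapping from file extension to destination folder name.
CATEGORIES = {
    ".pdf": "Documents", ".docx": "Documents", ".txt": "Documents",
    ".jpg": "Images", ".jpeg": "Images", ".png": "Images",
    ".xlsx": "Spreadsheets", ".csv": "Spreadsheets",
}

def plan_moves(folder: Path) -> list[tuple[Path, Path]]:
    """Return (source, destination) pairs without touching the filesystem."""
    moves = []
    for item in sorted(folder.iterdir()):
        if not item.is_file():
            continue  # leave existing subfolders alone
        category = CATEGORIES.get(item.suffix.lower(), "Other")
        moves.append((item, folder / category / item.name))
    return moves

def apply_moves(moves: list[tuple[Path, Path]]) -> None:
    """Execute a previously reviewed plan, creating folders as needed."""
    for src, dst in moves:
        dst.parent.mkdir(parents=True, exist_ok=True)
        if dst.exists():
            raise FileExistsError(dst)  # never overwrite an existing file silently
        shutil.move(str(src), str(dst))
```

Printing the plan returned by plan_moves before calling apply_moves gives the user a chance to veto changes, and refusing to overwrite keeps a name collision from silently destroying data.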

Expense reporting automation demonstrates data extraction and document creation capabilities. Users upload receipt screenshots to designated folders, instruct Cowork to compile expense reports, and receive Excel spreadsheets with extracted transaction details, calculated totals, and formatted layouts suitable for accounting system imports. This workflow eliminates repetitive manual data entry while providing audit trails through preserved original receipts. Early users emphasize time savings measured in hours per expense cycle, translating to meaningful productivity gains for professionals managing frequent business travel.
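Once transaction details have been extracted from the receipt images, the remaining work is straightforward aggregation. The sketch below assumes the extraction step has already produced a list of records (the sample data is invented) and writes CSV rather than a native Excel workbook so that it needs only the Python standard library; CSV files open in Excel and import into most accounting systems.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Hypothetical records, as an extraction step might produce them from receipt images.
receipts = [
    {"date": "2026-01-05", "vendor": "Taxi Co", "category": "Travel", "amount": "23.50"},
    {"date": "2026-01-05", "vendor": "Hotel Inn", "category": "Lodging", "amount": "189.00"},
    {"date": "2026-01-06", "vendor": "Cafe", "category": "Meals", "amount": "12.75"},
    {"date": "2026-01-06", "vendor": "Taxi Co", "category": "Travel", "amount": "18.25"},
]

def summarize(rows):
    """Total amounts per category, using Decimal to avoid float rounding errors."""
    totals = defaultdict(Decimal)
    for row in rows:
        totals[row["category"]] += Decimal(row["amount"])
    return dict(totals)

def to_csv(rows, totals) -> str:
    """Render line items, then per-category and grand totals, as CSV text."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["date", "vendor", "category", "amount"])
    for row in rows:
        writer.writerow([row["date"], row["vendor"], row["category"], row["amount"]])
    writer.writerow([])  # blank separator row before the totals
    for category, total in sorted(totals.items()):
        writer.writerow(["", "", f"Total {category}", f"{total:.2f}"])
    writer.writerow(["", "", "Grand total", f"{sum(totals.values()):.2f}"])
    return out.getvalue()
```

Keeping the original receipt files alongside the generated CSV preserves the audit trail mentioned above.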

Report generation from scattered research notes showcases synthesis capabilities. Academics and consultants accumulate fragmented findings across multiple documents, PDFs, and text files throughout research phases. Cowork ingests these distributed sources, identifies thematic connections, structures coherent narratives, and produces polished drafts integrating citations and maintaining consistent terminology. While outputs require human review and refinement, the heavy lifting of organizing and articulating preliminary drafts compresses dramatically compared to manual composition from blank pages.

Marketing professionals leverage Cowork for batch content processing including email draft generation, social media response templates, and campaign material variations. By providing brand guidelines, reference materials, and desired output specifications, marketers receive consistent first drafts requiring minimal editing rather than starting from scratch. This workflow particularly benefits small teams lacking dedicated copywriting resources, democratizing professional content creation through AI augmentation.

Technical users familiar with Claude Code report distinct usage patterns: Claude Code handles software development workflows while Cowork manages adjacent knowledge work, including documentation generation, project organization, and stakeholder communication. This division of labor mirrors human team structures in which specialized roles collaborate on interconnected deliverables. Moving between Claude Code’s terminal environment and Cowork’s visual interface lets users match the tool to the task while limiting context switching.

Performance Metrics & Benchmarks

Quantitative performance benchmarks remain unpublished in official materials, consistent with research preview status prioritizing qualitative feedback over formal SLA commitments. User reports suggest task completion times varying dramatically based on workflow complexity, file volumes, and external dependency latency. Simple file organization operations complete within minutes, medium-complexity document generation requires ten to thirty minutes depending on source material volume, and sophisticated multi-step workflows involving external data sources may extend to hours.

Token consumption patterns differ significantly from standard conversational interactions. Cowork’s agentic architecture requires Claude to maintain execution context across extended workflows, monitor progress across parallel subtasks, and generate intermediate planning artifacts guiding execution. These overhead costs translate to higher per-task token usage compared to equivalent conversational exchanges. Users report Max 5x allowances—approximately two hundred twenty-five messages per five-hour window—depleting faster during intensive Cowork sessions, with some workflows consuming credits equivalent to dozens of conventional chat messages.
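A rough capacity estimate makes the depletion concern concrete. The helper below treats usage as a count of message equivalents, which is a simplification: actual limits vary with message length, model, and attached files. The 225-message figure comes from the paragraph above; the per-task cost of thirty message equivalents used in the usage note is a hypothetical planning value, not a measured one.

```python
def tasks_per_window(window_messages: int, message_equiv_per_task: int,
                     chat_messages_reserved: int = 0) -> int:
    """Estimate how many Cowork tasks fit in one usage window.

    Inputs are planning assumptions measured in "message equivalents";
    real consumption varies with message length, model, and attachments.
    """
    if message_equiv_per_task <= 0:
        raise ValueError("message_equiv_per_task must be positive")
    remaining = max(window_messages - chat_messages_reserved, 0)
    return remaining // message_equiv_per_task
```

Under those assumptions, tasks_per_window(225, 30) returns 7, and reserving 45 messages for ordinary chat, tasks_per_window(225, 30, 45), leaves room for 6 tasks.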

The sub-agent coordination architecture that enables parallel task execution delivers performance advantages for workflows with independent subtasks. When instructed to process multiple documents, Cowork spawns coordinated sub-agents that handle files concurrently rather than sequentially, reducing total execution time roughly in proportion to the available parallelism. However, this sophistication introduces coordination overhead and new failure modes in which sub-agent errors require manual intervention to resolve.
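The fan-out pattern behind this kind of parallelism can be illustrated with Python’s concurrent.futures. This is a generic sketch, not Cowork’s sub-agent mechanism: process_document is a hypothetical stand-in for a sub-agent’s work on one file, and threads are used on the assumption that the real work would be dominated by waiting on model calls or file I/O. Failures are collected rather than raised, so one bad file is surfaced for manual follow-up instead of aborting the whole batch.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def process_document(name: str) -> str:
    """Hypothetical stand-in for a sub-agent's work on one file."""
    if name.endswith(".corrupt"):
        raise ValueError(f"cannot parse {name}")
    return f"summary of {name}"

def fan_out(names: list[str], max_workers: int = 4):
    """Run independent per-file tasks concurrently; collect results and failures separately."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(process_document, n): n for n in names}
        for future in as_completed(futures):  # yields each future as it finishes
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as exc:  # one failed sub-task should not sink the batch
                failures[name] = str(exc)
    return results, failures
```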

Session persistence requirements create workflow constraints: users must keep Claude Desktop application open throughout task execution, with application closure terminating sessions and abandoning incomplete work. This architectural limitation stems from Cowork’s current implementation relying on active application state rather than server-side job persistence. Long-running workflows spanning hours risk interruption from unexpected application crashes, system restarts, or accidental closures, necessitating proactive saving of intermediate results and resumption strategies for complex operations.
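Because sessions do not persist server-side, long workflows benefit from a checkpoint-and-resume discipline. The helper below is a minimal sketch of that pattern in plain Python, not a Cowork feature; the step names and checkpoint file are hypothetical. Each completed step is recorded in a JSON file, written via a temporary file and os.replace, so a rerun after a crash skips work that already finished.

```python
import json
import os
from pathlib import Path

def run_with_checkpoint(steps, checkpoint: Path) -> list[str]:
    """Run (name, action) steps in order, skipping any already recorded as done."""
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, action in steps:
        if name in done:
            continue  # finished during an earlier, interrupted run
        action()
        done.append(name)
        tmp = checkpoint.with_name(checkpoint.name + ".tmp")
        tmp.write_text(json.dumps(done))
        os.replace(tmp, checkpoint)  # rename over the old file so readers never see a partial write
    return done
```

The same idea applies when instructing Cowork itself: asking Claude to save each intermediate result to its own file makes an interrupted session easier to resume.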

The absence of memory across sessions means Claude approaches each Cowork invocation fresh without accumulated context from prior interactions. Users cannot build on previous work through conversational continuity, instead providing complete context and instructions per session. This stateless design simplifies security and reduces system complexity but imposes repetition burdens for iterative workflows requiring multiple refinement cycles.

Third-Party Validations

Independent security assessments provide the most substantive third-party validation available during Cowork’s early days. PromptArmor, a security research firm specializing in AI vulnerability analysis, identified prompt injection attack vectors within days of launch. Their research demonstrated that malicious actors could embed hidden instructions in seemingly benign files—using techniques like single-point font sizes, white text on white backgrounds, and minimal line spacing to conceal prompts from human review—that Cowork would execute when processing these files.
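A simple pre-flight scan can catch some of these concealment tricks before a file reaches Cowork. The sketch below uses only the Python standard library: it opens a .docx (a ZIP archive) and flags text runs in word/document.xml whose direct formatting makes them tiny, white, or hidden. It is a heuristic under stated assumptions: it ignores styles, headers, and text boxes, does not detect the minimal-line-spacing technique, and a clean result does not prove a file is safe. The 2-point threshold is an arbitrary choice.

```python
import xml.etree.ElementTree as ET
import zipfile

# WordprocessingML namespace used in word/document.xml
W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"

def find_hidden_runs(docx, max_half_points: int = 4) -> list[str]:
    """Return text from runs formatted as tiny, white, or hidden.

    docx may be a path or a binary file object. Font sizes (w:sz) are stored
    in half-points, so the default threshold of 4 flags text of 2pt or smaller.
    """
    with zipfile.ZipFile(docx) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))
    suspicious = []
    for run in root.iter(f"{W}r"):
        rpr = run.find(f"{W}rPr")
        if rpr is None:
            continue  # no direct formatting on this run
        size = rpr.find(f"{W}sz")
        color = rpr.find(f"{W}color")
        vanish = rpr.find(f"{W}vanish")
        sz = size.get(f"{W}val", "") if size is not None else ""
        tiny = sz.isdigit() and int(sz) <= max_half_points
        white = color is not None and color.get(f"{W}val", "").upper() == "FFFFFF"
        hidden = vanish is not None and vanish.get(f"{W}val", "true") not in ("false", "0")
        if tiny or white or hidden:
            text = "".join(t.text or "" for t in run.iter(f"{W}t"))
            if text.strip():
                suspicious.append(text)
    return suspicious
```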

The discovered attack pattern exploits Cowork’s trust in Anthropic’s API as an approved external service. While Cowork operates in sandboxed environments restricting most network access, the Anthropic API remains accessible for legitimate functionality. Attackers craft prompts instructing Claude to upload sensitive files to attacker-controlled accounts using the file upload API with attacker-provided credentials. This exfiltration mechanism bypasses sandbox protections by leveraging Claude’s legitimate API access for malicious purposes.
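The weakness can be illustrated with a toy egress check. Both policies below are hypothetical and do not describe Cowork’s actual sandbox; the allowlist contents, URL path, and key values are placeholders. A rule that inspects only the destination host approves an upload to the allowed API domain no matter whose credentials the request carries, while a rule that also requires the session’s own credential would reject an attacker-supplied key.

```python
import hmac
from urllib.parse import urlparse

ALLOWED_HOSTS = {"api.anthropic.com"}  # hypothetical sandbox allowlist

def host_only_policy(url: str) -> bool:
    """Approve any request whose destination host is allowlisted."""
    return urlparse(url).hostname in ALLOWED_HOSTS

def credential_aware_policy(url: str, request_key: str, session_key: str) -> bool:
    """Also require the request to carry the credential issued to this session."""
    # compare_digest avoids leaking key contents through timing differences
    return host_only_policy(url) and hmac.compare_digest(request_key, session_key)
```

In this toy model, host_only_policy approves the attacker’s upload because the host matches, which is precisely the gap the attack exploits.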

PromptArmor also documented denial-of-service vulnerabilities in which files with incorrect extensions trigger repeated API errors, consuming system resources and degrading performance. When PromptArmor reported these findings, Anthropic acknowledged the vulnerabilities but declined immediate fixes, citing the research preview status and existing user warnings about prompt injection risks. Anthropic’s response emphasizes that agent safety remains an active development area across the industry, with sophisticated defenses in place but no claim of complete protection against adversarial inputs.
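Checking that a file’s leading bytes match its extension is one inexpensive pre-flight check against the mismatched-extension problem. This is a generic sketch, not an Anthropic-provided safeguard, and the signature table covers only a few common formats.

```python
from pathlib import Path
from typing import Optional

# Leading "magic" bytes for a few common formats (not exhaustive).
MAGIC = {
    ".pdf": b"%PDF-",
    ".png": b"\x89PNG\r\n\x1a\n",
    ".jpg": b"\xff\xd8\xff",
    ".jpeg": b"\xff\xd8\xff",
    ".docx": b"PK\x03\x04",  # Office Open XML files are ZIP archives
    ".xlsx": b"PK\x03\x04",
    ".pptx": b"PK\x03\x04",
}

def extension_matches(path: Path) -> Optional[bool]:
    """True/False if the file signature matches its extension; None if the extension is unknown."""
    expected = MAGIC.get(path.suffix.lower())
    if expected is None:
        return None  # no signature on record for this extension
    with path.open("rb") as fh:
        return fh.read(len(expected)) == expected
```

Files that return False can be set aside for inspection rather than handed to an agent that may fail on them repeatedly.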

Technology journalists and industry analysts provided mixed early assessments. TechCrunch positioned Cowork as a more accessible alternative to Claude Code, lowering technical barriers while maintaining powerful autonomous capabilities. Engadget highlighted pricing, noting that even subscribers on the twenty-dollar-per-month Pro plan cannot access Cowork without upgrading to a one-hundred-dollar Max plan, a five-fold cost increase that could limit adoption among budget-conscious users.

Simon Willison, a developer and prominent writer on AI security who coined the term “prompt injection,” emphasized it as an ever-present threat requiring user vigilance. His analysis noted that until high-profile security incidents occur, persuading users to take these risks seriously is difficult. Willison disclosed his own risky Claude Code habits, including the --dangerously-skip-permissions flag, which could enable havoc if a malicious injection succeeded, acknowledging the normalization of deviance among early adopters who prioritize capability over security.

Industry comparisons position Cowork within the broader autonomous agent trend gaining momentum throughout 2025 and into 2026. Competitors including GitHub Copilot Workspace, Replit Agent, and Cursor target developer workflows, while Cowork deliberately expands addressable markets to knowledge workers without coding expertise. This positioning differentiates Anthropic’s strategy from competitors focusing exclusively on software development, recognizing that autonomous task execution value extends far beyond code generation.

3. Technical Blueprint

System Architecture Overview

Cowork’s technical foundation rests on the Claude Agent SDK, the same infrastructure powering Claude Code’s autonomous coding capabilities. This architectural reuse accelerates development while ensuring consistency across Anthropic’s agentic product portfolio. The SDK provides primitives for task decomposition, dependency tracking, progress monitoring, approval workflows, and state management—capabilities essential for reliable autonomous operation regardless of specific task domains.

The execution environment operates within sandboxed virtualization leveraging macOS’s security frameworks. When users grant Cowork access to specific folders, the system establishes isolated environments where Claude can interact with designated files without broader system access. This sandboxing approach balances autonomy with containment: Claude manipulates files freely within approved boundaries while unable to access system directories, personal folders, or network resources outside explicit permissions. The architecture mirrors principle-of-least-privilege security models where capabilities grant only minimum necessary access for legitimate functions.
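Cowork’s isolation is enforced at the operating-system and virtualization level, but the same least-privilege idea can be shown at application level with a path containment check. The sketch below is an illustration under that assumption, not Cowork’s mechanism; resolve_inside is a hypothetical helper that maps a requested path onto the approved folder and refuses anything that escapes it.

```python
from pathlib import Path

def resolve_inside(root: Path, requested: str) -> Path:
    """Map a requested path onto an approved folder, refusing anything outside it.

    resolve() normalizes '..' segments and follows existing symlinks, so
    'reports/../../secret.txt', an absolute path such as '/etc/passwd', or a
    symlink pointing out of the folder all resolve to locations outside root.
    """
    base = root.resolve()
    target = (base / requested).resolve()
    if not target.is_relative_to(base):  # Path.is_relative_to needs Python 3.9+
        raise PermissionError(f"{requested!r} is outside the approved folder")
    return target
```

Checking the resolved path, rather than the raw string, is what closes the usual traversal tricks.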

Sub-agent coordination represents Cowork’s most sophisticated architectural element. Complex workflows decompose into discrete subtasks assigned to specialized sub-agents operating quasi-independently under orchestrator supervision. An expense report generation workflow might spawn sub-agents for image processing (extracting text from receipt photos), data validation (checking amount formats and currency codes), spreadsheet generation (creating formatted Excel files), and quality assurance (verifying formula correctness). These sub-agents execute in parallel where dependencies permit, with the orchestrator managing handoffs, resolving conflicts, and assembling final outputs.
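Dependency-aware parallel scheduling of this kind can be sketched with Python’s graphlib.TopologicalSorter and a thread pool. The sketch makes assumptions the source does not state: each sub-agent is modeled as a plain function that receives its upstream results, and the receipt task names in the example are hypothetical. Independent tasks (the two OCR steps) start together, and each dependent task starts as soon as its inputs are ready.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

def run_dag(tasks, deps, max_workers=4):
    """Run each task once its dependencies have finished; independent tasks overlap.

    tasks: name -> callable receiving a dict of its upstream results
    deps:  name -> set of task names it depends on (tasks with none may be omitted)
    """
    sorter = TopologicalSorter(deps)
    for name in tasks:
        sorter.add(name)  # register tasks that appear in no dependency relation
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    results, running = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            for name in sorter.get_ready():
                upstream = {d: results[d] for d in deps.get(name, ())}
                running[pool.submit(tasks[name], upstream)] = name
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in finished:
                name = running.pop(future)
                results[name] = future.result()  # re-raises a failed task's exception
                sorter.done(name)
    return results

if __name__ == "__main__":
    tasks = {
        "ocr_receipt_1": lambda up: 23.50,
        "ocr_receipt_2": lambda up: 189.00,
        "validate": lambda up: [v for v in up.values() if v > 0],
        "build_sheet": lambda up: {"rows": len(up["validate"]), "total": sum(up["validate"])},
        "qa_check": lambda up: up["build_sheet"]["rows"] == 2,
    }
    deps = {
        "validate": {"ocr_receipt_1", "ocr_receipt_2"},  # waits for both OCR sub-agents
        "build_sheet": {"validate"},
        "qa_check": {"build_sheet"},
    }
    print(run_dag(tasks, deps)["qa_check"])
```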

This multi-agent architecture introduces coordination complexity absent from simpler single-agent designs. Sub-agents must communicate intermediate results, signal completion or failure, and handle resource contention when several agents need the same file at once. The orchestrator presumably relies on scheduling logic that determines execution order, parallelization opportunities, and failure recovery. When sub-agents encounter errors, the orchestrator must decide whether to retry the operation, escalate to human review, or abort the workflow to prevent cascading failures.
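The retry, escalate, or abort decision can be expressed as a small policy function. This is a hypothetical sketch: the two exception classes and the backoff values are illustrative choices, not Cowork internals. Transient failures are retried with exponential backoff, exhausted retries escalate to human review, and any other exception propagates so the caller can abort the workflow before errors cascade.

```python
import time

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""

class NeedsReview(Exception):
    """Hypothetical signal that a human should decide how to proceed."""

def run_subtask(action, retries: int = 2, backoff: float = 0.5, sleep=time.sleep):
    """Retry transient failures, escalate when retries run out, let other errors abort."""
    for attempt in range(retries + 1):
        try:
            return action()
        except TransientError as exc:
            if attempt == retries:
                raise NeedsReview(f"gave up after {retries + 1} attempts: {exc}") from exc
            sleep(backoff * 2 ** attempt)  # waits 0.5s, 1s, 2s, ... between attempts
```

Passing a stub for sleep, as the parameter allows, keeps the policy testable without real delays.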

The skills library extends Claude’s baseline capabilities with specialized modules for common output formats. Document creation skills encode best practices for professional formatting, consistent styling, and structural conventions. Presentation skills address slideshow narrative, visual hierarchy, and the distribution of content across slides. Spreadsheet skills generate functional formulas, apply data validation rules, and implement conditional formatting. These pre-built skills reduce the prompt engineering burden on users and are intended to yield better output than a general-purpose model would produce without domain-specific guidance.

API & SDK Integrations

Cowork integrates with Claude’s existing connector ecosystem, enabling access to external information sources and services. Connectors act as authenticated gateways linking Claude to databases, APIs, cloud storage, and web services. When users configure connectors—for example, authorizing access to company databases or project management platforms—Cowork can query these sources during task execution without requiring separate authentication workflows per operation.

The Model Context Protocol, Anthropic’s open standard for AI-tool integration reaching one hundred million monthly downloads, provides the technical foundation for connector functionality. MCP enables bidirectional communication between Claude and external systems, supporting both information retrieval and action execution. A project management connector might allow Cowork to read task lists, create new issues, update statuses, and retrieve project documentation—capabilities transforming Cowork from isolated file processor to integrated workflow orchestrator.
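Under the hood, MCP messages are JSON-RPC 2.0, and a client invokes a server’s tool with a tools/call request. The sketch below builds such a request and reads the text content of a response. It omits the transport (stdio or HTTP) and the initialization handshake that precede tool calls, and the create_issue tool and its arguments are hypothetical examples of what a project management server might expose.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session

def tools_call(name: str, arguments: dict) -> str:
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

def result_text(response: str) -> str:
    """Extract text content from a tools/call response, raising on protocol or tool errors."""
    msg = json.loads(response)
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "JSON-RPC error"))
    result = msg["result"]
    text = "\n".join(c["text"] for c in result.get("content", []) if c.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(text or "tool reported an error")
    return text
```

For example, tools_call("create_issue", {"title": "Draft Q1 report"}) produces a request whose params name the tool and carry its arguments; the server’s reply is then passed to result_text.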

However, notable limitations constrain current integration capabilities. Google Workspace (formerly G Suite) connectors are not supported in Cowork’s initial release, preventing direct access to Google Drive, Gmail, Calendar, and Docs even though these services are core productivity infrastructure for millions of users. This gap likely reflects technical or partnership constraints that must be resolved before deep Google ecosystem integration is possible. Users who need Google Workspace data must rely on workarounds such as local file exports or manual data transfers, introducing friction absent from fully integrated workflows.

Claude in Chrome integration extends Cowork’s reach beyond local file systems to web-based workflows. When users run Claude’s browser extension alongside Cowork, tasks can incorporate real-time web research, data scraping, form filling, and web service interaction. An example workflow might instruct Cowork to research competitor pricing, compile findings into structured spreadsheets, then upload results to shared cloud storage—combining browser automation, data processing, and file generation into unified multi-step operations.

The technical implementation details regarding API rate limits, concurrency controls, and resource allocation policies remain largely undocumented in public materials. Users presumably share Max plan allowances across all Claude usage including standard conversations, Claude Code sessions, and Cowork tasks, though specific accounting mechanisms determining relative consumption remain opaque. This opacity complicates capacity planning for organizations attempting to predict usage patterns and associated costs.

Scalability & Reliability Data

Formal scalability specifications and reliability guarantees remain unpublished consistent with research preview status. Anthropic explicitly positions Cowork as experimental technology subject to rapid iteration, bugs, and breaking changes as development progresses. Users should expect operational instability, incomplete features, and workflow interruptions characteristic of early-stage software rather than production-grade reliability.

Session persistence limitations create reliability constraints for long-running workflows. Because Cowork requires continuous Claude Desktop application operation, system instability, application crashes, or user error terminating the app abandons in-progress work. Users executing multi-hour workflows must monitor sessions actively, accepting interruption risks that production systems would mitigate through server-side persistence and automatic resumption capabilities.

The absence of cross-device synchronization means workflows started on one machine cannot resume on another, limiting flexibility for professionals who work across multiple devices. A user who begins a task on an office computer cannot continue it on a home laptop without manually transferring state, a friction point that modern cloud applications typically eliminate through synchronization. Anthropic’s roadmap includes cross-device sync, though implementation timelines remain unspecified.

Resource consumption patterns during intensive workflows remain largely uncharacterized in public documentation. Users processing large file volumes, generating extensive outputs, or executing complex multi-step operations may encounter memory limitations, performance degradation, or failure modes stemming from resource exhaustion. The sandboxed execution environment presumably imposes memory and CPU constraints preventing runaway processes from destabilizing host systems, though specific limits warrant clarification for capacity planning purposes.

Error handling sophistication determines practical reliability during actual usage. Mature agent systems gracefully handle partial failures, retry transient errors, provide actionable diagnostic information, and maintain system consistency despite component failures. Cowork’s maturity in these dimensions remains uncertain given limited operational history. Users should anticipate cryptic error messages, unexpected workflow terminations, and edge cases requiring manual intervention—typical characteristics of research-grade software prioritizing capability exploration over hardened reliability.

4. Trust & Governance

Security Certifications

Cowork does not advertise specific security certifications in available public documentation. It presumably inherits much of its security posture from Anthropic’s broader Claude platform, including Claude Enterprise, which implements compliance frameworks addressing enterprise security requirements. Claude Enterprise offerings include SOC 2 Type II compliance, providing independent attestation of security controls across availability, confidentiality, and processing integrity domains. Organizations requiring vendor compliance validation can reference Claude Enterprise certifications as proxies for underlying infrastructure security, though Cowork-specific audit scope remains unclear.

The Compliance API introduced for Claude Enterprise and Team plans in August 2025 provides programmatic access to usage data and customer content, enabling organizations to build continuous monitoring systems, automated policy enforcement, and comprehensive audit trails. While Cowork’s integration with Compliance API remains undocumented, the architectural relationship between products suggests potential for extending enterprise controls to agentic workflows once Cowork transitions from research preview to production offerings.

HIPAA compliance announcements for Claude Enterprise in January 2026 signal Anthropic’s commitment to regulated industry requirements. Healthcare and life sciences organizations can now deploy Claude for use cases covered by a Business Associate Agreement (BAA), expanding addressable markets into highly regulated domains. However, Cowork’s current research preview status and Max-tier exclusivity likely preclude HIPAA-compliant deployments until it is integrated into enterprise plans.

The sandboxed execution architecture provides foundational security isolation limiting blast radius from compromised workflows. Even if prompt injection attacks succeed in manipulating Claude’s behavior, sandbox boundaries restrict access to designated folders rather than entire filesystems. This containment approach reduces but does not eliminate risks: sensitive files within approved folders remain vulnerable, and sophisticated attacks exploiting API access channels can circumvent local restrictions.

Data Privacy Measures

Anthropic’s general data handling practices apply to Cowork operations, with user-generated content including files, instructions, and outputs flowing through company infrastructure for processing. The company’s terms of service define data usage rights, retention policies, and privacy protections governing how Anthropic handles customer information. Users should review these policies carefully to understand implications for confidential or sensitive data processing through Cowork workflows.

The local file access model introduces unique privacy considerations compared to purely cloud-based services. Because Cowork directly manipulates files on user devices rather than requiring uploads to external servers, data theoretically remains more private than alternatives requiring cloud transfer. However, Claude’s processing still involves Anthropic’s inference infrastructure, meaning file contents traverse network connections and potentially touch multiple system components during execution. Users processing highly sensitive information should verify encryption-in-transit and data residency policies ensuring adequate protections.

Anthropic explicitly warns users against granting Cowork access to folders containing sensitive or confidential information during research preview phases. This guidance acknowledges security immaturity and potential for unintended data exposure through bugs, vulnerabilities, or misconfigurations. Responsible deployment practices mandate segregating production data from experimental tool access, accepting that research previews prioritize capability exploration over hardened data protection.

The absence of memory across Cowork sessions provides both privacy benefits and limitations. Because Claude doesn’t retain context between sessions, previously processed files and instructions don’t influence future behavior—preventing unintended information leakage across temporally separated workflows. However, this statelessness also means users must repeatedly provide context and permissions, reducing convenience while improving privacy boundaries.

Prompt injection vulnerabilities identified by PromptArmor create data exfiltration risks where malicious files trigger Claude to upload sensitive information to attacker-controlled accounts. While Anthropic implements defenses against such attacks, the company acknowledges agent safety remains an active development area without claims of complete protection. Users processing files from untrusted sources should assume prompt injection risks and implement corresponding mitigations including thorough file inspection before Cowork processing.

Regulatory Compliance Details

Specific regulatory compliance postures for Cowork including GDPR, CCPA, and industry-specific frameworks remain largely undocumented in accessible materials. However, Anthropic’s broader enterprise offerings address regulatory requirements through various mechanisms. The Compliance API provides audit trails and usage monitoring supporting GDPR accountability obligations, data retention controls enable adherence to minimization principles, and administrative controls facilitate user consent management and data subject rights fulfillment.

Cross-border data transfer considerations affect users processing information subject to geographic restrictions. Anthropic’s infrastructure likely replicates data across regions for performance and resilience, potentially moving European user data through US systems or vice versa. Organizations subject to GDPR or comparable frameworks should verify data residency options, transfer mechanisms like Standard Contractual Clauses, and compliance with evolving privacy regulations.

The research preview designation likely means Cowork lacks the compliance documentation that enterprise procurement processes require. Organizations with strict vendor assessment requirements may defer adoption until a production release arrives with compliance documentation, certifications, and contractual commitments. This cautious approach gives up early capability access in exchange for reduced regulatory risk while the product is immature.

Financial services, healthcare, and government sectors face heightened regulatory scrutiny and demand comprehensive compliance evidence before approving vendors. Cowork’s current positioning as an experimental capability for individual Max subscribers, rather than for organizational deployments, reflects the reality that research previews rarely meet enterprise compliance standards. As Cowork matures and expands to Team and Enterprise plans, corresponding compliance investments will likely accompany broader availability.

Industry-specific frameworks including PCI-DSS for payment processing, FERPA for educational records, and defense-specific security requirements represent additional compliance domains Anthropic may address as Cowork applications expand into specialized verticals. The company’s track record implementing enterprise-grade controls for core Claude offerings suggests similar compliance maturity will eventually extend to agentic products, though timelines remain uncertain.

5. Unique Capabilities

Infinite Canvas: Applied Use Case

Cowork’s most distinctive capability is sustained autonomous execution. Once a user initiates a workflow and supplies the necessary context, Claude works through many steps without needing a new prompt at each stage, handling multi-step complexity that would quickly exhaust a turn-by-turn chat. This does not remove usage limits: Cowork tasks draw on the same Max plan allowances as other Claude usage, so a long workflow can still stall when the allowance runs out before the quota resets.

This “infinite canvas” manifests practically in workflows requiring iterative refinement across dozens of files. A user organizing years of accumulated photographs might instruct Cowork to analyze thousands of images, extract metadata including dates and locations, identify duplicates, group by themes, create folder hierarchies, and rename files according to consistent conventions. This workflow involves numerous individual operations each requiring processing time and intermediate decisions. Conversational approaches would fragment across multiple sessions with manual coordination between stages. Cowork handles the entire pipeline autonomously, checking in periodically with progress updates but fundamentally operating unsupervised until completion.
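The duplicate-detection step in that pipeline can be approximated deterministically. A minimal sketch, assuming “duplicate” means byte-identical files: hash each file with SHA-256 and group the paths that share a digest. Resized or re-encoded copies of the same photo will not match; catching those would require perceptual hashing, which this sketch does not attempt. The function names are illustrative and not part of any Cowork interface.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so large photos are not loaded at once."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def group_by_digest(digests: dict[str, str]) -> list[list[str]]:
    """Given {path: digest}, return sorted groups of paths that share a digest."""
    groups: dict[str, list[str]] = defaultdict(list)
    for path, digest in digests.items():
        groups[digest].append(path)
    return sorted(sorted(g) for g in groups.values() if len(g) > 1)


def find_exact_duplicates(folder: Path) -> list[list[str]]:
    """Hash every file under `folder` and report groups of byte-identical files."""
    digests = {str(p): file_digest(p) for p in folder.rglob("*") if p.is_file()}
    return group_by_digest(digests)
```

Returning groups for review rather than deleting anything keeps a human in the loop before a destructive step.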

Long-running execution particularly benefits workflows with external dependencies that introduce latency. Web research involving multiple searches, page loads, content extraction, and synthesis naturally accumulates wait time as external services respond. Cowork absorbs these delays without requiring user attention, moving on to dependent tasks as their prerequisites complete. A user can start a research assignment in the morning and return in the afternoon to a comprehensive report instead of shepherding each step manually.

However, the requirement that Claude Desktop remain open throughout execution undercuts the “infinite” framing. Users cannot quit the app, restart their machine, or switch devices without ending the session. “Infinite” therefore describes the logical scope of a workflow more accurately than its practical runtime, which remains bounded by system uptime and application stability. Future implementations with server-side persistence could remove this limitation, enabling true background execution that survives client disruptions.

Multi-Agent Coordination: Research References

Cowork’s sub-agent architecture coordinates parallel execution of independent workflow components. The approach echoes distributed systems techniques such as task scheduling and dependency graph resolution, and presumably relies on some mechanism for keeping concurrent operations consistent, though public materials do not describe these internals in detail. When users request complex outcomes involving multiple distinct operations, Cowork’s orchestrator analyzes dependencies, identifies parallelization opportunities, spawns sub-agents, and coordinates their execution.

Academic research in multi-agent systems informs these architectural choices. Work on agent communication languages, contract net protocols for task allocation, and hierarchical planning systems provides theoretical foundations for practical implementations. Anthropic’s specific approach likely incorporates recent advances in large language model-based planning where agents generate structured plans including subtask definitions, dependency specifications, and success criteria before execution begins.

The sub-agent model particularly benefits workflows with natural decomposition into specialized functions. Document generation workflows might separate research subtasks from writing, with research agents gathering source materials while writing agents draft initial content, then synthesis agents combine outputs into coherent narratives. This division of labor mirrors human team structures while compressing timelines through parallel execution impossible in sequential approaches.
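The dependency analysis described above can be illustrated with Python’s standard `graphlib` module. This is a sketch under stated assumptions, not Cowork’s orchestrator: the subtask names are hypothetical, modeled on the research, drafting, and synthesis example, and each “wave” is simply the set of subtasks whose prerequisites are complete and which could therefore run as parallel sub-agents.

```python
from graphlib import TopologicalSorter


def parallel_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into waves; every task in a wave depends only on earlier waves.

    `dependencies` maps each subtask to the set of subtasks it must wait for.
    Raises graphlib.CycleError if the plan contains a circular dependency.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose prerequisites are done
        waves.append(ready)
        sorter.done(*ready)                 # mark the whole wave complete
    return waves


# Hypothetical plan modeled on the research / draft / synthesis example.
plan = {
    "gather_sources": set(),
    "draft_outline": set(),
    "synthesize": {"gather_sources", "draft_outline"},
    "final_review": {"synthesize"},
}
print(parallel_waves(plan))
# [['draft_outline', 'gather_sources'], ['synthesize'], ['final_review']]
```

A real orchestrator would more likely dispatch each task the moment its own prerequisites finish instead of waiting for a whole wave; waves simply keep the sketch easy to read.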

However, multi-agent coordination introduces failure modes absent from simpler architectures. Sub-agents may produce outputs incompatible with downstream consumers’ expectations, requiring retry loops or manual intervention. Coordination overhead consumes tokens and processing time even when workflows ultimately complete successfully. Debugging failures proves more complex when errors originate from sub-agent interactions rather than single linear execution paths.

Research informing Cowork’s architecture likely includes recent work on autonomous agents: ReAct, which interleaves reasoning traces with actions so a model can adjust its plan as results arrive; tree-of-thoughts methods, which explore and evaluate multiple reasoning paths before committing to one; and reflection techniques, in which agents critique and revise their own outputs. These techniques help turn general-purpose language models into more dependable autonomous operators capable of sustained goal-directed behavior.

Model Portfolio: Uptime & SLA Figures

Cowork runs on Anthropic’s Claude model family, presumably using its most capable current models for complex reasoning and planning. Underlying model availability and performance directly affect Cowork’s reliability: when Anthropic’s inference infrastructure experiences degradation or capacity constraints, Cowork workflows suffer correspondingly, through increased latency, elevated error rates, or complete unavailability.

Anthropic maintains high availability targets for core Claude services, typically achieving uptime exceeding ninety-nine percent across monthly measurement windows. This reliability enables production deployments by enterprise customers requiring dependable service access. However, specific SLA commitments for Cowork remain unpublished given research preview status prioritizing rapid iteration over contractual guarantees. Users should anticipate operational instability characteristic of experimental software rather than production-grade reliability.
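To put an uptime percentage in concrete terms, the sketch below converts it into the downtime it still permits over a 30-day month. The percentages are illustrative inputs only; no Cowork-specific SLA has been published.

```python
def allowed_downtime_minutes(uptime_pct: float, days: int = 30) -> float:
    """Minutes of downtime permitted by `uptime_pct` over a window of `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_pct) / 100


for pct in (99.0, 99.9, 99.99):
    print(f"{pct}% uptime -> {allowed_downtime_minutes(pct):.1f} minutes/month")
```

At ninety-nine percent, a 30-day month still allows 432 minutes, or about 7.2 hours, of downtime, enough to interrupt several long-running Cowork sessions.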

The Max plan pricing positioning suggests Cowork targets serious adopters willing to invest substantially in cutting-edge capabilities rather than casual users requiring strict SLA adherence. At one hundred to two hundred dollars monthly, Max subscriptions implicitly purchase early access and influence over product direction rather than guaranteed uptime or support responsiveness. This positioning aligns with research preview objectives gathering feedback from sophisticated users comfortable with instability in exchange for frontier capability access.

Token consumption patterns introduce operational constraints affecting practical availability. Users exhausting Max plan allowances mid-workflow effectively experience service unavailability until quota resets occur at five-hour intervals. Unlike hard outages stemming from infrastructure failures, quota exhaustion represents expected system behavior enforcing usage limits. However, the end-user experience remains similar: inability to complete workflows due to service constraints beyond individual control.

Future production releases will likely introduce formal SLA commitments as Cowork transitions from research preview to general availability. Enterprise customers requiring contractual reliability guarantees, defined support response times, and financial penalties for SLA violations represent substantial market opportunities Anthropic will address through maturing operational practices. The company’s enterprise Claude offerings already include such commitments, suggesting similar frameworks will eventually extend to agentic products.

Interactive Tiles: User Satisfaction Data

User satisfaction metrics specific to Cowork remain largely unavailable given the product’s recent launch and limited user base. Qualitative feedback from early adopters, however, reveals enthusiasm tempered by pragmatic concerns. Users appreciate the automation potential, particularly for tedious tasks where Cowork’s autonomous operation eliminates hours of manual effort. File organization, expense reporting, and document generation emerge as high-satisfaction use cases that deliver clear value through measurable time savings.

Conversely, users express frustration with macOS exclusivity, the lack of a free trial, and steep pricing. Windows and Linux professionals cannot access Cowork regardless of willingness to pay and feel excluded from capabilities they consider compelling. Without a trial period, prospective users must commit to at least one hundred dollars per month before any hands-on validation, a source of adoption friction more typical of enterprise software than of productivity and developer tools, which traditionally offer generous freemium tiers.

Security-conscious users report anxiety about granting file system access to autonomous agents, particularly after prompt injection vulnerability disclosures. While Anthropic provides transparency about risks and implements defenses, the fundamental tension between autonomy and control creates discomfort. Users must trust that Claude will interpret instructions correctly, avoid unintended destructive operations, and resist malicious manipulation through adversarial inputs—trust requirements exceeding typical software relationships.

Performance satisfaction varies based on workflow complexity and user expectations. Simple tasks completing within minutes receive positive reception, while longer workflows introduce uncertainty about progress and eventual success. The absence of granular progress indicators beyond periodic high-level updates leaves users uncertain whether operations proceed normally or have stalled indefinitely. This opacity creates anxiety during expensive workflows where failures waste both time and Max plan quota.

Comparative satisfaction assessments position Cowork favorably against manual alternatives while trailing specialized tools for particular domains. Users report Cowork excels at tasks lacking dedicated automation solutions, filling gaps between point solutions and custom scripting. However, for workflows where mature specialized tools exist—advanced photo organization software, professional expense management platforms, comprehensive research databases—Cowork’s generalist approach may not match domain-specific capabilities despite providing adequate functionality.

6. Adoption Pathways

Integration Workflow

Cowork adoption begins with a Claude Max subscription, so users on the free, Pro, or other plans must upgrade to the Max 5x or Max 20x tier. This financial commitment is the primary adoption barrier, filtering for serious users while excluding casual experimenters. Upgrades are handled through Anthropic’s web portal, with prorated billing for users moving up mid-cycle from lower tiers.

Following subscription activation, users must install Claude Desktop for macOS or update it to a version that supports Cowork. The application integrates conversational Claude and agentic Cowork modes through a mode selector interface. Users switch between “Chat” and “Tasks” tabs depending on the interaction they want: Chat for standard conversational workflows, Tasks for autonomous operations.

Initial Cowork usage requires designating folders where Claude gains read-write access. The permission model implements explicit authorization where users navigate filesystem hierarchies selecting specific directories for Cowork operation. This granular control enables isolation strategies where users create dedicated working directories separate from sensitive personal or professional files, reducing exposure risks during experimental workflows.

Task initiation follows natural language instruction patterns familiar from conversational Claude usage. Users describe desired outcomes through prompts including success criteria, constraints, and contextual information guiding Claude’s planning. For example: “Organize my downloads folder by file type, create appropriately named subdirectories, and move all files into these categories. Delete obvious duplicates but ask before removing anything ambiguous.” This instruction style balances specificity with flexibility, providing enough direction for autonomous operation while permitting Claude discretion within defined boundaries.

Claude’s initial response outlines proposed approaches, anticipated steps, and potential issues warranting user awareness. Users review these plans, provide clarifications or adjustments, then authorize execution. This approval step prevents unintended consequences from misunderstood instructions while preserving workflow efficiency through upfront alignment rather than constant micro-approval requests.
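The plan, review, then execute pattern maps naturally onto a dry run. The sketch below, assuming a hypothetical extension-to-folder mapping, computes where each file would go for the “organize by file type” instruction without touching the filesystem; moves are applied only after the user approves the printed plan. It illustrates the pattern and is not how Cowork represents plans internally.

```python
from pathlib import Path

# Hypothetical mapping; any unlisted extension falls into "Other".
CATEGORIES = {
    ".pdf": "Documents", ".docx": "Documents", ".txt": "Documents",
    ".jpg": "Images", ".jpeg": "Images", ".png": "Images",
    ".zip": "Archives", ".dmg": "Installers",
}


def plan_moves(root: Path, filenames: list[str]) -> list[tuple[Path, Path]]:
    """Return (source, destination) pairs; nothing is moved here."""
    plan = []
    for name in filenames:
        category = CATEGORIES.get(Path(name).suffix.lower(), "Other")
        plan.append((root / name, root / category / name))
    return plan


def apply_moves(plan: list[tuple[Path, Path]]) -> None:
    """Execute an approved plan, refusing to overwrite existing files."""
    for src, dst in plan:
        if dst.exists():
            raise FileExistsError(dst)
        dst.parent.mkdir(parents=True, exist_ok=True)
        src.rename(dst)


# Dry run: print the plan for review; call apply_moves only after approval.
for src, dst in plan_moves(Path.home() / "Downloads", ["Report.PDF", "photo.jpg", "notes"]):
    print(f"{src.name} -> {dst.parent.name}/")
```

Separating planning from execution is what makes the approval step meaningful: the user reviews a concrete list of effects rather than a restatement of the instruction.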

Customization Options

Cowork offers limited customization in current implementations, reflecting research preview status prioritizing core functionality over extensive configurability. However, users can tailor experiences through several mechanisms. Prompt engineering represents the primary customization vector: carefully crafted instructions incorporating organizational preferences, stylistic requirements, and operational constraints guide Claude toward outputs matching specific needs.

For document generation workflows, users can provide style guides, template examples, and formatting specifications influencing Claude’s output characteristics. A user requesting marketing materials might include brand voice documentation, competitor examples, and target audience descriptions enabling Claude to generate content aligned with organizational standards. This context-driven customization substitutes for explicit configuration interfaces through comprehensive instruction provision.

Folder organization strategies provide structural customization: users designing thoughtful directory hierarchies for Cowork operation effectively constrain and guide autonomous behaviors. A consultant maintaining separate client folders can limit Cowork’s scope per engagement by granting access only to relevant directories, ensuring work product segregation without explicit project management features.

The skills library enables capability customization through selective skill application. As Anthropic expands available skills beyond the initial document, presentation, and spreadsheet set, users will select skills relevant to particular workflows. A researcher might load academic citation management skills while a marketer activates social media content optimization skills, tailoring Cowork’s capabilities to domain-specific requirements.

Integration with existing Claude connectors provides external customization: users authorizing specific data source connections determine information Claude can access during task execution. A sales professional might connect CRM databases enabling Claude to enrich prospect research with account history, while a developer connects code repositories providing context for technical documentation generation.

However, Cowork currently lacks extensive configuration interfaces typical of mature enterprise software. Users cannot define custom workflows, create reusable task templates, establish guardrails through policy languages, or implement approval chains beyond Claude’s default confirmation requests. These enterprise-grade customization capabilities will likely emerge as Cowork matures and targets organizational deployments requiring governance frameworks.

Onboarding & Support Channels

Anthropic provides onboarding resources through multiple channels reflecting different user sophistication levels. The Claude Help Center hosts comprehensive documentation explaining Cowork concepts, providing usage examples, and addressing common questions. Articles cover getting started procedures, capability descriptions, limitation disclosures, and safety recommendations. This self-service documentation enables independent learning for users preferring written materials over interactive assistance.

Video demonstrations and tutorials published by Anthropic and community creators showcase Cowork capabilities through concrete examples. Watching Claude autonomously organize files or generate reports provides intuitive understanding difficult to convey through text alone. These visual resources particularly benefit users transitioning from conversational AI paradigms to agentic workflows where autonomous operation fundamentally differs from prompt-response patterns.

Community resources including Reddit discussions, Discord servers, and social media channels enable peer learning and troubleshooting. Early adopters share usage patterns, prompt templates, workaround strategies, and cautionary tales helping newcomers avoid pitfalls. This grassroots knowledge base supplements official documentation with practical insights from real-world deployment experiences.

However, Cowork’s Max-tier exclusivity limits community size during early phases, reducing available peer support compared to broadly accessible products. The relatively small user base means fewer individuals encountering and documenting edge cases, uncommon workflows, or advanced techniques. This knowledge scarcity increases reliance on official Anthropic resources during problem resolution.

Direct support availability for Cowork users remains somewhat unclear in public materials. Max subscribers presumably receive priority support compared to lower-tier users, though specific response time commitments, support channel availability, and escalation procedures warrant clarification. Users experiencing blocking issues during critical workflows require rapid assistance unavailable through asynchronous community channels.

The research preview designation sets expectations for limited support: experimental software typically provides best-effort assistance rather than guaranteed resolution timeframes or comprehensive troubleshooting. Users should anticipate some issues remaining unresolved pending future product iterations rather than expecting immediate fixes for all problems encountered.

7. Use Case Portfolio

Enterprise Implementations

Cowork’s current Max-tier exclusivity limits formal enterprise deployments during research preview phases. However, anticipated enterprise use cases emerge from capability analysis and comparable product experiences. Knowledge management represents a compelling application: organizations accumulate vast unstructured information across file shares, emails, and collaboration platforms. Cowork could systematically organize these information repositories through metadata extraction, intelligent classification, duplicate detection, and hierarchical structuring—workflows currently requiring extensive manual curation or specialized software implementations.

Compliance and audit preparation workflows benefit from Cowork’s document processing capabilities. Regulated organizations must produce evidence packages demonstrating policy adherence, risk mitigation, and control effectiveness during audits. Cowork could compile scattered evidence across systems, extract relevant information, generate formatted reports, and structure materials according to auditor requirements—compressing preparation timelines while improving completeness through systematic rather than ad-hoc collection.

Research and competitive intelligence operations involve gathering information from diverse sources, extracting key insights, identifying patterns, and synthesizing findings into actionable recommendations. Cowork’s ability to process multiple documents simultaneously, integrate external connector data, and generate structured analyses positions it as a research assistant accelerating intelligence cycles. Analysts could delegate information gathering and preliminary synthesis to Cowork, reserving human effort for strategic interpretation and decision-making.

Marketing and sales enablement materials require continuous updates reflecting product evolution, competitive landscape changes, and customer feedback. Cowork could maintain living repositories of enablement content, automatically updating materials based on product release notes, competitive intelligence updates, and sales team feedback. This continuous maintenance reduces staleness typical of manually managed content while ensuring consistency across distributed materials.

However, enterprise adoption barriers remain substantial during research preview phases. The absence of Team and Enterprise plan integration prevents organizational procurement and deployment. IT governance requirements typically prohibit individual employees subscribing to premium services through personal accounts, especially for capabilities accessing company files. Security and compliance validation processes require formal vendor assessments incompatible with experimental product status. These obstacles suggest enterprise traction will emerge primarily after Cowork transitions from research preview to production offerings accompanied by appropriate commercial and compliance frameworks.

Academic & Research Deployments

Academic researchers face chronic challenges managing literature review workflows, data processing pipelines, and manuscript preparation tasks. Cowork’s document processing capabilities address several pain points. Literature reviews require collecting dozens to hundreds of papers, extracting key findings, identifying thematic patterns, and synthesizing insights into coherent narratives. Cowork could systematically process PDF libraries, extract abstracts and conclusions, identify methodological approaches, and draft preliminary literature review sections—accelerating early research phases while maintaining human oversight for intellectual synthesis.

Qualitative data analysis involves coding interview transcripts, field notes, and observation records according to thematic frameworks. Cowork could perform initial coding passes identifying potential themes, flagging relevant passages, and organizing materials by research questions. While human researchers must validate and refine these preliminary analyses, the mechanical work of systematically reviewing materials compresses substantially through AI assistance.

Grant proposal preparation demands integrating project descriptions, budgets, institutional boilerplate, biographical sketches, and literature reviews into prescribed formats varying across funding agencies. Cowork could compile these components from researcher-maintained libraries, adapt formatting to specific requirements, ensure consistency across sections, and generate preliminary drafts meeting submission standards—reducing proposal preparation time while enabling researchers to focus on intellectual content rather than administrative formatting.

Dissertation and thesis formatting represents a significant burden for graduate students mastering specialized style guides, citation formats, and institutional requirements. Cowork could systematically apply formatting rules, validate citation completeness, ensure figure and table numbering consistency, and identify formatting deviations requiring correction—mechanical tasks consuming disproportionate time relative to intellectual contribution.

However, academic adoption faces barriers beyond technical capability. University procurement processes typically prohibit individual faculty subscriptions to expensive commercial services without institutional contracts. Research budgets allocated to consumables, travel, and participant compensation rarely accommodate hundred-dollar monthly software subscriptions. Privacy concerns about processing unpublished research through third-party services create intellectual property and competitive risks. These constraints suggest academic adoption will require institutional licensing agreements, educational pricing tiers, or open-source alternatives addressing academic community norms.

ROI Assessments

Quantifying Cowork’s return on investment requires evaluating both direct cost savings and productivity multipliers. The most immediate financial impact stems from time savings: workflows requiring manual effort measured in hours compressing to minutes of autonomous execution. A consultant spending five hours weekly organizing project files and client deliverables could reclaim approximately two hundred sixty hours annually through Cowork automation. At loaded hourly rates of one hundred fifty dollars for professional services, this time savings generates thirty-nine thousand dollars in annual value—substantially exceeding Max plan costs of twelve hundred to twenty-four hundred dollars annually.

However, this calculation assumes perfect substitutability between manual effort and Cowork automation, which rarely holds in practice. Cowork outputs typically require human review, refinement, and correction, reducing net time savings below theoretical maximums. Additionally, users must invest time learning Cowork capabilities, crafting effective prompts, and establishing organizational workflows—upfront costs that defer net positive returns until productivity curves exceed learning investments.
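The arithmetic behind the consultant example, with the review overhead from the previous paragraph folded in, fits in a few lines. The `realization` factor (the share of nominal hours actually saved after review and correction) is a hypothetical parameter introduced for illustration; the report gives no figure for it, and the 0.5 value below is arbitrary.

```python
def annual_time_value(hours_per_week: float, hourly_rate: float,
                      realization: float = 1.0, weeks: int = 52) -> float:
    """Dollar value of reclaimed time per year.

    realization: hypothetical fraction of nominal savings that survives
    human review and correction (1.0 = perfect substitutability).
    """
    return hours_per_week * weeks * hourly_rate * realization


MAX_ANNUAL_COST = {"Max 5x": 100 * 12, "Max 20x": 200 * 12}

for realization in (1.0, 0.5):
    value = annual_time_value(5, 150, realization)
    print(f"realization {realization:.0%}: ${value:,.0f} vs "
          f"${MAX_ANNUAL_COST['Max 5x']:,}-${MAX_ANNUAL_COST['Max 20x']:,} per year")
```

Even if only half the nominal hours are truly saved, the $19,500 result still exceeds the Max 20x cost by a wide margin, which is why review overhead erodes rather than erases the case.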

Quality improvements represent additional value difficult to quantify financially but significant for organizational outcomes. Systematically organized information repositories improve team productivity through reduced search time and better knowledge accessibility. Consistently formatted deliverables enhance professional reputation and client satisfaction. Comprehensive analyses drawing from broader source materials improve decision quality and strategic outcomes. These qualitative benefits accumulate over time but resist precise financial measurement.

Risk mitigation through reduced human error provides defensive value justifying investment beyond offensive productivity gains. Manual data entry introduces transcription errors, file organization inconsistencies emerge from personal taxonomies, and document formatting deviations violate style guidelines. Cowork’s systematic approaches reduce these error categories, preventing downstream costs from mistakes including financial reconciliation failures, compliance violations, and customer dissatisfaction.

Total cost of ownership extends beyond subscription fees to operational overhead. Users must maintain Max subscriptions continuously rather than purchasing task-based capacity, potentially overprovisioning during low-utilization periods. The macOS requirement may force users on other platforms to buy a Mac. Learning curves consume productive time during adoption. Intensive users whose token consumption outstrips Max 5x allowances may need the Max 20x tier, doubling annual costs to twenty-four hundred dollars.

Break-even analysis suggests that knowledge workers whose time is worth one hundred dollars or more per hour recoup the subscription by saving roughly twelve (Max 5x) to twenty-four (Max 20x) hours per year, a threshold achievable for users with clear workflow automation opportunities. Users with few applicable use cases, lower billable rates, or a strong preference for handling tasks manually may struggle to achieve returns that justify the subscription.
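The break-even thresholds follow from dividing the annual subscription cost $C$ by the hourly value of the user’s time $r$. This simple model ignores the learning time and review overhead discussed above, so it gives a lower bound on the hours that must be saved:

$$
h^{*} = \frac{C}{r}, \qquad
h^{*}_{\text{Max 5x}} = \frac{\$1{,}200}{\$100/\text{h}} = 12\ \text{h}, \qquad
h^{*}_{\text{Max 20x}} = \frac{\$2{,}400}{\$100/\text{h}} = 24\ \text{h}
$$

Spread over a year, that is one to two hours saved per month. At the $150 hourly rate used in the consultant example, the thresholds fall to 8 and 16 hours.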

8. Balanced Analysis

Strengths with Evidential Support

Cowork’s primary competitive advantage is lowering the barriers to autonomous AI for non-developer audiences. While Claude Code requires comfort with the terminal and familiarity with programming, Cowork operates through a familiar visual interface and natural language instructions. This accessibility democratizes agentic capabilities, expanding the addressable market from developers to knowledge workers across professional domains. Early anecdotal reports suggest interest from consultants, marketers, researchers, and administrators, segments underserved by developer-focused automation tools.

The architectural foundation sharing Claude Code’s proven agent SDK provides technical credibility and rapid capability evolution. Claude Code’s trajectory from research preview to billion-dollar product in six months validates underlying technology while demonstrating Anthropic’s capacity for iterative improvement based on user feedback. Cowork benefits from these investments without duplicating development costs, accelerating maturity compared to greenfield agentic platforms.

Sandboxed execution architecture balances autonomy with containment, addressing security concerns that prevent many organizations from adopting unrestricted autonomous agents. By limiting file access to designated folders, Cowork reduces blast radius from prompt injection attacks, configuration errors, or unintended destructive operations. This security model enables cautious early adoption by risk-averse users who reject tools requiring unrestricted system access.

The skills library provides differentiation beyond generic language model capabilities. Document, presentation, and spreadsheet generation skills encode domain expertise about professional formatting, structural conventions, and best practices. These specialized capabilities produce outputs exceeding what baseline models might generate from zero-shot prompting, delivering value through curated expertise rather than merely raw inference capability.

Integration with Claude’s connector ecosystem and MCP standard positions Cowork as an extensible platform rather than isolated tool. Users can incorporate organizational data sources, third-party services, and custom integrations through standardized connector frameworks. This extensibility future-proofs investments by enabling capability expansion without architectural limitations, accommodating evolving requirements through connector additions rather than platform replacements.

Anthropic’s transparent approach to limitations and risks builds trust through intellectual honesty. The company explicitly acknowledges prompt injection vulnerabilities, potential for destructive operations, and research preview instability rather than overstating capabilities or minimizing concerns. This transparency enables informed adoption decisions and sets realistic expectations reducing disappointment from unmet assumptions.

Limitations & Mitigation Strategies

Cowork’s macOS exclusivity is the most significant adoption barrier, excluding Windows and Linux professionals regardless of willingness to pay. The limitation stems from architectural dependence on macOS virtualization frameworks that provide the sandboxed execution environment. Anthropic has promised cross-platform expansion without specific timelines, leaving non-Mac users unable to evaluate the product in the meantime. Workarounds such as a secondary Mac add cost and complexity that reduce their practical viability, and a macOS virtual machine is not an option on non-Apple hardware, since Apple’s license permits macOS virtualization only on Apple-branded computers.

Pricing requirements create accessibility challenges for budget-constrained users and small organizations. At one hundred to two hundred dollars monthly, Max plans exceed typical SaaS tool budgets for freelancers, students, and small businesses operating on thin margins. The absence of free trials compounds this barrier by forcing financial commitment before hands-on validation, so prospective users must rely on secondhand accounts, video demonstrations, and documentation rather than direct experience. Mitigation involves thoroughly evaluating use case applicability before subscribing, piloting a single subscription with the team member whose workflows fit best before expanding, or deferring adoption until Professional tier access becomes available.

Security vulnerabilities identified by independent researchers create legitimate data exfiltration and integrity risks. While Anthropic implements defenses, the company acknowledges that agent safety remains an active development area without complete protection guarantees. Prompt injection, where instructions hidden in processed files or web content redirect the agent, represents a fundamental challenge for agentic systems that process untrusted inputs. Mitigation strategies include restricting Cowork access to non-sensitive folders, avoiding processing files from untrusted sources, carefully reviewing proposed actions before approval, and maintaining offline backups that protect against unintended modifications or deletions.
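The backup mitigation can be made concrete with a short script: copy the folder before granting Cowork access, record a SHA-256 manifest, and compare it with a fresh manifest after the session to spot unexpected changes. This is a minimal sketch under stated assumptions: a plain local folder, a backup destination that does not exist yet (`shutil.copytree` refuses to overwrite an existing directory), and hypothetical helper names (`snapshot`, `build_manifest`, `diff_manifest`) that are not part of Cowork or any Anthropic tool.

```python
import hashlib
import shutil
from pathlib import Path

# Hypothetical helpers for illustration; not a Cowork or Anthropic API.


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its digest."""
    return {
        str(p.relative_to(root)): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def snapshot(root: Path, backup_dir: Path) -> dict[str, str]:
    """Copy the folder to backup_dir (which must not exist) and return its manifest."""
    shutil.copytree(root, backup_dir)
    return build_manifest(backup_dir)


def diff_manifest(before: dict[str, str], after: dict[str, str]) -> dict[str, list[str]]:
    """Classify files as added, removed, or modified between two manifests."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }
```

After a session, `diff_manifest(before, build_manifest(folder))` lists what changed, and the untouched copy in the backup directory allows restoring anything removed. To keep the copy offline in the sense the mitigation intends, write it to an external drive and disconnect the drive before starting the session.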

Because sessions run only while the desktop application stays open, workflow flexibility is restricted and long-running tasks remain vulnerable to interruption from application crashes, system restarts, or user error. Without server-side job persistence, a failure abandons incomplete work with no way to resume it. Mitigation involves chunking large workflows into smaller incremental tasks so completed progress is preserved, actively monitoring long-running sessions for issues, and keeping the application open and the system stable to minimize interruptions.
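The chunking mitigation can be planned outside Cowork: split a large file set into fixed-size batches, hand each batch to Cowork as a separate task, and record completed batches in a checkpoint file so an interrupted session resumes at the next unfinished batch rather than from scratch. This is a minimal sketch; the batch size, the JSON checkpoint file, and the one-task-per-batch convention are assumptions for illustration, not a Cowork feature.

```python
import json
from pathlib import Path
from typing import Optional

# Hypothetical planning helpers; Cowork itself has no checkpoint API.


def make_batches(files: list[str], size: int) -> list[list[str]]:
    """Split a sorted file list into consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("batch size must be positive")
    ordered = sorted(files)
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def load_done(checkpoint: Path) -> set[int]:
    """Return indices of batches already marked complete (empty if no checkpoint yet)."""
    if not checkpoint.exists():
        return set()
    return set(json.loads(checkpoint.read_text()))


def mark_done(checkpoint: Path, index: int) -> None:
    """Record a finished batch index in the checkpoint file."""
    done = load_done(checkpoint)
    done.add(index)
    checkpoint.write_text(json.dumps(sorted(done)))


def next_batch(batches: list[list[str]], checkpoint: Path) -> Optional[tuple[int, list[str]]]:
    """Return the first unfinished batch and its index, or None when all are done."""
    done = load_done(checkpoint)
    for i, batch in enumerate(batches):
        if i not in done:
            return i, batch
    return None
```

Keeping the checkpoint outside the folder Cowork can modify avoids the agent accidentally overwriting its own progress record.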

The absence of memory across sessions forces users to repeatedly provide context and permissions for similar workflows. This statelessness increases overhead for iterative tasks requiring multiple refinement cycles: users cannot build on previous work through conversational continuity and must instead reconstruct context in each session. Mitigation involves maintaining reusable prompt templates for common workflows, saving session outputs as reference material for future iterations, and organizing folders so their hierarchy itself encodes project context.
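A reusable prompt template can be as simple as a parameterized string kept alongside the project folder. This minimal sketch uses Python’s standard `string.Template`; the template wording and the placeholder names (`folder`, `goal`, `output`, `constraints`) are hypothetical examples, not a format Cowork requires.

```python
from string import Template

# Hypothetical template for a recurring file-organization task.
ORGANIZE_TEMPLATE = Template(
    "Work only inside the folder '$folder'.\n"
    "Goal: $goal\n"
    "Write results to '$output'.\n"
    "Constraints: $constraints\n"
    "Ask before deleting or renaming any file."
)


def render_prompt(**fields: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return ORGANIZE_TEMPLATE.substitute(**fields)


print(render_prompt(
    folder="Receipts/2026-01",
    goal="Sort receipts into subfolders by vendor",
    output="receipts-summary.xlsx",
    constraints="Keep original filenames",
))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice: a forgotten field fails loudly instead of sending Cowork a prompt with a literal `$placeholder` in it.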

Limited enterprise features, including the unavailability of Team and Enterprise plans, prevent organizational adoption during the research preview. The absence of administrative controls, usage analytics, centralized billing, and compliance features blocks IT procurement and deployment. Mitigation requires monitoring Anthropic’s roadmap for enterprise availability announcements, running pilot deployments through individual Max subscriptions while awaiting official organizational offerings, or selecting alternative solutions with immediate enterprise readiness for time-sensitive requirements.

9. Transparent Pricing

Plan Tiers & Cost Breakdown

Cowork access requires a Claude Max subscription, Anthropic’s premium individual tier. Max comes in two variants distinguished solely by usage allowance rather than features. Max 5x costs one hundred dollars monthly and provides five times the usage capacity of the standard Professional plan, approximately two hundred twenty-five messages per five-hour window. Max 20x costs two hundred dollars monthly and delivers twenty times Professional capacity, approximately nine hundred messages per five-hour window.
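The tier figures can be sanity-checked with simple arithmetic: both message estimates imply the same baseline of about forty-five messages per five-hour window for the 1x plan, and dividing price by usage multiple shows what each tier charges per unit of capacity. This is a minimal sketch; the per-window message counts are the approximations quoted in this report, not guaranteed quotas.

```python
# Approximate figures quoted in this report (assumptions, not official quotas).
TIERS = {
    "Max 5x": {"price_usd": 100, "multiple": 5, "msgs_per_window": 225},
    "Max 20x": {"price_usd": 200, "multiple": 20, "msgs_per_window": 900},
}


def implied_baseline(tier: dict) -> float:
    """Messages per five-hour window implied for the 1x (Professional) plan."""
    return tier["msgs_per_window"] / tier["multiple"]


def price_per_multiple(tier: dict) -> float:
    """Monthly dollars paid per 1x unit of usage capacity."""
    return tier["price_usd"] / tier["multiple"]


for name, tier in TIERS.items():
    print(f"{name}: baseline {implied_baseline(tier):.0f} msgs, "
          f"${price_per_multiple(tier):.0f} per 1x of capacity")
```

Under these figures, Max 5x charges the same twenty dollars per unit of capacity as the twenty-dollar Professional plan, while Max 20x halves that to ten dollars, which is why heavy users get more value per dollar from the higher tier.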

Both Max tiers include identical Cowork feature access: autonomous task execution, folder permissions, sub-agent coordination, skills library, connector integration, and extended context windows. The pricing differential purchases additional usage quota rather than capability unlocks, positioning Max 20x for power users relying on Claude for full-time work while Max 5x serves moderate users with periodic intensive workflows.

Cowork remains unavailable on lower subscription tiers. Professional plan subscribers at twenty dollars monthly cannot access Cowork despite representing Anthropic’s primary individual user segment. Team and Enterprise plans similarly lack Cowork during the research preview, though waitlist registration suggests future availability. Free tier users receive neither Cowork access nor a waitlist option, consistent with its positioning as a premium experimental capability.

The subscription operates on monthly billing, with no annual discount option currently available. Users can cancel anytime, but cancellation takes effect at the end of the current billing cycle rather than immediately: access continues through the paid period, and no prorated refund is issued for its unused portion. Users who want to stop paying should therefore cancel before the next renewal date rather than expect a partial refund.

No free trial enables hands-on evaluation before financial commitment. This is unusual friction in SaaS markets, where trial-driven conversion dominates acquisition strategies. The approach appears designed to filter for serious users willing to invest substantially in cutting-edge capabilities rather than casual experimenters unlikely to provide actionable feedback during the research preview.

Educational and nonprofit pricing discounts remain unannounced. Organizations operating on constrained budgets lack special accommodations despite potentially valuable use cases in academic and mission-driven contexts. This pricing posture may evolve as Cowork matures and Anthropic expands market coverage, though current focus targets commercial professional users able to absorb premium pricing.

Total Cost of Ownership Projections

Comprehensive total cost of ownership calculations extend beyond subscription fees to encompass platform requirements, learning investments, and operational overhead. Mac hardware is the foundational prerequisite: users without a Mac must purchase a laptop or desktop meeting minimum specifications. Entry-level MacBook Air models start around one thousand dollars, though professional users may prefer MacBook Pro models ranging from fifteen hundred to three thousand dollars depending on performance needs. These hardware costs amortize across multiple years and applications, but represent a substantial initial investment for users buying a Mac primarily for Cowork.

Subscription fees accumulate to twelve hundred dollars annually for Max 5x or twenty-four hundred dollars for Max 20x, totaling six thousand to twelve thousand dollars over five years assuming stable pricing. For comparison, many professional software tools, including project management platforms, design applications, and productivity suites, cost substantially less while serving broader use cases, which makes Cowork a premium investment requiring strong value justification.

Learning curve investments consume productive time during adoption. Users must understand agentic interaction patterns, develop effective prompting skills, establish organizational workflows, and build mental models of Cowork’s capabilities and limitations. Assuming ten to twenty hours of initial exploration before achieving proficiency, this represents fifteen hundred to three thousand dollars in opportunity cost for professionals billing at one hundred fifty dollars hourly.

Operational overhead, including prompt engineering, output review, error correction, and workflow refinement, continues throughout the usage lifecycle. Even successful Cowork executions rarely produce perfect outputs without human refinement, so users must budget ongoing time for quality assurance, iterative improvement, and edge case handling. Assuming one hour weekly for Cowork workflow management, annual overhead totals approximately fifty-two hours, or seventy-eight hundred dollars at one hundred fifty dollars hourly.

Summing these components yields a first-year total cost of ownership of roughly eleven thousand five hundred to sixteen thousand two hundred dollars for users purchasing hardware (the low end assumes Max 5x, an entry-level MacBook Air, and ten learning hours; the high end assumes Max 20x, a three-thousand-dollar MacBook Pro, and twenty learning hours), or ten thousand five hundred to thirteen thousand two hundred dollars for users with existing Macs. Subsequent years drop to roughly nine thousand dollars (Max 5x) to ten thousand two hundred dollars (Max 20x) annually, since hardware and learning costs fall away while subscription fees and weekly overhead continue. Operational overhead dominates these figures, and all of them substantially exceed isolated subscription costs, emphasizing the importance of comprehensive financial analysis.

Break-even analysis for professional services providers suggests Cowork must deliver roughly six to nine hours of monthly time savings to justify first-year investments at one hundred fifty dollar hourly rates (first-year cost divided by the hourly rate, spread over twelve months). Users consistently achieving these productivity gains realize positive returns, while those with limited applicable workflows or modest time savings struggle to break even. The economic calculus varies dramatically with usage intensity, billing rates, and workflow substitutability.
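The cost components in this section can be combined into a small model, which makes the totals and break-even figures easy to recheck under different assumptions. This is a minimal sketch using the report’s own assumptions (hardware of one to three thousand dollars, ten to twenty learning hours, one overhead hour per week, one hundred fifty dollars hourly); the `Scenario` class and function names are illustrative, and learning and overhead time are valued at the billing rate as in the text.

```python
from dataclasses import dataclass

HOURLY_RATE = 150             # report's assumed billing rate (USD/hour)
OVERHEAD_HOURS_PER_YEAR = 52  # assumed one hour per week of workflow management


@dataclass
class Scenario:
    monthly_fee: int         # 100 for Max 5x, 200 for Max 20x
    hardware: int = 0        # one-time Mac purchase; 0 if a Mac is already owned
    learning_hours: int = 0  # first-year ramp-up time (10-20 hours assumed)


def first_year_cost(s: Scenario) -> int:
    """Hardware + 12 months of fees + ramp-up time + weekly overhead, in USD."""
    return (s.hardware + 12 * s.monthly_fee
            + (s.learning_hours + OVERHEAD_HOURS_PER_YEAR) * HOURLY_RATE)


def later_year_cost(s: Scenario) -> int:
    """Subsequent years keep only the fees and the weekly overhead."""
    return 12 * s.monthly_fee + OVERHEAD_HOURS_PER_YEAR * HOURLY_RATE


def breakeven_hours_per_month(s: Scenario) -> float:
    """Monthly hours saved needed to offset first-year cost at HOURLY_RATE."""
    return first_year_cost(s) / HOURLY_RATE / 12


low = Scenario(monthly_fee=100, hardware=1000, learning_hours=10)
high = Scenario(monthly_fee=200, hardware=3000, learning_hours=20)
for label, s in (("low", low), ("high", high)):
    print(label, first_year_cost(s), later_year_cost(s),
          round(breakeven_hours_per_month(s), 1))
```

Running it prints `low 11500 9000 6.4` and `high 16200 10200 9.0`. Changing `OVERHEAD_HOURS_PER_YEAR` shows how strongly every total depends on the overhead assumption, which outweighs the subscription fee in both scenarios.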

For organizational deployments, TCO multiplies with user count, while productivity benefits scale only to the extent each user has applicable workflows. A ten-person consulting firm would spend twelve to twenty-four thousand dollars annually on Max subscriptions alone and must realize team-wide time savings justifying those costs. However, organizational adoption remains speculative until Team and Enterprise plan integration arrives with appropriate multi-user licensing, administrative controls, and volume pricing.

10. Market Positioning

Cowork operates within the rapidly expanding autonomous AI agent market projected to reach eleven point seven nine billion dollars in 2026 with enterprise adoption increasing from twenty-five percent in 2025 to approximately fifty percent by 2027. This explosive growth reflects accelerating interest in AI systems capable of sustained goal-directed behavior rather than merely responding to individual prompts. Industry analysts project that by 2026, forty percent of enterprise software applications will include task-specific AI agents, up from less than five percent in 2024.

The competitive landscape segments along multiple axes, including target users, workflow domains, autonomy levels, and integration patterns. Developer-focused coding agents such as GitHub Copilot Workspace, Cursor, and Replit Agent dominate software development automation. General-purpose agents like Auto-GPT and BabyAGI provide open-source alternatives emphasizing transparency and extensibility. Enterprise workflow automation platforms including UiPath and Microsoft Power Automate integrate AI capabilities into robotic process automation (RPA) frameworks. Cowork sits between these categories: more accessible than developer tools, more flexible than rule-based RPA platforms, and more production-ready than open-source experiments.

Competitor Comparison Table

| Platform | Target Users | Workflow Focus | Autonomy Level | Platform Support | Pricing Model | Key Differentiator |
|---|---|---|---|---|---|---|
| Cowork | Knowledge workers, professionals | File management, documents, research | Medium – supervised autonomy | macOS only | $100-200/month (Max) | Accessible agentic AI for non-developers |
| Claude Code | Software developers | Coding, debugging, testing | High – terminal autonomy | macOS, Linux, Windows (CLI) | $20-200/month (Pro or Max) | Full filesystem access, parallel processes |
| GitHub Copilot Workspace | Developers | Software development | Medium – PR-focused | Cloud + IDE integration | ~$20-40/user/month | GitHub ecosystem integration |
| Cursor | Developers | Code generation, editing | Medium – editor-embedded | macOS, Linux, Windows | $20/month Pro | IDE-native AI pair programming |
| Replit Agent | Developers, students | App building, deployment | High – full-stack creation | Cloud-based | ~$20/month | Zero-setup cloud development |
| Microsoft Power Automate | Business users, IT | Workflow automation, RPA | Low-Medium – rule-based | Windows, Cloud | ~$15-40/user/month | Enterprise integration, compliance |
| Auto-GPT | Technical enthusiasts | General task automation | Very High – autonomous loops | Any (self-hosted) | Free (OSS) + API costs | Open source, customizable |
| Zapier | Business users | App integration, workflows | Low – trigger-based | Cloud | $20-50/month | 5000+ app integrations |

Unique Differentiators

Cowork’s most significant market differentiation stems from deliberately extending autonomous AI beyond technical specialists to general knowledge workers. While competitors target developers comfortable with terminals, IDEs, and programming concepts, Cowork addresses consultants, marketers, researchers, and administrators who lack coding expertise but face workflow automation opportunities. This positioning substantially expands the total addressable market while avoiding the intense competition of the developer-tools segment.

The architectural relationship with Claude Code provides technical credibility and rapid capability evolution. Sharing Claude Code’s proven agent infrastructure lets Cowork build on existing development investment rather than taking on greenfield risk. Users confident in Claude Code’s technical sophistication can transfer that trust to Cowork, reducing adoption friction compared to unproven alternatives from lesser-known vendors.

Anthropic’s brand strength in AI safety and responsible development resonates with risk-averse organizations prioritizing vendor trustworthiness. The company’s research contributions, transparent limitation disclosures, and deliberate approach to capability deployment differentiate it from competitors that pursue market share without a corresponding safety emphasis. This positioning particularly appeals to regulated industries and mission-critical applications where vendor reputation influences procurement decisions.

Integration with Claude’s conversational interface enables transitions between interactive assistance and autonomous execution within the same desktop application. Users can hold planning conversations with Claude, then delegate implementation to Cowork without switching tools. This continuity contrasts with competitors requiring separate applications, accounts, and workflows for conversational versus agentic interactions.