Table of Contents

Overview

NVIDIA CUDA 13.1 represents the largest and most comprehensive update to the CUDA platform since its invention two decades ago, released December 4, 2025. This monumental release introduces fundamental paradigm shifts in GPU programming addressing persistent challenges: traditional SIMT (Single Instruction, Multiple Thread) programming requires explicit thread-level management creating steep learning curves, hardware-specific optimizations (tensor cores, specialized memory hierarchies) demand deep architecture expertise limiting accessibility, and evolving GPU architectures necessitate kernel rewrites for optimal performance on new hardware generations.

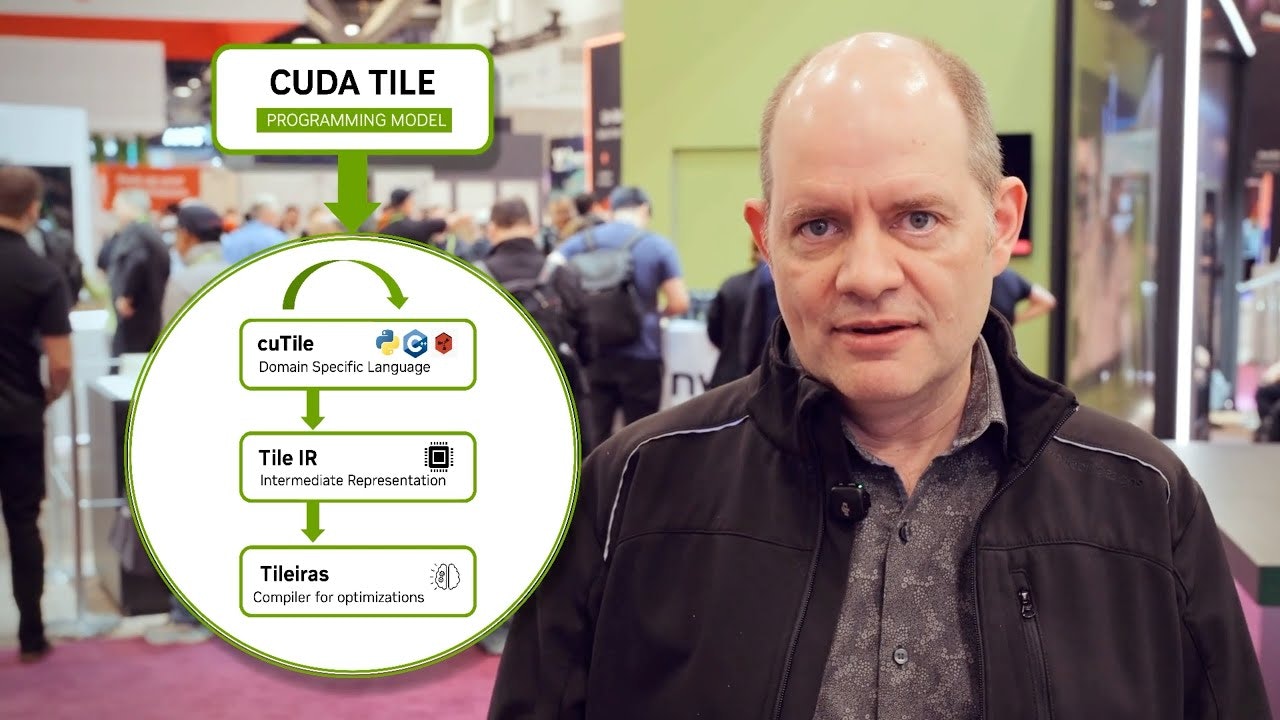

CUDA 13.1’s flagship innovation—CUDA Tile—elevates programming abstraction from thread-level SIMT to tile-based operations where developers specify data chunks (tiles) and operations while compilers and runtimes optimize execution across threads and specialized hardware automatically. This abstraction simplifies tensor core utilization (previously requiring manual PTX programming or specialized libraries), future-proofs kernels through CUDA Tile IR (virtual instruction set architecture ensuring forward compatibility across GPU generations), and reduces optimization burden enabling faster development cycles. Complementary features include green contexts providing fine-grained GPU resource partitioning for spatial isolation and latency-sensitive workloads, FP64/FP32 emulation on tensor cores accelerating double-precision computations, Nsight Compute updates supporting CUDA Tile kernel profiling, MPS enhancements with static SM partitioning and memory locality optimization, cuBLAS Grouped GEMM delivering up to 4x speedups for mixture-of-experts models, and completely rewritten CUDA Programming Guide serving both novice and advanced developers.

Currently, CUDA Tile supports NVIDIA Blackwell architecture (compute capability 10.x and 12.x) exclusively—B200, B300, GB200, GB300 products—with future CUDA releases expanding support to additional architectures. The initial release focuses on AI algorithms (deep learning, MoE models, matrix operations), with C++ implementation planned for upcoming releases complementing current cuTile Python DSL. CUDA 13.1 targets AI/ML engineers optimizing training and inference kernels, HPC scientists accelerating scientific simulations, GPU researchers exploring novel algorithms, hardware enthusiasts maximizing Blackwell GPU utilization, and students learning GPU programming through simplified abstractions democratizing access to advanced optimization techniques previously requiring expert-level knowledge.

Key Features

- CUDA Tile: Tile-Based Programming Model Abstracting Hardware Complexity: Revolutionary programming paradigm shifting from SIMT’s explicit thread management to tile-based operations where developers define data tiles (chunks) and mathematical operations while compilers/runtimes handle thread distribution, memory management, and hardware feature utilization automatically. Traditional SIMT requires specifying each thread’s execution path, data partitioning, synchronization points, and memory access patterns creating verbose error-prone code. CUDA Tile elevates abstraction enabling specification like “perform matrix multiplication on these tiles” without manual thread block configuration, shared memory management, or tensor core invocation. The tile model maps naturally onto tensor cores—specialized matrix multiplication hardware requiring previously complex PTX-level programming or library dependencies. CUDA Tile abstracts tensor cores enabling automatic utilization through high-level tile operations dramatically simplifying optimization workflows. This abstraction future-proofs kernels: code written for current Blackwell architecture automatically benefits from future GPU improvements without rewrites as CUDA Tile IR (virtual ISA) translates tile operations into architecture-specific optimized implementations. Currently supports NVIDIA Blackwell (compute capability 10.x: B200/B300, 12.x: GB200/GB300) exclusively with broader architecture support planned future releases. Initial focus targets AI algorithms (deep learning, mixture-of-experts, attention mechanisms, convolutions) where tile-based matrix operations dominate workloads.

- cuTile Python: Domain-Specific Language for Array/Tile-Based Kernels: New Python DSL enabling tile-based GPU kernel authoring without C++/CUDA syntax lowering barrier to entry for data scientists, ML engineers, and researchers comfortable with NumPy-style array programming. cuTile Python provides Pythonic interface expressing tile operations through familiar syntax while compiling to optimized GPU kernels leveraging CUDA Tile IR backend. Developers write high-level tile descriptions—array shapes, mathematical operations, data dependencies—and cuTile compiler generates efficient CUDA kernels handling block/grid decomposition, memory hierarchy management (global→shared→registers), tensor core orchestration, and warp-level optimizations automatically. This Python-first approach democratizes GPU kernel development enabling rapid prototyping, algorithm experimentation, and performance iteration without traditional CUDA’s compilation complexity or C++ template metaprogramming requirements. Integration with PyTorch, JAX, TensorFlow enables custom operator development through Python maintaining framework consistency versus context-switching to C++/CUDA. cuTile Python targets AI/ML practitioners requiring custom kernels (novel attention variants, sparse operations, domain-specific algorithms) previously necessitating C++/CUDA expertise or settling for suboptimal PyTorch built-ins. Future CUDA releases plan C++ implementation serving systems programmers preferring statically-typed compiled languages or integrating with existing C++ codebases.

- CUDA Tile IR: Virtual Instruction Set Architecture for Forward Compatibility: New intermediate representation sitting between high-level languages (Python, future C++) and GPU-specific machine code ensuring tile-based kernels remain compatible across GPU generations without source modifications. Traditional CUDA compiles directly to architecture-specific PTX/SASS creating coupling where architecture changes (Ampere→Hopper→Blackwell) require kernel rewrites extracting new hardware features (increased shared memory, faster tensor cores, improved memory bandwidth). CUDA Tile IR breaks this coupling through virtual ISA defining tile operations abstractly—tile creation, mathematical operations, memory movement patterns—independent of physical hardware implementation. When executing on specific GPU, CUDA runtime translates Tile IR into optimized architecture-specific code leveraging available hardware (Blackwell’s 5th-generation tensor cores, increased register files, enhanced shared memory). Future GPUs automatically benefit from improvements as runtime optimizations evolve without developer intervention. This forward compatibility protects software investment: write tile kernels once, run efficiently across current and future NVIDIA architectures eliminating costly porting/optimization cycles characteristic of rapidly-evolving GPU ecosystems. The virtual ISA strategy mirrors successful CPU approaches (Java bytecode, LLVM IR) where abstraction layers enable platform portability and evolution independence.

- Green Contexts: Fine-Grained GPU Resource Partitioning: Lightweight contexts enabling developers defining independent partitions of GPU resources—primarily streaming multiprocessors (SMs) and work queues (WQs)—dedicating specific resources to particular workloads ensuring spatial isolation and reducing interference. Traditional CUDA contexts share entire GPU creating contention where concurrent kernels compete for SMs, memory bandwidth, and scheduling resources causing unpredictable latency and throughput variation. Green contexts partition GPU: context A provisions 80% SMs for throughput-oriented batch processing, context B reserves 20% SMs for latency-sensitive inference ensuring immediate execution when requests arrive without waiting for batch completion. Available in CUDA Driver API since 12.4, CUDA 13.1 exposes green contexts through Runtime API improving accessibility for application developers versus driver-level programming. Use cases include latency-critical systems (real-time inference, interactive applications) requiring guaranteed SM availability, multi-tenant GPU sharing (isolating different users/workloads preventing interference), power management (allocating resources proportionally to workload priorities), and controlled concurrency (preventing resource exhaustion from concurrent kernel launches). Enhanced split() API enables customizable SM partitioning and work queue configuration minimizing false dependencies between contexts. Nsight Systems integration visualizes green context activity showing SM allocations, concurrent execution patterns, and resource utilization helping developers validating partitioning strategies.

- FP64 and FP32 Emulation on Tensor Cores Accelerating Double-Precision: cuBLAS enhancements introduced CUDA Toolkit 13.0 now extended in 13.1 enable emulating double-precision (FP64) and single-precision (FP32) matrix multiplications on tensor cores—specialized hardware traditionally supporting only FP16, BF16, TF32, INT8, FP8 datatypes. Scientific computing, molecular dynamics, computational fluid dynamics, quantum chemistry, weather modeling require FP64 precision maintaining numerical accuracy but GPU tensor cores lacked native double-precision support limiting performance. CUDA 13.1’s FP64 emulation decomposes double-precision operations into sequences of lower-precision tensor core operations maintaining numerical equivalence while achieving substantial speedups versus traditional FP64 CUDA cores. Supported GPUs include NVIDIA GB200 NVL72, RTX PRO 6000 Blackwell Server Edition enabling HPC applications benefiting from tensor core throughput. FP32 emulation similarly accelerates single-precision workloads beyond native FP32 performance through tensor core utilization. This emulation democratizes tensor cores beyond AI/ML (their traditional domain) enabling scientific HPC leveraging specialized hardware previously exclusive to deep learning creating performance improvements across broader computational science domains.

- CUDA Multi-Process Service (MPS) Enhancements: Significant MPS improvements enabling advanced GPU sharing, partitioning, and resource management:

- Memory Locality Optimization Partition (MLOPart): Blackwell-specific feature (compute capability 10.0/10.3: B200, B300; future support: GB200, GB300) creating specialized CUDA devices optimized for memory locality. Single physical GPU presents as multiple logical devices with fewer compute resources but dedicated memory partitions improving locality. Applications utilize MLOPart devices independently with reduced cross-partition interference benefiting memory-bound workloads where locality matters more than absolute compute throughput.

- Static Streaming Multiprocessor Partitioning: Ampere (compute capability 8.0) and newer GPUs support static SM partitioning providing exclusive resource allocation for MPS clients versus traditional dynamic provisioning. Launched via

mps-control-daemon -Sor--static-partitioningflag delivering deterministic resource allocation and improved isolation. Fundamental partitioning unit is “chunk” varying by architecture (8 SMs on Hopper/newer discrete GPUs). Static partitioning benefits multi-tenant environments requiring guaranteed resources, deterministic performance for benchmarking/testing, or isolation preventing noisy-neighbor effects.

- Developer Tools Updates: Comprehensive tooling enhancements supporting CUDA 13.1 features:

- NVIDIA Nsight Compute 2025.4: CUDA Tile kernel profiling support with “Result Type” column distinguishing Tile vs SIMT kernels, “Tile Statistics” section summarizing dimensions and pipeline utilization, source page metrics mapping to cuTile kernel source. Added CUDA graph node profiling from device-launched graphs and improved source navigation with clickable label links.

- NVIDIA Compute Sanitizer 2025.4: Compile-time patching through

-fdevice-sanitize=memcheckcompiler flag integrating memory error detection directly into NVCC compilation versus runtime instrumentation. Provides faster debugging runs, enhanced error detection (illegal accesses between adjacent allocations through base-and-bounds analysis), improved developer productivity catching subtle memory issues earlier. Currently supports memcheck tool. - NVIDIA Nsight Systems 2025.6.1: System-wide CUDA trace (

--cuda-trace-scope), CUDA host function trace support (graph host nodes,cudaLaunchHostFunc()), hardware-based tracing as default when supported, green context timeline rows showing SM allocations in tooltips visualizing resource utilization.

- Math Libraries Performance and Feature Updates:

- cuBLAS: Experimental Grouped GEMM API supporting FP8, BF16, FP16 datatypes on Blackwell with up to 4x speedup versus multi-stream GEMM for mixture-of-experts models. CUDA Graph support enables host-synchronization-free implementation with device-side shapes. Block-scaled FP4/FP8 matmuls (introduced CUDA 12.9) gain performance enhancements on Blackwell; BF16 performance improvements across operations.

- cuSPARSE: New SpMVOp API (sparse matrix-vector multiplication) with improved performance versus legacy CsrMV API supporting CSR format, 32-bit indices, double precision, user-defined epilogues enabling custom post-processing.

- cuFFT: cuFFT device API providing host functions querying/generating device function code and database metadata facilitating cuFFTDx code block generation improving performance for customized FFT pipelines.

- cuSOLVER: Batched SYEVD (symmetric eigenvalue decomposition) and GEEV (general eigenvalue) APIs deliver ~2x speedup on Blackwell RTX Pro 6000 versus L40S for eigen-decomposition workloads.

- CUDA Core Compute Libraries (CCCL) 3.1 Enhancements:

- Deterministic Floating-Point Reductions: CUB DeviceReduce provides three determinism modes:

not_guaranteed(single-pass atomics, fastest but non-deterministic),run_to_run(bitwise-identical results across runs on same GPU),gpu_to_gpu(bitwise-identical across different GPUs based on reproducible reduction techniques). Developers select trade-offs between performance and reproducibility critical for scientific validation, debugging, or production systems requiring consistency. - Single-Phase CUB APIs with Memory Resources: New overloads accepting memory resources eliminating cumbersome two-phase call pattern (query temp storage→allocate→execute→free). Users pass memory resource (e.g.,

cuda::device_memory_pool) and CUB handles temporary storage automatically simplifying API usage and reducing error-proneness.

- Deterministic Floating-Point Reductions: CUB DeviceReduce provides three determinism modes:

- Completely Rewritten CUDA Programming Guide: Comprehensive documentation overhaul designed for both novice and advanced CUDA programmers covering fundamentals through advanced topics with updated examples, clearer organization, expanded explanations, and CUDA 13.1 feature integration providing authoritative reference for modern CUDA development.

How It Works

CUDA 13.1’s transformative features operate through sophisticated compiler, runtime, and hardware integration:

CUDA Tile Programming Workflow:

Developers write tile-based kernels using cuTile Python DSL defining tile shapes, mathematical operations (matmul, convolutions, reductions), and data dependencies through high-level array syntax reminiscent of NumPy. The cuTile compiler analyzes tile specifications inferring optimal block/grid dimensions, determining shared memory layouts, planning memory hierarchy usage (global→L2→shared→registers), and generating tile operation schedules. Compilation produces CUDA Tile IR—architecture-independent virtual instructions representing tile operations abstractly. At runtime, CUDA driver translates Tile IR into architecture-specific optimized code for executing GPU (e.g., Blackwell): mapping tiles onto tensor cores, orchestrating warp-level parallelism, managing memory coalescing, inserting synchronization, and handling edge cases. Generated kernels execute on Blackwell tensor cores achieving high throughput through specialized matrix multiplication hardware without developers writing explicit PTX or managing low-level details. Future GPU architectures receive updated Tile IR→machine code translators leveraging hardware improvements automatically.

Green Context Resource Partitioning:

Applications create green contexts through cudaGreenCtxCreate API specifying desired SM allocation and work queue configuration. The API accepts resource descriptors defining SM counts, placement preferences, oversubscription policies, and queue depths. CUDA driver provisions requested resources ensuring green context’s SM subset remains exclusively available preventing other contexts (including primary context) from scheduling thread blocks onto reserved SMs. Kernels launched on streams belonging to green contexts execute only within provisioned resources enabling spatial isolation. Nsight Systems visualizes green context timelines showing concurrent execution across contexts, SM utilization per context, and resource contention helping developers validating partitioning strategies achieve intended isolation/performance goals.

FP64/FP32 Emulation on Tensor Cores:

cuBLAS decomposes double-precision or single-precision matrix multiplications into sequences of mixed-precision operations executable on tensor cores. For FP64 emulation, matrices decompose into high/low components representable in lower precision (TF32, FP32) maintaining numerical accuracy through iterative refinement or compensated summation techniques. Tensor cores execute partial matrix products; cuBLAS accumulates results reconstructing full double-precision output. This decomposition achieves speedups versus native FP64 CUDA cores by exploiting tensor core throughput despite multiple passes required. Hardware support varies: GB200 NVL72, RTX PRO 6000 Blackwell Server Edition enable FP64 emulation; future releases expand architecture support.

MPS Static SM Partitioning:

Launching MPS control daemon with -S flag enables static partitioning mode where MPS divides GPU SMs into fixed “chunks” (architecture-dependent: 8 SMs on Hopper/newer discrete GPUs). Each MPS client receives exclusive chunk allocation ensuring deterministic resource availability. Clients cannot access SMs outside assigned chunks creating isolation preventing interference from other clients. Static partitioning trades flexibility (dynamic provisioning adapts to workload demands) for predictability (guaranteed resources regardless of concurrent activity) benefiting scenarios requiring deterministic performance, fair resource allocation, or strict isolation.

Developer Tools Integration:

Nsight Compute profiles CUDA Tile kernels displaying tile-specific metrics (tile dimensions, pipeline utilization, tensor core efficiency) alongside traditional SIMT metrics. Nsight Systems traces green context activity showing SM allocations, concurrent kernel execution across contexts, and resource utilization patterns. Compute Sanitizer’s compile-time patching integrates memory checking into NVCC compilation enabling faster iterative debugging versus runtime instrumentation overhead.

Use Cases

CUDA 13.1’s specialized features address scenarios where programming abstraction, hardware utilization, resource partitioning, or numerical precision directly impact application success:

High-Performance AI Model Training and Inference:

- ML engineers developing transformer models leverage CUDA Tile simplifying attention mechanism kernels (flash attention variants, sparse attention patterns) through tile-based matrix operations eliminating manual tensor core programming

- cuBLAS Grouped GEMM acceleration (4x speedup) benefits mixture-of-experts models executing many small matmuls concurrently reducing training time

- Green contexts partition GPUs for multi-model serving: 80% SMs handle batch inference throughput, 20% SMs reserved for latency-sensitive realtime requests ensuring immediate response

- FP8 support on Blackwell enables mixed-precision training balancing memory capacity against throughput

Scientific Computing and HPC Simulations:

- Computational scientists accelerate molecular dynamics, fluid dynamics, climate modeling, quantum chemistry simulations through FP64 emulation on tensor cores achieving speedups over traditional double-precision CUDA cores

- cuSOLVER eigenvalue decomposition improvements (2x Blackwell speedup) benefit quantum mechanics, structural analysis, vibration analysis applications

- CUDA Tile abstracts complex optimization enabling faster algorithm prototyping for numerical methods without low-level performance engineering

- Deterministic floating-point reductions ensure reproducible scientific results critical for validation, debugging, or regulatory compliance

Multi-Tenant GPU Cloud Infrastructure:

- Cloud providers partition GPUs using green contexts or MPS static SM allocation isolating customer workloads preventing noisy-neighbor interference

- Memory locality optimization partition (MLOPart) on Blackwell creates logical devices with dedicated memory improving tenant isolation and locality

- Resource quotas implemented through SM partitioning enforce fair sharing or tiered service levels

- Deterministic performance through static partitioning enables reliable SLA guarantees for enterprise customers

Real-Time Interactive Applications and Robotics:

- Autonomous vehicle perception systems use green contexts reserving SMs for latency-critical object detection ensuring immediate processing without batch-induced delays

- Interactive graphics applications partition resources: 70% SMs for rendering throughput, 30% SMs for physics simulation ensuring responsive frame rates

- Robotics control loops requiring deterministic latency leverage green context isolation preventing GPU scheduling unpredictability

- Real-time video analytics pipelines balance throughput (batch processing) against latency (immediate alerts) through resource partitioning

GPU Kernel Development and Optimization:

- Researchers exploring novel algorithms prototype using cuTile Python iterating rapidly versus traditional C++/CUDA development cycles

- Performance engineers benchmark kernel variations using deterministic reductions ensuring reproducible measurements across runs

- Library developers future-proof implementations through CUDA Tile IR maintaining compatibility across GPU generations without architecture-specific branches

- Students learning GPU programming benefit from tile abstraction avoiding overwhelming thread-level complexity while understanding parallel algorithms

Pros \& Cons

Advantages

- CUDA Tile Dramatically Simplifies GPU Programming: The tile-based abstraction eliminates explicit thread management, shared memory orchestration, and tensor core invocation replacing verbose SIMT code with high-level tile operations. This simplification accelerates development, reduces bugs from manual synchronization/memory management, and lowers barrier to entry for newcomers while maintaining performance through compiler optimizations. Developers focus on algorithms versus hardware minutiae improving productivity.

- Future-Proof Kernels Through Virtual ISA: CUDA Tile IR ensures code written for current Blackwell architecture automatically benefits from future GPU improvements without rewrites. This forward compatibility protects software investment eliminating costly porting/optimization cycles when new architectures emerge. Unlike traditional CUDA requiring architecture-specific tuning extracting maximum performance, Tile IR provides “write once, run efficiently everywhere” portability across NVIDIA GPU generations.

- Green Contexts Enable Fine-Grained Resource Control: Spatial SM partitioning addresses critical multi-tenant, latency-sensitive, and interference-reduction scenarios impossible with traditional shared contexts. Green contexts provide guaranteed resources for high-priority workloads, enable fair multi-tenant sharing, reduce performance unpredictability, and support power management through proportional resource allocation. This control proves invaluable for production deployments requiring predictable GPU behavior.

- FP64 Emulation Democratizes Tensor Cores for HPC: Enabling double-precision scientific workloads leveraging tensor cores—previously exclusive to AI/ML—delivers substantial speedups for computational science, molecular dynamics, quantum chemistry, weather modeling requiring FP64 accuracy. This democratization expands tensor core value beyond deep learning improving GPU ROI for diverse workload portfolios typical of supercomputing centers and research institutions.

- Comprehensive Math Library Performance Gains: cuBLAS Grouped GEMM (4x MoE speedup), cuSOLVER eigenvalue improvements (2x Blackwell), cuSPARSE SpMVOp enhancements, and cuFFT device API provide immediate performance benefits for applications using these libraries without code changes. These library optimizations deliver “free” speedups on Blackwell hardware simply by linking updated CUDA Toolkit versions.

- Enhanced Developer Tools Improving Productivity: Nsight Compute Tile profiling, Compute Sanitizer compile-time patching, Nsight Systems green context visualization, and CCCL single-phase APIs reduce debugging time, simplify performance analysis, and streamline development workflows. These tool improvements compound productivity gains from language-level abstractions creating multiplicative developer experience enhancements.

- Open Documentation and Learning Resources: Completely rewritten CUDA Programming Guide, cuTile examples, green context tutorials, and comprehensive API documentation accelerate onboarding, reduce learning curves, and support self-service problem-solving. Strong documentation prevents productivity loss from unclear APIs or missing examples characteristic of rapidly-evolving platforms.

- Free CUDA Toolkit with No Licensing Costs: CUDA 13.1 remains freely downloadable without per-developer licenses, runtime fees, or usage-based charges. This zero-cost model democratizes access enabling students, researchers, startups, and enterprises leveraging advanced features without budget constraints. Optional paid enterprise support provides commercial backing for production deployments requiring SLAs.

Disadvantages

- Steep Learning Curve Despite Abstraction Improvements: While CUDA Tile simplifies some aspects, mastering GPU programming fundamentals (memory hierarchies, parallelism, synchronization, performance analysis) remains challenging. Developers unfamiliar with parallel computing concepts face initial learning investment understanding when/how to apply tile-based programming, interpret profiling results, or diagnose performance issues. Abstraction helps but doesn’t eliminate inherent GPU complexity.

- Hardware Lock-In to NVIDIA Ecosystem: CUDA 13.1 exclusively supports NVIDIA GPUs creating vendor dependence preventing portability to AMD ROCm, Intel oneAPI, Apple Metal, or other GPU platforms. Organizations investing heavily in CUDA face switching costs if competitive or economic factors favor alternative GPU vendors. While CUDA’s performance and ecosystem justify lock-in for many, lack of true cross-vendor portability creates strategic risk.

- CUDA Tile Limited to Blackwell Architecture Initially: The revolutionary tile programming model currently supports only NVIDIA Blackwell (compute capability 10.x/12.x: B200, B300, GB200, GB300) excluding Hopper, Ada Lovelace, Ampere, Turing, Volta users despite these representing massive installed base. Developers on older architectures cannot leverage CUDA Tile requiring traditional SIMT programming. Future releases promise broader support but timeline remains unspecified creating adoption uncertainty.

- cuTile Python Only; C++ Implementation Pending: Initial CUDA Tile release provides Python DSL exclusively requiring C++/CUDA developers waiting for planned future C++ implementation. This limitation impacts systems programmers preferring statically-typed compiled languages, existing C++ codebases requiring Tile integration, or performance-critical scenarios where Python overhead concerns exist (despite compilation to native kernels). Python-only strategy accelerates AI/ML adoption but fragments developer community short-term.

- Maturity Uncertainty for Production-Critical Workloads: Launched December 2025, CUDA 13.1 represents very new major release lacking extensive production battle-testing, comprehensive bug reports across diverse workloads, or proven reliability over extended periods. Early adopters face potential undiscovered issues, performance regressions on edge cases, or API instabilities requiring updates. Production-critical deployments may prefer waiting for subsequent releases (13.2+) incorporating community feedback and bug fixes.

- Complexity Managing Green Context Partitioning: While green contexts enable powerful resource control, determining optimal SM allocations, managing multiple contexts, preventing resource fragmentation, and balancing flexibility versus isolation requires experimentation and expertise. Misconfigured partitioning (too few SMs for workload demands, excessive oversubscription, suboptimal work queue configuration) can degrade performance versus traditional shared contexts. Developers need profiling, benchmarking, and iterative tuning extracting green context benefits.

- FP64 Emulation Not Universally Available: Double-precision tensor core emulation supports only specific Blackwell SKUs (GB200 NVL72, RTX PRO 6000 Blackwell Server Edition) excluding consumer Blackwell cards, other architectures, or budget-constrained deployments. HPC users on non-supported hardware cannot leverage FP64 emulation requiring traditional CUDA cores or upgrading expensive hardware. Limited hardware support fragments HPC community adoption.

- Breaking Changes and Compatibility Considerations: Major releases like CUDA 13.1 potentially introduce API changes, deprecated features, or behavioral modifications requiring code updates. Applications deeply integrated with previous CUDA versions may face migration effort adapting to new paradigms (green contexts, Tile IR). While NVIDIA maintains backward compatibility generally, feature deprecations and best practice evolution create ongoing maintenance burden for legacy codebases.

How Does It Compare?

CUDA 13.1 vs. AMD ROCm 7.0 (Open-Source GPU Computing Platform)

AMD ROCm (Radeon Open Compute) is AMD’s open-source software stack for GPU programming supporting AMD Instinct MI300/MI350 series accelerators providing HIP (C++ dialect), OpenMP, OpenCL programming models targeting HPC and AI workloads.

Vendor Ecosystem:

- CUDA 13.1: NVIDIA-exclusive supporting only NVIDIA GPUs creating vendor lock-in but maximizing NVIDIA hardware utilization

- AMD ROCm: AMD-exclusive supporting AMD Instinct/Radeon GPUs with HIP providing CUDA-to-HIP translation enabling code portability

Programming Abstractions:

- CUDA 13.1: CUDA Tile introduces tile-based programming simplifying optimization through high-level abstractions automatically leveraging tensor cores

- AMD ROCm: Traditional kernel programming (HIP C++) without tile-based abstraction layer requiring manual optimization for matrix cores/CDNA architecture features

Market Maturity:

- CUDA 13.1: 20-year platform evolution with massive ecosystem (6+ million developers), extensive libraries, comprehensive tooling, proven production reliability

- AMD ROCm: Younger platform (launched ~2016) with growing but smaller ecosystem, expanding AI framework support (PyTorch, TensorFlow upstream), increasing supercomputer adoption (Frontier, LUMI)

AI Framework Support:

- CUDA 13.1: Native first-class support across all major frameworks (PyTorch, TensorFlow, JAX, MXNet); ecosystem assumes CUDA

- AMD ROCm: Upstream support in PyTorch/TensorFlow requiring ROCm installation; compatibility improving but historically lagged CUDA

HPC Library Ecosystem:

- CUDA 13.1: Mature math libraries (cuBLAS, cuFFT, cuSPARSE, cuSOLVER) with decades optimization; Blackwell-specific enhancements (Grouped GEMM, FP64 emulation)

- AMD ROCm: Growing library ecosystem (rocBLAS, rocFFT, rocSPARSE, rocSOLVER) with comparable functionality but less optimization maturity versus CUDA

Hardware Availability:

- CUDA 13.1: Blackwell (B200, B300, GB200, GB300) for latest features; broad GPU portfolio from consumer (RTX) to datacenter (H100, A100)

- AMD ROCm: AMD Instinct MI350/MI300 series datacenter accelerators; consumer RDNA GPUs (Radeon 6000/7000) with unofficial ROCm support

When to Choose CUDA 13.1: For NVIDIA hardware deployments, maximum ecosystem maturity, comprehensive tooling, tile-based programming abstractions, or leveraging existing CUDA investments.

When to Choose AMD ROCm: For AMD hardware deployments, open-source philosophy, avoiding NVIDIA vendor lock-in, or supercomputer environments standardized on AMD (Frontier, LUMI systems).

CUDA 13.1 vs. Intel oneAPI (Cross-Architecture Programming Model)

Intel oneAPI is Intel’s unified programming model supporting diverse architectures (Intel CPUs, GPUs, FPGAs) through SYCL-based DPC++ providing cross-vendor portability and heterogeneous computing abstractions.

Cross-Platform Philosophy:

- CUDA 13.1: NVIDIA-specific optimizing exclusively for NVIDIA GPUs maximizing performance through hardware-specific features

- Intel oneAPI: Cross-architecture supporting Intel GPUs (Iris Xe, Arc, Data Center GPU Max), CPUs, FPGAs through unified SYCL API enabling portability

Programming Model:

- CUDA 13.1: CUDA C++ with CUDA Tile (Python DSL) providing NVIDIA-specific abstractions including tensor core utilization, green contexts

- Intel oneAPI: SYCL/DPC++ (ISO C++ based) providing standard parallel programming abstractions portable across vendors; less hardware-specific optimization

Ecosystem Maturity:

- CUDA 13.1: Dominant GPU ecosystem with 20 years evolution, massive developer base, comprehensive libraries, proven production scale

- Intel oneAPI: Newer unified model (launched 2020) with growing adoption; stronger CPU legacy than GPU ecosystem maturity

GPU Market Position:

- CUDA 13.1: Dominant datacenter GPU (NVIDIA H100/A100/Blackwell powering most AI infrastructure); consumer gaming leadership (RTX series)

- Intel oneAPI: Intel Arc consumer GPUs, Data Center GPU Max (Ponte Vecchio) targeting HPC; smaller GPU market share versus NVIDIA/AMD

Performance Optimization:

- CUDA 13.1: Decades NVIDIA hardware co-design enabling maximum performance extraction through architecture-specific features (tensor cores, Blackwell enhancements)

- Intel oneAPI: Portable abstractions prioritize cross-platform compatibility over maximum single-architecture performance; less mature GPU optimization versus CUDA

When to Choose CUDA 13.1: For NVIDIA GPU deployments, maximum performance, mature ecosystem, AI/ML workloads, or existing CUDA codebases.

When to Choose Intel oneAPI: For heterogeneous environments (CPUs+GPUs+FPGAs), Intel hardware deployments, cross-vendor portability requirements, or avoiding GPU vendor lock-in through standards-based SYCL.

CUDA 13.1 vs. OpenCL (Cross-Platform GPU Computing Standard)

OpenCL is Khronos Group open standard for cross-platform parallel programming supporting CPUs, GPUs, FPGAs from multiple vendors (NVIDIA, AMD, Intel, ARM, Qualcomm) providing vendor-neutral heterogeneous computing.

Standardization:

- CUDA 13.1: Proprietary NVIDIA platform optimized exclusively for NVIDIA hardware with vendor-specific features (CUDA Tile, green contexts)

- OpenCL: Open standard (Khronos Group) supporting diverse vendors enabling true cross-platform portability but lowest-common-denominator feature set

Performance:

- CUDA 13.1: Maximum performance on NVIDIA GPUs through hardware co-design, specialized features (tensor cores), decades optimization

- OpenCL: Performance varies dramatically by vendor/implementation; generally slower than vendor-native solutions (CUDA on NVIDIA, ROCm on AMD) due to abstraction overhead

Ecosystem Vitality:

- CUDA 13.1: Thriving active ecosystem with continuous innovation (CUDA Tile major advancement), comprehensive tooling updates, massive community

- OpenCL: Stagnating ecosystem; Apple deprecated macOS support; limited mindshare versus vendor-specific platforms; slower standard evolution

Feature Richness:

- CUDA 13.1: Cutting-edge features (tile programming, green contexts, FP64 emulation, advanced profiling) unavailable in OpenCL specifications

- OpenCL: Baseline feature set (OpenCL 3.0) lacking modern abstractions, tensor core support, or advanced resource management limiting optimization potential

Developer Experience:

- CUDA 13.1: Rich tooling (Nsight suite), extensive documentation, large community, abundant learning resources, comprehensive library ecosystem

- OpenCL: Fragmented tooling quality across vendors, limited learning resources versus CUDA, smaller community, inconsistent documentation

Hardware Support:

- CUDA 13.1: NVIDIA GPUs exclusively; Blackwell requires CUDA 13.1 for latest features

- OpenCL: Broad hardware support (NVIDIA, AMD, Intel GPUs; ARM Mali; Qualcomm Adreno) enabling portability but lowest-common-denominator performance

When to Choose CUDA 13.1: For NVIDIA hardware, maximum performance, modern features, rich ecosystem, AI/ML workloads, or production deployments requiring comprehensive tooling.

When to Choose OpenCL: For true cross-vendor portability (supporting NVIDIA+AMD+Intel from single codebase), embedded systems, mobile GPUs, or avoiding proprietary vendor lock-in despite performance/feature trade-offs.

Final Thoughts

NVIDIA CUDA 13.1 represents transformative milestone in GPU programming through CUDA Tile’s paradigm shift from thread-level SIMT to tile-based operations, green contexts’ fine-grained resource partitioning, FP64 emulation democratizing tensor cores for HPC, and comprehensive tooling/library enhancements. The December 4, 2025 release delivers NVIDIA’s boldest CUDA evolution positioning platform for next-generation AI workloads (trillion-parameter models, mixture-of-experts architectures), HPC scientific computing (double-precision simulations leveraging tensor cores), multi-tenant cloud infrastructure (spatial SM isolation), and future GPU architectures (Tile IR forward compatibility).

CUDA Tile’s abstraction dramatically lowers GPU programming complexity enabling broader developer participation while maintaining performance through compiler optimizations and automatic tensor core utilization. This democratization proves critical as AI/ML demands outpace available GPU expertise—simplifying kernel development accelerates innovation enabling data scientists and ML engineers implementing custom operators without traditional CUDA mastery. The Python-first cuTile DSL particularly benefits AI community familiar with NumPy-style array programming maintaining ecosystem consistency versus context-switching to C++/CUDA.

Green contexts address persistent multi-tenant and latency-sensitive challenges where traditional shared GPU contexts create interference and unpredictability. Cloud providers, interactive applications, autonomous systems, and real-time inference pipelines benefit from guaranteed resource availability, spatial isolation, and deterministic performance impossible with conventional approaches. Combined with MPS enhancements (static SM partitioning, MLOPart memory locality), CUDA 13.1 enables sophisticated resource management matching diverse workload requirements within single GPU maximizing utilization efficiency.

The platform particularly excels for:

AI/ML researchers and engineers developing transformer models, mixture-of-experts architectures, custom attention mechanisms, or novel algorithms benefiting from CUDA Tile’s simplified tensor operations and cuBLAS Grouped GEMM acceleration (4x MoE speedup)

HPC scientists in molecular dynamics, computational fluid dynamics, quantum chemistry, weather modeling leveraging FP64 emulation on tensor cores achieving speedups over traditional double-precision CUDA cores democratizing specialized hardware

Cloud infrastructure operators managing multi-tenant GPU clusters using green contexts or MPS static partitioning isolating customer workloads, ensuring fair resource allocation, and providing deterministic SLA-backed performance

Real-time systems developers in autonomous vehicles, robotics, interactive graphics, video analytics partitioning GPU resources guaranteeing latency-sensitive workload availability through SM reservation preventing batch-induced delays

GPU kernel library maintainers future-proofing implementations through CUDA Tile IR ensuring compatibility across GPU generations without architecture-specific code branches or costly porting cycles

For users requiring cross-vendor GPU portability, AMD ROCm’s open-source stack supports AMD Instinct accelerators with HIP providing CUDA-translation enabling platform diversity. For heterogeneous computing spanning CPUs+GPUs+FPGAs, Intel oneAPI’s SYCL-based approach offers unified programming model though less GPU optimization maturity. For standards-based truly vendor-neutral computing, OpenCL provides baseline cross-platform capabilities accepting performance trade-offs for portability.

But for NVIDIA hardware deployments prioritizing maximum performance, cutting-edge features, ecosystem maturity, or AI/ML workload optimization, CUDA 13.1’s comprehensive enhancements justify continued platform investment. The CUDA Tile paradigm shift, green context resource control, tensor core democratization, and tooling improvements represent evolutionary leaps impossible for competitors replicating short-term given NVIDIA’s hardware-software co-design advantages and decades accumulated optimization expertise.

The platform’s primary limitations—hardware lock-in to NVIDIA excluding AMD/Intel alternatives, CUDA Tile limited to Blackwell architecture initially, cuTile Python-only pending C++ implementation, and early-stage maturity requiring production validation—reflect expected constraints of ambitious platform evolution pioneering new programming paradigms. These limitations will mitigate as future CUDA releases expand architecture support, deliver C++ Tile implementation, and incorporate community feedback stabilizing APIs.

The critical strategic question centers on NVIDIA ecosystem commitment: organizations deeply invested in CUDA benefit immensely from 13.1’s advancements extracting maximum Blackwell performance, future-proofing kernels, and simplifying development workflows. Organizations pursuing multi-vendor GPU strategies or concerned about NVIDIA dependence must weigh CUDA’s technical superiority against portability/flexibility trade-offs of AMD ROCm, Intel oneAPI, or OpenCL alternatives accepting performance gaps for reduced vendor lock-in.

For developers and organizations prioritizing GPU performance, ecosystem maturity, comprehensive tooling, or AI/ML workload optimization on NVIDIA hardware, CUDA 13.1’s revolutionary CUDA Tile programming model, green context resource partitioning, FP64 tensor core emulation, and extensive library/tool enhancements deliver transformative capabilities justifying immediate adoption—positioning GPU computing for next decade of AI/HPC advancement through simplified programming abstractions, hardware feature democratization, and forward-compatible software architectures.