Overview

DeepSeek-V3-0324, developed by DeepSeek AI, is an open-source large language model with 685 billion parameters. The model isn’t just about size: it offers enhanced coding capabilities, improved reasoning, and a 128K-token context window. Let’s dive into what makes DeepSeek-V3-0324 a force to be reckoned with.

Key Features

DeepSeek-V3-0324 comes packed with impressive features designed to tackle complex AI tasks. Here’s a breakdown of what it offers:

- 685B MoE Parameters: The model uses a Mixture-of-Experts (MoE) architecture with 685 billion total parameters, of which only a subset is activated for any given input.

- 128K Context Window: A huge context window enables the model to process and retain information from much larger documents and conversations, leading to more coherent and relevant outputs.

- Enhanced Coding and Reasoning Abilities: DeepSeek-V3-0324 excels at generating, understanding, and debugging code, as well as tackling complex reasoning problems.

- Open-Source under MIT License: The open-source nature of the model promotes collaboration, transparency, and accessibility for researchers and developers.
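To make the 128K context window concrete, here is a rough sketch of checking whether a document is likely to fit before sending it to the model. Everything in it is an assumption for illustration: the function name `fits_in_context`, the 4-characters-per-token heuristic, the output reserve, and the reading of "128K" as 128,000 tokens. A real check would count tokens with the model’s own tokenizer.

```python
import math


def fits_in_context(text, context_tokens=128_000, chars_per_token=4.0,
                    reserve_for_output=4_000):
    """Estimate whether `text` fits in the context window.

    Returns (fits, estimated_tokens). The token estimate is a crude
    character-count heuristic, not a real tokenizer (assumption).
    """
    estimated_tokens = math.ceil(len(text) / chars_per_token)
    fits = estimated_tokens + reserve_for_output <= context_tokens
    return fits, estimated_tokens


# A synthetic 400,000-character document as a stand-in for real input.
document = "a" * 400_000
fits, estimated = fits_in_context(document)
print(f"estimated tokens: {estimated}, fits: {fits}")
```

Reserving part of the window for the response matters because, in the usual setup, the prompt and the generated output share the same context budget.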

How It Works

DeepSeek-V3-0324 uses a mixture-of-experts (MoE) architecture. Instead of activating all parameters for every input, a routing mechanism selects, for each token, a small subset of “expert” networks to process it. Only the selected experts run, so the compute cost per token is far lower than the 685 billion total parameters would suggest, although all of the weights must still be stored and loaded to serve the model. The 128K-token context window, in turn, lets the model take long documents and conversations into account when generating a response, which helps it produce more relevant and contextually aware outputs.
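The idea of selecting a few experts per input can be sketched in a few lines of Python. This is a generic top-k softmax gating toy, not DeepSeek’s actual routing scheme: the experts are scalar functions, the router scores are hard-coded, and the names `route_top_k` and `moe_layer` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(x, experts, router_scores, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    output = 0.0
    for index, weight in route_top_k(router_scores, k):
        output += weight * experts[index](x)  # unselected experts never run
    return output


# Toy experts: scalar functions standing in for the feed-forward
# sub-networks of a real MoE layer (illustrative assumption).
experts = [lambda x, m=m: m * x for m in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 1.5]  # would come from a learned router

selected = route_top_k(router_scores, k=2)
print("selected experts and weights:", selected)
print("layer output:", moe_layer(10.0, experts, router_scores, k=2))
```

With four experts and `k=2`, only half of the expert functions are evaluated for this input. That is the source of the efficiency gain: the parameter count grows with the number of experts, while per-token compute grows only with `k`.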

Use Cases

The capabilities of DeepSeek-V3-0324 open up a wide range of potential applications. Here are a few key use cases:

- Code Generation: Generate code snippets or entire programs, and assist in debugging existing codebases.

- Mathematical Problem-Solving: Tackle complex mathematical problems, from basic arithmetic to advanced calculus.

- Multilingual Tasks: Translate between languages, generate content in multiple languages, and understand nuances across different linguistic contexts.

- AI Research and Development: Serve as a powerful tool for researchers exploring new frontiers in natural language processing and artificial intelligence.

Pros & Cons

Like any powerful tool, DeepSeek-V3-0324 has its strengths and weaknesses. Let’s examine the advantages and disadvantages.

Advantages

- High performance in coding tasks: Excels at code generation, understanding, and debugging, making it invaluable for software development.

- Open-source accessibility: The MIT license promotes collaboration and transparency, and allows for customization and adaptation.

- Large context window: The 128K token context window enables the model to process and retain information from much larger documents and conversations.

Disadvantages

- Requires substantial computational resources for deployment: The sheer size of the model necessitates significant hardware and infrastructure for optimal performance.
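A back-of-the-envelope calculation shows why deployment is demanding. The sketch below estimates the memory needed to hold the weights alone at a few common numeric precisions. The helper name `weight_memory_gb` and the choice of precisions are assumptions for illustration, and the estimate ignores activations, the key-value cache, and any runtime overhead.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}


def weight_memory_gb(num_params, dtype):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


TOTAL_PARAMS = 685e9  # parameter count quoted in this article

for dtype in ("fp8", "bf16", "fp32"):
    print(f"{dtype}: {weight_memory_gb(TOTAL_PARAMS, dtype):,.0f} GB")
```

Even at one byte per parameter the weights come to about 685 GB, which is well beyond a single accelerator and means the model has to be spread across multiple devices or aggressively quantized.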

How Does It Compare?

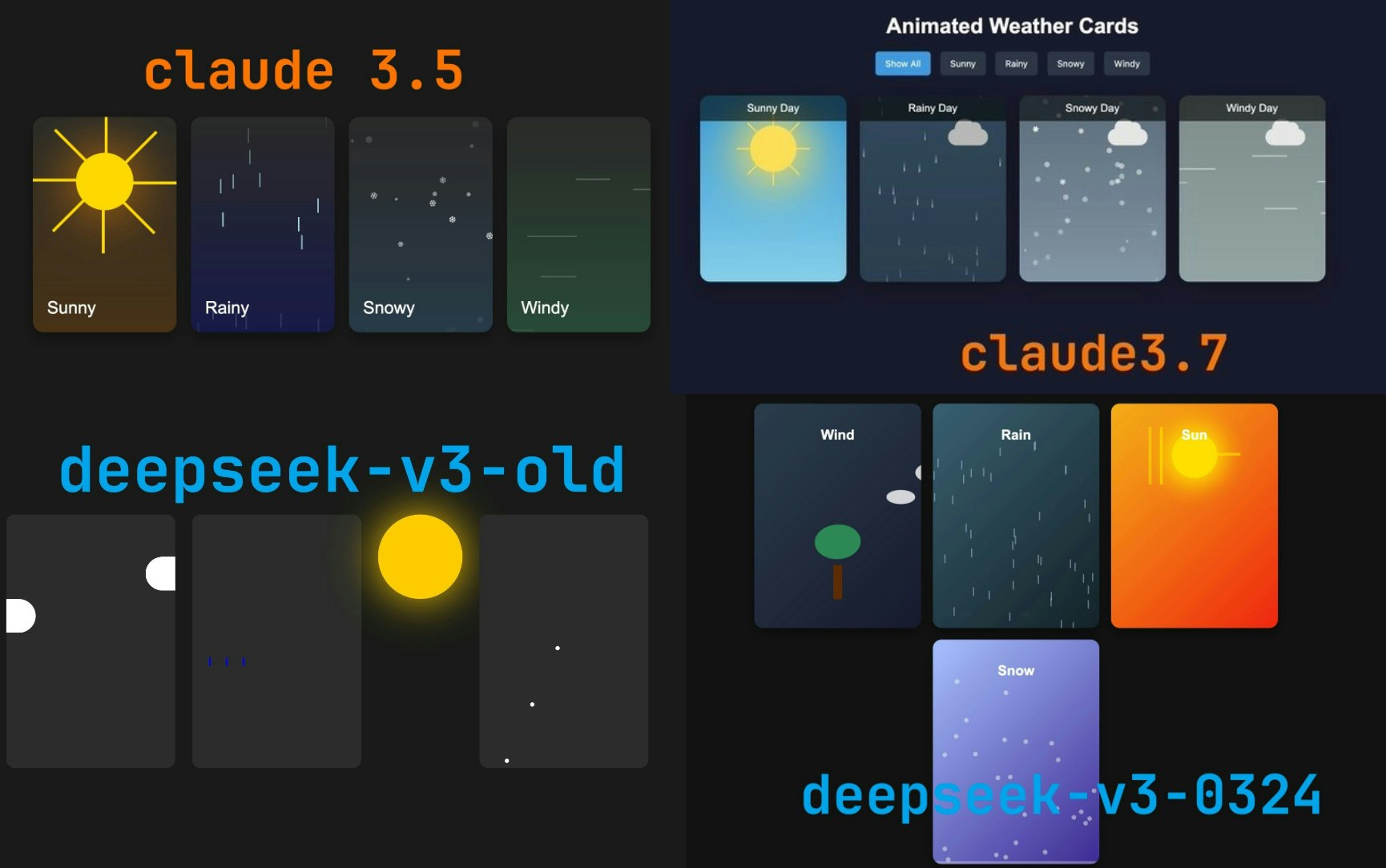

When considering large language models, it’s important to understand how they stack up against the competition.

- Claude 3.7 Sonnet: Claude 3.7 Sonnet is a proprietary model known for its strong reasoning capabilities. Its weights are not publicly released, so it cannot be self-hosted or modified the way the open-source DeepSeek-V3-0324 can.

- GPT-4: GPT-4 offers advanced capabilities across a wide range of tasks, but it is not open-source, restricting customization and community-driven development. DeepSeek-V3-0324 provides an open-source alternative with competitive performance, particularly in coding tasks.

Final Thoughts

DeepSeek-V3-0324 represents a significant step forward in open-source large language models. Its size, enhanced coding abilities, and 128K-token context window make it a powerful tool for developers and researchers. While the computational requirements are a factor to consider, the open-source license and strong performance make DeepSeek-V3-0324 a compelling option.