Overview

In the landscape of AI-powered voice applications, building production-grade voice agents has traditionally required managing complex infrastructure: scaling model deployments, controlling multi-region latency, provisioning GPUs, configuring autoscaling, and meeting privacy and compliance requirements. Hathora addresses this complexity with an inference platform optimized specifically for voice AI. Developers can deploy conversational agents across 14 global regions with 32ms average edge latency and a 2.2-second container startup time, without DevOps overhead, using open-source or proprietary models. Rather than managing Kubernetes clusters, GPU provisioning, or regional replication themselves, developers deploy a model once, and Hathora handles global distribution, auto-scaling, regional failover, and performance optimization. Teams can start on shared endpoints and later move to dedicated infrastructure when privacy, compliance, or VPC requirements demand it.
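As a rough illustration of "route users to the closest region and fail over when it is unhealthy," the sketch below picks the lowest-latency region that passes its health check. The region names, round-trip times, and the `pick_region` helper are hypothetical; this is not Hathora's API or its actual routing logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A deployment region as seen by one client (hypothetical fields)."""
    name: str
    rtt_ms: float   # measured round-trip time from this client
    healthy: bool   # result of the most recent health check


def pick_region(regions: list[Region]) -> Region:
    """Return the lowest-latency healthy region.

    Unhealthy regions are skipped, so traffic fails over to the
    next-closest healthy region.
    """
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.rtt_ms)


if __name__ == "__main__":
    regions = [
        Region("us-east", rtt_ms=18.0, healthy=False),  # nearest, but failing checks
        Region("us-west", rtt_ms=64.0, healthy=True),
        Region("eu-central", rtt_ms=95.0, healthy=True),
    ]
    print(pick_region(regions).name)  # us-west
```

Failover falls out of the same rule: once the nearest region fails its health check, the next-closest healthy region wins.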

Key Features

Hathora combines low-latency inference orchestration with voice-specific optimization:

- Zero-DevOps Voice Deployment: Deploy voice agents without managing infrastructure: no Kubernetes configuration, GPU provisioning, or network setup required. Hathora handles orchestration automatically.

- Multi-Region Global Deployment: Voice models are distributed across 14 global regions, and user traffic is routed to the geographically closest deployment. Users worldwide see consistently low edge latency (under 50ms) without manual regional configuration.

- Ultra-Low Latency Performance: 32ms average edge latency and a 2.2-second container startup time enable natural, responsive voice interactions. Latency is treated as a design constraint from the start rather than something to optimize afterward, which matters given voice applications' tight real-time targets (sub-100ms for human-like perception).

- Multi-Model Flexibility: Support for open-source models (Llama, Mistral, Whisper, and others) alongside proprietary models (GPT-5, Claude, and others). Developers can mix and match ASR (automatic speech recognition), TTS (text-to-speech), and LLM models from different providers within a single deployment.

- Shared and Dedicated Hosting Options: Start immediately on shared endpoints for rapid experimentation, then move to dedicated infrastructure for stronger privacy, compliance, or performance isolation without architectural changes.

- VPC Integration and Compliance: Deploy models within your Virtual Private Cloud (VPC) for isolated infrastructure, helping meet stringent compliance requirements (HIPAA, SOC 2) and data residency mandates.

- Bring Your Own Models/Containers: Deploy custom-trained models by providing a Dockerfile. Hathora builds the container and handles orchestration, scaling, and multi-region replication.

- Cost-Optimized Hybrid Infrastructure: Combines cost-effective committed bare-metal capacity with auto-scaling cloud resources, enabling organizations to balance cost and performance based on demand patterns.

- Comprehensive Observability: Real-time performance metrics, latency monitoring, error tracking, and integration with external observability tools such as Prometheus provide visibility across the inference pipeline.
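Mixing ASR, LLM, and TTS models from different providers, and seeing where a voice turn spends its time, is easiest when each stage sits behind a small interface. The sketch below assumes a simple cascaded pipeline; the `ASR`, `LLM`, and `TTS` protocols and the stub classes are hypothetical illustrations, not Hathora's SDK. In a real deployment each stub would call a hosted model endpoint.

```python
import time
from typing import Callable, Protocol


class ASR(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LLM(Protocol):
    def reply(self, text: str) -> str: ...


class TTS(Protocol):
    def synthesize(self, text: str) -> bytes: ...


# Hypothetical stand-ins so the example runs without any external service.
class EchoASR:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UpperLLM:
    def reply(self, text: str) -> str:
        return f"You said: {text.upper()}"


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


def _timed(timings: dict[str, float], key: str, fn: Callable, arg):
    """Call fn(arg) and store its wall-clock duration in milliseconds."""
    start = time.perf_counter()
    result = fn(arg)
    timings[key] = (time.perf_counter() - start) * 1000
    return result


def run_turn(audio: bytes, asr: ASR, llm: LLM, tts: TTS) -> tuple[bytes, dict[str, float]]:
    """Run one voice turn (speech -> text -> reply -> speech) with per-stage timings."""
    timings: dict[str, float] = {}
    text = _timed(timings, "asr_ms", asr.transcribe, audio)
    answer = _timed(timings, "llm_ms", llm.reply, text)
    speech = _timed(timings, "tts_ms", tts.synthesize, answer)
    return speech, timings


if __name__ == "__main__":
    speech, timings = run_turn(b"hello", EchoASR(), UpperLLM(), BytesTTS())
    print(speech)           # b'You said: HELLO'
    print(sorted(timings))  # ['asr_ms', 'llm_ms', 'tts_ms']
```

Swapping a provider means passing a different object with the same method; the per-stage timings are the kind of numbers one would export to a metrics backend such as Prometheus.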

How It Works

Hathora operates through an integrated inference pipeline:

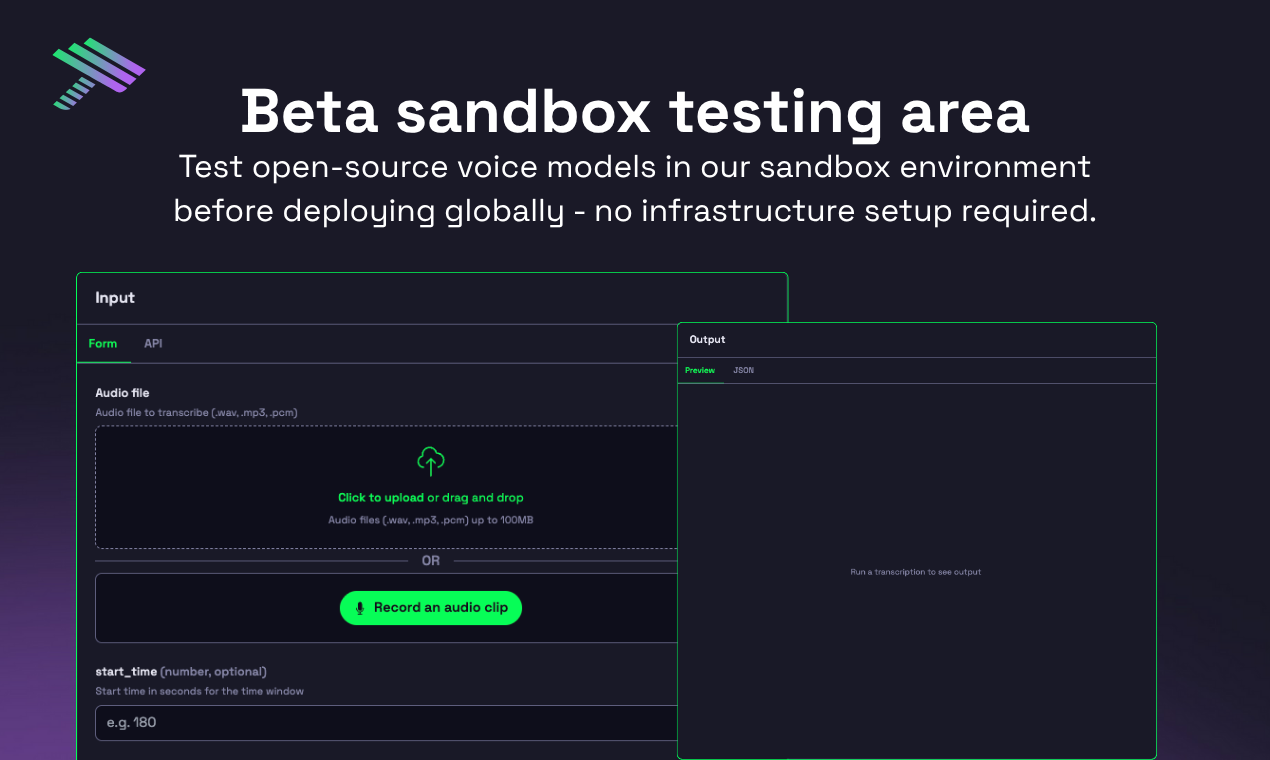

- Select or Upload Your Model: Choose from pre-integrated open-source or proprietary models (supported ASR, TTS, and LLM models from OpenAI, ElevenLabs, and others), or upload a custom model as a Docker container. Hathora handles containerization and optimization.

- Configure Deployment and Scaling: Choose a deployment configuration: shared endpoints for rapid development or dedicated infrastructure for production requirements. Set scaling parameters, VPC requirements, and regional availability preferences; Hathora then configures regional replication and auto-scaling policies.

- Deploy Across 14 Global Regions: Submit your configured model. Hathora orchestrates containerization, deploys to all selected regions, configures load balancing, and sets up health monitoring. User traffic is routed to the geographically closest region for optimal latency.

- Monitor and Optimize in Real Time: Access real-time metrics on inference latency, throughput, resource utilization, and error rates. Hathora surfaces performance bottlenecks so you can iterate quickly on model configuration or scaling parameters.

- Upgrade to Dedicated Infrastructure Seamlessly: As requirements evolve, migrate from shared to dedicated infrastructure without architectural changes. Hathora maintains the same inference behavior across hosting tiers, so results stay consistent as you scale.
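Scaling parameters of the kind set in the configuration step usually reduce to a target load per replica plus minimum and maximum bounds. The sketch below shows a common target-tracking rule, similar in spirit to the Kubernetes Horizontal Pod Autoscaler formula; the function and parameter names (`desired_replicas`, `target_sessions_per_replica`) are hypothetical and are not Hathora settings.

```python
import math


def desired_replicas(
    active_sessions: int,
    target_sessions_per_replica: int,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Target tracking: enough replicas that each one stays near its target load."""
    if target_sessions_per_replica <= 0:
        raise ValueError("target_sessions_per_replica must be positive")
    needed = math.ceil(active_sessions / target_sessions_per_replica)
    # Clamp to the configured bounds; min_replicas keeps warm capacity for new calls.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    print(desired_replicas(0, 8))    # 1  (floor at min_replicas)
    print(desired_replicas(50, 8))   # 7  (ceil(50 / 8))
    print(desired_replicas(500, 8))  # 20 (capped at max_replicas)
```

Keeping `min_replicas` above zero trades some idle cost for avoiding cold starts; even a roughly two-second container startup is noticeable if it lands in the middle of a live call.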

Use Cases

Hathora serves voice AI scenarios requiring low latency and global performance:

- Conversational Voice Assistants: Deploy natural-sounding voice assistants for customer engagement, information retrieval, and task automation across global user bases with consistent low-latency responses.

- Call Center Automation: Hand routine inquiries to AI-powered voice agents for immediate customer support and efficient call routing, without hold times or dependence on agent availability.

- Interactive Voice Response (IVR) Systems: Implement voice-driven customer service systems that understand context, handle complex workflows, and escalate seamlessly to human agents, with conversation flow kept natural by sub-50ms edge latency.

- Low-Latency Voice Analytics: Process voice streams in real time for sentiment analysis, emotion detection, intent classification, and quality assurance, without adding processing delay that makes conversations feel unnatural.

- Real-Time Voice Translation: Enable simultaneous interpretation and translation within voice conversations, with latency low enough to keep conversations natural across languages.

- Voice-First AI Applications: Build applications where voice is the primary interface (voice-driven automation, accessibility-focused tools, conversational AI experiences), all of which depend on consistently low latency to feel natural.

- Edge Voice Processing: Deploy voice models closer to end users while maintaining compliance, processing sensitive voice data within your own VPC rather than routing it through centralized cloud infrastructure.

Pros & Cons

Advantages

- Eliminates DevOps Complexity: Voice engineers focus on model fine-tuning and application logic rather than infrastructure management, deployment orchestration, or multi-region scaling.

- Ultra-Low Latency by Default: 32ms average edge latency comes built in rather than requiring extensive tuning, so network overhead stays well within the real-time budget of voice interactions.

- Global Scale Instantly: Deploy models across 14 regions without manual regional replication or traffic routing configuration; users are routed automatically to the geographically closest deployment.

- Flexible Model Support: Combine open-source and proprietary models from diverse providers (OpenAI, Anthropic, ElevenLabs, and others), choosing the best model for each stage instead of being locked into one platform.

- Transparent Shared-to-Dedicated Scaling: Start on shared infrastructure for rapid experimentation and cost-effective prototyping, then upgrade to dedicated infrastructure without architectural changes as production requirements evolve.

- Enterprise-Grade Compliance: VPC deployment, compliance certifications, and data residency controls help meet strict regulatory requirements and internal security policies.

- Cost Optimization: Hybrid infrastructure combining committed bare-metal capacity with elastic cloud resources enables favorable unit economics by matching infrastructure to demand patterns.
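The unit-economics argument for hybrid capacity can be checked with simple arithmetic: committed capacity is cheaper per hour but is paid for whether it is used or not, while elastic capacity costs more per hour but is billed only when needed. The sketch below uses made-up hourly rates and demand figures purely for illustration; they are not Hathora prices, and `hybrid_cost` and `best_commitment` are hypothetical helpers.

```python
def hybrid_cost(
    hourly_demand: list[int],
    committed: int,
    committed_rate: float,
    elastic_rate: float,
) -> float:
    """Total cost when `committed` GPUs are always paid for and overflow runs on elastic capacity.

    hourly_demand: GPUs needed in each hour.
    committed_rate / elastic_rate: cost per GPU-hour (hypothetical figures).
    """
    total = 0.0
    for demand in hourly_demand:
        overflow = max(0, demand - committed)  # demand the committed pool cannot absorb
        total += committed * committed_rate + overflow * elastic_rate
    return total


def best_commitment(hourly_demand: list[int], committed_rate: float, elastic_rate: float) -> int:
    """Brute-force the committed capacity (0..peak) that minimizes total cost."""
    return min(
        range(max(hourly_demand) + 1),
        key=lambda c: hybrid_cost(hourly_demand, c, committed_rate, elastic_rate),
    )


if __name__ == "__main__":
    demand = [2, 2, 3, 8, 10, 4]  # hypothetical GPUs needed per hour
    for c in (0, 4, 10):
        print(c, hybrid_cost(demand, c, committed_rate=1.0, elastic_rate=2.5))
    # 0 72.5   (all elastic)
    # 4 49.0   (hybrid)
    # 10 60.0  (committed to peak)
    print(best_commitment(demand, 1.0, 2.5))  # 4
```

With these illustrative numbers the cheapest option sits well below peak demand: committing to the peak wastes paid capacity in quiet hours, while committing to nothing pays the elastic premium every hour.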

Disadvantages

- Learning Curve for Advanced Optimization: While basic deployment is straightforward, optimizing performance across regions, configuring scaling policies, and implementing custom models requires understanding infrastructure concepts.

- Inference-Specific Focus: Specializes in inference deployment rather than comprehensive model development, fine-tuning, or training capabilities. Teams requiring full ML development pipelines may need complementary tools.

- Regional Coverage Limitations: 14 global regions provide good worldwide coverage but fall short of major cloud providers' 30+ regions, which may increase edge latency in some geographies.

- Vendor-Specific Abstractions: Although multiple models are supported, Hathora's orchestration and scaling patterns are platform-specific, so teams must learn Hathora concepts rather than relying on familiar lower-level infrastructure tooling.

How Does It Compare?

Hathora occupies a specific category, inference optimization for latency-sensitive applications, which represents an approach to voice AI deployment distinct from that of traditional voice platforms.

Twilio Voice provides global telecommunications infrastructure for voice call routing, conferencing, and programmable call control through REST APIs. Twilio excels at call connectivity and telephony infrastructure but doesn’t handle AI model inference—developers supply AI capabilities externally. Twilio manages “phone company” infrastructure (PSTN connectivity, call routing, recording); Hathora manages AI inference infrastructure. They address different layers of the voice application stack and frequently complement each other rather than compete directly.

Retell AI provides a no-code platform specifically for AI voice agent automation focused on inbound/outbound calling workflows, scheduling, CRM integration, and call analytics. Retell emphasizes ease of use for non-technical users and pre-built business logic for common call center scenarios. Retell is application-layer (call workflows, business logic, CRM integration); Hathora is infrastructure-layer (model inference, latency optimization, global deployment). Retell provides business-ready voice agents; Hathora provides infrastructure for voice applications.

Vapi offers voice AI agent development with an emphasis on ease of deployment, natural conversation flows, real-time audio processing, and built-in telephony. Vapi abstracts infrastructure complexity while letting developers build sophisticated conversational agents. Like Retell, Vapi prioritizes the ease of building voice agents. However, Vapi's primary focus is conversation orchestration and voice agent workflows, while Hathora focuses on low-latency inference across diverse model types.

ElevenLabs specializes in text-to-speech (TTS) voice generation with human-like quality, voice cloning, and multi-voice conversations, focusing on synthesis quality and expressiveness. Voice synthesis is only one component of a voice application, and ElevenLabs is not a general hosting platform for arbitrary ASR or LLM models. ElevenLabs provides TTS; Hathora hosts inference infrastructure for TTS, ASR, and LLM models from any provider.

Together AI provides general-purpose inference infrastructure for open-source models with emphasis on cost-effectiveness and model diversity. Together supports 200+ models across multiple modalities (text, code, images, video). Unlike Hathora’s voice-specific optimization (32ms latency, 2.2s startup), Together prioritizes cost and model breadth. Together serves general AI workloads; Hathora optimizes specifically for voice latency requirements.

Hathora's distinctive positioning rests on voice-specific latency optimization (32ms edge latency built in), automatic global distribution across 14 regions, a zero-DevOps approach to inference deployment, and broad model flexibility (open-source, proprietary, custom). While platforms like Retell and Vapi focus on voice agent workflows, and TTS platforms like ElevenLabs focus on voice synthesis, Hathora specializes in the infrastructure layer that enables low-latency model inference globally.

Final Thoughts

Hathora addresses a genuine infrastructure challenge in voice AI—deploying latency-sensitive models globally while minimizing operational complexity. Its combination of ultra-low latency, automatic global distribution, zero DevOps overhead, and flexible model support creates compelling value for voice application developers.

For teams building voice applications that need consistently low (sub-50ms) edge latency worldwide (voice assistants, call center automation, real-time translation, interactive robotics), Hathora delivers infrastructure designed specifically for voice's latency constraints. The transparent upgrade path from shared to dedicated infrastructure enables rapid prototyping without an upfront enterprise-level commitment.

However, teams focused on conversation logic and orchestration (rather than infrastructure) may find platforms like Retell or Vapi more aligned with their needs. Organizations requiring comprehensive ML development infrastructure (training, fine-tuning, inference) should evaluate platforms like Together AI or specialized ML infrastructure providers. Hathora excels when latency optimization and global inference deployment are primary requirements.