Overview

Hiperyon provides a unified memory layer for multi-LLM workflows, maintaining conversation context and user preferences across ChatGPT, Claude, Gemini, Mistral, Grok, and other platforms. It launched in November 2025 as a Chrome extension, and a mobile app is in development. Hiperyon targets the inefficiency of fragmented LLM usage: context loss, wasted tokens, and repeated re-explanations when switching between platforms. Users no longer have to recreate context by hand each time they switch models, because Hiperyon automatically preserves conversation history, insights, and preferences across platforms. The company claims a 30% performance improvement from continuous context and a 90% token reduction from memory compression. Together, these let teams draw on the strengths of different LLMs without fragmenting their workflow.

Key Features

Hiperyon combines cross-platform memory synchronization with intelligent context optimization:

- Unified Cross-Platform Memory: Automatically syncs conversation history, preferences, and contextual data across ChatGPT, Claude, Gemini, Mistral, Grok, and other browser-based LLMs. Users can switch models while keeping their context and conversation thread intact.

- Intelligent Memory Compression: A proprietary algorithm identifies and retains contextually relevant information while discarding redundant data. Hiperyon reports a 90% reduction in token usage during model switches, because users no longer need to re-explain goals or rebuild context.

- Real-Time Context Injection: When the user switches models, Hiperyon automatically injects the retained context into the new platform, so the conversation continues without interruption. Users can reference previous interactions and build directly on prior analysis.

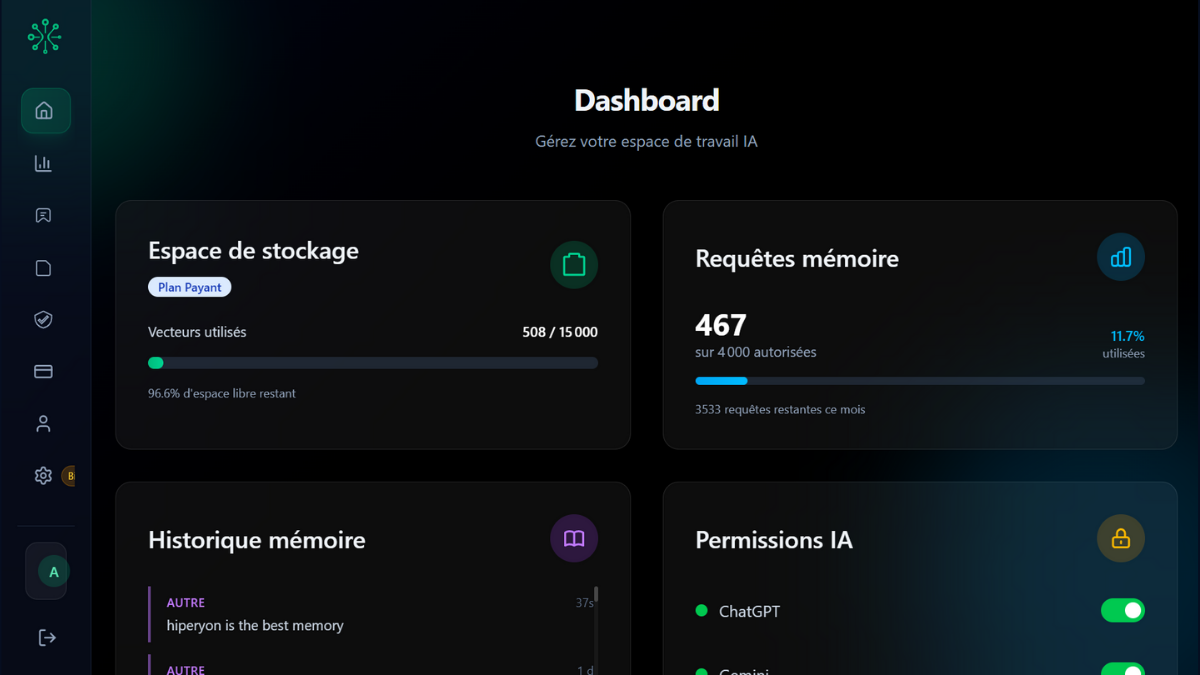

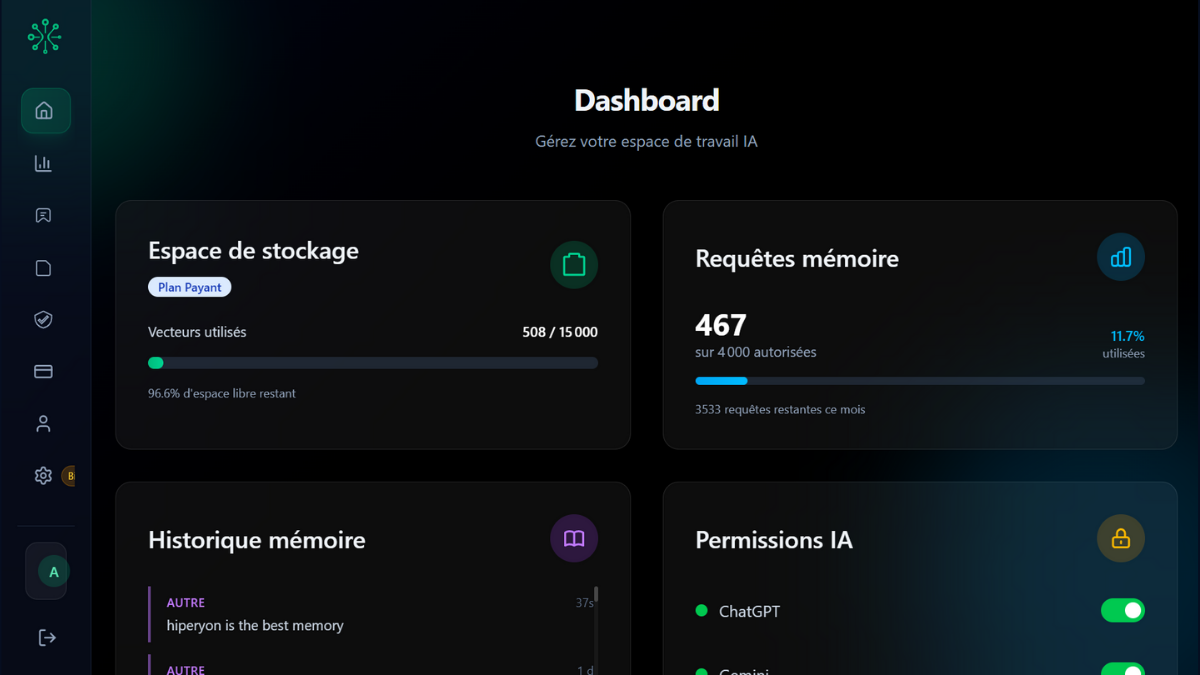

- Memory Dashboard with Manual Controls: A central interface lets users view, edit, export, and delete stored memories. Fine-grained retention controls allow manual override of automatic memory decisions and give users full ownership of their data.

- Encryption and Privacy Protection: Data is encrypted in transit (TLS) and at rest (AES-256). The service is GDPR compliant, with user-controlled access and deletion, and is built on an enterprise-grade security architecture.

- Freemium and Paid Plans: The free tier includes cross-model memory sync and 5GB of storage. Premium tiers start at $9/month and unlock unlimited storage, advanced features, priority support, and custom model integrations.

- Enterprise Integrations: SOC 2-certified infrastructure supports enterprise deployment. Custom API integrations are available for organizations with specialized LLM requirements.

- Model-Agnostic Architecture: Works with any browser-based LLM without modifying the model itself. Supports proprietary (ChatGPT, Gemini) and open-source (Mistral) models in the same workflow.

- Sub-Second Latency: Memory retrieval is optimized for minimal performance impact. The vendor advertises 100% uptime and sub-second memory access.

How It Works

Hiperyon operates as a transparent memory layer across LLM interfaces:

Install Chrome Extension: Add the Hiperyon extension to Chrome. It activates automatically whenever the user opens a supported LLM platform.

Automatic Context Capture: As users interact with any supported LLM (ChatGPT, Claude, Gemini), Hiperyon automatically captures conversation content, user interactions, and contextual data. No explicit save action is required.
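Hiperyon does not document its internal data model. For illustration only, captured messages could be stored as simple records tagged with their source platform and appended to a log as they occur. The `CapturedTurn` class, its fields, and the `capture` helper below are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CapturedTurn:
    """One message captured from an LLM chat page (hypothetical schema)."""
    platform: str   # e.g. "chatgpt", "claude", "gemini"
    role: str       # "user" or "assistant"
    text: str
    captured_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def capture(log: list[CapturedTurn], platform: str, role: str, text: str) -> CapturedTurn:
    """Append a turn to the log automatically, with no explicit save step."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    turn = CapturedTurn(platform=platform, role=role, text=text)
    log.append(turn)
    return turn


log: list[CapturedTurn] = []
capture(log, "chatgpt", "user", "Summarize the analysis we discussed.")
capture(log, "chatgpt", "assistant", "Here is a short summary of the analysis.")
print(len(log), log[0].platform)  # 2 chatgpt
```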

Memory Analysis and Compression: A proprietary algorithm analyzes captured data in real time. It identifies high-value context, user preferences, key insights, and critical information, and it filters out redundant or low-relevance data.
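The real compression algorithm is proprietary. The sketch below only illustrates the general idea and rests on three assumptions. Relevance is scored by crude word overlap with the user's current goal. Exact duplicates count as redundant. Token cost is approximated by a whitespace word count. The `compress` function and its parameters are hypothetical.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased alphanumeric words, used as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def compress(snippets: list[str], goal: str, budget: int) -> list[str]:
    """Keep the snippets most relevant to `goal`, dropping exact duplicates,
    until an approximate token budget (whitespace word count) is reached."""
    goal_words = _words(goal)
    seen: set[str] = set()
    scored = []
    for index, snippet in enumerate(snippets):
        key = snippet.strip().lower()
        if key in seen:          # redundant: exact duplicate of an earlier snippet
            continue
        seen.add(key)
        score = len(_words(snippet) & goal_words)
        if score > 0:            # low relevance: shares no words with the goal
            scored.append((score, index, snippet))

    # Highest score first; ties keep the original order via the index.
    scored.sort(key=lambda item: (-item[0], item[1]))

    kept, used = [], 0
    for _, index, snippet in scored:
        cost = len(snippet.split())
        if used + cost <= budget:
            kept.append((index, snippet))
            used += cost
    # Return the survivors in their original conversational order.
    return [snippet for _, snippet in sorted(kept)]


history = [
    "We are migrating the billing service to Postgres.",
    "Thanks!",
    "We are migrating the billing service to Postgres.",
    "The Postgres schema uses one table per invoice type.",
]
print(compress(history, "Plan the Postgres billing migration", budget=20))
```

A production system would more likely use embeddings and a real tokenizer. Word overlap keeps the sketch dependency-free.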

Smart Memory Storage: Relevant context is stored in an encrypted, indexed memory system. Users keep control of their data, and enterprise-grade encryption protects the stored information.
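Hiperyon's storage internals are not public. As a rough illustration of what an indexed memory system can mean, the sketch below is an in-memory inverted index that maps each word to the IDs of the memories containing it. Encryption and persistence are deliberately left out. The `MemoryIndex` class is hypothetical.

```python
import re
from collections import defaultdict


class MemoryIndex:
    """Minimal inverted index: each word maps to the IDs of memories
    containing it (hypothetical; no persistence or encryption)."""

    def __init__(self) -> None:
        self._memories: dict[int, str] = {}
        self._postings: defaultdict[str, set[int]] = defaultdict(set)
        self._next_id = 0

    @staticmethod
    def _tokens(text: str) -> set[str]:
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def add(self, text: str) -> int:
        """Store a memory and index every word it contains."""
        memory_id = self._next_id
        self._next_id += 1
        self._memories[memory_id] = text
        for token in self._tokens(text):
            self._postings[token].add(memory_id)
        return memory_id

    def search(self, query: str, limit: int = 3) -> list[str]:
        """Rank memories by how many distinct query words they contain."""
        hits: defaultdict[int, int] = defaultdict(int)
        for token in self._tokens(query):
            for memory_id in self._postings.get(token, ()):
                hits[memory_id] += 1
        ranked = sorted(hits, key=lambda m: (-hits[m], m))
        return [self._memories[m] for m in ranked[:limit]]


index = MemoryIndex()
index.add("User prefers concise answers with code samples.")
index.add("Project uses TypeScript and React.")
print(index.search("which framework does the project use"))
```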

Seamless Model Switching: When the user switches LLM platforms mid-conversation, Hiperyon detects the platform change and automatically retrieves relevant context from memory.
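The source does not say how the extension detects a platform change. One simple possibility is to recognize each platform by the hostname of the open page and flag a switch when two consecutive pages belong to different platforms. The hostname mapping below lists the public web addresses of these services as an assumption, not Hiperyon's actual configuration. The helper names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed hostname-to-platform mapping (illustrative, not exhaustive).
PLATFORMS = {
    "chatgpt.com": "chatgpt",
    "chat.openai.com": "chatgpt",
    "claude.ai": "claude",
    "gemini.google.com": "gemini",
    "chat.mistral.ai": "mistral",
    "grok.com": "grok",
}


def detect_platform(url: str) -> Optional[str]:
    """Return the platform name for a chat URL, or None if unsupported."""
    host = (urlparse(url).hostname or "").lower()
    return PLATFORMS.get(host)


def is_switch(previous_url: str, current_url: str) -> bool:
    """True when both pages are supported platforms and they differ."""
    before, after = detect_platform(previous_url), detect_platform(current_url)
    return before is not None and after is not None and before != after


print(is_switch("https://chatgpt.com/c/abc", "https://claude.ai/new"))  # True
```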

Context Injection: The retained context is injected directly into the new LLM interface, so the user can continue the conversation as if they had never switched platforms. The new model has the relevant context from the first message onward.
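Hiperyon does not publish its injection format. One plausible approach, shown here only as a sketch, is to prepend the retained memories to the user's first message on the new platform. The `build_injected_prompt` name and the wording of the preamble are assumptions.

```python
def build_injected_prompt(memories: list[str], user_message: str) -> str:
    """Prefix a new-platform message with retained context (hypothetical format)."""
    if not memories:
        return user_message
    context = "\n".join(f"- {memory}" for memory in memories)
    return (
        "Context carried over from a previous conversation:\n"
        f"{context}\n\n"
        f"{user_message}"
    )


prompt = build_injected_prompt(
    ["User prefers concise answers.", "Project uses TypeScript and React."],
    "Now write the unit tests.",
)
print(prompt)
```

Keeping the user's own message last means the new model reads the carried-over context first and the immediate request last.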

Manual Control and Customization: In the memory dashboard, users can review stored information, edit memories, adjust retention rules, or delete specific data.
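Retention rules of the kind the dashboard exposes can be modeled as simple filters over stored memories. The sketch below drops memories older than a maximum age unless the user has pinned them, then exports the survivors as JSON. The `apply_retention` and `export_json` helpers, the record layout, and the pinning rule are all hypothetical.

```python
import json
from datetime import datetime, timedelta, timezone


def apply_retention(memories: list[dict], max_age: timedelta, now: datetime) -> list[dict]:
    """Keep pinned memories and any memory newer than `max_age` (hypothetical rule)."""
    cutoff = now - max_age
    return [m for m in memories if m["pinned"] or m["created"] >= cutoff]


def export_json(memories: list[dict]) -> str:
    """Serialize memories for user export, with timestamps as ISO 8601 strings."""
    return json.dumps(
        [{**m, "created": m["created"].isoformat()} for m in memories], indent=2
    )


now = datetime(2025, 12, 1, tzinfo=timezone.utc)
memories = [
    {"text": "Prefers metric units.", "pinned": True, "created": now - timedelta(days=400)},
    {"text": "Draft outline v1.", "pinned": False, "created": now - timedelta(days=90)},
    {"text": "Draft outline v3.", "pinned": False, "created": now - timedelta(days=2)},
]
kept = apply_retention(memories, max_age=timedelta(days=30), now=now)
print([m["text"] for m in kept])  # ['Prefers metric units.', 'Draft outline v3.']
print(export_json(kept))
```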

Analytics and Optimization: The dashboard shows memory usage patterns, token savings, and cross-model usage statistics, supporting data-driven workflow optimization.
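The vendor does not document how its dashboard statistics are computed. As an illustration, per-platform switch counts and tokens saved could be aggregated from a log of model switches. The record layout is an assumption: each record holds the target platform, the tokens a full re-explanation would have cost, and the tokens actually injected. The figures are hypothetical.

```python
from collections import Counter


def summarize(switches: list[tuple[str, int, int]]) -> dict:
    """Aggregate (target_platform, baseline_tokens, injected_tokens) records."""
    usage = Counter(platform for platform, _, _ in switches)
    saved = sum(baseline - injected for _, baseline, injected in switches)
    baseline_total = sum(baseline for _, baseline, _ in switches)
    percent = round(100 * saved / baseline_total, 1) if baseline_total else 0.0
    return {
        "switches_by_platform": dict(usage),
        "tokens_saved": saved,
        "percent_saved": percent,
    }


log = [("claude", 1500, 150), ("gemini", 2000, 250), ("claude", 1000, 100)]
print(summarize(log))
# {'switches_by_platform': {'claude': 2, 'gemini': 1}, 'tokens_saved': 4000, 'percent_saved': 88.9}
```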

Use Cases

Hiperyon serves diverse multi-LLM workflow scenarios:

- Research Across Models: Researchers consult different LLMs for diverse perspectives on a single research question. Hiperyon retains the research context, so they can switch between models without losing continuity or repeating explanations.

- Development and Debugging: Developers switch between GPT-4 for strategic thinking, Claude for code analysis, and Gemini for documentation. Hiperyon preserves project context across these switches, keeping development workflows efficient.

- Content Creation with Model Specialization: Writers use different models for different stages of content production: outline generation, first draft, editing, and publishing optimization. Hiperyon maintains the content context through the entire workflow.

- Data Analysis and Insights: Analysts use multiple LLMs for different analytical perspectives. Hiperyon retains context so that each model can build on prior analysis without the data being explained again.

- Enterprise Teams and Compliance: Organizations with sensitive workflows need complete audit trails and data control. Hiperyon’s GDPR compliance, encryption, and audit capabilities support enterprise deployment under compliance requirements.

- Prompt Engineering and Optimization: Engineers experiment with different models to test prompt effectiveness. Hiperyon retains successful prompts and their context, enabling systematic optimization across models.

- Customer Support and Assistance: Support teams use different LLMs for different issue types. Hiperyon retains the customer context across tool switches, keeping the support experience consistent.

Pros & Cons

Advantages

- Eliminates Context Loss: Instead of requiring manual context recreation, Hiperyon automatically preserves context across model switches, so work continues without frustration.

- Significant Token Savings: The claimed 90% token reduction from memory compression can substantially lower costs for organizations with heavy LLM usage.

- True Cross-Platform Unification: Unlike single-platform tools or fragmented plugins, Hiperyon unifies proprietary (ChatGPT, Gemini) and open-source (Mistral) models through a single interface.

- Complete Data Control: Users retain full ownership of stored data, with fine-grained manual controls, export, and deletion. The dashboard gives complete visibility into what is stored.

- Enterprise-Ready Security: SOC 2 certification, encryption in transit and at rest, GDPR compliance, and audit trails provide a level of security assurance rarely found in consumer tools.

- 30% Performance Improvement: According to Hiperyon, continuous context lets LLMs give more relevant and accurate responses immediately, without rebuilding context.

- Minimal Setup Friction: Setup is a single Chrome extension install with no configuration required. The extension activates automatically on supported platforms.

Disadvantages

- Browser Extension Limitation: Currently available only for Chrome, excluding Firefox, Safari, and other browsers. A mobile app is in development but not yet available.

- Dependency on Platform Support: Functionality depends on LLM providers keeping their interfaces compatible. Platform changes or deprecated interfaces may break features until the extension is updated.

- Emerging Platform Stage: The product launched in November 2025, so its feature maturity and edge-case handling are still evolving. Enterprise users should validate it against their specific workflow requirements.

- Free Tier Storage Constraints: The 5GB of free tier storage may be insufficient for heavy users or organizations with extensive interaction history. Unlimited storage requires a paid plan.

- Learning Curve for Advanced Features: Basic functionality is intuitive, but advanced features such as custom retention rules and API integrations require some understanding of configuration.

How Does It Compare?

Hiperyon occupies a distinct position in the multi-LLM management landscape. It emphasizes unified memory across consumer interfaces rather than developer infrastructure or workflow automation.

AI Context Flow launched in November 2025 and is a direct competitor. It is a Chrome extension that provides portable “AI memory” across ChatGPT, Claude, Gemini, and Grok. Its core value proposition matches Hiperyon's: context retention across model switches. However, AI Context Flow emphasizes prompt optimization alongside memory, while Hiperyon emphasizes complete context preservation and memory compression. Both offer similar freemium models with paid tiers. The two are head-to-head competitors that differ mainly in feature emphasis.

Mem0 provides developer-focused memory infrastructure through APIs and SDKs rather than a consumer interface. Developers use it to build memory into LLM applications through the Model Context Protocol (MCP) and standard integrations. Mem0 serves developers building custom AI systems, while Hiperyon serves end users of existing platforms. In short, Mem0 is infrastructure and Hiperyon is a consumer interface. The two can be complementary: an organization might use Mem0 in its own applications and Hiperyon for direct user workflows.

Prompts.ai provides multi-LLM management through a unified dashboard that consolidates access to 35+ models, with centralized cost tracking, automated routing, and governance controls. It emphasizes cost management and model routing rather than memory or context. Prompts.ai serves organizations managing costs across many models, whereas Hiperyon serves individual users managing context across platforms. The difference in focus is cost versus context.

LangChain, LangGraph, and AutoGen are developer frameworks for building multi-agent LLM workflows, not consumer interfaces. They serve developers building custom applications, while Hiperyon serves end users of existing platforms. Their audiences and use cases differ.

Traditional manual approaches require users to copy context between platforms by hand, lose conversation history, and recreate explanations. Hiperyon automates this entire process.

Hiperyon’s distinctive positioning rests on five points:
- unified memory across consumer LLM platforms (not developer infrastructure)
- automatic context preservation (no manual setup)
- cross-platform unification (proprietary and open-source models)
- intelligent memory compression (not raw context storage)
- complete data control (user-owned, encrypted data)

AI Context Flow offers similar capabilities with a prompt-optimization focus, Mem0 provides developer infrastructure, and Prompts.ai emphasizes cost management. Hiperyon, by contrast, optimizes specifically for a seamless multi-platform user experience.

Final Thoughts

Hiperyon addresses a genuine productivity problem: the time wasted and context lost through fragmented LLM usage. It combines unified memory, automatic context injection, memory compression, and enterprise-grade security. The result turns fragmented workflows into a cohesive experience and lets users draw on multiple LLMs without friction.

For researchers, developers, analysts, and teams managing multiple LLMs, Hiperyon delivers practical efficiency improvements through automatic context preservation that manual approaches and fragmented solutions cannot match.

However, some users should evaluate carefully whether the platform fits. These include users who work on a single platform (only ChatGPT or only Claude), those who need specialized developer frameworks, and organizations with demanding infrastructure requirements. Hiperyon is optimized for a seamless multi-platform user experience, not for developer infrastructure or comprehensive LLM management.