Overview

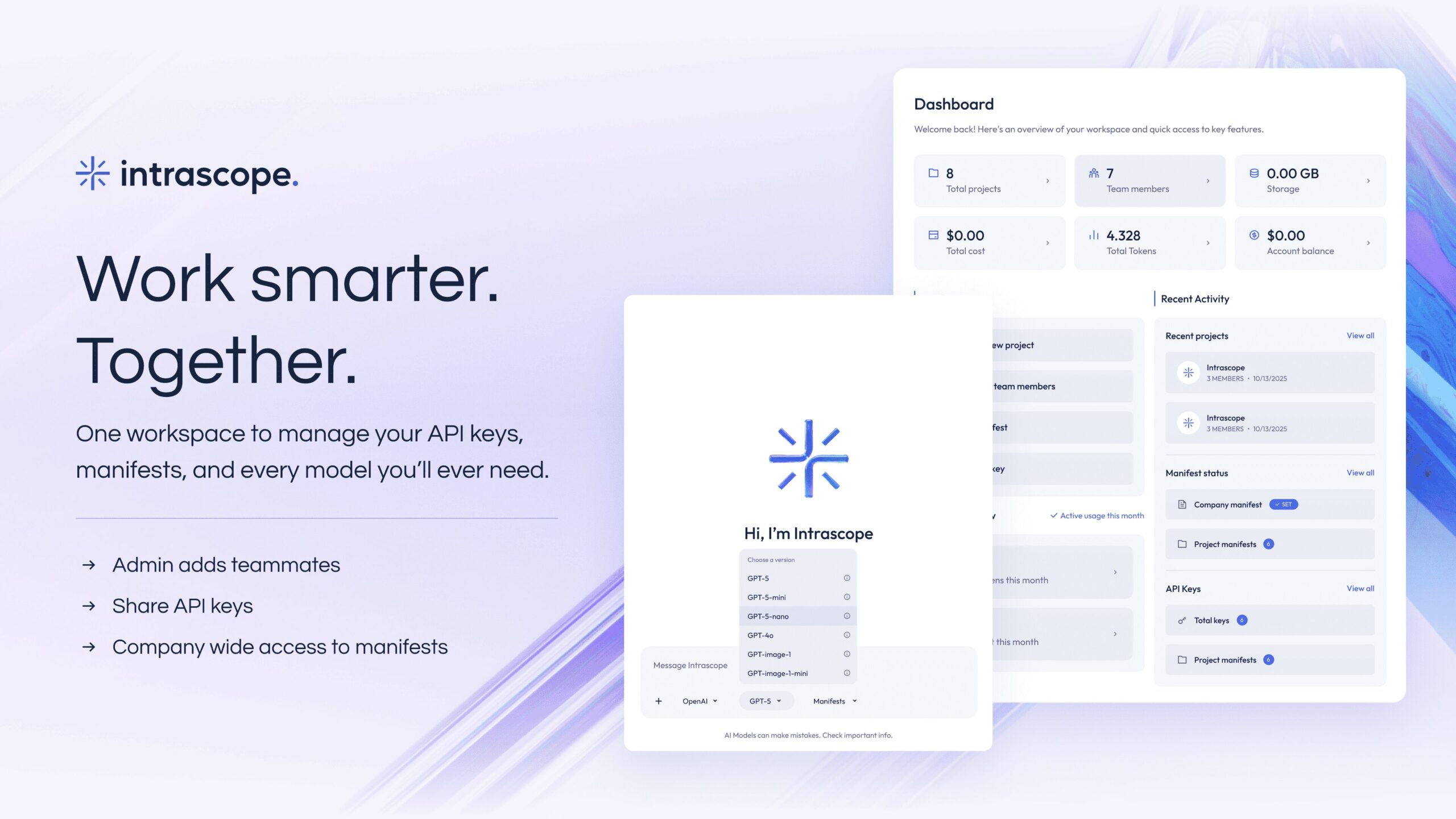

Developed as a “Unified AI Command Center,” Intrascope bridges the gap between raw API power and user-friendly collaboration. It empowers administrators to connect their own API keys once, allowing the entire team to access top-tier models without ever exposing sensitive credentials to individual users. The platform introduces a structured project environment using Manifests—pre-defined sets of instructions that ensure consistent AI behavior and brand voice across different teams. With robust usage monitoring and privacy-first data handling, Intrascope transforms AI from an individual experiment into a predictable, enterprise-ready business asset.

Key Features

- Unified API Key Management: Connects accounts from OpenAI, Anthropic, Gemini, xAI, and DeepSeek to provide a single point of access for the whole team.

- Contextual Manifests: Enables the creation of reusable project blueprints (Manifests) that define specific roles, tones, and formatting rules for AI responses.

- Granular Token Monitoring: Gives administrators full visibility into AI consumption, allowing them to track usage and set specific spending caps per user or project.

- Shared Project Environments: Organizes chats into structured projects where team members can work within a shared context while maintaining personal login sessions.

- Privacy-First Data Isolation: Ensures that no company data or chat history is used for training external models or profiling users.

- White-Label Customization: Offers agencies the ability to rebrand the platform with their own logos and domains for a client-facing experience.

- Administrator Control Hub: Centralizes user onboarding, manifest assignments, and database limit configurations in one intuitive dashboard.

- BYOK (Bring Your Own Key) Support: Lets teams pay only for their actual AI usage through their own provider accounts, potentially reducing costs by up to 85%.

How It Works

Setup begins with an administrator connecting their preferred AI provider keys and creating “Manifests” that describe the company’s specific projects or tasks. Team members are then invited to the platform, where they can immediately start chatting with models like GPT-4 or Claude 3.5 Sonnet using the pre-set context. The system automatically routes these requests through the administrator’s keys and tracks every token consumed. If a user approaches their assigned budget limit, the system can pause access or alert the administrator. This keeps the best models available to the team without the risk of an unexpected bill at the end of the month.
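The flow described above can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not Intrascope's actual implementation: the names `Manifest`, `UserBudget`, and `route_request`, the 80% alert threshold, and the `call_model` callback (a stand-in for a provider call made with the administrator's key) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Manifest:
    """Hypothetical stand-in for a Manifest: reusable instructions for a project."""
    name: str
    instructions: str


@dataclass
class UserBudget:
    """Hypothetical per-user token budget set by the administrator."""
    limit_tokens: int
    used_tokens: int = 0
    alert_ratio: float = 0.8  # assumed threshold for alerting the administrator

    def status(self) -> str:
        if self.used_tokens >= self.limit_tokens:
            return "paused"
        if self.used_tokens >= self.alert_ratio * self.limit_tokens:
            return "alert"
        return "ok"


def route_request(manifest, budget, user_message, call_model):
    """Apply the manifest as pre-set context, call the model, and record tokens.

    `call_model` is supplied by the caller and is assumed to hold the
    administrator's API key; it returns (reply_text, tokens_used).
    """
    if budget.status() == "paused":
        raise PermissionError("budget exhausted; access paused")
    messages = [
        {"role": "system", "content": manifest.instructions},
        {"role": "user", "content": user_message},
    ]
    reply, tokens_used = call_model(messages)
    budget.used_tokens += tokens_used
    return reply, budget.status()


def fake_model(messages):
    """Placeholder for a real provider call; always reports 450 tokens used."""
    return "draft reply", 450


demo_manifest = Manifest("Brand voice", "Write in a friendly, concise tone.")
demo_budget = UserBudget(limit_tokens=1000)
print(route_request(demo_manifest, demo_budget, "Draft a tagline", fake_model))
```

The team member never sees a key in this sketch: the credential lives only inside whatever `call_model` wraps, while the budget check runs before each request and the token count is updated after it.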

Use Cases

- Marketing Agencies: Deploying branded AI workspaces for clients where specialized Manifests ensure all generated content follows specific brand guidelines.

- Support Teams: Equipping agents with a “Knowledge Manifest” that contains updated product documentation for consistent customer responses.

- Research Organizations: Centralizing deep research across multiple models (e.g., using Gemini for large context and Claude for reasoning) while keeping billing unified.

- Enterprise Governance: Standardizing AI usage across departments to ensure that no corporate secrets are used to train public models.

Pros and Cons

- Pros: Drastically reduces the risk of API key leaks. Provides a professional “Enterprise” feel for teams without the high per-seat cost of official commercial plans. “Manifests” significantly improve output consistency.

- Cons: Requires initial administrator setup of API keys and Manifests. Team members depend on the platform’s availability to reach their integrated AI tools.

Pricing

- Free Trial: Includes basic access to the unified interface for a limited testing period with core features enabled.

- Self API Monthly: $39/month. Targeted at small teams needing ongoing access to centralized management and usage tracking.

- Self API Lifetime: $299 one-time payment. A popular founding-member plan that includes access for 25 users plus administrator controls.

- Enterprise Solutions: Custom pricing. Offers enhanced security, SLA guarantees, and dedicated support for large-scale deployments.
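For readers weighing the monthly plan against the lifetime plan, a quick break-even calculation using the two prices listed above may help. It is only a rough sketch: it assumes both plans cover the same team size and features, which the pricing list does not state, and it leaves out provider API charges, which are billed separately under BYOK. The function name `break_even_month` is illustrative.

```python
import math

MONTHLY_PRICE = 39    # Self API Monthly, USD per month (from the pricing list)
LIFETIME_PRICE = 299  # Self API Lifetime, USD one-time (from the pricing list)


def break_even_month(monthly: float, lifetime: float) -> int:
    """First month in which cumulative monthly payments reach the lifetime price."""
    return math.ceil(lifetime / monthly)


months = break_even_month(MONTHLY_PRICE, LIFETIME_PRICE)
print(months, months * MONTHLY_PRICE)  # 8 312
```

Under those assumptions, the lifetime plan costs less than the monthly plan from the eighth month onward ($312 paid monthly versus $299 once).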

How Does It Compare?

- TypingMind (Team): The closest direct competitor. While TypingMind is highly polished and feature-rich, Intrascope focuses more on “Structured Projects” and “Manifest-centric” workflows that simplify the experience for non-technical team members.

- Team-GPT: Focuses heavily on collaborative “Shared Chats.” Intrascope offers more rigorous administrator controls over specific model permissions and budget caps per user.

- LibreChat: A powerful open-source alternative for self-hosting enthusiasts. Intrascope provides a “No-Code” cloud experience that is much faster to deploy and manage for average business users.

- OmniGPT / Poe for Teams: These are subscription-based “Aggregator” services. Intrascope is the better fit for teams that prefer to “Bring Their Own Key” to save on costs and maintain full data ownership.

- MindStudio: Focuses on building custom AI “Apps.” Intrascope is more suited for teams who want a “Unified Chat” experience that feels like a more controlled and professional version of ChatGPT.

Final Thoughts

Intrascope.app is a vital tool for the “Agentic Team” of 2026. As businesses realize that simple chat interfaces are not enough for professional coordination, platforms like Intrascope provide the necessary layer of governance and shared intelligence. By turning the “API key” into a hidden infrastructure component and the “Prompt” into a reusable “Manifest,” it allows teams to scale their AI operations safely and cost-effectively. For agencies and SMBs looking for a professional-grade AI workspace without the “Enterprise” price tag, Intrascope offers a compelling, privacy-first alternative.