Overview

In the rapidly evolving world of conversational AI, making sure your chatbot or AI agent behaves reliably is essential. Enter Janus, a simulation testing platform designed to evaluate conversational AI agents rigorously before they ever interact with real users. By simulating thousands of realistic user interactions, Janus helps you uncover failures such as hallucinations, rule violations, and broken tool calls, and gives you guidance on fixing them. Let’s dive into what makes Janus a valuable tool for AI developers and businesses alike.

Key Features

Janus boasts a robust set of features designed to thoroughly test and refine your conversational AI:

- Simulation of thousands of realistic user interactions: Janus creates diverse and realistic conversation scenarios, exposing your AI agent to a wide range of potential user inputs and behaviors.

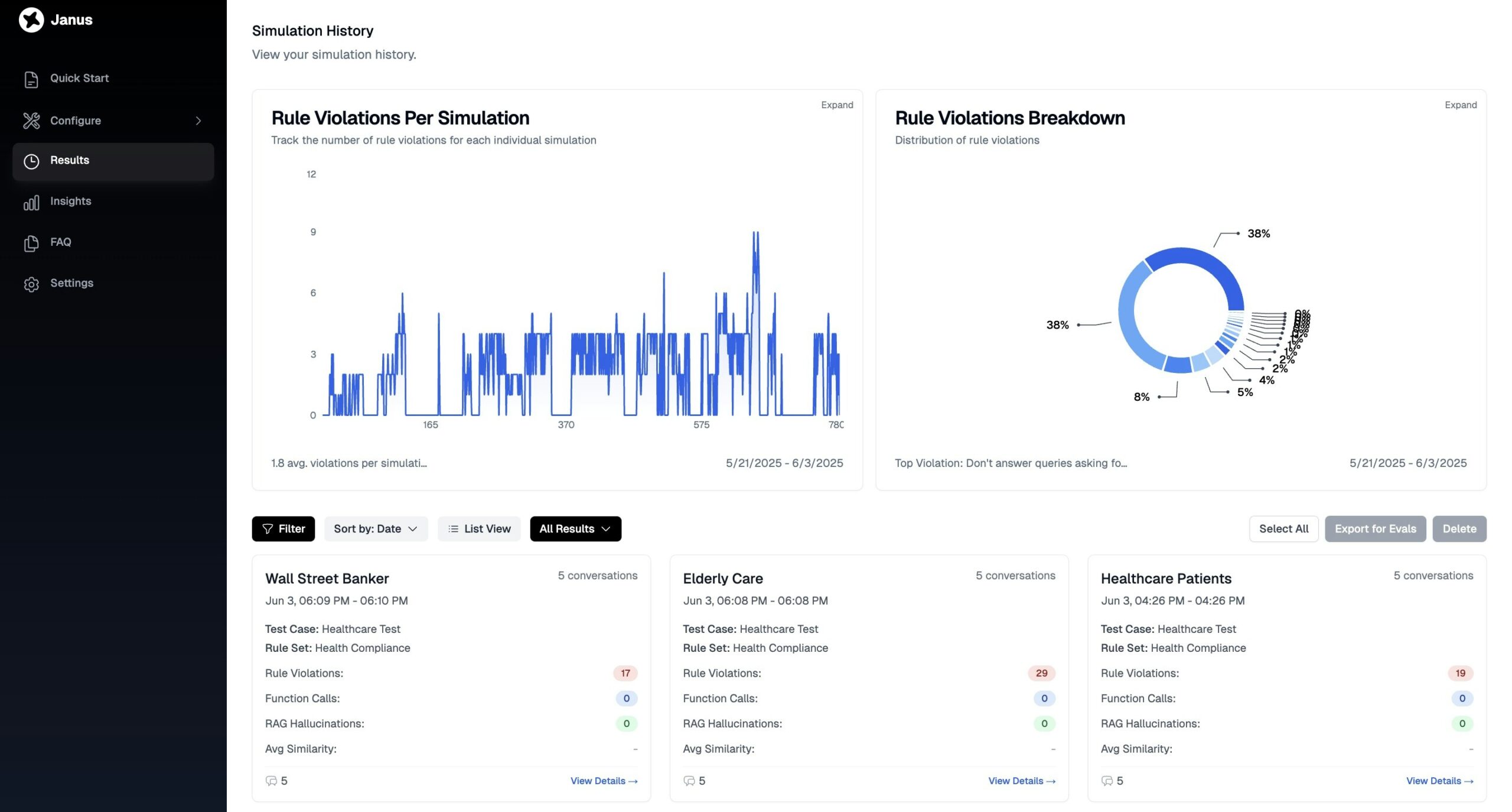

- Detection of hallucinations, rule violations, and tool-call failures: The platform actively searches for common AI errors, including instances where the AI fabricates information (hallucinations), breaks predefined rules, or fails to properly execute external tool calls.

- Customizable evaluation scenarios: Tailor the testing environment to match your specific use cases and target audience, ensuring relevant and accurate evaluation.

- Actionable insights for model improvement: Janus doesn’t just identify problems; it provides clear, actionable recommendations to help you improve your AI agent’s performance and reliability.

How It Works

Janus tests an agent in four stages. First, it generates synthetic user personas, each with distinct characteristics and conversational styles. Second, these personas hold simulated conversations with your AI agent that mimic real-world interactions. Third, during each simulation Janus monitors the agent’s responses for hallucinations, rule violations, and tool-call failures. Finally, it analyzes the results and produces detailed reports with actionable insights, so developers can address weaknesses and improve the agent’s performance.
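To make that persona, simulate, monitor, and report loop concrete, here is a minimal Python sketch of a generic simulation-testing harness. It is not Janus’s API or implementation, which this review doesn’t describe; every name in it (`Persona`, `AgentTurn`, `simulate`, `summarize`, `toy_agent`, the banned-phrase rule, and the known-facts set) is hypothetical. The personas are scripted rather than generated, and the hallucination check is a crude exact match against a set of known facts, so treat it as an illustration of the idea rather than a realistic detector.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical harness for illustration only; not Janus's actual API.


@dataclass
class Persona:
    """A synthetic user: a name plus scripted user turns."""
    name: str
    messages: list[str]


@dataclass
class AgentTurn:
    """One agent reply, with an optional tool call and any factual claims made."""
    text: str
    tool: Optional[str] = None
    claims: list[str] = field(default_factory=list)


@dataclass
class Finding:
    persona: str
    turn: int
    kind: str  # "rule_violation", "tool_call_failure", or "hallucination"
    detail: str


def simulate(
    agent: Callable[[str], AgentTurn],
    personas: list[Persona],
    banned_phrases: list[str],
    tools: dict[str, Callable[[str], str]],
    known_facts: set[str],
) -> list[Finding]:
    """Run every persona's turns through the agent and collect findings."""
    findings: list[Finding] = []
    for persona in personas:
        for i, user_msg in enumerate(persona.messages):
            reply = agent(user_msg)

            # Rule check: the reply must not contain any banned phrase.
            lowered = reply.text.lower()
            for phrase in banned_phrases:
                if phrase.lower() in lowered:
                    findings.append(Finding(persona.name, i, "rule_violation", phrase))

            # Tool-call check: the requested tool must exist and must not raise.
            if reply.tool is not None:
                if reply.tool not in tools:
                    findings.append(Finding(persona.name, i, "tool_call_failure",
                                            f"unknown tool {reply.tool!r}"))
                else:
                    try:
                        tools[reply.tool](user_msg)
                    except Exception as exc:
                        findings.append(Finding(persona.name, i, "tool_call_failure",
                                                f"{reply.tool} raised {exc!r}"))

            # Crude hallucination check: flag any claim not in the known facts.
            for claim in reply.claims:
                if claim not in known_facts:
                    findings.append(Finding(persona.name, i, "hallucination", claim))
    return findings


def summarize(findings: list[Finding]) -> dict[str, int]:
    """Count findings by kind, as a simple stand-in for a report."""
    return dict(Counter(f.kind for f in findings))


def toy_agent(msg: str) -> AgentTurn:
    """A stand-in agent with deliberate flaws that exercise each check."""
    if "refund" in msg:
        return AgentTurn("I guarantee a refund.", tool="issue_refund",
                         claims=["refunds take 3 days"])
    if "weather" in msg:
        return AgentTurn("Let me check.", tool="get_weather")
    return AgentTurn("How can I help?")


if __name__ == "__main__":
    personas = [
        Persona("impatient", ["I want a refund now", "what's the weather?"]),
        Persona("polite", ["hello there"]),
    ]
    findings = simulate(
        toy_agent,
        personas,
        banned_phrases=["guarantee"],
        tools={"issue_refund": lambda msg: "ok"},
        known_facts={"refunds take 5 days"},
    )
    for f in findings:
        print(f)
    print(summarize(findings))
```

With these toy inputs, the refund turn is flagged for a rule violation ("guarantee") and a hallucination ("refunds take 3 days"), and the weather turn is flagged as a tool-call failure because `get_weather` is not registered; the polite persona produces no findings.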

Use Cases

Janus can be applied across a variety of industries and applications:

- Pre-deployment testing of AI chatbots: Ensure your chatbot is ready for prime time by thoroughly testing its performance and identifying potential issues before launch.

- Continuous evaluation of AI agent performance: Regularly monitor your AI agent’s performance to identify regressions or areas for improvement, ensuring it continues to meet your standards.

- Compliance and safety assessments for AI systems: Verify that your AI system adheres to relevant regulations and safety guidelines, mitigating potential risks and ensuring responsible AI development.

Pros & Cons

Like any tool, Janus has its strengths and weaknesses. Let’s take a look:

Advantages

- Comprehensive simulation testing: Janus offers a thorough and realistic testing environment, exposing your AI agent to a wide range of potential scenarios.

- Customizable evaluation scenarios: Tailor the testing process to your specific needs and target audience, ensuring relevant and accurate results.

- Actionable insights for improvement: Janus provides clear and concise recommendations, enabling you to quickly address weaknesses and optimize your AI’s performance.

Disadvantages

- May require integration effort: Integrating Janus into your existing development workflow may require some initial setup and configuration.

- Limited to conversational AI agents: Janus is specifically designed for testing conversational AI agents and may not be suitable for other types of AI systems.

How Does It Compare?

While several tools offer AI monitoring and evaluation capabilities, Janus distinguishes itself through its focus on pre-deployment simulation testing. For example, Langfuse primarily focuses on post-deployment monitoring, tracking AI performance in real-world scenarios. Janus, on the other hand, emphasizes proactive testing and optimization before deployment, helping you catch and fix issues before they impact users.

Final Thoughts

Janus offers a capable solution for rigorously testing and improving conversational AI agents. Its simulation capabilities, customizable evaluation scenarios, and actionable insights make it a valuable asset for organizations that want to deploy reliable, high-performing AI systems. Integration does require some initial effort, but for teams shipping conversational agents, catching issues before deployment is likely to repay that cost through a smoother, more successful rollout.