Kimi K2.5

Type: Open-Source Multimodal MoE Model

Kimi K2.5 represents Moonshot AI’s most advanced flagship model released to date, establishing a new open-source standard for agentic capabilities, complex coding, and visual reasoning. Building upon the K2 architecture, this model integrates a native multimodal design powered by the “MoonViT” vision encoder, allowing it to process video and image inputs with the same fluency as text. It uniquely combines a deliberate “Thinking” mode for deep logic with a responsive chat mode for real-time interaction.

Key Features

- Native Multimodal Architecture: Seamlessly processes text, images, and video streams to execute complex visual tasks (e.g., replicating website workflows from video demos).

- Dual Reasoning Modes: Features a “Thinking” mode that employs chain-of-thought reasoning for hard logic puzzles and math, alongside a standard mode for rapid execution.

- Agentic Intelligence: Specifically optimized to power autonomous agents, capable of planning and executing multi-step workflows across different applications.

- Efficiency at Scale: Uses a Mixture-of-Experts (MoE) structure that activates only a subset of its parameters for each token, delivering trillion-parameter-scale capacity while keeping inference costs low enough for affordable API usage or, given sufficient hardware, local deployment.
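To make the Mixture-of-Experts idea concrete, here is a minimal toy sketch of top-k expert routing in plain Python. It is not Kimi K2.5's actual router: the expert count, the value of `TOP_K`, the random router scores, and the trivial "experts" (simple scaling functions) are all assumptions chosen for illustration. The point is only that each input runs through a few selected experts rather than all of them.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and blend their outputs."""
    selected = route_top_k(router_logits, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]


# Hypothetical toy setup: 8 experts, 2 active per input.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
router_logits = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]

output, used = moe_forward(1.0, experts, router_logits, TOP_K)
print(f"experts used: {used} ({TOP_K} of {NUM_EXPERTS} active)")
```

In a real model the router scores come from a learned layer and each expert is a feed-forward network, but the cost argument is the same: compute per token scales with the experts actually run, not with the total parameter count.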

Use Cases

- Advanced Coding Automation: Analyzing entire codebases visually and structurally to refactor or generate complex applications.

- Visual Data Agent: Autonomous navigation of GUIs and processing of visual documentation.

- Academic & Logical Research: Solving competition-level mathematics and synthesizing large-scale literature reviews using the extended context window.

Pros & Cons

- Pros: Exceptional versatility across vision and code; open-source accessibility allows for private deployment; dual-mode reasoning offers flexibility between speed and depth.

- Cons: The “Thinking” mode can be computationally intensive and slower for trivial queries; requires significant VRAM for local execution of the full model.
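For a rough sense of what "significant VRAM" means, the back-of-the-envelope sketch below estimates the memory needed just to hold model weights at different precisions. The parameter count of one trillion is an assumption taken from the "trillion-parameter" description above, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements would be higher.

```python
def weight_memory_gb(num_parameters, bits_per_parameter):
    """Weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e9


# Assumed parameter count, for illustration only.
ASSUMED_PARAMETERS = 1e12

for bits in (16, 8, 4):
    gb = weight_memory_gb(ASSUMED_PARAMETERS, bits)
    print(f"{bits}-bit weights: about {gb:,.0f} GB")
```

Note that MoE sparsity reduces compute per token, not the memory needed to keep every expert's weights loaded, which is why local execution of the full model remains demanding.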

Pricing

Free / Open Source

The model weights are available for researchers and developers. API access is expected to follow Moonshot’s ultra-low pricing strategy (historically ~$0.15 per 1M tokens), significantly undercutting Western proprietary models.
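As a quick illustration of what that price point would mean in practice, the sketch below estimates a monthly bill from a token volume. The rate is the approximate historical figure quoted above, not a confirmed Kimi K2.5 price, and the workload size is a made-up example; real pricing typically differs between input and output tokens.

```python
def estimate_cost_usd(tokens, usd_per_million_tokens):
    """Flat-rate cost estimate: tokens billed at a single per-million price."""
    return tokens / 1_000_000 * usd_per_million_tokens


# Assumption: the ~$0.15 per 1M tokens figure cited in the text.
ASSUMED_RATE_USD = 0.15
# Hypothetical workload: 50 million tokens per month.
monthly_tokens = 50_000_000

cost = estimate_cost_usd(monthly_tokens, ASSUMED_RATE_USD)
print(f"Estimated monthly cost: ${cost:.2f}")
```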

How Does It Compare?

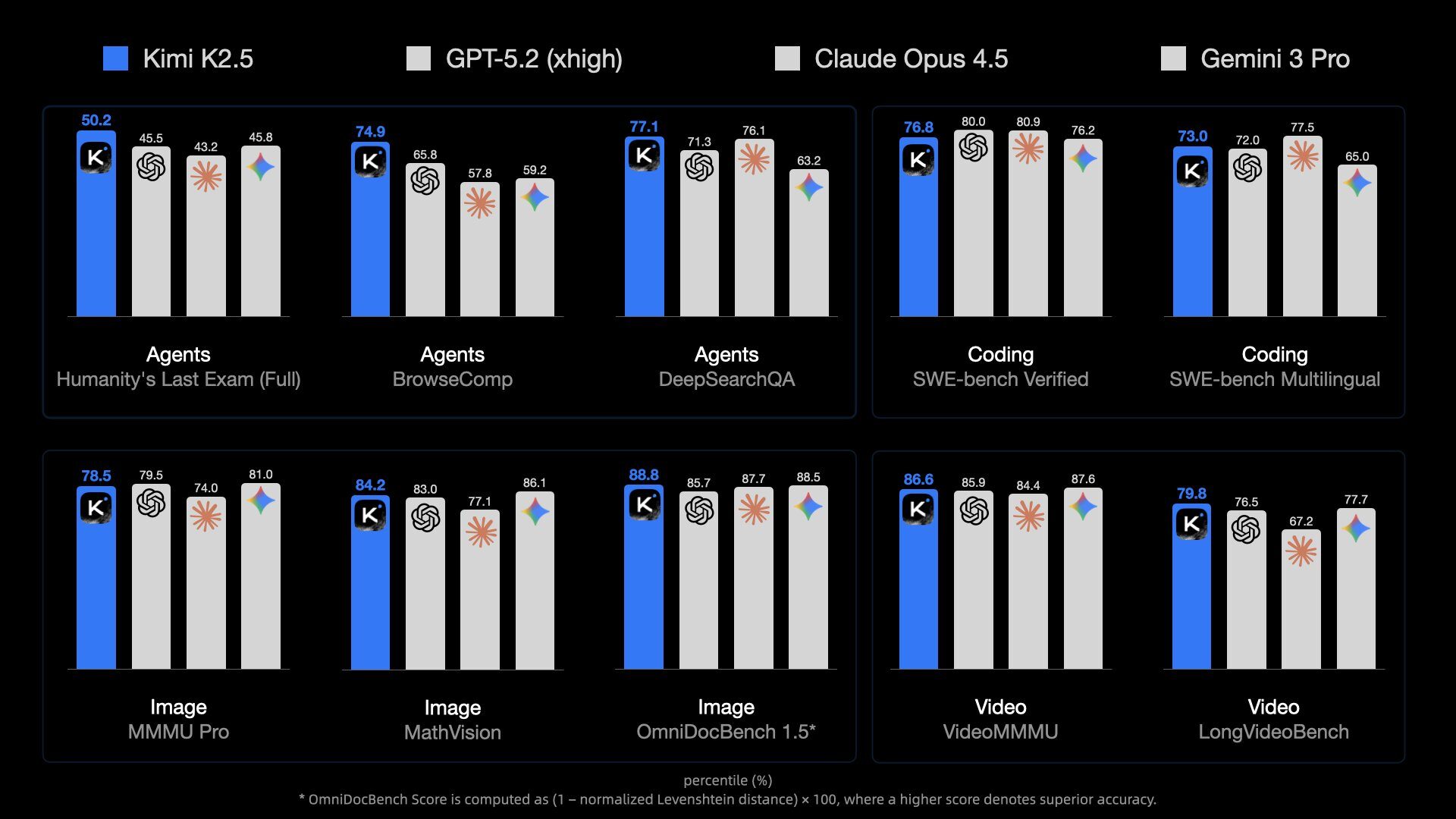

Kimi K2.5 enters a highly competitive landscape in early 2026. Here is how it stacks up against the latest market leaders:

- vs. GPT-4.1 (OpenAI): While GPT-4.1 remains a benchmark for general nuance and instruction following, Kimi K2.5 challenges its dominance in coding and visual agent tasks, offering comparable performance at a fraction of the cost due to its open-source nature.

- vs. Claude Opus 4 (Anthropic): Claude Opus 4 is renowned for its massive context window and creative writing. Kimi K2.5 differentiates itself with superior visual reasoning and agentic execution capabilities, though Claude may still hold an edge in pure literary generation.

- vs. DeepSeek-V3: Both are leading open-weight models from China. Kimi K2.5 distinguishes itself with its “Thinking” mode (similar to reasoning-specific models) and its native multimodal integration, whereas DeepSeek-V3 is focused on text tasks.

- vs. Gemini Deep Research / 2.0 (Google): Google’s offering excels in deep integration with its ecosystem. Kimi K2.5 provides a more flexible, platform-agnostic alternative for developers building custom autonomous agents outside the Google stack.

Final Thoughts

Kimi K2.5 marks a significant shift in the open-source AI landscape by bringing “reasoning model” capabilities (similar to the o1-series) into a freely available multimodal package. Its ability to handle video input and complex agentic planning makes it not just a chatbot, but a foundational engine for the next generation of autonomous software engineers and digital workers. For developers seeking state-of-the-art performance without proprietary lock-in, Kimi K2.5 is currently the model to beat.