Overview

Running large language models (LLMs) is often resource-intensive. Kolosal AI is an open-source platform designed to run them directly on your device, offline, and with a small footprint. Its lightweight runtime brings LLaMA, Mistral, Qwen, and other models to your local machine, which suits edge AI and privacy-focused applications. Let’s look at what sets Kolosal AI apart.

Key Features

Kolosal AI packs a punch despite its small size. Here’s a look at its key features:

- 20 MB Lightweight AI Runtime: The runtime itself is about 20 MB, making it easy to deploy and run on resource-constrained devices. Model weights are separate from the runtime and are typically far larger.

- Offline Execution of LLMs: Enjoy complete privacy and independence from cloud services by running LLMs entirely offline.

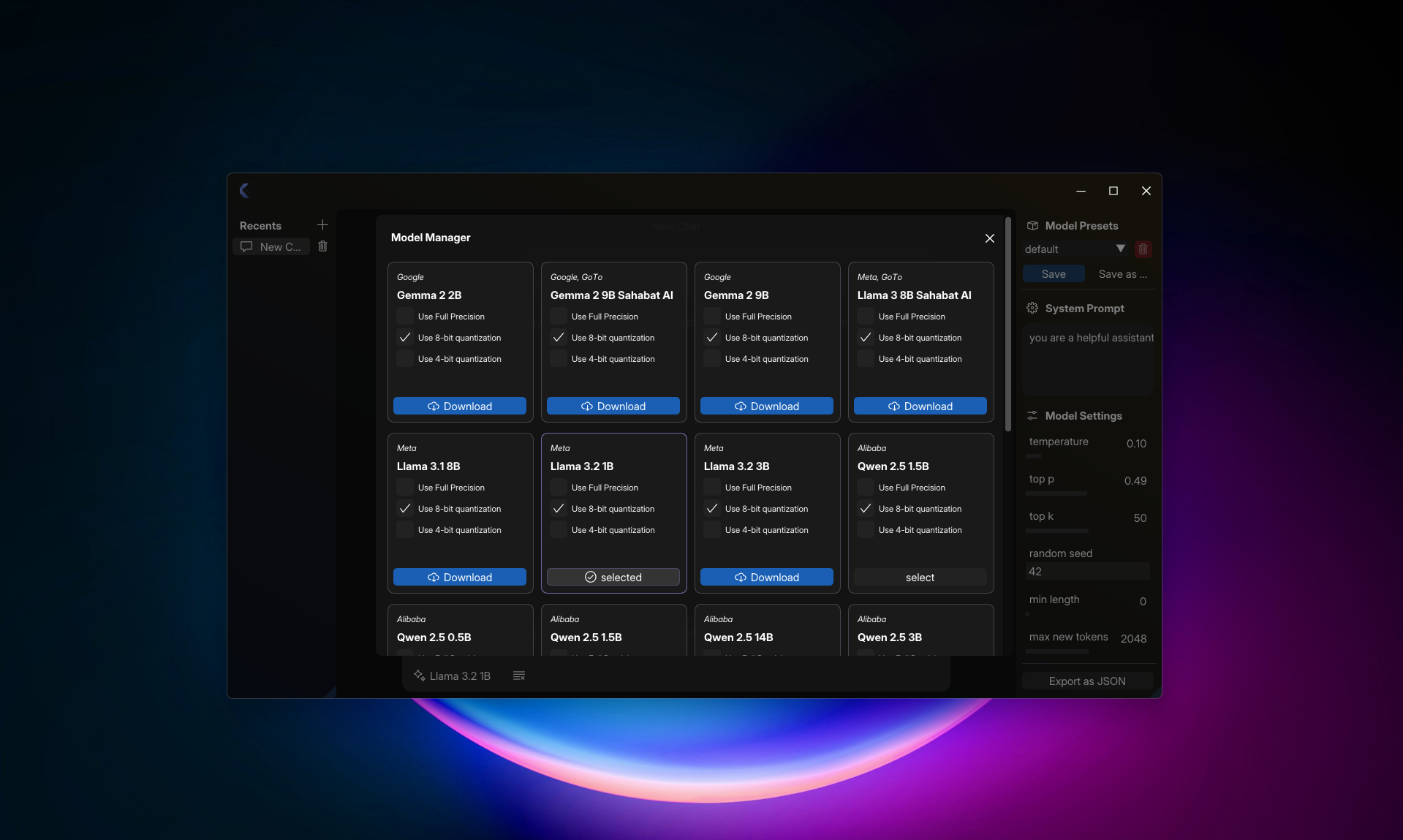

- Supports Leading LLMs: Seamlessly integrate with popular models like LLaMA, Mistral, Qwen, and Phi-3, giving you flexibility in your AI projects.

- Hardware Acceleration: Runs on CPUs with AVX2 support and can use AMD or NVIDIA GPUs to speed up inference.

- Fine-tuning via Unsloth: Customize and adapt LLMs to your specific needs by fine-tuning them with the Unsloth library.

- Windows GUI (macOS/Linux in Development): A user-friendly graphical interface for Windows simplifies model management and interaction. macOS and Linux support are on the horizon.

How It Works

Getting started with Kolosal AI is relatively straightforward. First, install the Kolosal AI runtime on your machine. Once it is installed, load a supported LLM locally. You can then run, fine-tune, and interact with the model through a chat interface or an API. Because all processing happens locally, your data never leaves your machine. Local execution also removes network round trips to remote servers, although overall response time still depends on your hardware.
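
To make the API interaction mentioned above more concrete, here is a minimal sketch of how a script might talk to a locally running model server. It assumes the runtime exposes an OpenAI-style chat endpoint over HTTP. The URL, port, and model name below are hypothetical placeholders rather than documented Kolosal AI values, so check the project's own documentation for the real ones.

```python
import json
import urllib.request

# Assumption: the local runtime serves an OpenAI-style chat endpoint.
# The URL, port, and model name are hypothetical placeholders.
BASE_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "local-model") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request to the local server and decode the JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server actually listening at that address, `send(build_chat_request("Hello"))` would return the decoded JSON reply. Nothing in this exchange leaves the machine, which is the point of running the model locally.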

Use Cases

Kolosal AI’s unique features make it suitable for a variety of applications:

- Running AI Models on Edge Devices: Deploy LLMs on devices with limited resources, such as embedded systems or IoT devices.

- Privacy-Sensitive Local Applications: Develop applications that require complete data privacy, such as personal assistants or secure document processing tools.

- Educational LLM Experimentation: Provide students and researchers with a safe and accessible platform for experimenting with LLMs.

- Custom AI Apps with User-Trained Models: Build bespoke AI applications that leverage user-trained models for personalized experiences.

Pros & Cons

Like any technology, Kolosal AI has its strengths and weaknesses. Let’s break them down:

Advantages

- Lightweight and portable, making it easy to deploy and run on various devices.

- Fully offline operation ensures privacy and eliminates reliance on internet connectivity.

- Open-source and free, fostering community contributions and accessibility.

Disadvantages

- Requires some technical setup, which may be a barrier for non-technical users.

- Performance is hardware-dependent: speed and efficiency vary with your system’s CPU, GPU, and available memory.

- GUI is currently only available for Windows, with macOS and Linux support still in development.
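
To get a feel for how hardware limits which models are practical, the back-of-the-envelope sketch below estimates the memory needed for model weights alone. It is a rough approximation and not something taken from Kolosal AI: it ignores the context cache and runtime overhead, the parameter counts are illustrative, and real quantized formats usually need slightly more bits per weight than their nominal value.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in GB (10^9 bytes)."""
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    bytes_needed = num_params * bits_per_weight / 8
    return bytes_needed / 1e9


# Illustrative parameter counts and precisions, not measured results.
for label, params in [("3B", 3e9), ("7B", 7e9)]:
    for bits in (16, 8, 4):
        size = estimate_weight_memory_gb(params, bits)
        print(f"{label} model at {bits}-bit: ~{size:.1f} GB")
```

By this estimate, a 7-billion-parameter model at 4 bits per weight needs roughly 3.5 GB for its weights, while the same model at 16 bits needs about 14 GB. This is why quantized models matter on devices with limited memory.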

How Does It Compare?

When considering alternatives, LM Studio and Ollama often come to mind. LM Studio offers a more robust GUI but comes with a significantly larger footprint (2+ GB). Ollama provides strong performance and also runs models locally, but it is a heavier install. Kolosal AI distinguishes itself with its ultra-lightweight design while remaining fully offline, making it a compelling choice for users prioritizing portability and privacy.

Final Thoughts

Kolosal AI is a promising platform for anyone looking to run LLMs locally and offline. Its lightweight design, support for popular models, and open-source nature make it an attractive option for edge AI, privacy-focused applications, and educational purposes. It may require some technical expertise to set up, but offline operation and complete control over your data make that effort worthwhile. If the platform continues to develop and expands its GUI support to macOS and Linux, Kolosal AI could become a strong option for on-device LLM deployment.