Overview

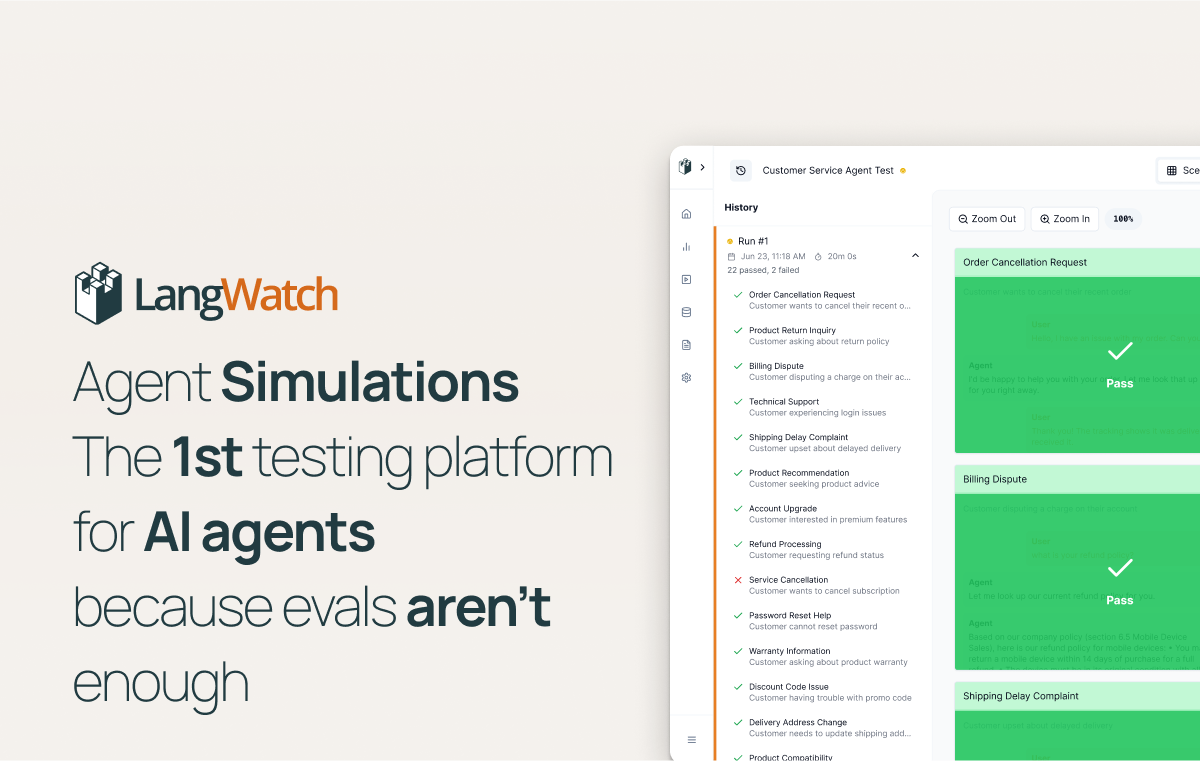

As AI agents take on more complex tasks involving reasoning, tool use, and decision-making, traditional evaluation methods often fall short. LangWatch Scenario is a platform designed to simulate real-world interactions and test AI agent behavior end to end. Think of it as a unit testing framework tailored to AI agents: rather than checking simple prompts, it validates complex, multi-step workflows.

Key Features

LangWatch Scenario’s features are aimed at giving developers insight into how their AI agents perform. Here’s a closer look:

- Scenario-based AI testing: Move beyond simple prompts with comprehensive tests built around realistic, multi-step scenarios.

- Real-world agent simulation: Evaluate your AI agents in environments that mimic actual user interactions and operational challenges.

- Supports reasoning and tool-use validation: Tests an agent’s ability to reason through problems and use external tools effectively.

- Designed for complex AI workflows: Built to handle the intricacies of multi-agent systems and sophisticated decision-making processes.

- Developer-focused test environment: Provides a robust and intuitive platform for engineers to design, run, and analyze their AI tests.
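To make the tool-use validation point concrete, here is a small sketch of one way such a check can work: wrap each tool so that calls are recorded, then assert on what was recorded. The `ToolRecorder` class, the `get_weather` tool, and the toy `weather_agent` are hypothetical names made up for this illustration; they are not part of LangWatch Scenario.

```python
from typing import Any, Callable


class ToolRecorder:
    """Wraps tools so a test can inspect which ones the agent called."""

    def __init__(self) -> None:
        self.calls: list[tuple[str, dict[str, Any]]] = []

    def wrap(self, name: str, fn: Callable[..., Any]) -> Callable[..., Any]:
        def recorded(**kwargs: Any) -> Any:
            # Log the tool name and arguments before delegating to the real tool.
            self.calls.append((name, kwargs))
            return fn(**kwargs)
        return recorded


def get_weather(city: str) -> str:
    # Stub tool with a canned answer so the test stays deterministic.
    return f"Sunny in {city}"


def weather_agent(question: str, tools: dict[str, Callable[..., Any]]) -> str:
    """Toy agent: calls the weather tool when the question mentions weather."""
    if "weather" in question.lower():
        city = question.rstrip("?").split(" in ")[-1]
        return tools["get_weather"](city=city)
    return "I can only answer weather questions."


recorder = ToolRecorder()
tools = {"get_weather": recorder.wrap("get_weather", get_weather)}

answer = weather_agent("What is the weather in Lisbon?", tools)
off_topic = weather_agent("Tell me a joke", tools)

# The test checks both the final answer and the tool calls behind it.
print(answer)
print(recorder.calls)
```

Checking the recorded calls as well as the final answer catches an agent that produces a plausible reply without ever consulting the tool.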

How It Works

The process is straightforward. Developers begin by designing detailed simulations that mimic real-world tasks and challenges. These simulations then serve as the testing ground for AI agents, and LangWatch tracks and analyzes agent performance across the scenarios. The analysis highlights an agent’s strengths and pinpoints failures and areas for improvement, which supports more reliable AI deployment.
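The workflow described above can be sketched in plain Python. This is a minimal illustration and not LangWatch Scenario's actual API: `Scenario`, `ScenarioResult`, `run_scenario`, and the toy `refund_agent` are hypothetical names introduced here, and the user turns are scripted instead of being generated by a simulated user.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical types for illustration; not the LangWatch Scenario API.
Message = dict[str, str]
Agent = Callable[[list[Message]], str]
Criterion = Callable[[list[Message]], bool]


@dataclass
class Scenario:
    name: str
    user_turns: list[str]  # scripted stand-in for a simulated user
    criteria: dict[str, Criterion] = field(default_factory=dict)


@dataclass
class ScenarioResult:
    name: str
    transcript: list[Message]
    failed_criteria: list[str]

    @property
    def passed(self) -> bool:
        return not self.failed_criteria


def run_scenario(scenario: Scenario, agent: Agent) -> ScenarioResult:
    """Play each user turn against the agent, then judge the full transcript."""
    transcript: list[Message] = []
    for turn in scenario.user_turns:
        transcript.append({"role": "user", "content": turn})
        reply = agent(transcript)
        transcript.append({"role": "assistant", "content": reply})
    failed = [label for label, check in scenario.criteria.items() if not check(transcript)]
    return ScenarioResult(scenario.name, transcript, failed)


def refund_agent(history: list[Message]) -> str:
    """Toy agent: asks for an order number before promising a refund."""
    last = history[-1]["content"].lower()
    if "order" in last and any(ch.isdigit() for ch in last):
        return "Thanks, I have started the refund for that order."
    return "I can help with that. What is your order number?"


scenario = Scenario(
    name="refund request",
    user_turns=["I want my money back.", "It is order 4512."],
    criteria={
        "asks for order number first": lambda t: "order number" in t[1]["content"].lower(),
        "confirms refund at the end": lambda t: "refund" in t[-1]["content"].lower(),
    },
)

result = run_scenario(scenario, refund_agent)
print(result.passed, result.failed_criteria)
```

The criteria judge the whole transcript, not a single reply, which is what separates a scenario test from a single-prompt check. A real setup would likely replace the scripted turns and the lambda checks with model-driven simulation and judging.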

Use Cases

LangWatch Scenario is useful across several stages of AI development and deployment. Key use cases include:

- Testing AI agents for reliability: Ensure your agents consistently perform as expected under diverse conditions, minimizing unexpected errors.

- Validating multi-agent workflows: Check that multiple interacting AI agents collaborate correctly and complete their tasks accurately.

- QA for AI products before deployment: Catch critical bugs and performance issues in AI applications before they reach end-users, enhancing product quality.

- Building robust autonomous systems: Lay the foundation for highly dependable autonomous systems by rigorously testing their decision-making and operational integrity.
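For the reliability use case, one common approach is to run the same scenario many times and track the pass rate, since agents built on language models can behave differently from run to run. The sketch below assumes a hypothetical `pass_rate` helper and uses a random stand-in (`flaky_scenario`) in place of a real scenario run; neither comes from LangWatch Scenario.

```python
import random
from typing import Callable


def pass_rate(run_once: Callable[[random.Random], bool], runs: int, seed: int = 0) -> float:
    """Run a scenario check `runs` times and return the fraction that passed."""
    rng = random.Random(seed)  # seeded so repeated measurements are reproducible
    passes = sum(1 for _ in range(runs) if run_once(rng))
    return passes / runs


def flaky_scenario(rng: random.Random) -> bool:
    # Stand-in for a full scenario run; fails roughly 10% of the time.
    return rng.random() >= 0.1


rate = pass_rate(flaky_scenario, runs=200)
threshold = 0.8  # illustrative bar; pick one that suits the product
print(f"pass rate: {rate:.2f}", "OK" if rate >= threshold else "below threshold")
```

Gating a release on a pass-rate threshold, not on a single green run, makes intermittent failures visible before deployment.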

Pros & Cons

Like any tool, LangWatch Scenario comes with advantages and trade-offs. Weighing them can help you decide whether it fits your needs.

Advantages

- Enables comprehensive agent testing: Provides a holistic view of agent performance across complex, real-world scenarios.

- Realistic simulation environments: Tests agents in conditions that closely mirror their intended operational environments, leading to more accurate evaluations.

- Promotes agent safety and reliability: By identifying failure points early, it helps developers build safer and more dependable AI systems.

Disadvantages

- Requires setup effort: Designing detailed, realistic simulations can be time-consuming and requires careful planning.

- Best suited to technical users: The platform’s depth and focus on complex scenarios mean it’s most beneficial for developers and AI engineers.

- May not cover all edge cases: Coverage is only as good as the quality and breadth of the scenarios you design, so unforeseen edge cases can still slip through.

How Does It Compare?

Among AI development tools, LangWatch Scenario occupies a distinct niche. Tools like Helicone excel at logging LLM usage, but they lack the simulation capabilities that LangWatch provides for evaluating full agent behavior. Similarly, Humanloop focuses primarily on prompt iteration and optimization, not on scenario-based testing of entire AI agents. And while LangChain evals offer basic test suites, LangWatch Scenario adds richer, real-world scenario testing, which makes it better suited to validating complex reasoning and tool use in AI agents.

Final Thoughts

LangWatch Scenario is a strong option for teams that want to build robust, reliable, and safe AI agents. Its focus on real-world simulation and scenario-based testing addresses a gap in traditional AI evaluation and gives developers the insight they need to build dependable systems. If you’re building complex AI agents that need to reason, use tools, and make decisions in dynamic environments, LangWatch Scenario is worth exploring as part of your AI quality assurance.