Table of Contents

- Overview

- Core Features & Capabilities

- How It Works: The Workflow Process

- Ideal Use Cases

- Strengths and Strategic Advantages

- Limitations and Realistic Considerations

- Competitive Positioning and Strategic Comparisons

- Pricing and Access

- Technical Architecture and Platform Details

- Company Background and Partnerships

- Launch Reception and Market Position

- Important Caveats and Realistic Assessment

- Final Assessment

Overview

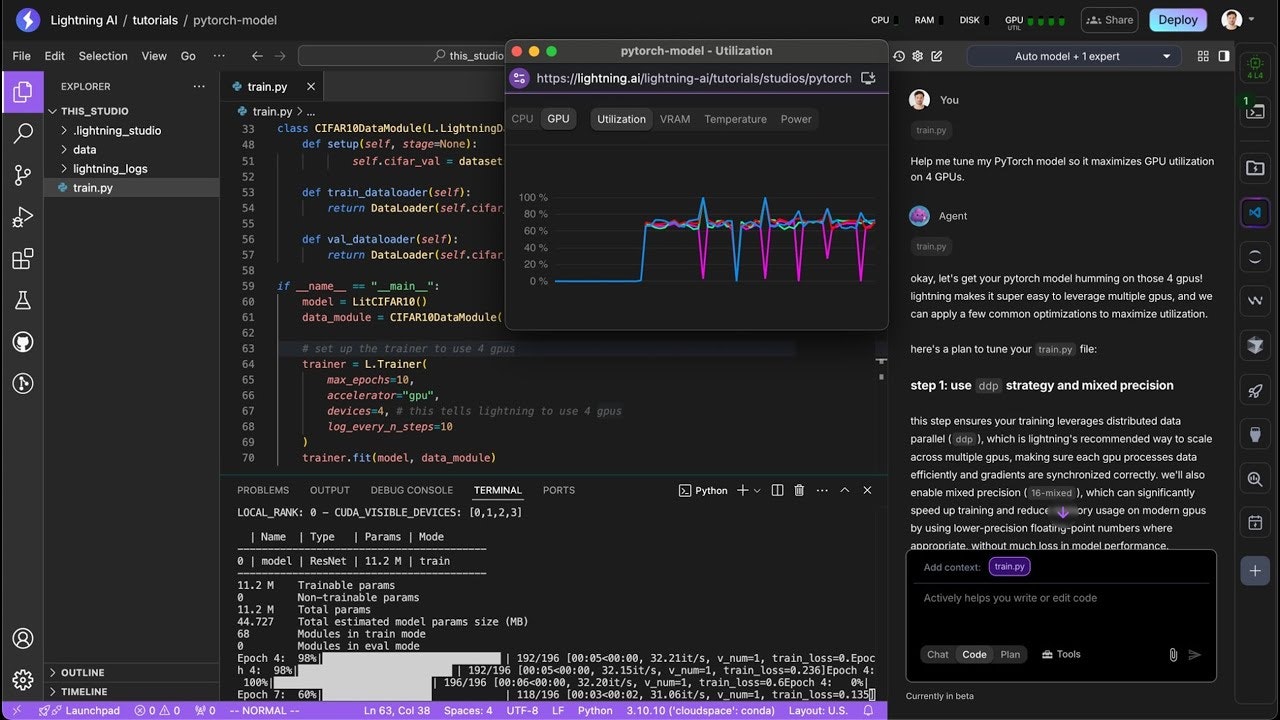

Lightning AI, the team behind PyTorch Lightning, announced a comprehensive suite of new tools at PyTorch Conference 2025 (October 22-23, 2025 in San Francisco) designed to accelerate AI development workflows for PyTorch developers and researchers. Rather than positioning itself as a standalone notebook environment competitor to Google Colab, Lightning has evolved into a full-stack platform combining AI-assisted code editing, multi-cloud GPU provisioning, interactive distributed training, reinforcement learning, and production deployment—all optimized specifically for the PyTorch ecosystem.

The platform addresses fragmentation in PyTorch development workflows. Developers typically use ChatGPT for coding assistance (losing project context between sessions), Jupyter notebooks or Google Colab for experimentation without version control integration, separate GPU provisioning platforms that require manual configuration, and deployment tools disconnected from their training environments. Lightning unifies these in a single environment where PyTorch project context persists across all operations, GPU provisioning happens directly from the editor, and deployment follows from training without switching tools.

Founded in 2019 by William Falcon (CEO) and the team behind the open-source PyTorch Lightning framework, Lightning has established credibility within the PyTorch community: the framework has 2+ million users, and at PyTorch Conference 2025 the company announced a direct partnership with Meta’s PyTorch team on Monarch integration.

Core Features & Capabilities

Lightning provides comprehensive features combining AI assistance, distributed training, reinforcement learning, and deployment infrastructure specifically optimized for PyTorch workflows.

AI Code Editor with PyTorch Expertise: A specialized AI assistant, built into Lightning Studios and Notebooks, that provides domain-aware coding help for PyTorch development. Unlike generic code-completion tools, the editor is trained on PyTorch-specific code, so it understands PyTorch idioms, tensor operations, distributed training patterns, and reinforcement learning frameworks. Multiple expert modes offer PyTorch-specific suggestions for training workflows (hyperparameter optimization, debugging), inference optimization, and RL algorithm development.

Multi-Cloud GPU Marketplace: GPUs are provisioned directly from the editor, without external procurement or a separate cloud console. The marketplace offers NVIDIA A100, H100, H200, and other accelerators from AWS, Google Cloud, Lambda, Nebius, Voltage Park, and Nscale. Per-second billing and interruptible instances reduce costs compared with fixed hourly rates, and GPU resources spin up from the editor with a single configuration, eliminating manual cluster setup.
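Billing granularity matters most for short jobs, and a small cost comparison makes this concrete. This is a sketch under stated assumptions. The $3.00/hour rate is a made-up placeholder, not a published Lightning or cloud price. The "hour-rounded" model stands in for any provider that bills in whole-hour increments.

```python
import math


def cost_per_second(runtime_s: float, hourly_rate: float) -> float:
    """Charge only for the seconds actually used (rounded up to whole seconds)."""
    return math.ceil(runtime_s) * hourly_rate / 3600


def cost_hour_rounded(runtime_s: float, hourly_rate: float) -> float:
    """Charge in whole-hour increments, as a coarse fixed-rate model would."""
    return math.ceil(runtime_s / 3600) * hourly_rate


# Hypothetical example: a 10-minute debugging run on a GPU at an assumed $3.00/hour.
HOURLY_RATE = 3.00  # placeholder rate, not a real quote
runtime = 10 * 60

print(f"per-second:   ${cost_per_second(runtime, HOURLY_RATE):.2f}")    # $0.50
print(f"hour-rounded: ${cost_hour_rounded(runtime, HOURLY_RATE):.2f}")  # $3.00
```

For a 10-minute run, the per-second model charges a sixth of the whole-hour price. The gap shrinks as jobs grow longer and approach whole hours.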

Lightning Environments Hub: Pre-configured, reproducible workspaces for different ML workloads, launched at PyTorch Conference 2025. Each environment is framed as a “home” within an evolving ecosystem. It comes ready for a specific use case (distributed training, RL experiments, multi-agent systems), scales from a single GPU to multi-node clusters without reconfiguration, persists state across sessions for long-running experiments, and can be shared as a reproducible research configuration through the public Environments hub.
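One common way to make a "reproducible environment" checkable is to fingerprint its specification. The sketch below is not Lightning's mechanism; the source does not describe one. The spec fields and version pins are hypothetical. The point is only that identical specs must hash identically, regardless of key order.

```python
import hashlib
import json


def environment_fingerprint(spec: dict) -> str:
    """Return a SHA-256 hex digest of a canonical JSON encoding of `spec`.

    sort_keys=True sorts keys in every nested dict, so two specs that differ
    only in key order produce the same fingerprint.
    """
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical environment specs; field names and versions are illustrative only.
spec_a = {
    "python": "3.11",
    "packages": {"torch": "2.5.1", "lightning": "2.4.0"},
    "gpu": {"type": "H100", "count": 8},
}
spec_b = {
    "gpu": {"count": 8, "type": "H100"},
    "packages": {"lightning": "2.4.0", "torch": "2.5.1"},
    "python": "3.11",
}
spec_c = {**spec_a, "packages": {"torch": "2.4.1", "lightning": "2.4.0"}}

print(environment_fingerprint(spec_a) == environment_fingerprint(spec_b))  # True
print(environment_fingerprint(spec_a) == environment_fingerprint(spec_c))  # False
```

Storing such a fingerprint alongside experiment results lets a teammate confirm they are running the same configuration.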

Meta Monarch Integration: Announced at PyTorch Conference 2025, an official partnership with Meta brings the Monarch distributed training framework directly into Lightning Studios. Monarch enables interactive, cluster-scale training from notebooks. It avoids the expensive iteration cycles of traditional distributed training, where code changes require full cluster restarts. Because resources stay persistent, developers keep a tight feedback loop even when scaling to hundreds of GPUs, debugging and adjusting code in real time without restarting clusters.
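The benefit of persistent resources is easiest to see with a back-of-the-envelope model of a debugging loop. This is an illustrative cost model, not Monarch code. The startup and run times are hypothetical, and real restart costs depend on cluster size, scheduler, and checkpoint loading.

```python
def debug_loop_seconds(iterations: int, startup_s: float, run_s: float,
                       persistent: bool) -> float:
    """Total wall-clock time for `iterations` edit-and-rerun cycles.

    Restart model: every code change pays the cluster startup cost again.
    Persistent model: startup is paid once and later runs reuse live processes.
    """
    if persistent:
        return startup_s + iterations * run_s
    return iterations * (startup_s + run_s)


# Hypothetical numbers: 10-minute cluster bring-up, 2-minute test run, 20 edits.
restart = debug_loop_seconds(20, startup_s=600, run_s=120, persistent=False)
persistent = debug_loop_seconds(20, startup_s=600, run_s=120, persistent=True)
print(f"restart each time: {restart / 60:.0f} min")     # 240 min
print(f"persistent:        {persistent / 60:.0f} min")  # 50 min
```

Under these assumptions, startup cost dominates the restart model. That is the overhead an interactive, persistent setup is meant to remove.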

OpenEnv Support: Day-zero support, announced at PyTorch Conference 2025, for OpenEnv, Meta’s new open standard for reinforcement learning environments. OpenEnv lets researchers create, reproduce, and scale RL experiments using standardized environment definitions. Lightning manages the enterprise infrastructure (access control, networking, security, and reproducibility) for both research and production RL workloads.
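Standardized environment definitions usually come down to a small contract that every environment implements. The sketch below uses a simplified reset/step interface in the spirit of Gym-style APIs. It is not OpenEnv's actual interface, which the source does not spell out. `LineWorld` is a hypothetical toy environment.

```python
from typing import Callable, Optional, Protocol, Tuple


class Environment(Protocol):
    """Minimal contract: reset to an initial observation, then step with actions."""

    def reset(self, seed: Optional[int] = None) -> int: ...

    def step(self, action: int) -> Tuple[int, float, bool]: ...


class LineWorld:
    """Toy environment: start at 0 and move right until reaching `goal`."""

    def __init__(self, goal: int = 4, max_steps: int = 20) -> None:
        self.goal = goal
        self.max_steps = max_steps
        self.pos = 0
        self.steps = 0

    def reset(self, seed: Optional[int] = None) -> int:
        # `seed` is accepted for interface compatibility; this toy is deterministic.
        self.pos = 0
        self.steps = 0
        return self.pos

    def step(self, action: int) -> Tuple[int, float, bool]:
        # Action 1 moves right; anything else moves left (clamped at 0).
        self.pos = max(0, self.pos + (1 if action == 1 else -1))
        self.steps += 1
        reached = self.pos >= self.goal
        done = reached or self.steps >= self.max_steps
        return self.pos, (1.0 if reached else 0.0), done


def run_episode(env: Environment, policy: Callable[[int], int]) -> float:
    """Roll out one episode with any environment that satisfies the contract."""
    obs = env.reset(seed=0)
    total, done = 0.0, False
    while not done:
        obs, reward, done = env.step(policy(obs))
        total += reward
    return total


print(run_episode(LineWorld(), policy=lambda obs: 1))  # 1.0: always move right
print(run_episode(LineWorld(), policy=lambda obs: 0))  # 0.0: never reaches the goal
```

`run_episode` depends only on the contract, so the same rollout code works for any environment that implements it. That reuse is the practical payoff of a shared standard.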

TorchForge Integration: Native support, announced at PyTorch Conference 2025, for TorchForge, Meta’s new PyTorch-native reinforcement learning framework built on Monarch. TorchForge offers a clean, composable interface for authoring RL algorithms that scale across clusters without hand-written distributed computing code. Researchers run TorchForge experiments directly on the Lightning platform, using distributed OpenEnv environments for RLHF (Reinforcement Learning from Human Feedback) and large-scale training.
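To show what "authoring an RL algorithm" involves, here is REINFORCE, a basic policy-gradient method, on a two-armed Bernoulli bandit in plain Python. This is a textbook illustration, not TorchForge code. TorchForge's contribution is scaling loops like this across clusters, which the sketch does not attempt. The arm probabilities, learning rate, and step count are arbitrary choices.

```python
import math
import random
from typing import List, Sequence


def softmax(prefs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_means: Sequence[float], steps: int = 2000,
                     lr: float = 0.1, seed: int = 0) -> List[float]:
    """REINFORCE with a running-average reward baseline on a Bernoulli bandit.

    Returns the final action probabilities of the softmax policy.
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_means)  # policy parameters (logits)
    baseline = 0.0
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        arm = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        advantage = reward - baseline  # baseline uses only past rewards
        baseline += (reward - baseline) / t  # running mean of rewards
        # d/d prefs[a] of log softmax(prefs)[arm] is 1[a == arm] - probs[a].
        for a in range(len(prefs)):
            grad = (1.0 if a == arm else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad
    return softmax(prefs)


probs = reinforce_bandit([0.2, 0.8])
print([round(p, 3) for p in probs])
```

With these settings, the policy should put most of its probability on the better arm (index 1).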

Model APIs for Unified Access: Call AI models, open-source or proprietary, directly from Lightning Studios through standardized APIs. Usage tracking, access control, and billing are built in through the Lightning credits system. This removes the need to switch between model providers (OpenAI, Anthropic, Google) and to authenticate separately with each provider’s API.

LitServe for Production Deployment: One-click deployment of PyTorch models as production APIs with OpenAI-compatible endpoints for easy integration. Supports quantization for reduced model size, batch inference for throughput optimization, and real-time monitoring for production observability. Integrates with Lightning Studios for continuous training pipelines enabling model updates without deployment friction. Custom containers support enterprise security requirements and airgapped environments.
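Quantization is mentioned without detail, so here is the general idea in its simplest form: symmetric per-tensor int8 quantization in plain Python. This is a generic technique, not LitServe's implementation. Production toolchains, such as PyTorch's own quantization utilities, add calibration, per-channel scales, and fused kernels.

```python
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes: q = round(x / scale), with scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: Sequence[int], scale: float) -> List[float]:
    """Approximate the original floats from int8 codes."""
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q)        # [50, -127, 0, 90]
print(max_err)  # bounded by scale / 2
```

Each code fits in one byte instead of four for float32, so stored weights shrink roughly 4x. The cost is a rounding error of at most half the scale.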

Distributed Training Optimization: According to Lightning AI benchmarks, kernel-level optimizations deliver a 40% throughput improvement for PyTorch workloads compared with raw cloud instances. Training scales from a single GPU to hundreds across multiple cloud providers while keeping familiar PyTorch workflows, without learning a new distributed computing framework.
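A 40% throughput gain is easy to misread as 40% less time, so the conversion is worth spelling out. The arithmetic assumes the same hourly rate with and without the optimization. That is an assumption: the source does not say how Lightning's prices compare with raw instances. The 100-hour job and $4.00/hour rate are hypothetical.

```python
from typing import Tuple


def after_throughput_gain(baseline_hours: float, hourly_rate: float,
                          gain: float) -> Tuple[float, float]:
    """Return (hours, cost) once throughput rises by `gain` (0.40 means +40%).

    Doing the same work at (1 + gain) times the speed takes 1 / (1 + gain)
    of the original time.
    """
    hours = baseline_hours / (1 + gain)
    return hours, hours * hourly_rate


hours, cost = after_throughput_gain(baseline_hours=100, hourly_rate=4.00, gain=0.40)
saved = 1 - hours / 100
print(f"{hours:.1f} h, ${cost:.2f}, {saved:.1%} less time")  # 71.4 h, $285.71, 28.6% less time
```

A 40% throughput improvement therefore cuts run time, and cost at a fixed rate, by about 28.6%, not 40%.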

Browser-Based Persistent Workspaces: GPU workspaces persist across browser sessions, so developers can close the browser and later resume the exact computational state without losing work or restarting training runs. Hybrid workflows connect local development to cloud resources through Lightning’s synchronization.

Enterprise Features: SOC2/HIPAA-compliant private cloud deployments for regulated industries. Granular cost controls include a real-time budget-tracking dashboard and autosleep for idle resources, which prevents runaway compute costs. Environment reproducibility keeps setups consistent across team members and over time, supporting research reproducibility.
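The budget-tracking and autosleep controls come down to two simple rules, sketched below. The class, thresholds, and idle limit are hypothetical illustrations, not Lightning's API or defaults.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class BudgetTracker:
    """Accumulate spend and record each alert threshold the first time it is crossed."""

    budget: float
    thresholds: Tuple[float, ...] = (0.5, 0.8, 1.0)  # fractions of the budget
    spent: float = 0.0
    alerts: List[float] = field(default_factory=list)

    def record(self, amount: float) -> None:
        before = self.spent
        self.spent += amount
        for t in self.thresholds:
            # Fire only on the charge that moves spend across the threshold.
            if before < t * self.budget <= self.spent:
                self.alerts.append(t)


def should_autosleep(now_s: float, last_activity_s: float, idle_limit_s: float) -> bool:
    """Put a workspace to sleep once it has been idle for at least `idle_limit_s`."""
    return now_s - last_activity_s >= idle_limit_s


tracker = BudgetTracker(budget=100.0)
for charge in (30.0, 25.0, 30.0, 20.0):
    tracker.record(charge)
print(tracker.spent, tracker.alerts)  # 105.0 [0.5, 0.8, 1.0]
print(should_autosleep(now_s=1_000, last_activity_s=0, idle_limit_s=900))    # True
print(should_autosleep(now_s=1_000, last_activity_s=200, idle_limit_s=900))  # False
```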

How It Works: The Workflow Process

Lightning operates through an integrated workflow that combines code development, resource provisioning, training execution, and deployment without tool switching.

Step 1 – Enter Studio or Notebook: Open Lightning Studios (an IDE-like experience with code editor, file browser, and terminal) or Notebooks (a Jupyter-like notebook experience) in a browser at lightning.ai. This gives instant access to a Python/PyTorch development environment without local installation or configuration.

Step 2 – Write Code with AI Assistance: Write PyTorch code using the built-in AI Code Editor. Select an expert mode (training for model development, inference for optimization, RL for reinforcement learning). The AI then provides context-aware suggestions, debugging help, and optimization recommendations tailored to PyTorch patterns and best practices.

Step 3 – Provision GPU Resources: From within the editor, provision the required GPU resources through the integrated GPU Marketplace. Choose the cloud provider, GPU type (A100, H100, H200, T4), instance count, and node configuration for distributed training. Resources spin up without leaving the development environment.

Step 4 – Execute with Distributed Framework (Optional): For large-scale training across multiple GPUs, select a Lightning Environment and the Monarch integration. Monarch keeps processes and resources persistent, so development stays interactive even across hundreds of GPUs, with real-time debugging and no cluster-restart overhead.

Step 5 – Run RL Experiments (Optional): For reinforcement learning workloads, select an OpenEnv environment and the TorchForge framework, both announced at PyTorch Conference 2025. RL algorithms run directly on Lightning and scale automatically across clusters using standardized OpenEnv definitions.

Step 6 – Access Models as APIs: Use the built-in Model APIs to call external models (GPT-4, Claude, Mistral, open-source LLMs from Hugging Face) from within the workflow, without switching tools or managing a separate API key for each provider.

Step 7 – Deploy to Production: Use LitServe for one-click deployment from the training environment. Models become production APIs with OpenAI-compatible endpoints, automatic monitoring, and horizontal scaling, without DevOps configuration.

Step 8 – Track and Manage: Monitor usage in real time through the Lightning credits system, and manage costs with the budget-tracking dashboard and autosleep for idle resources. Team administrators can access usage analytics and cost allocation.
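Step 7's OpenAI-compatible endpoints mean that any client speaking the OpenAI chat-completions format can call a deployed model. The sketch below builds such a request with only the standard library. Three things are placeholders to adjust for a real deployment: the base URL, the model name, and the assumption that the server exposes the standard `/v1/chat/completions` path.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-style chat-completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder values: point these at a real deployment before sending.
req = build_chat_request("http://localhost:8000", "my-model", "Hello!")
print(req.full_url, req.get_method())  # http://localhost:8000/v1/chat/completions POST
```

Once a server is listening, sending the request takes one more call, `urllib.request.urlopen(req)`. For a compatible server, the response body should follow the same chat-completions schema.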

Ideal Use Cases

Lightning’s comprehensive platform addresses diverse PyTorch development scenarios where a unified workflow and PyTorch-specific optimization matter.

Accelerated PyTorch Model Development: Build, train, and fine-tune PyTorch models with AI assistance and instant GPU access, avoiding the infrastructure complexity typical of cloud GPU provisioning and configuration.

Interactive Distributed Training at Scale: Train models across hundreds of GPUs while keeping an interactive development environment. Through the Monarch integration, real-time debugging and experimentation remain possible at a scale where traditional batch-job systems offer no interactivity.

Reinforcement Learning Research: Develop RL algorithms using the TorchForge framework with standardized OpenEnv environments, scaling from a single GPU to distributed clusters without deep distributed computing expertise.

Multi-Agent System Development: Build complex multi-agent AI systems using pre-built environments from the Lightning Environments hub and Lightning’s reproducible workspace management.

Model Fine-Tuning and Optimization: Fine-tune open-source foundation models or proprietary models on custom datasets using the integrated Model APIs and LitServe deployment pipeline.

Enterprise ML Pipelines: Deploy production-grade ML systems with compliance requirements (SOC2, HIPAA) using private deployments and environment reproducibility for audit trails.

Research and Experimentation: Conduct systematic experiments with environment reproducibility enabling research reproduction, multi-cloud flexibility for cost optimization, and collaborative experimentation through Environments hub.

Production Inference Systems: Deploy trained models as scalable inference APIs using LitServe with quantization and batch processing optimization for production workloads.
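Batch processing for inference typically means dynamic batching: requests that arrive close together are grouped, so the model runs once per batch. Below is an offline simulation of that policy over timestamped arrivals. It is a generic sketch, not LitServe's scheduler, and the parameter names and timings are illustrative.

```python
from typing import List, Optional, Sequence, Tuple


def group_into_batches(arrivals: Sequence[Tuple[float, str]], max_batch_size: int,
                       batch_timeout: float) -> List[List[str]]:
    """Group time-sorted (arrival_time, request) pairs into batches.

    A batch closes when it is full or when the next request arrives more than
    `batch_timeout` seconds after the batch's first request.
    """
    batches: List[List[str]] = []
    current: List[str] = []
    opened_at: Optional[float] = None
    for t, request in arrivals:
        if current and (len(current) == max_batch_size or t - opened_at > batch_timeout):
            batches.append(current)
            current = []
        if not current:
            opened_at = t  # the first request in a batch starts its timeout clock
        current.append(request)
    if current:
        batches.append(current)
    return batches


arrivals = [(0.000, "a"), (0.002, "b"), (0.004, "c"), (0.030, "d"), (0.031, "e")]
print(group_into_batches(arrivals, max_batch_size=2, batch_timeout=0.010))
# [['a', 'b'], ['c'], ['d', 'e']]
```

A live server would also flush a partially filled batch when the timeout expires with no new arrivals. This offline version checks the timeout only when the next request arrives.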

Strengths and Strategic Advantages

PyTorch-Specific Optimization: Every feature, from AI coding assistance through distributed training to deployment, is optimized specifically for PyTorch, unlike generic ML platforms that support multiple frameworks. This focus enables deeper integration and PyTorch-specific optimizations.

Integrated Full-Stack Platform: Eliminates tool fragmentation by providing the coding environment, training infrastructure, RL support, and deployment in a single environment. There is no context switching between ChatGPT for coding help, Colab for experimentation, separate GPU providers for compute, and deployment platforms.

Direct Meta Collaboration: The Monarch integration with Meta’s PyTorch team, announced at PyTorch Conference 2025, gives Lightning an early, first-party integration of new distributed training capabilities. TorchForge and OpenEnv are the latest research frameworks from Meta’s PyTorch organization.

Interactive Distributed Training: Monarch keeps a tight feedback loop even at cluster scale. It combines interactivity with scale and removes the batch-job overhead of traditional distributed training.

Enterprise-Grade Features: SOC2/HIPAA compliance, private deployments, granular cost control with real-time tracking, and environment reproducibility address the requirements of regulated industries and security-conscious organizations.

Multi-Cloud Flexibility: Avoid lock-in to a single cloud provider by provisioning from multiple providers (AWS, Google Cloud, Lambda, Nebius, Voltage Park, Nscale) through one interface, and optimize dynamically for cost, performance, or geographic region.
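Optimizing across providers for cost or region amounts to filtering and ranking offers. In the sketch below, the providers are anonymized and the prices are invented placeholders, not real quotes. The `Offer` record is a hypothetical structure, not anything exposed by Lightning's marketplace.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Offer:
    provider: str
    gpu: str
    region: str
    hourly_rate: float  # USD per GPU-hour (placeholder values below)


def cheapest_offer(offers: Iterable[Offer], gpu: str,
                   regions: Optional[Set[str]] = None) -> Optional[Offer]:
    """Return the lowest-priced offer for `gpu`, optionally restricted to `regions`."""
    candidates = [
        o for o in offers
        if o.gpu == gpu and (regions is None or o.region in regions)
    ]
    return min(candidates, key=lambda o: o.hourly_rate, default=None)


offers = [
    Offer("provider-a", "H100", "us-east", 3.10),
    Offer("provider-b", "H100", "eu-west", 2.60),
    Offer("provider-c", "H100", "us-west", 2.90),
    Offer("provider-b", "A100", "eu-west", 1.40),
]
print(cheapest_offer(offers, "H100").provider)                                  # provider-b
print(cheapest_offer(offers, "H100", regions={"us-east", "us-west"}).provider)  # provider-c
print(cheapest_offer(offers, "H200"))                                           # None
```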

Performance Optimization: According to Lightning AI’s own benchmarks, PyTorch-specific kernel optimizations deliver a 40% throughput improvement for PyTorch workloads over generic cloud instances.

Persistent Workspaces: Sessions persist across browser closures. This enables long-running experiments that span days or weeks, and collaborative development in which team members resume each other’s work.

Established User Base: The PyTorch Lightning framework’s 2+ million users provide a proven track record and community support. The company’s founding by the framework’s creator, William Falcon, adds credibility.

Active Development: The October 2025 announcements at PyTorch Conference indicate rapid feature development aligned with the latest PyTorch ecosystem advances.

Limitations and Realistic Considerations

PyTorch Exclusivity: The platform optimizes exclusively for PyTorch. Organizations using TensorFlow, JAX, MXNet, or other frameworks cannot take advantage of its specialized features, and multi-framework organizations need separate tools.

Cloud-Native Dependency: The platform requires internet connectivity and cloud infrastructure; offline development and local-only workflows are not supported. Organizations with airgapped environments need private deployment options.

Learning Curve for Advanced Features: Complex features (Monarch distributed training, Environments management, the TorchForge RL framework) require an understanding of distributed training concepts, RL fundamentals, and the Lightning ecosystem architecture.

Pricing Model Transparency: The credit-based system with per-second billing is transparent in concept, but several specifics are not comprehensively published on the public website: costs per GPU hour for each accelerator type (A100, H100, H200, T4), per-provider model API pricing, and storage costs. Getting detailed figures requires creating an account.

Rapid Evolution Risk: Because the new platform features were announced in October 2025 at PyTorch Conference, capabilities are still evolving. Breaking changes, API modifications, or workflow adjustments are possible as the platform matures in response to user feedback.

Integration Complexity: Although the unified platform simplifies the workflow, combining multiple frameworks (Monarch, TorchForge, OpenEnv) requires understanding each component’s purpose and how the components interact.

Enterprise Requirements: Private deployments and compliance features (SOC2, HIPAA) likely require custom arrangements through the sales team, increasing adoption friction for enterprise customers.

New Feature Maturity: The Monarch integration, TorchForge support, OpenEnv compatibility, and Environments hub were all announced in October 2025 at PyTorch Conference, so these specific features have a limited production track record.

Vendor Dependency: Building on the platform creates lock-in to the Lightning AI ecosystem, despite code export capabilities. Organizations requiring maximum independence may prefer to assemble their own toolchain.

Competitive Positioning and Strategic Comparisons

Lightning occupies a distinctive position, combining AI-assisted coding, distributed training, and production deployment specifically for PyTorch. This sets it apart from competitors that target different problem spaces.

vs. Google Colab: Google Colab provides free, accessible Jupyter notebooks with T4 GPU access on the free tier, plus Colab Pro ($9.99/month for priority T4/V100/L4 access) and Colab Pro+ ($49.99/month for premium resources). In May 2025, Colab announced an AI-first reimagining featuring a Gemini 2.5 Flash-powered agentic collaborator and a Data Science Agent for data exploration. A new Slideshow Mode enables live code execution and collaborative sharing. As of July 2025, an educational offer provides a free year of Colab Pro to US students and faculty. Colab excels at accessibility, free GPU access for education, zero-setup experimentation, and collaborative notebook sharing. Lightning provides a PyTorch-optimized IDE with AI assistance, a multi-cloud GPU marketplace with per-second billing, Monarch distributed training, and integrated production deployment. Colab is best for education and quick experiments; Lightning is best for production-ready development and advanced distributed training. The target audiences differ: Colab serves students and researchers seeking free resources, while Lightning targets professional PyTorch developers building production systems.

vs. AWS SageMaker: AWS SageMaker is a comprehensive ML platform serving multiple frameworks (PyTorch, TensorFlow, MXNet, scikit-learn). Its features include Autopilot for AutoML, Feature Store for feature engineering, Model Monitor for drift detection, and Pipelines for MLOps orchestration. SageMaker supports the entire ML lifecycle, from data labeling through deployment, across frameworks. Lightning specializes in PyTorch, with deeper framework integration through PyTorch Lightning and direct Meta collaboration. SageMaker provides breadth across frameworks and lifecycle stages; Lightning provides PyTorch depth with interactive distributed training and RL support. Organizations with multi-framework needs will likely favor SageMaker, while PyTorch-focused teams may prefer Lightning.

vs. Jupyter/Local Development: Local Jupyter notebooks with manual GPU provisioning require extensive setup, including CUDA installation, driver configuration, environment management, and manual scaling for distributed training. Lightning automates the infrastructure while keeping a familiar notebook interface through Lightning Notebooks. The trade-off is maximum control and flexibility (local Jupyter) versus convenience and infrastructure automation (Lightning). Local development allows offline work and complete environment control, while Lightning offers instant GPU access and distributed training without setup.

vs. GitHub Copilot: GitHub Copilot ($10/month for individuals, $19/user/month for business) provides AI code completion in VS Code, Visual Studio, JetBrains IDEs, and Neovim, with a choice of models including GPT-4o, Claude 3.5 Sonnet, Gemini 2.0 Flash, GPT-5, and GPT-5 Mini. Copilot announced “agent mode” in February 2025 for autonomous task completion. In May 2025 it announced a “coding agent” for asynchronous pull request generation, powered by GitHub Actions. Copilot focuses on code generation and completion across all programming languages, without infrastructure integration. Lightning provides PyTorch-specific AI assistance integrated with training infrastructure, GPU provisioning, and deployment, covering the complete PyTorch development lifecycle. The tools are complementary: Copilot for general coding, Lightning for PyTorch ML workflows.

vs. Weights & Biases (W&B): W&B provides experiment tracking, model versioning, hyperparameter optimization, and collaboration tools integrated with PyTorch and other frameworks. It focuses on experiment management and tracking, without providing compute infrastructure or deployment. Lightning provides an end-to-end platform that includes compute, training, and deployment alongside experiment tracking. The tools are complementary: W&B can handle experiment tracking while Lightning supplies compute and deployment.

vs. DataRobot and H2O.ai: Enterprise AutoML platforms emphasize automated machine learning, model deployment, and MLOps for business users and citizen data scientists. DataRobot provides automated feature engineering, model selection, and deployment without code. H2O.ai offers AutoML, explainable AI, and enterprise MLOps. Lightning emphasizes developer control, research flexibility, and PyTorch-native workflows while handling infrastructure automation. AutoML platforms target business analysts and citizen data scientists; Lightning targets ML engineers and researchers requiring code-level control.

Key Differentiators: Lightning’s core differentiation combines several dimensions:
- exclusive PyTorch optimization across the entire development stack;
- Monarch integration, through the Meta partnership, enabling interactive distributed training at cluster scale;
- a unified platform that ends tool fragmentation across coding, training, and deployment;
- enterprise-grade compliance features (SOC2, HIPAA) with private deployments;
- multi-cloud flexibility that avoids lock-in to a single cloud provider;
- the PyTorch Lightning framework’s established community of 2+ million users;
- direct collaboration with Meta’s PyTorch team on the latest framework features.

None of the competitors compared here combines all of these dimensions specifically for the PyTorch ecosystem.

Pricing and Access

Lightning operates on a credit-based consumption model, with a free tier for evaluation and paid tiers for production use.

Free Tier: Start developing free with community features, limited GPU hours for experimentation, and basic environments. Suitable for learning, prototyping, and small-scale experimentation.

Pay-as-You-Go Credits: Purchase credits for GPU compute (per-second billing), model API access, and storage. Per-second GPU billing enables cost optimization through interruptible instances and precise usage tracking. Credit system allows flexible consumption without monthly minimums.

Specific Pricing Transparency: Exact credit costs per GPU hour for each accelerator type (A100, H100, H200, T4), per-provider model API pricing, and deployment costs are not published on the public website. Detailed pricing requires account creation and contact with sales.

Enterprise Custom Pricing: Private deployments, compliance features (SOC2, HIPAA), dedicated support, and volume discounts are available to enterprise customers through custom arrangements with the sales team.

No Platform Subscription: Colab Pro ($9.99/month) and GitHub Copilot ($10/month) charge a fixed monthly fee regardless of usage. Lightning instead charges for actual resource consumption through credits.

Cost Comparison Context: Google Colab Pro offers T4/V100 GPUs for $9.99/month with usage limits. Lightning’s multi-cloud GPU marketplace uses per-second billing, which can be more cost-effective for intermittent usage. It also offers premium accelerators (H100, H200) that are unavailable in Colab.

Technical Architecture and Platform Details

Browser-Based Interface: Lightning Studios (IDE-like experience with multi-panel interface) and Notebooks (Jupyter-like notebook experience) accessible through modern browsers at lightning.ai. Persistent sessions survive browser closures without losing computational state.

PyTorch Lightning Integration: Builds directly on the established PyTorch Lightning framework, used by 2+ million developers since 2019, and extends it with AI assistance, infrastructure automation, and deployment capabilities.

Monarch Partnership: Official integration with Meta’s Monarch distributed training framework, announced at PyTorch Conference 2025. It enables cluster-scale development while keeping an interactive feedback loop, through persistent processes and an actor-based distributed computing model.

Multi-Cloud GPU Access: Integrates AWS EC2 GPU instances (A100, H100), Google Cloud TPU/GPU, Lambda Labs, Nebius, Voltage Park, Nscale, and Lightning’s proprietary cloud infrastructure. Per-second billing enables cost optimization for short-running experiments.

TorchForge Support: Native compatibility with TorchForge, Meta’s new PyTorch-native RL framework, which is built on Monarch and was announced at PyTorch Conference 2025. TorchForge provides a clean interface for RL algorithm development.

OpenEnv Standard: Supports OpenEnv, Meta’s new open standard for packaging, scaling, and sharing reinforcement learning environments, announced at PyTorch Conference 2025.

LitServe Deployment: Production inference framework supporting OpenAI-compatible API endpoints for easy integration, quantization for model compression, batch processing for throughput optimization, and monitoring for production observability.

Model API Integration: Standardized interface for accessing external models (GPT-4, Claude, Mistral, Gemini, Llama, open-source models from Hugging Face) alongside local models with unified authentication and billing.

Version Control: Git integration for source code management, environment versioning for reproducibility, and collaborative development workflows.

Company Background and Partnerships

Lightning AI was founded in 2019 by William Falcon (CEO), creator of the open-source PyTorch Lightning framework used by 2+ million developers. Falcon did his PhD research at NYU’s CILVR Lab under Kyunghyun Cho and Yann LeCun, with funding from NSF and Google DeepMind. He previously worked at Facebook AI Research.

The Lightning AI team includes Luca Antiga (CTO), who chairs the PyTorch Foundation’s Technical Advisory Council and co-authored the book “Deep Learning with PyTorch”, bringing deep PyTorch expertise to the company’s leadership.

Recent partnerships announced at PyTorch Conference 2025 (October 22-23, 2025) include direct collaboration with Meta’s PyTorch team on Monarch integration, TorchForge RL framework support, and the OpenEnv standardization initiative. These partnerships indicate tight alignment with the PyTorch ecosystem roadmap and Meta’s research direction.

The October 2025 announcements at PyTorch Conference position Lightning AI as an essential infrastructure provider for PyTorch development, beyond the open-source PyTorch Lightning framework.

Launch Reception and Market Position

The announcements at PyTorch Conference (October 22-23, 2025) were well received by the PyTorch and AI research communities. Praise focused on four points:
- Lightning is the first platform to integrate Monarch, making large-scale distributed training interactive through persistent processes.
- A unified platform ends tool fragmentation across coding, training, and deployment.
- Direct Meta collaboration provides early access to the latest frameworks (TorchForge, OpenEnv, Monarch).
- Enterprise features address production requirements (SOC2 and HIPAA compliance, private deployments).

PyTorch community members highlighted the value of interactive distributed training enabled by the Monarch integration, which removes the traditional batch-job overhead of full cluster restarts for code changes. Researchers appreciated the Lightning Environments hub for reproducible research configurations and collaborative experimentation.

Enterprise organizations noted the importance of compliance features (SOC2, HIPAA) and private deployment options for regulated industries previously underserved by cloud-based ML platforms.

Important Caveats and Realistic Assessment

PyTorch Lock-in: The platform optimizes exclusively for PyTorch. Organizations with multi-framework strategies (for example, TensorFlow for production and PyTorch for research) must integrate separate tools rather than rely on a single unified platform.

Cloud Infrastructure Dependency: All features require cloud connectivity and infrastructure provisioning. Offline or local-only development is not supported, except through private deployment arrangements for enterprise customers.

Emerging Complexity: Multiple advanced features (Monarch distributed training, TorchForge RL, OpenEnv environments) add platform complexity. The learning curve is steep for distributed training concepts, RL fundamentals, and the Lightning ecosystem architecture.

Pricing Transparency: Although the credit-based system with per-second billing is logical in concept, rates for specific GPU types, model APIs, and storage are not published. That makes costs difficult to project before creating an account and committing to usage.

Production Maturity Unproven for New Features: The new capabilities (TorchForge, OpenEnv support, Monarch integration, Environments hub) launched in October 2025, so they have a limited production track record. The core PyTorch Lightning framework is proven with 2+ million users, but the new platform features need time to mature.

Enterprise Friction: Private deployments and compliance features require custom arrangements through the sales team rather than self-serve provisioning. This increases adoption friction and sales-cycle length for enterprise customers.

Vendor Dependency Risk: The platform supports code export and uses standard PyTorch code. Even so, organizations that build on the Lightning ecosystem (Environments, deployment pipelines, cost management) incur switching costs if they migrate away.

Rapid Evolution Challenges: Active development and October 2025 feature announcements indicate rapid evolution. Organizations requiring stable, unchanging platforms may face challenges with API changes or workflow modifications.

Final Assessment

Lightning is a comprehensive platform that addresses fragmentation in PyTorch development workflows. Its unified environment combines AI-assisted coding, distributed training infrastructure, reinforcement learning frameworks, and production deployment. It consolidates previously separate tools (ChatGPT for coding, Jupyter/Colab for notebooks, cloud consoles for GPU provisioning, deployment platforms) into a single PyTorch-optimized platform. As a result, Lightning removes context switching and lets developers focus on model development and research rather than infrastructure configuration.

The platform’s greatest strategic strengths are:
- exclusive PyTorch optimization across the complete development stack, from coding through deployment;
- interactive cluster-scale development through the Monarch integration;
- a unified platform consolidating fragmented development tools;
- direct collaboration with Meta’s PyTorch team, providing early access to the latest frameworks (TorchForge, OpenEnv, Monarch);
- enterprise-grade compliance features (SOC2, HIPAA) with private deployments for regulated industries;
- multi-cloud flexibility that avoids lock-in to a single cloud provider;
- a 40% throughput improvement for PyTorch workloads from kernel optimizations, per Lightning AI’s benchmarks;
- an established community of 2+ million PyTorch Lightning users;
- the Lightning Environments hub for reproducible research and collaborative experimentation.

However, prospective users should approach with realistic expectations about:
- PyTorch exclusivity, which limits multi-framework organizations;
- cloud infrastructure dependency, which prevents offline development;
- the limited maturity of features announced in October 2025 (Monarch, TorchForge, OpenEnv, Environments hub);
- pricing that requires account creation and sales contact for detailed costs;
- rapid evolution that may introduce breaking changes;
- integration complexity for advanced distributed training features;
- enterprise adoption friction from custom arrangement requirements;
- vendor dependency risks for organizations that integrate deeply with the platform.

Lightning appears optimally positioned for PyTorch researchers and developers working on distributed training requiring interactive development, organizations building production ML systems with PyTorch and compliance requirements (healthcare, finance, regulated industries), teams adopting reinforcement learning with TorchForge and OpenEnv standardization, enterprises seeking multi-cloud flexibility without single provider lock-in, developers valuing unified workflows over independent tool optimization, ML engineers frustrated by development tool fragmentation and infrastructure complexity, research teams requiring reproducible environments through Environments hub, and organizations prioritizing PyTorch ecosystem alignment over multi-framework support.

It may be less suitable for:
- organizations that need multi-framework support (TensorFlow, JAX, MXNet alongside PyTorch);
- teams preferring local-only development without cloud dependency, for security or offline requirements;
- early adopters uncomfortable with frameworks still maturing (Monarch, TorchForge, OpenEnv, launched October 2025);
- cost-sensitive organizations that need transparent pricing before creating an account;
- teams requiring established platforms with extensive production history for every feature;
- organizations preferring maximum tool independence without vendor platform dependencies;
- educational institutions seeking free GPU access, for which Google Colab is more appropriate;
- AutoML-focused organizations, for which DataRobot or H2O.ai are better suited.

Consider PyTorch developers frustrated by tool fragmentation (separate tools for coding, GPU provisioning, training, and deployment), by infrastructure complexity that distracts from model development, by the lack of interactive distributed training at scale, or by the absence of a unified platform for RL research with TorchForge and OpenEnv. For them, Lightning offers a genuinely innovative approach that combines interactivity, scale, AI assistance, and compliance in a single platform optimized for the PyTorch ecosystem. Its positioning as essential infrastructure for the next generation of PyTorch-based AI development reflects Lightning AI’s strategy: extending the open-source PyTorch Lightning framework with a commercial cloud platform that covers the complete development lifecycle.