Overview

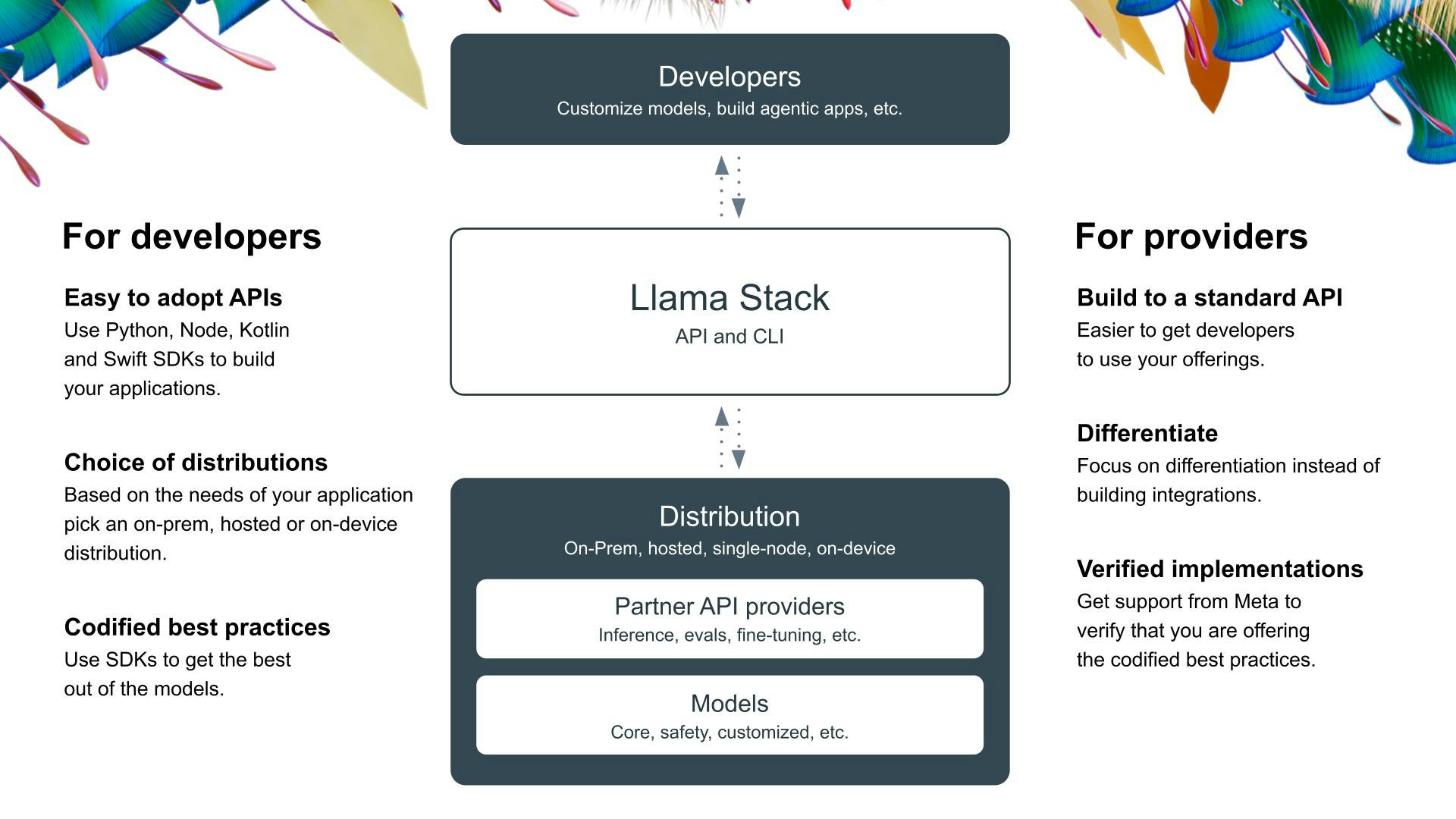

In the rapidly evolving world of generative AI, developers are constantly looking for tools that streamline building and deploying intelligent applications. Llama Stack, an open-source framework from Meta, is designed for exactly that. It defines a standard set of APIs for the core building blocks of generative AI applications, such as inference, Retrieval-Augmented Generation (RAG), agents, and telemetry, with the goal of making it easier to build applications on Llama models. Let’s look at what makes Llama Stack a contender in the AI development landscape.

Key Features

Llama Stack boasts a comprehensive suite of features designed to empower developers at every stage of the AI application lifecycle. Here’s a breakdown of its key capabilities:

- Standardized API for generative AI development: Provides a consistent interface for interacting with Llama models, simplifying integration and reducing code complexity.

- Unified components for Inference, RAG, Agents, and more: Offers pre-built modules for common AI tasks, such as inference, Retrieval-Augmented Generation (RAG), and agent execution, accelerating development.

- Plugin architecture supporting varied environments: The implementation behind each API (the provider) can be swapped, so the same application code can be deployed to cloud, on-premises, or edge environments.

- CLI and SDKs for Python, Node.js, iOS, and Android: Offers development tools for a range of platforms, catering to diverse developer preferences.

- Verified distributions for easy setup: Simplifies the installation and configuration process, allowing developers to get started quickly.

- Security focus with timely vulnerability patching: Security issues are monitored and patched quickly, helping keep applications built on the stack secure.
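To make the "standardized API plus plugin architecture" idea concrete, here is a minimal, hypothetical sketch in plain Python. It is not Llama Stack's actual API: the names `InferenceProvider`, `PROVIDER_REGISTRY`, `build_provider`, the `inference_provider` config key, and both toy providers are illustrative assumptions. The point it shows is that application code depends only on a shared interface, while a configuration value decides which backend is plugged in.

```python
from typing import Protocol


class InferenceProvider(Protocol):
    """Hypothetical shared interface that every backend must satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoLocalProvider:
    """Toy stand-in for a local or edge backend (assumption, not a real provider)."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class UppercaseRemoteProvider:
    """Toy stand-in for a hosted or cloud backend (assumption, not a real provider)."""

    def complete(self, prompt: str) -> str:
        return f"[remote] {prompt.upper()}"


# Maps a config value to a provider class; swapping backends is a config change.
PROVIDER_REGISTRY: dict[str, type] = {
    "local": EchoLocalProvider,
    "remote": UppercaseRemoteProvider,
}


def build_provider(config: dict) -> InferenceProvider:
    """Instantiate the provider named in the (hypothetical) config dict."""
    name = config["inference_provider"]
    provider_cls = PROVIDER_REGISTRY.get(name)
    if provider_cls is None:
        raise ValueError(f"unknown provider: {name!r}")
    return provider_cls()


def answer(provider: InferenceProvider, question: str) -> str:
    # Application code sees only the interface, never a concrete backend.
    return provider.complete(question)
```

Calling `answer(build_provider({"inference_provider": "local"}), "hi")` and then the same call with `"remote"` runs identical application code against two different backends; only the configuration changes.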

How It Works

Llama Stack simplifies AI application development by abstracting away the complexities of deployment and integration. Developers use the Llama Stack APIs and SDKs to build applications that work with Meta’s Llama models. The stack integrates essential tools for inference, agent execution, and telemetry, so applications behave consistently across platforms and environments. This lets developers focus on the application itself rather than on infrastructure management.
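The interplay of inference, agent execution, and telemetry can be illustrated with a generic agent loop. This is a hedged, self-contained sketch, not Llama Stack's agent implementation: `scripted_model` is a hypothetical stand-in for a real inference call, `get_time_zone` is a made-up tool, and `Telemetry` is a toy recorder of timed events. The loop asks the model for a turn, runs any requested tool, feeds the result back, and records each step.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelTurn:
    """One model response: either final text or a request to call a tool."""
    text: str
    tool_name: Optional[str] = None
    tool_arg: Optional[str] = None


def scripted_model(history: list[str]) -> ModelTurn:
    # Hypothetical stand-in for inference: request a tool once, then answer.
    if not any(entry.startswith("tool:") for entry in history):
        return ModelTurn(text="", tool_name="get_time_zone", tool_arg="Paris")
    return ModelTurn(text=f"Answer based on {history[-1]}")


# Hypothetical tool table: tool name -> callable taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_time_zone": lambda city: {"Paris": "Europe/Paris"}.get(city, "unknown"),
}


@dataclass
class Telemetry:
    """Toy telemetry sink: stores (event name, elapsed seconds) pairs."""
    events: list[tuple[str, float]] = field(default_factory=list)

    def record(self, name: str, start: float) -> None:
        self.events.append((name, time.perf_counter() - start))


def run_agent(question: str, model, tools, telemetry: Telemetry, max_steps: int = 5) -> str:
    history = [f"user:{question}"]
    for _ in range(max_steps):
        start = time.perf_counter()
        turn = model(history)  # inference step
        telemetry.record("inference", start)
        if turn.tool_name is None:
            return turn.text  # model produced a final answer
        start = time.perf_counter()
        result = tools[turn.tool_name](turn.tool_arg)  # tool execution step
        telemetry.record(f"tool:{turn.tool_name}", start)
        history.append(f"tool:{result}")  # feed the tool result back to the model
    raise RuntimeError("agent did not finish within max_steps")
```

Running `run_agent("What time zone is Paris in?", scripted_model, TOOLS, Telemetry())` performs one inference step, one tool call, and a second inference step before returning the final answer. The step limit is a simple guard against a model that never stops requesting tools.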

Use Cases

Llama Stack opens up a wide range of possibilities for AI application development. Here are a few compelling use cases:

- Developing AI chatbots with Llama models to provide intelligent and engaging customer service experiences.

- Creating RAG-based search tools that leverage external knowledge sources to deliver more accurate and relevant search results.

- Building secure and scalable AI agents for mobile and enterprise applications, automating tasks and improving efficiency.

- Running on-premises AI applications in regulated industries, enabling secure and compliant AI deployments in sensitive environments.

- Prototyping and deploying edge AI services, bringing AI capabilities closer to the data source for faster and more efficient processing.
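The RAG use case boils down to two steps: retrieve the passages most relevant to a query, then place them in the prompt sent to the model. The sketch below is a generic illustration of that pattern, not Llama Stack's RAG component. As a simplifying assumption it swaps in bag-of-words cosine similarity where a real system would typically use embedding vectors and a vector store; `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model's answer in the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

With a small knowledge base such as a refund policy, holiday hours, and shipping times, the query "How long do refunds take?" retrieves the refund passage first, and the resulting prompt would then be passed to an inference call.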

Pros & Cons

Like any technology, Llama Stack has its strengths and weaknesses. Let’s weigh the advantages and disadvantages.

Advantages

- Standardization reduces integration burden, simplifying the development process.

- Wide SDK support caters to diverse developer preferences and platforms.

- Modular and extensible architecture allows for customization and adaptation.

- Security patches are actively maintained, helping keep AI applications secure.

Disadvantages

- Tied closely to Meta’s ecosystem, potentially limiting flexibility for developers using other models.

- May present a steep learning curve for new users unfamiliar with the Llama Stack framework.

How Does It Compare?

When evaluating AI development frameworks, it’s important to consider the alternatives.

- LangChain: Offers broader model compatibility but less tight integration with Meta’s ecosystem compared to Llama Stack.

- Haystack: Provides strong RAG tools but lacks the standardized API structure offered by Llama Stack.

The best choice depends on the specific requirements of your project and your preferred development environment.

Final Thoughts

Llama Stack presents a compelling solution for developers looking to streamline the development of generative AI applications using Llama models. Its standardized API, unified components, and security-focused approach make it a valuable tool for building a wide range of AI solutions. While its close ties to the Meta ecosystem and potential learning curve are worth considering, Llama Stack’s benefits make it a strong contender in the ever-evolving landscape of AI development frameworks.