Overview

Running large language models (LLMs) locally is becoming increasingly important for privacy, security, and offline development. LM Studio is a cross-platform desktop application that runs LLMs directly on your computer. Whether you’re a developer, a researcher, or simply curious about AI, it offers an accessible way to explore large language models without relying on cloud services. Let’s look at what makes LM Studio a compelling tool for local AI experimentation.

Key Features

LM Studio boasts a comprehensive set of features that make it a standout choice for local LLM deployment:

- Run LLMs locally on Windows, macOS, and Linux: Enjoy the flexibility of running powerful AI models on your preferred operating system, ensuring accessibility for a wide range of users.

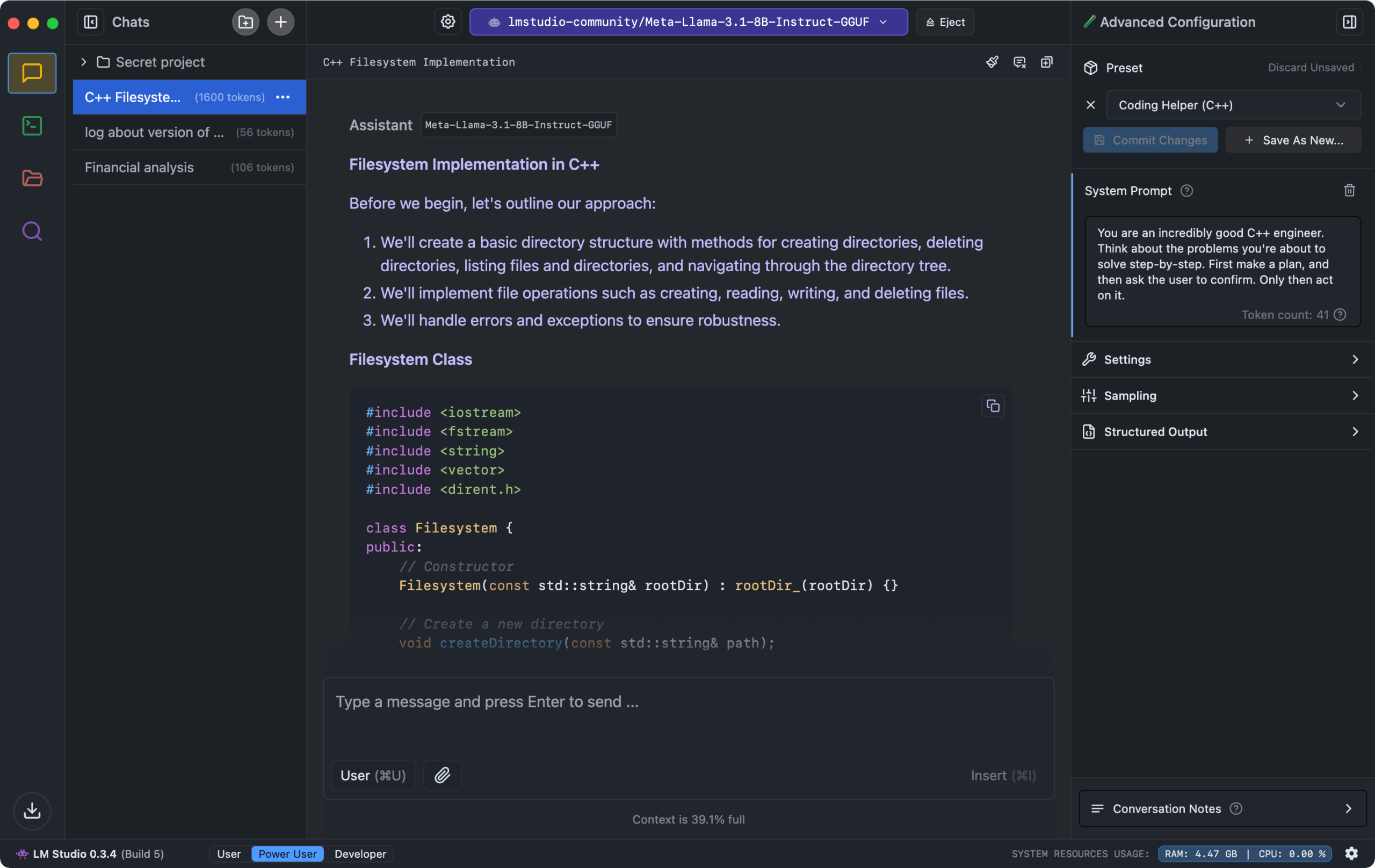

- Chat interface for interacting with models: Engage in interactive conversations with your chosen LLM through a user-friendly chat interface, perfect for testing prompts and exploring model capabilities.

- Integration with local documents using RAG: Leverage Retrieval-Augmented Generation (RAG) to ground your LLM in your own data, enabling powerful document-based question answering and information retrieval.

- OpenAI-compatible API server: Seamlessly integrate LM Studio with your existing applications by utilizing its OpenAI-compatible API server, allowing you to swap out cloud-based models with your local instance.

- Support for GGUF and MLX model formats: LM Studio supports the popular GGUF and MLX model formats (MLX targets Apple Silicon Macs), providing access to a large library of pre-trained LLMs available on platforms like Hugging Face.

- SDKs for Python and TypeScript: Develop custom applications and integrations with ease using the provided Python and TypeScript SDKs, empowering you to build tailored AI solutions.
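
To make the RAG idea in the list above concrete, here is a deliberately tiny sketch of the retrieve-then-prompt pattern. It is not LM Studio’s implementation: it ranks text chunks by simple word overlap instead of embeddings, and every function name in it is hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, chunk: str) -> int:
    """Sum, over each distinct query token, how often it occurs in the chunk."""
    chunk_counts = Counter(tokenize(chunk))
    return sum(chunk_counts[tok] for tok in set(tokenize(query)))


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks that overlap most with the query (toy ranking)."""
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Prepend the retrieved chunks to the question as grounding context."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The resulting prompt string would then be sent to the model through the chat interface or the API server. A real pipeline would typically split documents into chunks and rank them with an embedding model rather than word counts.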

How It Works

Getting started with LM Studio is a straightforward process. First, you’ll need to download and install the application on your operating system. Once installed, you can browse and download compatible LLMs from sources like Hugging Face directly within the LM Studio interface. After downloading a model, you can interact with it through the built-in chat UI, experimenting with different prompts and settings. Alternatively, you can set up a local OpenAI-compatible API server, allowing you to integrate the LLM into your own applications as if it were a cloud-based service. This flexibility makes LM Studio a valuable tool for both casual experimentation and serious development.
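
As a rough sketch of the API-server route, the following builds a chat completion request using only the Python standard library. It assumes the server is running at LM Studio’s default address, http://localhost:1234/v1, and that a model is already loaded. The model identifier is a placeholder, and `build_chat_request` and `chat` are illustrative names, not part of LM Studio.

```python
import json
import urllib.request

# Assumption: the local server listens on LM Studio's default port, 1234.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(
    prompt: str, model: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # placeholder: use the identifier of a loaded model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str) -> str:
    """Send the request to the local server and return the reply text."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-compatible responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat("Say hello in five words.", model="your-model-identifier")` would return the model’s reply as a string.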

Use Cases

LM Studio’s versatility makes it suitable for a wide range of applications:

- Offline AI development: Develop and test AI applications without an internet connection once your models are downloaded, ensuring privacy and security for sensitive projects.

- Local data processing for privacy: Process sensitive data locally, keeping it within your control and avoiding the risks associated with cloud-based processing.

- Application integration via OpenAI-compatible API: Integrate LLMs into your existing applications using the familiar OpenAI API, simplifying the transition from cloud to local deployment.

- Model testing and research: Compare different LLMs and tune prompts and generation settings for specific tasks, supporting evaluation and research work.
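
Because the API is OpenAI-compatible, moving an application from cloud to local can come down to changing a base URL. The sketch below shows one way to make that switch configurable. The environment variable names `LLM_BACKEND` and `LOCAL_LLM_URL` are hypothetical, and it assumes the local server does not check the API key.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Endpoint:
    """Connection settings for an OpenAI-compatible server."""

    base_url: str
    api_key: str


def resolve_endpoint(env: Optional[Mapping[str, str]] = None) -> Endpoint:
    """Pick the cloud or local endpoint from environment-style settings."""
    env = os.environ if env is None else env
    # LLM_BACKEND is a hypothetical switch; anything but "cloud" means local.
    if env.get("LLM_BACKEND", "local") == "cloud":
        return Endpoint("https://api.openai.com/v1", env["OPENAI_API_KEY"])
    # Assumption: the local server ignores the key, so a placeholder is used.
    local_url = env.get("LOCAL_LLM_URL", "http://localhost:1234/v1")
    return Endpoint(local_url, "not-needed")
```

The two fields map onto the `base_url` and `api_key` arguments that the official OpenAI Python client accepts, so the rest of the application code can stay unchanged.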

Pros & Cons

Like any tool, LM Studio has its strengths and weaknesses. Understanding these can help you determine if it’s the right choice for your needs.

Advantages

- Offline operation: Run LLMs without an internet connection, ensuring privacy and accessibility.

- Cross-platform: Compatible with Windows, macOS, and Linux, catering to a diverse user base.

- OpenAI API-compatible: Seamlessly integrate with existing applications using the familiar OpenAI API.

- Local document support: Leverage RAG to ground your LLMs in your own data, enabling powerful document-based applications.

Disadvantages

- Resource-heavy: Running LLMs locally can require significant computational resources, potentially straining older or less powerful machines.

- GUI not open-source: While the application is free to use, the GUI is not open-source, which may be a concern for some users.

- Some learning curve: While generally user-friendly, setting up and configuring LLMs can require some technical knowledge.
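
To get a feel for the resource cost, a back-of-the-envelope estimate of the memory needed for model weights is parameter count times bits per weight. The helper below is only a rough sketch with a hypothetical name: it ignores the context cache and runtime overhead, and real quantization formats often use somewhat more than their nominal bit width.

```python
def estimate_weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# A 7-billion-parameter model at two precisions:
four_bit = estimate_weight_memory_gb(7, 4)      # about 3.5 GB of weights
sixteen_bit = estimate_weight_memory_gb(7, 16)  # about 14 GB of weights
```

Treat the result as a lower bound when deciding whether a model will fit in your RAM or VRAM.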

How Does It Compare?

When considering local LLM deployment, it’s important to compare LM Studio with its competitors.

- Ollama: Ollama offers a simpler and more streamlined experience but lacks some of the features built into LM Studio, such as RAG integration. Note that Ollama does provide its own official Python and JavaScript libraries.

- GPT4All: GPT4All is known for its user-friendly interface, making it a good choice for beginners. However, it offers fewer advanced capabilities compared to LM Studio.

LM Studio strikes a balance between ease of use and advanced functionality, making it a compelling choice for users who need more than just basic LLM interaction.

Final Thoughts

LM Studio is a powerful and versatile tool for anyone looking to run large language models locally. Its cross-platform compatibility, OpenAI API integration, and local document support make it a standout choice for developers, researchers, and anyone interested in exploring the world of AI. While it may require some computational resources and has a slight learning curve, the benefits of offline operation and data privacy make LM Studio a valuable addition to any AI enthusiast’s toolkit.