Overview

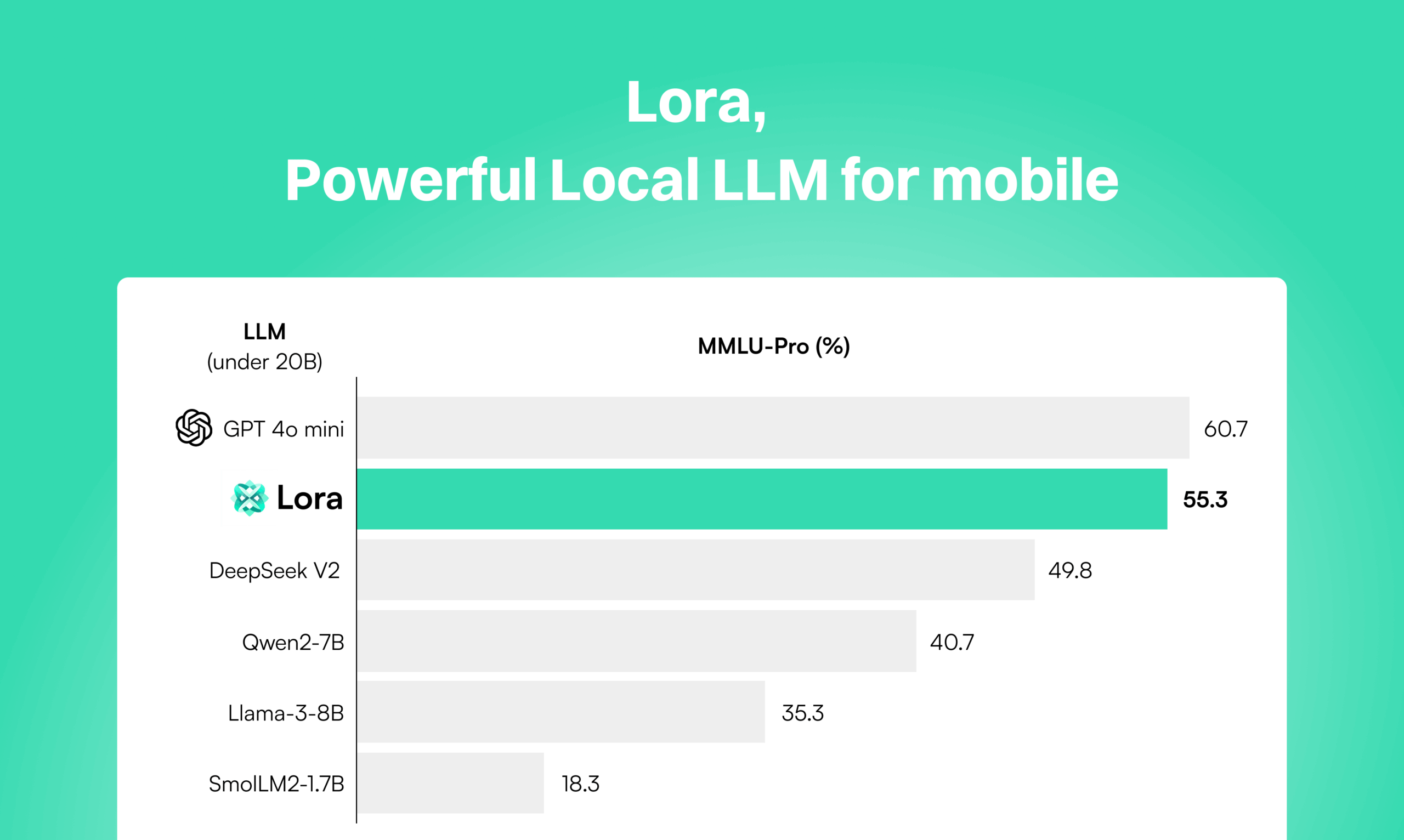

As concern over data privacy grows, Lora takes a different route from cloud-based assistants. It is a privacy-first local large language model (LLM) designed specifically for mobile devices, positioned as comparable in performance to GPT-4o-mini while keeping all data on the device. The model runs on the phone itself, so prompts and responses are never sent to the cloud. Here is what makes Lora a compelling option for developers and users alike.

Key Features

Lora boasts a powerful set of features focused on privacy and accessibility:

- Local LLM for mobile: Lora is built to run directly on mobile devices, eliminating the need for cloud connectivity and ensuring data stays on the device.

- Offline support including airplane mode: Enjoy uninterrupted AI functionality even without an internet connection, perfect for travel or areas with limited connectivity.

- No data logging for privacy: Lora does not log user data, so interactions stay private to the device.

- SDK for custom app integration: Developers can easily integrate Lora into their mobile applications using a dedicated SDK, enabling custom AI-powered experiences.

- Comparable to GPT-4o-mini: Lora is positioned as delivering performance similar to GPT-4o-mini while running entirely on the mobile device.

How It Works

Lora performs optimized inference locally on the mobile device. Instead of sending requests to a remote server, the LLM runs entirely on the device’s hardware. Developers integrate Lora into their mobile apps through the provided SDK, which lets them embed private, AI-generated responses and features directly in their applications, even when the device is offline. Because no network round trip is involved, responses do not depend on connection quality, and the data never leaves the device.
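
The integration pattern described here can be sketched roughly as follows. This is not Lora’s SDK: the article does not show its API, so `OnDeviceModel`, `StubModel`, and `ChatSession` are hypothetical names, and the stub merely stands in for a real on-device engine. The sketch only illustrates the shape of the design: the app calls a local object rather than a remote endpoint, so the same call works offline.

```python
from dataclasses import dataclass, field
from typing import Protocol


class OnDeviceModel(Protocol):
    """Hypothetical interface that an on-device LLM SDK might expose."""

    def generate(self, prompt: str) -> str: ...


class StubModel:
    """Stand-in for a real local engine; it only echoes the last prompt line."""

    def generate(self, prompt: str) -> str:
        last_line = prompt.strip().splitlines()[-1]
        return f"(local reply to: {last_line})"


@dataclass
class ChatSession:
    """Keeps the conversation in memory on the device; no network calls are made."""

    model: OnDeviceModel
    history: list[str] = field(default_factory=list)

    def ask(self, message: str) -> str:
        self.history.append(f"user: {message}")
        # The prompt is built and consumed locally, in the same process.
        reply = self.model.generate("\n".join(self.history))
        self.history.append(f"assistant: {reply}")
        return reply


session = ChatSession(model=StubModel())
print(session.ask("Summarize my notes"))
```

Because `ChatSession` depends only on the `OnDeviceModel` interface, an app written this way could swap the stub for a real local engine without changing the calling code.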

Use Cases

Lora opens up a wide range of possibilities for different users:

- Developers creating AI-powered mobile apps: Integrate Lora into your mobile apps to provide intelligent features like chatbots, content generation, and more, all without compromising user privacy.

- Users needing private on-device AI: Individuals who prioritize data privacy can benefit from Lora’s ability to provide AI assistance without sending data to the cloud.

- Enterprise apps requiring local inference without cloud dependence: Businesses can leverage Lora to build secure and compliant mobile applications that require local inference, reducing reliance on cloud services.

Pros & Cons

Lora offers a compelling value proposition, but it’s important to consider both its strengths and limitations.

Advantages

- Full privacy with no data sent to cloud.

- Runs offline, ensuring accessibility even without an internet connection.

- Lightweight yet performant, delivering a smooth user experience.

Disadvantages

- Model size is constrained by the device’s memory and storage, so it may be smaller than cloud LLMs.

- Currently mobile-specific, limiting its use on other platforms.
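
To see why model size is the main constraint on a phone, a rough estimate of weight memory helps: parameter count times bits per weight, divided by 8 to get bytes. The parameter counts and quantization levels below are illustrative assumptions, not Lora’s published specifications, and the estimate ignores the extra memory needed for activations and the KV cache.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical model sizes and quantization levels, chosen only for illustration.
for params in (1.5e9, 7e9):
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.1f}B params at {bits}-bit: about {gb:.2f} GB")
```

Under these assumptions, a 7B-parameter model at 16-bit precision would need about 14 GB for weights alone, which is more than most phones have available, while a smaller 4-bit model stays under 1 GB. This is why on-device models tend to be both small and quantized.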

How Does It Compare?

Lora is not the only way to run an LLM locally, so it is worth comparing it with alternatives such as LM Studio and Ollama. Both run models locally, but neither matches Lora’s mobile-first approach. LM Studio is primarily desktop-focused and lacks the mobile integration Lora offers. Ollama lacks a direct mobile SDK and the offline-first user experience that Lora provides. Lora’s focus on mobile, privacy, and offline functionality sets it apart.

Final Thoughts

Lora offers a private LLM solution for mobile devices. Its ability to run offline, combined with its focus on data privacy, makes it an attractive option for developers and users who prioritize security and control. While it has some limitations compared to cloud-based LLMs, chiefly model size and platform support, its mobile-first design makes it a compelling choice for apps that need on-device AI.