Overview

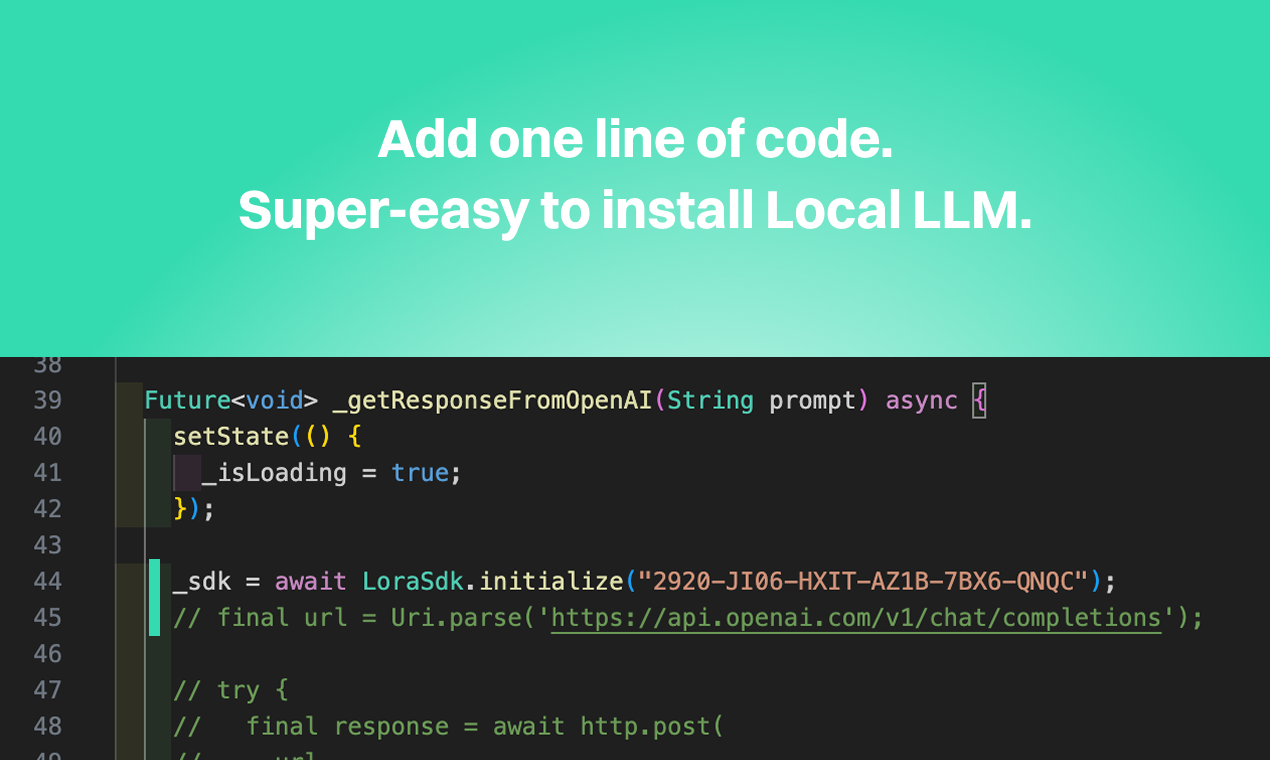

Imagine running a large language model directly inside your Flutter applications, with no cloud connection required. Lora by Peekaboo Labs makes this possible. It is a lightweight LLM that runs locally, and Peekaboo Labs says it delivers GPT-4o-mini-level performance and can be integrated into a project with a single line of code. Let’s look at what makes Lora a strong option for mobile and embedded AI.

Key Features

Lora offers several features aimed at efficient, private on-device AI:

- Local LLM optimized for Flutter: Designed specifically to run efficiently inside Flutter applications, so it fits into existing projects without friction.

- One-line integration: Adding Lora to a project takes a single line of code, so existing apps need minimal changes.

- GPT-4o-mini-class performance: Offers language capabilities comparable to GPT-4o mini, OpenAI’s smaller, lower-cost model.

- Works offline: Operates entirely on the device, eliminating the need for an internet connection and ensuring privacy.

- Low-latency inference: Responds quickly because there is no network round trip, which matters for real-time applications.

How It Works

Lora’s appeal lies in its simplicity and efficiency. Developers add Lora to a Flutter app with a single line of code, and the model then runs locally on the device, enabling real-time, privacy-preserving inference. It is optimized for ARM processors and low-resource environments, which makes it suitable for mobile and edge AI. Because inference happens on the device, the app does not need to exchange data with a cloud server, which reduces latency and keeps user data private.
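To make the latency trade-off concrete, here is a back-of-the-envelope sketch in Python. It is not Lora’s API: the function name, parameters, and numbers are hypothetical. It models response time as a network round trip, plus prompt processing (prefill), plus token generation (decode). Running on the device sets the network term to zero, while the prefill and decode rates depend on the device’s hardware.

```python
def response_time_s(prompt_tokens: int,
                    output_tokens: int,
                    prefill_tok_per_s: float,
                    decode_tok_per_s: float,
                    network_rtt_s: float = 0.0) -> float:
    """Rough end-to-end response time in seconds (hypothetical model, not Lora's API).

    network_rtt_s: round trip to a remote server; 0.0 for on-device inference.
    prefill_tok_per_s: how fast the model processes the prompt.
    decode_tok_per_s: how fast the model generates output tokens.
    """
    prefill_s = prompt_tokens / prefill_tok_per_s
    decode_s = output_tokens / decode_tok_per_s
    return network_rtt_s + prefill_s + decode_s


if __name__ == "__main__":
    # Illustrative rates only; measure real throughput on your target device.
    local = response_time_s(prompt_tokens=200, output_tokens=50,
                            prefill_tok_per_s=400, decode_tok_per_s=25)
    print(f"on-device estimate: {local:.2f} s")  # 0.5 s prefill + 2.0 s decode
```

Removing the network term is where local inference saves time. The decode rate is where a phone’s limited compute shows up, so both fast responses and device-bound performance follow from the same formula.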

Use Cases

Lora opens up a wide array of possibilities for on-device AI:

- Offline AI chatbots: Create intelligent chatbots that function even without an internet connection.

- Real-time language processing in apps: Enable instant translation, summarization, and other language-based features within your mobile applications.

- Smart assistants for mobile: Develop personalized assistants that understand and respond to user commands directly on their devices.

- On-device summarization and translation: Condense long texts or translate between languages without relying on external services.

- Embedded system NLP: Integrate natural language processing capabilities into embedded systems for enhanced control and interaction.

Pros & Cons

Like any technology, Lora involves trade-offs. Here are its main advantages and disadvantages.

Advantages

- Fully local: Operates entirely on the device, ensuring data privacy and eliminating reliance on cloud services.

- Fast response time: Local processing results in low latency and quick responses.

- Privacy-focused: Keeps user data secure by processing it directly on the device.

- Easy integration: Simple one-line integration makes it easy to add AI capabilities to Flutter apps.

Disadvantages

- Limited by device compute power: Speed and the size of model that can run are constrained by the device’s processor and memory.

- Smaller model capacity vs. cloud LLMs: A model small enough to run on a phone has far fewer parameters than large cloud-hosted LLMs, which limits how much it knows and how well it reasons.

- Flutter-specific: Currently limited to Flutter applications, restricting its use in other development environments.
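The first two disadvantages come down largely to memory. A common rule of thumb is that a model’s weights occupy roughly (parameter count × bits per weight ÷ 8) bytes. The source does not state Lora’s parameter count or quantization, so the model sizes below are hypothetical examples, not Lora’s specifications. The estimate covers weights only and ignores the KV cache, activations, and runtime overhead, so real usage is higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes the KV cache, activations, and runtime overhead.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes for illustration; not Lora's actual specs.
    for label, params, bits in [
        ("3B params, 4-bit", 3e9, 4),
        ("3B params, 16-bit", 3e9, 16),
        ("70B params, 16-bit", 70e9, 16),
    ]:
        print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

A 4-bit model with 3B parameters needs about 1.5 GB for its weights, which fits in a modern phone’s RAM. A 16-bit model with 70B parameters needs about 140 GB, which does not. This gap is why cloud LLMs can be far larger than anything that runs on the device.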

How Does It Compare?

Lora is a compelling option for on-device AI, but it is worth comparing it with the alternatives:

- EdgeGPT: Offers broader platform support, making it compatible with more development environments.

- Ollama: Provides a larger selection of models, giving developers more flexibility in choosing the right model for their needs.

- WhisperKit: Specializes in speech processing, whereas Lora is a more general-purpose LLM.

Final Thoughts

Lora by Peekaboo Labs is a promising option for developers who want to add AI capabilities to Flutter applications. Its easy integration, focus on privacy, and strong advertised performance make it a compelling choice for on-device AI. It cannot match cloud-based solutions on compute power or model capacity, but it offers clear benefits for offline use and data privacy. If you’re building Flutter apps and need local LLM capabilities, Lora is worth exploring.
