
Overview

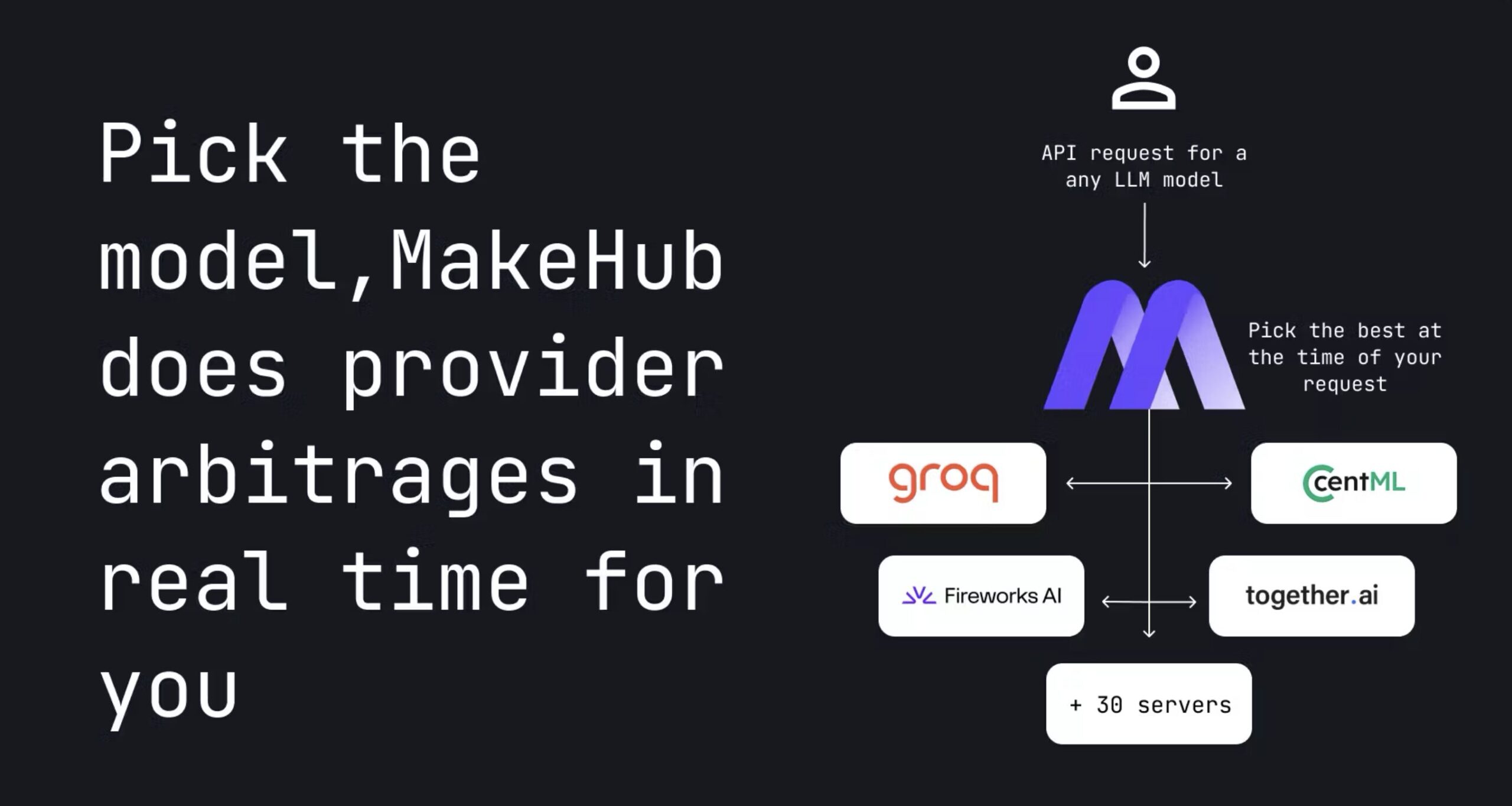

Deployment cost and performance tuning are persistent problems for developers and enterprises building on large language models. The number of models and pricing schemes keeps growing, with providers ranging from OpenAI and Anthropic to open-source alternatives, so teams must choose a model and provider while balancing performance against budget. MakeHub.ai addresses this with an OpenAI-compatible endpoint: a single API that routes each request, in real time, to the provider that currently offers the best combination of cost and performance. It continuously benchmarks providers on price, latency, and load, and uses those measurements to replace static provider selection with data-driven routing.

Key Features

MakeHub.ai delivers a comprehensive suite of advanced capabilities designed to optimize LLM operations across diverse use cases and organizational requirements.

- Universal OpenAI API Compatibility: An API that mirrors the widely adopted OpenAI specification, so existing projects can integrate with minimal changes, typically by pointing an OpenAI-compatible client at the MakeHub.ai endpoint and supplying a MakeHub.ai API key

- Intelligent Multi-Provider Routing Engine: Advanced algorithmic system that dynamically evaluates and routes requests across diverse LLM providers including OpenAI, Anthropic, Together.ai, Fireworks, DeepInfra, and Azure, ensuring optimal resource allocation for each query

- Continuous Real-Time Benchmarking: Sophisticated background monitoring system that continuously evaluates price, latency, and load metrics across all connected providers, enabling informed routing decisions based on current performance data

- Comprehensive Model Support Architecture: Unified platform supporting both proprietary closed-source models (GPT-4, Claude, Gemini) and open-source alternatives (LLaMA, Mistral, Phi), providing flexibility across the entire AI model spectrum

- Advanced Cost and Performance Optimization: Intelligent decision-making algorithms that balance cost-effectiveness with performance requirements, automatically selecting optimal providers to minimize inference costs while maintaining response quality standards

- Developer Tool Integration: Native compatibility with popular development environments including Roo Code and Cline forks, enabling immediate integration into existing AI-powered development workflows
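
To make the compatibility claim concrete, here is a minimal sketch of what "OpenAI-compatible" usually means in practice: the request shape stays the same and only the base URL and key change. The base URL, key, and model name below are placeholders invented for illustration, not MakeHub.ai's actual values, and the request is only built, never sent.

```python
import json
import urllib.request

# Placeholder base URL for illustration only; the real endpoint is not
# given in this article and should be taken from the official docs.
HYPOTHETICAL_BASE_URL = "https://api.example-router.invalid/v1"


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    HYPOTHETICAL_BASE_URL,
    api_key="sk-placeholder",      # placeholder key
    model="placeholder-model",     # placeholder model name
    messages=[{"role": "user", "content": "Hello"}],
)
print(request.full_url)
```

Under this assumption, switching an existing application to a routed endpoint is a configuration change rather than a rewrite, since the path, headers, and JSON body follow the same convention.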

How It Works

MakeHub.ai combines real-time performance monitoring with a routing layer. Developers integrate through a single API endpoint that is compatible with OpenAI’s standard interface, so adoption requires little or no change to application code beyond configuration. Behind this interface, a monitoring system continuously measures each connected provider’s pricing, response latency, and current load. When a request arrives, the routing engine weighs these metrics against the requirements of the query and any user-defined preferences, then selects a provider. The process is transparent to the caller: the platform handles provider-specific API differences, authentication, and response formatting, and returns responses in a single, consistent format.

Use Cases

MakeHub.ai’s dynamic optimization capabilities address critical challenges across diverse application scenarios and organizational contexts.

- High-Performance AI Application Development: Mission-critical applications requiring consistent low-latency responses, such as real-time customer service chatbots, live content generation systems, and interactive AI assistants where response speed directly impacts user experience and business outcomes

- Cost-Conscious Startup Operations: Resource-optimized AI deployment for emerging companies seeking to minimize operational expenses while maintaining competitive AI capabilities, enabling sustainable scaling of AI-powered features without prohibitive infrastructure costs

- Rapid AI Prototyping and Experimentation: Accelerated development cycles for AI researchers and product teams requiring seamless access to diverse model capabilities, enabling efficient A/B testing, model comparison, and rapid iteration without managing multiple provider relationships

- Enterprise-Scale AI Workload Management: Large-scale AI deployments requiring sophisticated load balancing and cost optimization across variable demand patterns, ensuring consistent performance during peak usage while optimizing costs during low-demand periods

- Multi-Model AI Pipeline Orchestration: Complex AI systems utilizing multiple specialized models for different tasks, benefiting from intelligent routing that matches specific query types to optimal model capabilities while maintaining overall system efficiency

Pros & Cons

Advantages

MakeHub.ai offers compelling benefits that position it as a transformative solution for AI infrastructure management.

- Transparent Performance Intelligence: Comprehensive visibility into routing decisions and performance metrics, providing users with clear insights into optimization processes and enabling informed decision-making about AI infrastructure investments

- Significant Cost and Performance Benefits: Automated optimization delivering measurable improvements in both operational costs and response times through intelligent provider selection based on real-time performance data and pricing dynamics

- Seamless Integration Experience: OpenAI-compatible API design ensures minimal development overhead and immediate compatibility with existing AI applications, reducing implementation time and technical risk

- Enterprise-Grade Reliability: Sophisticated load balancing and failover capabilities ensuring consistent service availability even when individual providers experience outages or performance degradation
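
The failover behavior mentioned in the last point can be illustrated with a short sketch: try providers in preference order and fall back when one fails. The `ProviderError` type and the stub providers are hypothetical; how MakeHub.ai implements failover internally is not described in this article.

```python
class ProviderError(Exception):
    """Raised when a provider call fails (hypothetical error type)."""


def call_with_failover(providers, prompt):
    """Try each (name, call) pair in order; return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stub providers standing in for real network calls.
def flaky(prompt):
    raise ProviderError("timeout")


def healthy(prompt):
    return f"echo: {prompt}"


served_by, reply = call_with_failover(
    [("provider-a", flaky), ("provider-b", healthy)], "ping"
)
print(served_by, reply)  # prints "provider-b echo: ping"
```

In a routed setup the provider order would presumably come from the current benchmark ranking, so the fallback is the next-best option rather than an arbitrary one.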

Disadvantages

Organizations should carefully consider these factors when evaluating MakeHub.ai for their AI infrastructure needs.

- Reduced Direct Provider Control: Automated routing systems limit granular control over specific provider selection for individual requests, potentially conflicting with requirements for deterministic model behavior or specific provider compliance needs

- Routing Algorithm Dependency: Application performance becomes contingent on the accuracy and efficiency of MakeHub.ai’s routing intelligence, requiring trust in the platform’s decision-making algorithms and continuous optimization processes

How Does It Compare?

MakeHub.ai establishes a distinctive position in the LLM infrastructure landscape through its focus on intelligent, transparent, and performance-driven routing optimization.

- OpenRouter: OpenRouter offers scale and variety, with 400+ models across 60+ providers, 8.4 trillion monthly tokens processed for 2.5+ million users, and transparent pass-through pricing. MakeHub.ai instead emphasizes routing decisions driven by live performance metrics, along with visibility into its benchmarks and optimization choices.

- Together.ai: Together.ai offers advanced model hosting capabilities including cutting-edge models like Llama 4 Maverick, multi-agent workflow support, and sophisticated function calling features. MakeHub.ai’s approach differs by focusing specifically on intelligent aggregation and routing across multiple providers rather than specialized model hosting, providing optimization logic that spans the entire LLM ecosystem rather than concentrating on advanced individual model capabilities.

- Traditional LLM Aggregators: Most existing aggregation platforms provide basic multi-provider access without sophisticated optimization capabilities. MakeHub.ai’s innovation lies in its real-time benchmarking and intelligent routing algorithms that continuously optimize provider selection based on current performance data, transforming LLM access from simple aggregation to intelligent orchestration.

- Direct Provider APIs: While direct provider relationships offer maximum control and potentially lower costs, they require complex multi-provider management, manual optimization, and extensive monitoring infrastructure. MakeHub.ai abstracts this complexity while providing automated optimization that can achieve better overall performance than manual provider management.

Final Thoughts

MakeHub.ai addresses the growing complexity of multi-provider LLM operations through automated routing and transparent optimization. By combining real-time benchmarking with routing algorithms, the platform aims to balance cost efficiency and performance without sacrificing simplicity or reliability. Some organizations will still prefer direct provider control for specific use cases, but MakeHub.ai makes this kind of optimization accessible to developers and teams that prioritize results over managing infrastructure. As providers and pricing models continue to multiply, it is worth evaluating for developers and enterprises that want more efficient, cost-effective, and performant LLM operations.