
Overview

As of October 2025, Metorial (Y Combinator F25) is a serverless platform for deploying Model Context Protocol servers at scale, often described as “Vercel for MCP.” Founded by Karim Rahme and Tobias Herber, the platform addresses a critical infrastructure gap that emerged as AI agents moved from local development to production environments.

The Model Context Protocol, introduced by Anthropic, enables AI language models to securely connect to external data sources, APIs, and tools through standardized interfaces. While MCP works seamlessly in local environments like Cursor or Claude Desktop, deploying MCP servers for production applications presents significant challenges: managing Docker configurations, handling per-user OAuth flows, scaling concurrent sessions, building observability infrastructure, and ensuring security through proper user isolation.

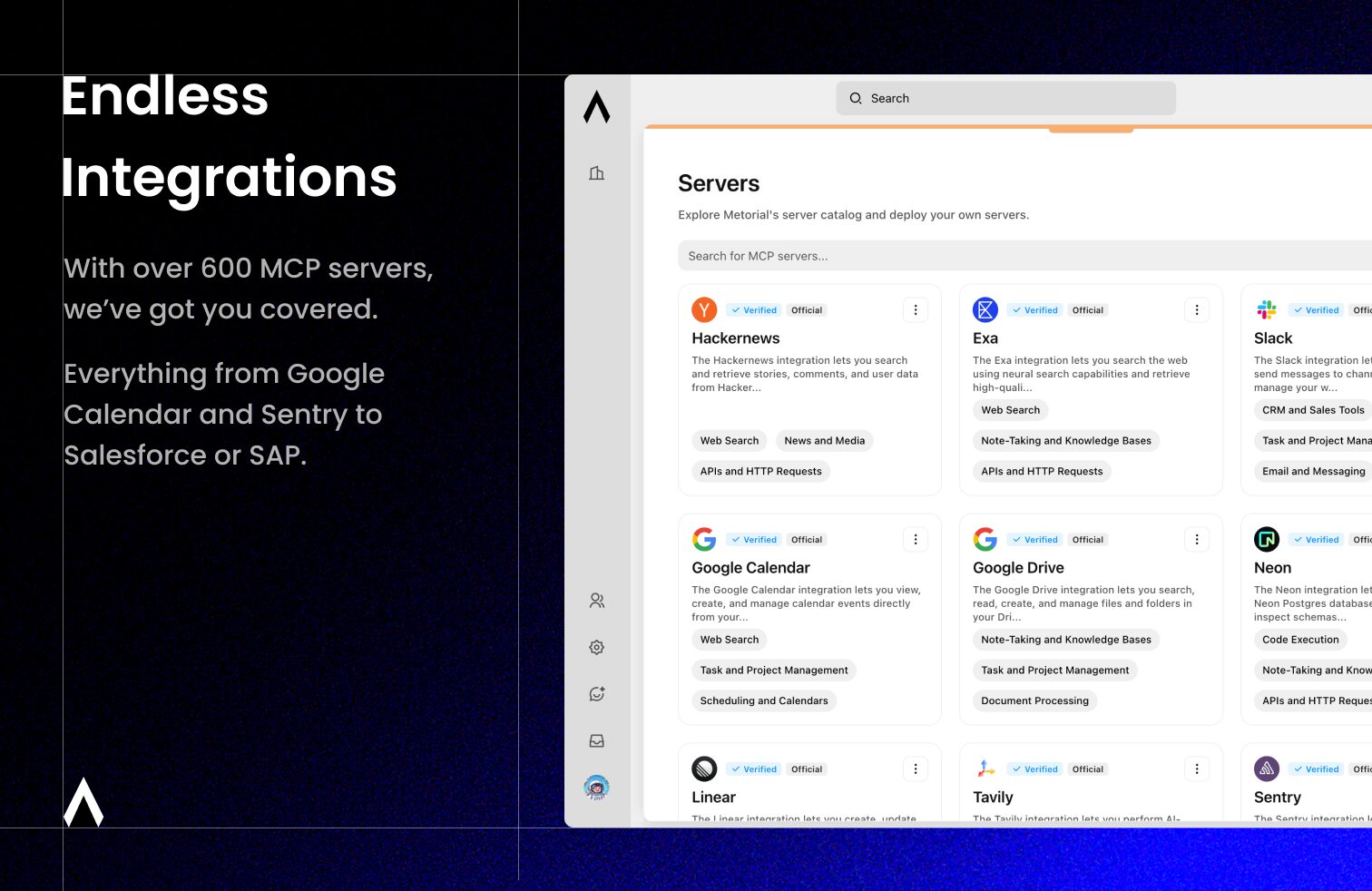

Metorial eliminates these barriers by maintaining an open catalog of over 600 MCP servers spanning GitHub, Slack, Google Drive, Salesforce, databases, and hundreds of other integrations that developers can deploy in three clicks. The platform’s serverless architecture automatically handles OAuth credential management including token refresh, hibernates idle MCP servers to reduce costs, resumes sessions with sub-second cold starts while preserving connection state, and provides per-user isolation ensuring each user’s credentials remain completely separate.

This combination of hibernation support and multi-tenant architecture distinguishes Metorial from alternatives that either run shared servers, which creates security risks, or provision a separate virtual machine per user, which makes scaling slow and expensive. Metorial’s custom MCP engine manages thousands of concurrent connections efficiently, making it practical to deploy agent infrastructure that would otherwise require weeks of custom development.

The platform has gained significant traction with over 3,000 GitHub stars and is currently used by engineers at FAANG companies, Big 4 consulting firms, and American Express. Metorial is piloting with leading startups and Fortune 500 enterprises, demonstrating its viability for both emerging products and established organizations.

Developers integrate Metorial through Python and TypeScript SDKs that abstract MCP protocol complexity into single function calls, while also providing full REST API access for custom implementations. The platform’s open-source core enables self-hosting for organizations requiring complete infrastructure control, though most users leverage the hosted version at metorial.com.

Understanding Metorial requires recognizing the platform’s evolution. In February 2025, the team launched with a different vision: a visual AI workflow builder featuring drag-and-drop “Metorial Flows” for designing agents. By October 2025, they had pivoted completely to focus exclusively on solving the MCP deployment problem, abandoning visual builder features in favor of becoming infrastructure for the entire AI agent ecosystem.

Key Features

Metorial’s current feature set centers entirely on MCP server deployment, monitoring, and management for production AI applications:

Comprehensive MCP Server Catalog: Access over 600 pre-configured MCP servers spanning development tools like GitHub and GitLab, communication platforms including Slack and Microsoft Teams, business applications such as Salesforce and HubSpot, cloud storage services like Google Drive and Dropbox, databases covering PostgreSQL and MongoDB, productivity suites, analytics platforms, and specialized APIs. Every server in the catalog includes detailed documentation, usage examples, and configuration guidance.

Three-Click Deployment: Deploy any MCP server from the catalog through a streamlined three-step process that eliminates traditional infrastructure complexity. Select your desired server, provide OAuth credentials if required (client ID and secret), and activate deployment. Metorial automatically configures the server, establishes security policies, and makes it immediately available through the SDK or API with no manual infrastructure setup required.

Automated OAuth Management: For MCP servers requiring authentication, Metorial handles the entire OAuth flow including generating per-user authorization URLs with a single API call, securely storing credentials with encryption, automatically refreshing tokens before expiration, and managing credential rotation without service interruption. Each user’s credentials remain completely isolated in the multi-tenant architecture, ensuring security even when multiple users access the same MCP server type.
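To make the refresh-before-expiry behavior concrete, here is a minimal Python sketch of a per-user credential store. It is not Metorial’s implementation or API: `CredentialStore`, `Token`, the `refresh_fn` callback, and the 60-second skew window are all hypothetical, and the in-memory dict stands in for the encrypted store the paragraph describes.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Token:
    access_token: str
    refresh_token: str
    expires_at: float  # Unix time at which the access token expires


# Hypothetical provider call: refresh token in, (access, refresh, lifetime_seconds) out.
RefreshFn = Callable[[str], Tuple[str, str, float]]


class CredentialStore:
    """Hypothetical per-user token store that refreshes tokens before they expire.

    Tokens live in a plain dict here; a real store would encrypt them at rest.
    """

    def __init__(self, refresh_fn: RefreshFn, skew_seconds: float = 60.0,
                 clock: Callable[[], float] = time.time):
        self._tokens: Dict[Tuple[str, str], Token] = {}  # (user_id, provider) -> token
        self._refresh_fn = refresh_fn
        self._skew = skew_seconds
        self._clock = clock

    def put(self, user_id: str, provider: str, token: Token) -> None:
        self._tokens[(user_id, provider)] = token

    def access_token(self, user_id: str, provider: str) -> str:
        key = (user_id, provider)
        token = self._tokens[key]
        # Refresh proactively once the token is inside the skew window, so callers
        # never receive a token that is about to lapse mid-request.
        if self._clock() >= token.expires_at - self._skew:
            access, refresh, lifetime = self._refresh_fn(token.refresh_token)
            token = Token(access, refresh, self._clock() + lifetime)
            self._tokens[key] = token
        return token.access_token
```

Because the clock is injectable, the refresh path can be exercised in tests without waiting for a real token to expire.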

Serverless Hibernation Architecture: Metorial’s proprietary hibernation system represents a breakthrough in MCP deployment economics and performance. Idle MCP servers automatically hibernate after periods of inactivity, dramatically reducing compute costs for production deployments. When an agent needs to access a hibernated server, Metorial resumes it with sub-second cold starts while preserving the complete connection state, session variables, and in-progress transactions. This enables cost-efficient scaling where you only pay for active usage while maintaining the responsiveness required for production AI applications.
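The hibernate-and-resume cycle can be illustrated with a small state machine. This is a hypothetical sketch, not Metorial’s engine: `ServerInstance`, `HibernationManager`, the idle timeout, and snapshotting a plain dict of session variables are assumptions chosen to show the shape of the technique. A real system would persist snapshots outside the process and re-establish network connections, not just variables.

```python
import copy
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class ServerInstance:
    """Hypothetical model of one MCP server instance and its session state."""
    name: str
    state: Dict[str, Any] = field(default_factory=dict)  # live, in-memory session state
    snapshot: Optional[Dict[str, Any]] = None  # persisted state while hibernated
    hibernated: bool = False
    last_used: float = 0.0


class HibernationManager:
    """Snapshots idle instances and restores them on the next request."""

    def __init__(self, idle_timeout: float, clock: Callable[[], float] = time.time):
        self.idle_timeout = idle_timeout
        self.clock = clock
        self.instances: Dict[str, ServerInstance] = {}

    def register(self, name: str) -> ServerInstance:
        inst = ServerInstance(name, last_used=self.clock())
        self.instances[name] = inst
        return inst

    def sweep(self) -> List[str]:
        """Hibernate every instance that has been idle for at least idle_timeout seconds."""
        now = self.clock()
        hibernated = []
        for inst in self.instances.values():
            if not inst.hibernated and now - inst.last_used >= self.idle_timeout:
                inst.snapshot = copy.deepcopy(inst.state)  # preserve session state
                inst.state = {}  # release the live resources
                inst.hibernated = True
                hibernated.append(inst.name)
        return hibernated

    def handle(self, name: str, key: str, value: Any) -> Dict[str, Any]:
        """Serve a request that sets a session variable, resuming the instance first if needed."""
        inst = self.instances[name]
        if inst.hibernated:
            inst.state = inst.snapshot or {}  # restore exactly what was saved
            inst.snapshot = None
            inst.hibernated = False
        inst.state[key] = value
        inst.last_used = self.clock()
        return inst.state
```

The key property is that a request arriving after hibernation sees the same session variables it would have seen had the instance never been suspended.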

Per-User Isolation for Multi-Tenant Deployments: Unlike shared MCP servers that create security vulnerabilities or separate VM provisioning that proves expensive and slow, Metorial provides true per-user isolation within its serverless architecture. Each user automatically receives their own isolated MCP server instance configured with their personal OAuth credentials. This architecture enables secure SaaS deployments where thousands of end-users can each interact with MCP servers using their own authentication without any credential leakage or security compromise.

Embedded MCP Explorer: Test and validate MCP servers directly within the Metorial Dashboard before integrating them into production applications. The embedded explorer provides an interactive environment where developers can invoke MCP tools, examine response formats, debug parameter configurations, and verify behavior without writing integration code. This preview capability significantly accelerates development by identifying compatibility issues and clarifying functionality before commitment.

Comprehensive Monitoring and Debugging: Every MCP session is recorded and available for review in the Metorial Dashboard, providing complete visibility into agent-MCP interactions. Developers can replay entire sessions to understand exactly how agents used tools, trace errors to specific MCP calls with full request/response context, analyze performance metrics including latency and success rates, and identify patterns in tool usage across deployments. When errors occur, Metorial automatically detects them and generates detailed reports enabling rapid diagnosis and resolution.
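A recorder that captures each tool call with its parameters, response or error, and latency is enough to support session replay and per-tool success rates. The sketch below is illustrative only: `SessionRecorder` and `ToolCall` are hypothetical names, not part of Metorial’s SDK, and a production pipeline would ship these records to durable storage rather than keep them in a list.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class ToolCall:
    session_id: str
    tool: str
    params: Dict[str, Any]
    response: Any
    error: Optional[str]
    latency_ms: float


class SessionRecorder:
    """Hypothetical recorder: logs every tool invocation for replay and metrics."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self.clock = clock
        self.calls: List[ToolCall] = []

    def invoke(self, session_id: str, tool: str, fn: Callable[..., Any], **params: Any) -> Any:
        start = self.clock()
        try:
            response = fn(**params)
        except Exception as exc:
            self._record(session_id, tool, params, None, f"{type(exc).__name__}: {exc}", start)
            raise  # the caller still sees the original error
        self._record(session_id, tool, params, response, None, start)
        return response

    def _record(self, session_id: str, tool: str, params: Dict[str, Any],
                response: Any, error: Optional[str], start: float) -> None:
        latency_ms = (self.clock() - start) * 1000.0
        self.calls.append(ToolCall(session_id, tool, params, response, error, latency_ms))

    def replay(self, session_id: str) -> List[ToolCall]:
        """Return one session's calls in the order they happened."""
        return [c for c in self.calls if c.session_id == session_id]

    def success_rate(self, tool: str) -> float:
        """Share of calls to `tool` that completed without an error (0.0 if none yet)."""
        calls = [c for c in self.calls if c.tool == tool]
        if not calls:
            return 0.0
        return sum(c.error is None for c in calls) / len(calls)
```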

Powerful Developer SDKs: Connect AI agents to MCP tools through TypeScript and Python SDKs that abstract protocol complexity into clean, intuitive interfaces. A typical integration requires just a single function call to establish MCP connections and make all tools available to your agent framework. SDKs support all major agent frameworks, including LangChain, LangGraph, AutoGen, and CrewAI, providing flexibility regardless of your development stack. For advanced use cases, complete REST API access enables custom implementations and programmatic management of the entire platform.

Open-Source and Self-Hostable: The core Metorial platform is open-source on GitHub at github.com/metorial/metorial, enabling complete transparency into how MCP servers are managed, deployed, and monitored. Organizations can fork the repository, customize behavior for specific requirements, and deploy the entire stack on their own infrastructure for complete control. This self-hosting capability addresses compliance requirements while enabling contribution back to the community ecosystem.

Multi-Instance Project Management: Create multiple isolated instances of your Metorial projects to test different configurations, separate staging from production environments, or maintain distinct versions for different customer segments. Each instance maintains independent MCP server deployments, user credential stores, and monitoring data, providing clean separation for complex deployment scenarios.

Server Customization and Forking: Beyond using pre-built MCP servers, developers can fork existing servers from the catalog and modify them for specialized requirements. Custom MCP servers integrate seamlessly into the Metorial ecosystem with the same deployment, OAuth management, hibernation, and monitoring capabilities available for official servers.

How It Works

Metorial’s operational architecture bridges local MCP development and production-scale deployment through a sophisticated serverless platform that handles infrastructure complexity automatically.

Developers begin by exploring Metorial’s catalog of 600+ MCP servers through the web dashboard or API. Each server entry provides comprehensive documentation including available tools and their parameters, authentication requirements and OAuth configuration guidance, usage examples demonstrating common interaction patterns, and performance characteristics like typical response times. This discovery process helps developers identify which servers provide the capabilities their agents require.

Once a suitable MCP server is identified, deployment proceeds through Metorial’s three-click process. For servers requiring OAuth, developers provide their application’s client ID and secret obtained from the service provider. Metorial stores these credentials securely and uses them to generate per-user authorization URLs. When an end-user of your application first requires access to the MCP server, Metorial redirects them to the provider’s OAuth consent screen, captures the granted tokens, and stores them in an encrypted, user-specific credential store. Subsequent access uses the stored credentials automatically, with Metorial handling token refresh transparently.
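The per-user authorization URL follows the standard OAuth 2.0 authorization-code request defined in RFC 6749 (section 4.1.1). The sketch below shows one common way to build it; the endpoint URLs are placeholders, and `authorization_url`, `user_for_callback`, and the in-memory `PENDING_STATES` map are hypothetical helpers, not Metorial functions. The random `state` parameter ties the provider’s redirect back to the user who started the flow.

```python
import secrets
from typing import Dict, List
from urllib.parse import urlencode

# Hypothetical in-memory map from OAuth "state" values to the user who started the flow.
PENDING_STATES: Dict[str, str] = {}


def authorization_url(authorize_endpoint: str, client_id: str, redirect_uri: str,
                      scopes: List[str], user_id: str) -> str:
    """Build an OAuth 2.0 authorization-code request URL (RFC 6749, section 4.1.1)."""
    state = secrets.token_urlsafe(32)  # unguessable value binding the callback to this user
    PENDING_STATES[state] = user_id
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": state,
    })
    return f"{authorize_endpoint}?{query}"


def user_for_callback(state: str) -> str:
    """On the redirect back, look up (and consume) the user who owns this state value."""
    return PENDING_STATES.pop(state)
```

After the provider redirects back with `code` and `state`, the server exchanges the code for tokens and files them under the returned user. Many providers also expect extensions such as PKCE (RFC 7636), which this sketch omits.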

With MCP servers deployed and OAuth configured, developers integrate Metorial into their AI applications through the Python or TypeScript SDK. The integration pattern is remarkably simple, typically requiring just a few lines of code. For LangChain applications, developers wrap their agent initialization with Metorial’s session context, specifying which MCP server deployments should be available. The SDK automatically fetches available tools from those servers and makes them accessible to the agent. From the agent’s perspective, these tools appear no different from locally defined functions, but they’re actually executing against production MCP servers managed by Metorial’s infrastructure.
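The point that remote tools “appear no different from locally defined functions” can be illustrated by wrapping each tool a server advertises in an ordinary Python function. This is a hypothetical sketch: `ToolTransport`, `as_local_tools`, and `FakeTransport` are invented for illustration, though the two operations loosely mirror MCP’s `tools/list` and `tools/call` requests. It does not show Metorial’s actual SDK calls.

```python
from typing import Any, Callable, Dict, List, Protocol


class ToolTransport(Protocol):
    """Hypothetical connection to a hosted MCP server (loosely mirrors tools/list and tools/call)."""

    def list_tools(self) -> List[Dict[str, Any]]: ...

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any: ...


def _make_tool(transport: ToolTransport, name: str, description: str) -> Callable[..., Any]:
    def tool(**arguments: Any) -> Any:
        # Each call is forwarded to the remote server; the agent only sees a function.
        return transport.call_tool(name, arguments)

    tool.__name__ = name
    tool.__doc__ = description
    return tool


def as_local_tools(transport: ToolTransport) -> Dict[str, Callable[..., Any]]:
    """Wrap every advertised tool in a plain callable an agent framework can register."""
    return {
        spec["name"]: _make_tool(transport, spec["name"], spec.get("description", ""))
        for spec in transport.list_tools()
    }


class FakeTransport:
    """Stand-in transport for this example; returns canned data instead of calling a server."""

    def list_tools(self) -> List[Dict[str, Any]]:
        return [
            {"name": "search_issues", "description": "Search issues in a repository."},
            {"name": "create_issue", "description": "Open a new issue."},
        ]

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        return {"tool": name, "arguments": arguments}


tools = as_local_tools(FakeTransport())
print(tools["search_issues"](query="hibernation"))
# {'tool': 'search_issues', 'arguments': {'query': 'hibernation'}}
```

Building each wrapper in a separate factory function avoids Python’s late-binding pitfall, where closures created in a loop would all capture the last tool name.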

When an agent invokes an MCP tool, the request flows through Metorial’s infrastructure. If the target MCP server is currently active, the request routes directly with minimal latency. If the server is hibernated due to inactivity, Metorial’s custom MCP engine resumes it in under a second, restores the complete connection state, and forwards the request without the agent experiencing noticeable delay. This hibernation mechanism enables cost-efficient deployment where dozens or hundreds of MCP servers remain available but only consume compute resources when actively processing requests.

The multi-tenant isolation architecture ensures security for SaaS applications serving many end-users. When User A’s agent invokes a Google Drive MCP tool, it executes against an MCP server instance configured exclusively with User A’s Google Drive credentials. User B’s identical request uses a completely separate MCP server instance with User B’s credentials. This isolation happens automatically within Metorial’s serverless runtime without requiring developers to manage separate server processes or implement credential routing logic.
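The isolation rule described here amounts to keying every instance by the pair (user, server type) and never falling back to another user’s credentials. A minimal Python sketch under that assumption follows; `InstanceRegistry` and `IsolatedInstance` are hypothetical, and a real runtime would back each entry with a sandboxed process rather than a dataclass.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class IsolatedInstance:
    """Hypothetical stand-in for one sandboxed MCP server process."""
    server_type: str
    user_id: str
    credential: str  # this user's token only; never shared across users


class InstanceRegistry:
    """Routes each (user, server type) pair to its own dedicated instance."""

    def __init__(self, credentials: Dict[Tuple[str, str], str]):
        self._credentials = credentials  # (user_id, server_type) -> token
        self._instances: Dict[Tuple[str, str], IsolatedInstance] = {}

    def instance_for(self, user_id: str, server_type: str) -> IsolatedInstance:
        key = (user_id, server_type)
        if key not in self._instances:
            # Raises KeyError if this user never authorized the server, rather than
            # silently falling back to someone else's credentials.
            token = self._credentials[key]
            self._instances[key] = IsolatedInstance(server_type, user_id, token)
        return self._instances[key]
```

Failing loudly on a missing credential is the important design choice: the only way for User A’s request to reach User B’s token would be a bug in the key, not a fallback path.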

Throughout execution, Metorial records comprehensive telemetry including every MCP tool invocation with parameters and responses, OAuth token usage and refresh events, errors with full stack traces and context, and performance metrics across latency, success rates, and resource utilization. This data populates the Metorial Dashboard where developers can replay sessions, debug issues, analyze usage patterns, and optimize agent behavior.

For organizations requiring self-hosting, the open-source Metorial platform deploys on standard cloud infrastructure. The architecture comprises a control plane managing MCP server lifecycle and user credentials, a serverless runtime executing MCP server instances with hibernation support, a monitoring pipeline capturing telemetry and providing dashboards, and the SDK/API layer enabling agent integration. Teams can run this entire stack within their own VPC or data center, maintaining complete control while benefiting from Metorial’s architecture.

Use Cases

Metorial enables practical AI agent deployments across scenarios where connecting agents to real-world tools and data proves essential:

Rapid Multi-Platform Integration: Development teams building AI agents that need to interact with GitHub for code repository operations, Slack for team communication, Google Drive for document access, and Salesforce for CRM data can deploy all four MCP servers in minutes rather than spending weeks implementing custom API integrations for each platform. The three-click deployment and single-function-call SDK integration dramatically accelerates time-to-market for agent applications.

Secure Multi-Tenant SaaS Applications: Companies building SaaS products where each customer needs their AI agent to access the customer’s own third-party accounts face complex security challenges. Metorial’s per-user isolation architecture enables one deployment where thousands of customers each use their personal OAuth credentials without any risk of credential leakage. Customer A’s agent accessing their Gmail never sees Customer B’s credentials, even though both use the same MCP server type managed by the same Metorial deployment.

Enterprise Agent Deployments: Large organizations piloting AI agents across departments need infrastructure that handles scale, security, and compliance requirements. Engineers at FAANG companies and Big 4 consulting firms already use Metorial, and Fortune 500 enterprises are piloting it, for agents that connect to internal databases, corporate Slack workspaces, document repositories, and business applications. The self-hosting option enables deployment within corporate networks, while the monitoring capabilities provide audit trails for compliance.

Agent Framework Integration: Developers using popular agent frameworks like LangChain, LangGraph, AutoGen, or CrewAI can enhance their agents with 600+ external tools without implementing custom integrations. Metorial’s SDKs provide native compatibility with these frameworks, enabling seamless tool availability. An agent built with LangGraph can invoke Salesforce APIs, PostgreSQL databases, and Notion workspaces through Metorial MCP servers with the same ease as using LangGraph’s built-in tools.

Custom Integration Development: Beyond using pre-built MCP servers, development teams can fork existing servers from Metorial’s catalog and customize them for proprietary APIs or specialized workflows. These custom MCP servers deploy through the same Metorial infrastructure, inheriting OAuth management, hibernation, monitoring, and multi-tenant isolation capabilities. This enables organizations to extend Metorial’s catalog with internal tools while maintaining consistent operational patterns.

Cost-Optimized Production Deployment: Traditional always-on server deployments for AI agent infrastructure incur continuous costs even during idle periods. Metorial’s hibernation architecture enables production deployments where dozens of MCP servers remain available but only consume compute resources when actively processing agent requests. For applications with variable usage patterns, this can reduce infrastructure costs by 70-90% compared to always-on alternatives.
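Whether hibernation reaches savings in that range depends mostly on how much of the day a server is actually busy. The function below is a back-of-the-envelope model, assuming compute is billed only while a server runs, each resume costs a fixed amount of compute, and snapshot storage is free. None of these assumptions comes from Metorial’s pricing, which is not public.

```python
def hibernation_savings(active_hours: float, total_hours: float,
                        resumes: int, resume_seconds: float) -> float:
    """Fraction of compute cost saved versus an always-on server.

    Simplified, hypothetical model: compute is billed per hour only while the
    server runs, each resume costs `resume_seconds` of extra compute, and
    storing hibernation snapshots is free.
    """
    if not 0 <= active_hours <= total_hours:
        raise ValueError("active_hours must be between 0 and total_hours")
    billed_hours = active_hours + resumes * resume_seconds / 3600.0
    return 1.0 - min(billed_hours, total_hours) / total_hours


print(round(hibernation_savings(2, 24, 50, 0.5), 3))  # server busy 2 of 24 hours
print(round(hibernation_savings(6, 24, 50, 0.5), 3))  # server busy 6 of 24 hours
```

Under these assumptions, a server busy 2 of 24 hours with 50 half-second resumes saves about 92%, while one busy 6 hours saves about 75%; steady, round-the-clock usage erodes the benefit toward zero.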

Cross-Cloud and Hybrid Integration: Organizations with workloads spanning multiple clouds and on-premise data centers need agents that can seamlessly access resources regardless of location. Metorial MCP servers can connect to AWS databases, Google Cloud storage, Azure services, and on-premise APIs, providing a unified tool interface for agents regardless of underlying infrastructure heterogeneity.

Pros & Cons

Advantages

Solves Critical Production Deployment Gap: Metorial addresses the fundamental challenge preventing MCP adoption at scale. While MCP works elegantly in local development, production deployment historically required managing Docker configurations, implementing OAuth flows, building observability infrastructure, and solving multi-tenant isolation. Metorial eliminates these barriers, enabling teams to deploy production-ready MCP infrastructure in minutes rather than weeks.

Unique Hibernation Architecture: Metorial positions itself as the only platform offering true MCP server hibernation with sub-second cold starts. This design enables cost-efficient production deployments where dozens or hundreds of MCP servers remain available but only consume resources when actively used. In Metorial’s framing, competitors either run always-on shared servers with security issues or provision separate VMs per user with prohibitive costs and slow scaling.

Genuine Per-User Isolation: The multi-tenant architecture provides real security for SaaS deployments. Each user gets their own isolated MCP server instance with their personal OAuth credentials, preventing any credential leakage or unauthorized access. This level of isolation typically requires complex custom infrastructure, but Metorial provides it automatically within the serverless runtime.

Comprehensive 600+ Server Catalog: The breadth of pre-configured MCP servers spanning development tools, communication platforms, business applications, databases, and specialized APIs means most common integration needs are immediately addressable. Teams avoid weeks of custom API integration work and can instead deploy battle-tested servers maintained by the community and Metorial team.

Open-Source Foundation with Commercial Support: The open-source core enables complete transparency, self-hosting for compliance requirements, and community contribution while the commercial hosted platform provides managed infrastructure, enterprise support, and continued development. This hybrid model appeals to both resource-constrained startups wanting flexibility and large enterprises requiring support guarantees.

Framework-Agnostic Integration: Python and TypeScript SDKs with native support for LangChain, LangGraph, AutoGen, and CrewAI enable integration regardless of agent framework choice. Developers aren’t locked into a specific framework stack and can switch or mix frameworks while maintaining consistent MCP access through Metorial’s infrastructure.

Strong Traction and Validation: Over 3,000 GitHub stars, usage by engineers at FAANG companies and Big 4 firms, active pilots with Fortune 500 enterprises, and Y Combinator backing provide validation that Metorial addresses real market needs. This traction suggests the platform will receive continued investment and development.

Disadvantages

Early-Stage Platform Maturity: Launched in its current MCP-focused form only in October 2025, Metorial represents a young platform still building production-grade reliability, comprehensive documentation, and enterprise features. Early adopters may encounter rough edges, incomplete features, or evolving interfaces as the platform matures.

Fundamental Product Pivot Risk: Metorial’s complete pivot from visual workflow builder (February 2025) to MCP deployment platform (October 2025) demonstrates willingness to make dramatic changes. While pivoting toward a clearer value proposition is often positive, it raises questions about long-term strategic consistency and whether future pivots might disrupt existing users.

MCP Adoption Dependency: Metorial’s value is entirely tied to Model Context Protocol adoption as the standard for agent-tool integration. If MCP fails to achieve widespread adoption, alternative protocols emerge as preferred standards, or major LLM providers implement proprietary integration mechanisms, Metorial’s entire value proposition weakens significantly.

Limited Pricing Transparency: Detailed pricing information for the hosted platform is not publicly available, making it difficult for teams to evaluate total cost of ownership before investing in integration. While the open-source version is free for self-hosting, understanding hosted platform economics is important for budget planning.

Competitive Pressure from Framework Vendors: Major agent framework providers like LangChain, which recently raised $125M at a $1.25B valuation and offers deployment through its LangSmith platform, could integrate MCP deployment capabilities directly into their offerings. Anthropic could expand Claude’s MCP support into a managed service. These competitive threats could commoditize Metorial’s infrastructure layer.

Monitoring Limited to Metorial-Hosted Servers: The comprehensive session recording and debugging capabilities only function for MCP servers hosted on Metorial’s platform. Organizations needing to monitor interactions with self-hosted or third-party MCP servers must implement separate observability infrastructure, limiting the unified visibility advantage.

OAuth Configuration Complexity for Custom Servers: While Metorial handles OAuth flow execution automatically, developers still must obtain client IDs and secrets from each service provider, configure redirect URLs correctly, and understand provider-specific OAuth requirements. This upfront configuration remains a barrier, though significantly less painful than implementing complete OAuth flows manually.

How Does It Compare?

Metorial occupies a unique infrastructure position in the AI agent ecosystem, competing less with agent frameworks and more with manual integration approaches and alternative deployment patterns:

LangChain + LangSmith Platform recently announced a $125 million Series B at a $1.25 billion valuation on October 21, 2025, transforming from an open-source framework into a comprehensive platform for agent engineering. LangChain provides the foundational framework for building agents, LangGraph enables low-level orchestration with durable execution, and LangSmith offers observability, evaluation, deployment infrastructure, and a no-code Agent Builder currently in private preview. This represents a complete lifecycle platform from development through production.

Metorial and LangChain are complementary rather than competitive. Developers build agents using LangChain/LangGraph frameworks, then connect those agents to Metorial’s MCP servers for access to 600+ external tools. LangSmith’s deployment infrastructure focuses on hosting the agent logic itself, while Metorial provides the external tool integration layer. A typical architecture combines LangChain for agent orchestration, Metorial for MCP server access, and LangSmith for monitoring and evaluation.

Dust.tt positions itself as an internal AI agent platform for knowledge work, summaries, and collaborative workflows. Popular with async teams for internal enablement, Dust integrates with Notion, Slack, and documentation tools to power use cases like RFP drafting, customer support triage, and sales Q&A assistants. However, Dust focuses on internal-facing agents rather than GTM execution, lacks native CRM integration or outbound messaging capabilities, and requires humans in the loop to approve agent actions.

Compared to Metorial, Dust operates at a different layer. Dust provides a complete agent application platform for internal workflows, while Metorial provides infrastructure for external tool integration regardless of agent application. Teams could theoretically build agents on Dust that connect to external tools through Metorial MCP servers, though Dust’s collaborative, human-in-the-loop model differs philosophically from Metorial’s automation focus.

Manual MCP Server Implementation represents the alternative Metorial most directly replaces. Teams building production AI agents could implement their own MCP servers, manage Docker configurations, build OAuth infrastructure, implement user isolation, create monitoring pipelines, and handle scaling challenges. This approach offers maximum customization but typically requires 4-8 weeks of engineering time per integration plus ongoing maintenance.

Metorial’s three-click deployment and automatic infrastructure management eliminate this development burden entirely, trading customization flexibility for dramatic time savings. For teams needing standard integrations to popular platforms, Metorial’s pre-built servers prove vastly more efficient. For teams requiring highly specialized integration logic or unusual authentication patterns, custom implementation may remain necessary.

Claude Desktop and Cursor IDE represent local MCP environments where developers can configure MCP servers for personal use during development. These tools excel at local prototyping and individual productivity but offer no path to production deployment, multi-user support, or programmatic control. Developers using Claude Desktop for experiments must still solve production deployment challenges when building applications for end-users.

Metorial bridges this gap explicitly. Developers can prototype against MCP servers locally using Claude Desktop, then deploy those same servers to Metorial for production use with automatic OAuth, hibernation, monitoring, and multi-tenant isolation. This enables a smooth development-to-production workflow where MCP servers work consistently across environments.

Alternative Serverless Platforms like Vercel, AWS Lambda, or Google Cloud Functions provide general-purpose serverless infrastructure but lack MCP-specific capabilities. Deploying MCP servers on generic serverless platforms requires implementing hibernation logic, managing long-lived stateful connections, building OAuth infrastructure, and creating user isolation, effectively recreating what Metorial provides natively.

Metorial’s MCP-native design means these capabilities work automatically without custom development. The hibernation architecture specifically optimizes for MCP server lifecycle patterns, achieving sub-second cold starts that generic platforms struggle to match for long-lived, stateful connections.

Metorial distinguishes itself through its unique combination of MCP-specific hibernation architecture enabling cost-efficient deployment, per-user isolation solving multi-tenant security challenges, comprehensive 600+ server catalog eliminating custom integration work, open-source foundation with self-hosting options, and framework-agnostic SDKs enabling broad adoption. Its primary limitations stem from early-stage platform maturity, dependency on MCP protocol adoption, and potential competitive pressure from larger framework vendors.

The platform serves teams best when they need production MCP deployment without building infrastructure, multi-tenant SaaS applications requiring per-user isolation, integration with popular platforms covered by the 600+ server catalog, and agent framework flexibility without lock-in. It’s less suitable for teams requiring highly specialized integration logic not covered by existing servers, organizations with strict data sovereignty requiring exclusively self-hosted solutions with no cloud dependency, or projects with extreme cost sensitivity where self-managed infrastructure proves more economical at scale.

Final Thoughts

Metorial’s evolution from visual workflow builder to MCP deployment infrastructure represents a strategic pivot toward solving a genuine ecosystem pain point. The February 2025 vision of drag-and-drop agent creation proved less compelling than October 2025’s focus on production MCP server deployment, reflecting market feedback and founder learning typical of early-stage startups.

The current positioning as “Vercel for MCP” resonates because it addresses an authentic problem. MCP’s elegance in local development environments like Claude Desktop and Cursor contrasts sharply with deployment complexity in production applications. Teams building AI agents that need to interact with external tools face weeks of integration infrastructure work that Metorial eliminates through three-click deployment, automatic OAuth management, and serverless hibernation architecture.

The technical innovation of true hibernation with sub-second cold starts while preserving connection state represents genuine advancement beyond generic serverless platforms. This enables cost-efficient production deployments where dozens of MCP servers remain available but only consume resources during active use, solving economics that make always-on or VM-per-user approaches prohibitively expensive at scale.

However, realistic assessment requires acknowledging dependencies and risks. Metorial’s entire value proposition relies on MCP achieving broad adoption as the standard protocol for agent-tool integration. If alternative approaches emerge or major LLM providers implement proprietary integration mechanisms, Metorial’s infrastructure layer could become less relevant. The platform’s youth means early adopters will encounter the evolving interfaces and incomplete features typical of a product only months past launch.

The competitive landscape presents both opportunities and threats. Integration with major frameworks like LangChain (which recently raised $125M at a $1.25B valuation) positions Metorial as complementary infrastructure, but it also creates dependency on framework roadmaps and the risk that framework vendors could integrate similar capabilities directly. Strong early traction (3,000+ GitHub stars, usage by engineers at FAANG companies, and pilots with Fortune 500 enterprises) validates market need but doesn’t guarantee sustainable differentiation as the space matures.

For development teams evaluating Metorial, the decision hinges on deployment timeline urgency, integration breadth requirements, multi-tenant security needs, and infrastructure preference. Teams racing to ship agent applications who need connections to popular platforms benefit dramatically from Metorial’s pre-built servers and automatic infrastructure. Organizations with unique integration requirements or strict data sovereignty mandates may find self-hosting or custom implementation more appropriate despite higher development costs.

The platform’s open-source foundation provides valuable optionality. Teams can start with the hosted platform for rapid deployment, then migrate to self-hosted infrastructure as requirements evolve or scale economics shift. This hybrid model addresses concerns about vendor lock-in while enabling commercial support for teams preferring managed services.

Looking ahead, Metorial’s trajectory depends on sustaining MCP ecosystem growth, maintaining technical leadership in hibernation and isolation architecture, expanding the server catalog to cover long-tail integration needs, building enterprise features for compliance and governance, and navigating competitive pressure from both framework vendors and potential Anthropic MCP services. The Y Combinator backing and Fortune 500 pilots suggest resources and validation to pursue this roadmap, but execution challenges remain significant.

For the current moment, Metorial offers one of the most complete dedicated infrastructure options for production MCP deployment, filling a gap that teams would otherwise address through weeks of custom development. Whether this infrastructure layer becomes essential ecosystem plumbing or gets commoditized by larger platforms remains an open question. Early adopters benefit from immediate deployment capabilities while accepting early-stage platform risks.

Teams building AI agents that need external tool integration should evaluate Metorial alongside alternatives: implementing MCP servers manually, using framework-specific deployment features, or waiting for additional MCP infrastructure providers. For many scenarios, Metorial’s combination of deployment speed, security architecture, and catalog breadth currently offers the strongest option available. Whether that advantage persists as the ecosystem matures will define Metorial’s long-term success.