Overview

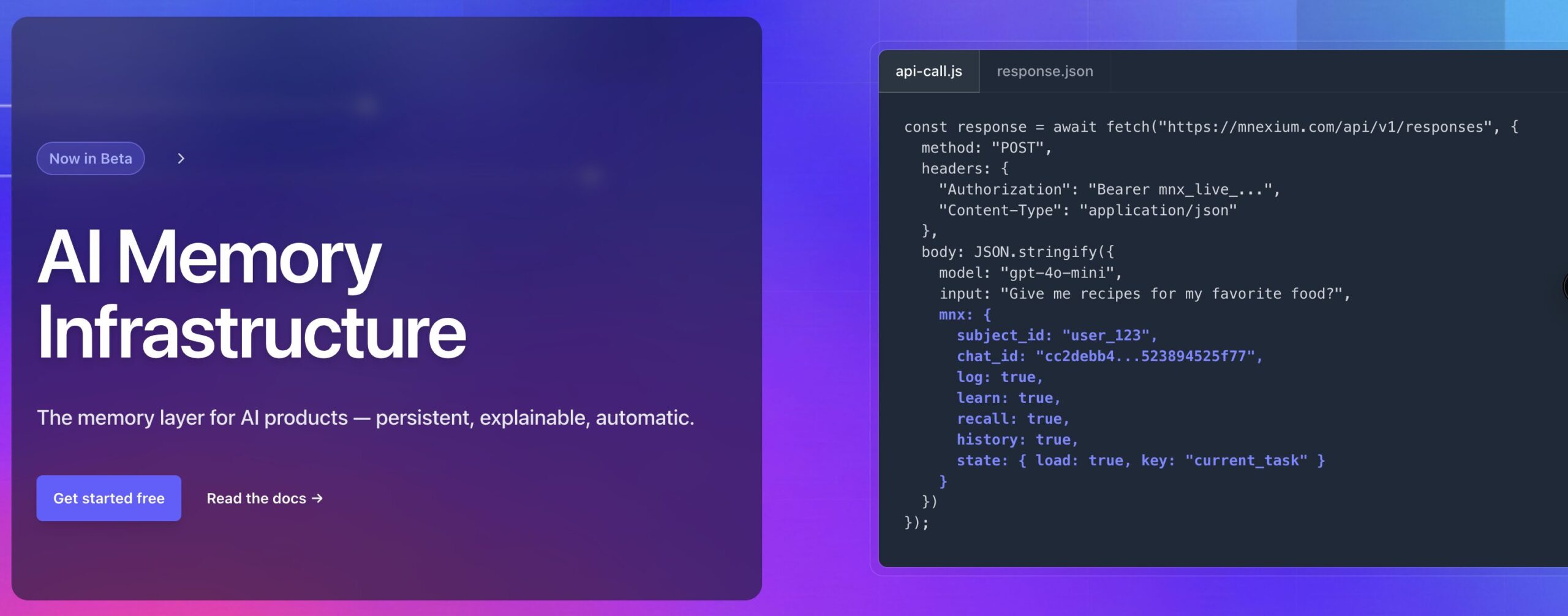

Mnexium AI provides a specialized infrastructure layer that gives Large Language Models (LLMs) persistent, long-term memory. Unlike traditional session-based chatbots that reset context after every interaction, Mnexium enables AI agents to recognize users, remember preferences, and track complex task progress across multiple sessions. By integrating directly into your existing API workflow, it removes the heavy lifting of managing vector databases, building embedding pipelines, or writing custom retrieval-augmented generation (RAG) logic.

Key Features

- Universal Memory Proxy: Seamlessly integrates with major providers including OpenAI, Anthropic Claude, and Google Gemini, allowing developers to switch models without losing stored user context or chat history.

- Unified mnx Object: Offers a zero-SDK integration where developers simply add a JSON object to their existing API calls to control logging, learning, and recall behaviors at the request level.

- Agentic State Management: Specifically designed for long-running workflows, the platform tracks short-term task progress and pending actions to ensure agents don’t lose their place during multi-step processes.

- Semantic Recall and Scoring: Automatically extracts structured facts from conversations, assigns importance scores, and injects the most relevant historical context back into the prompt for smarter responses.

- Full Audit Observability: Provides a transparent “Strategy Studio” where every memory creation, recall event, and system prompt injection is logged, making the agent’s behavior fully explainable and debuggable.
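
To make the “Unified mnx Object” idea concrete, here is a minimal sketch of what such a request body might look like, assuming an OpenAI-style chat payload. The keys inside `mnx` (`subject_id`, `log`, `learn`, `recall`, `history`) and the helper `build_chat_request` are hypothetical placeholders chosen to mirror the behaviors described above. They are not Mnexium’s documented schema, and the proxy endpoint is deliberately left out.

```python
import json
from typing import Any


def build_chat_request(
    user_message: str,
    subject_id: str,
    model: str = "gpt-4o-mini",
    *,
    log: bool = True,
    learn: bool = True,
    recall: bool = True,
    history: bool = True,
) -> dict[str, Any]:
    """Return an OpenAI-style chat payload carrying a per-request `mnx` object.

    All keys inside `mnx` are hypothetical placeholders, not a documented schema.
    """
    return {
        # Ordinary chat-completions fields, unchanged from a direct provider call.
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        # Hypothetical memory controls, evaluated per request by the proxy.
        "mnx": {
            "subject_id": subject_id,  # whose memories to read and write
            "log": log,                # keep this exchange in the audit log
            "learn": learn,            # extract new facts from the reply
            "recall": recall,          # inject relevant long-term memories
            "history": history,        # include earlier messages for this subject
        },
    }


if __name__ == "__main__":
    # Example: answer with memory but do not learn from this particular exchange.
    payload = build_chat_request("Where did we leave my order?", "user_123", learn=False)
    print(json.dumps(payload, indent=2))
```

Because the controls travel with each request, one call can switch learning off while the next switches it back on, with no client-side SDK involved.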

How It Works

Mnexium functions as a middleware layer that intercepts requests to your LLM provider. When you send an API call with the mnx configuration, the system automatically performs several background tasks. It retrieves relevant historical messages if history is enabled and uses semantic search to find long-term memories associated with the specific user or subject. These data points are then injected into the prompt before being sent to the LLM. Once the model responds, Mnexium analyzes the output to “learn” new facts, automatically updating the user profile and handling conflicting information by marking old records as superseded.
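
The recall-and-inject step can be sketched in a few lines of Python. This is an illustrative, in-process approximation, not Mnexium’s implementation: a toy bag-of-words cosine similarity stands in for real embeddings, and ranking blends that similarity with each memory’s importance score using an arbitrary weight of 0.7. `Memory`, `embed`, `recall`, and `build_messages` are hypothetical names.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Memory:
    subject_id: str
    text: str
    importance: float  # 0.0-1.0, assumed to be scored when the fact was learned
    superseded: bool = False


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; stands in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recall(memories: list[Memory], subject_id: str, query: str, k: int = 2, weight: float = 0.7) -> list[Memory]:
    """Return the top-k active (non-superseded) memories for one subject.

    Score = weight * similarity + (1 - weight) * importance (an assumed blend).
    """
    q = embed(query)
    active = [m for m in memories if m.subject_id == subject_id and not m.superseded]
    ranked = sorted(
        active,
        key=lambda m: weight * cosine(q, embed(m.text)) + (1 - weight) * m.importance,
        reverse=True,
    )
    return ranked[:k]


def build_messages(history: list[dict], recalled: list[Memory], user_message: str) -> list[dict]:
    """Prepend recalled facts as a system message, then prior turns, then the new message."""
    messages = []
    if recalled:
        facts = "\n".join(f"- {m.text}" for m in recalled)
        messages.append({"role": "system", "content": f"Known facts about this user:\n{facts}"})
    return messages + history + [{"role": "user", "content": user_message}]


store = [
    Memory("u1", "Prefers vegetarian restaurants", importance=0.9),
    Memory("u1", "Lives in Berlin", importance=0.6),
    Memory("u1", "Asked about Python decorators once", importance=0.2),
    Memory("u2", "Prefers steakhouses", importance=0.9),
]
top = recall(store, "u1", "Suggest restaurants for dinner")
print(build_messages([], top, "Suggest restaurants for dinner"))
```

Here the vegetarian preference wins on both relevance and importance, the Berlin fact takes the second slot on importance alone, and the other user’s memory is never considered because recall is scoped to `subject_id`.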

Use Cases

- Hyper-Personalized Customer Support: Create bots that remember a customer’s specific technical setup, previous issues, and preferences over months of interaction to provide a tailored service experience.

- Continuous Sales Agents: Enable AI sales representatives to resume complex negotiations where they left off, remembering specific deal terms and stakeholder concerns across interrupted sessions.

- Complex Multi-Step Planning: Power travel or project management agents that can track progress through hundreds of sub-tasks without resetting or forgetting previous decisions during the workflow.

- Auditable Enterprise Knowledge: Implement compliant AI systems that maintain a clear audit trail of why specific memories were used and how the agent’s internal state evolved over time.

Pros & Cons

Advantages

- Immediate Integration: The proxy-based approach means you can add persistent memory to a production app in minutes without rewriting core logic or installing new libraries.

- Provider Independence: Because the memory layer sits outside any single LLM provider, you can migrate from one provider to another while keeping all user data intact.

- Automated Data Maintenance: Built-in deduplication and versioning ensure that your memory bank stays clean and relevant without manual intervention.
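
Deduplication and versioning, including the “superseded” marking described under How It Works, can be illustrated with a small append-only store. The sketch assumes facts have already been extracted into attribute/value pairs (for example `home_city = Berlin`); `Fact`, `FactStore`, and its methods are hypothetical and show only one plausible way to keep an audit trail while recall sees just the current value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fact:
    subject_id: str
    attribute: str  # e.g. "home_city"; assumes extraction yields structured keys
    value: str
    version: int = 1
    superseded_by: Optional[int] = None  # version number that replaced this fact


class FactStore:
    """Append-only store: conflicting values are retired, never deleted."""

    def __init__(self) -> None:
        self._facts: list[Fact] = []

    def current(self, subject_id: str, attribute: str) -> Optional[Fact]:
        """Return the live (non-superseded) fact for this subject/attribute, if any."""
        for fact in reversed(self._facts):
            if fact.subject_id == subject_id and fact.attribute == attribute and fact.superseded_by is None:
                return fact
        return None

    def learn(self, subject_id: str, attribute: str, value: str) -> Fact:
        existing = self.current(subject_id, attribute)
        # Deduplicate: restating the current value (ignoring case/whitespace) adds nothing.
        if existing and existing.value.strip().lower() == value.strip().lower():
            return existing
        version = existing.version + 1 if existing else 1
        if existing:
            # Conflict: keep the old record for the audit trail but mark it superseded.
            existing.superseded_by = version
        new_fact = Fact(subject_id, attribute, value, version=version)
        self._facts.append(new_fact)
        return new_fact

    def history(self, subject_id: str, attribute: str) -> list[Fact]:
        """Every version ever stored for this subject/attribute, oldest first."""
        return [f for f in self._facts if f.subject_id == subject_id and f.attribute == attribute]


store = FactStore()
store.learn("u1", "home_city", "Berlin")
store.learn("u1", "home_city", "berlin")  # duplicate: ignored
store.learn("u1", "home_city", "Munich")  # conflict: Berlin is superseded
print([(f.value, f.version, f.superseded_by) for f in store.history("u1", "home_city")])
# [('Berlin', 1, 2), ('Munich', 2, None)]
```

Keeping superseded records instead of deleting them is what preserves the audit trail: `history()` still shows the earlier Berlin value, while `current()` returns only Munich.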

Disadvantages

- Latency Overhead: As a proxy service, it adds an extra network hop and a memory-retrieval step to each API request. The exact cost depends on deployment, though it replaces the context-fetching round trips you would otherwise have to implement yourself.

- Proxy Reliability Dependency: Your application’s uptime for AI features becomes dependent on Mnexium’s service availability as the intermediate gateway.
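
One common way to limit this dependency is a client-side fallback: try the memory proxy first and, if it times out or is unreachable, send the request straight to the LLM provider without memory. This is a generic pattern, not a Mnexium feature. `call_with_fallback` is a hypothetical helper, and the two callables stand for whatever HTTP calls your app already makes (ideally with a timeout set, which also caps the latency the proxy can add).

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_fallback(
    via_proxy: Callable[[], T],
    direct: Callable[[], T],
    on_fallback: Callable[[Exception], None] = lambda exc: None,
) -> tuple[T, bool]:
    """Try the memory proxy first; on a network failure, call the provider directly.

    Returns (response, used_memory). The direct path answers without recalled
    context, so callers may want to flag that reply as degraded.
    """
    try:
        return via_proxy(), True
    except OSError as exc:  # covers TimeoutError, ConnectionError, urllib's URLError
        on_fallback(exc)
        return direct(), False


def flaky_proxy() -> str:
    raise TimeoutError("memory proxy did not answer in time")


reply, used_memory = call_with_fallback(flaky_proxy, lambda: "answer without recalled context")
print(reply, used_memory)  # answer without recalled context False
```

Returning `used_memory` alongside the reply lets the application log or flag answers that were generated without recalled context.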

How Does It Compare?

The LLM memory market has shifted from basic vector storage to sophisticated context management platforms.

- Mem0

Mem0 is a leading competitor focusing on a personalized memory layer for AI agents. It excels at extracting and storing long-term facts but often requires more manual orchestration or SDK integration than Mnexium’s transparent proxy model. Mem0 is highly flexible for developers who want granular control over the memory graph.

- Zep

Zep specializes in long-term memory for AI assistant applications, offering high-performance indexing for chat histories and documents. While Zep provides powerful search and enrichment features, it typically operates as a separate service you call alongside your LLM, whereas Mnexium handles the injection into the LLM prompt automatically via its proxy.

- Pinecone (Vector Database)

Pinecone represents the infrastructure level of the stack. It provides the high-performance vector search that many memory systems are built upon. However, Pinecone requires developers to build their own pipelines for chunking, embedding, and retrieval. Mnexium abstracts this entirely, offering an end-to-end solution where Pinecone would only be a component of the backend.

- LangChain (Memory Modules)

LangChain offers various memory components within its framework (like ConversationBufferMemory). These are excellent for building prototypes within the LangChain ecosystem but are often transient or difficult to scale across distributed sessions. Mnexium provides a more robust, “plug-and-play” enterprise alternative that works independently of any specific coding framework.

Final Thoughts

Mnexium AI addresses the critical “forgetting” problem that hinders the deployment of truly intelligent AI agents. By moving context management into a dedicated, explainable infrastructure layer, it allows developers to focus on core product features rather than the intricacies of vector embeddings and retrieval logic. As the platform matures and moves out of beta, its ability to maintain a unified memory across multiple LLM providers will likely make it an essential tool for developers building the next generation of personalized AI applications.