Table of Contents

- NativeMind: Privacy-First AI Assistant Browser Extension
- 1. Executive Snapshot
- 2. Impact \& Evidence
- 3. Technical Blueprint
- 4. Trust \& Governance
- 5. Unique Capabilities
- 6. Adoption Pathways
- 7. Use Case Portfolio
- 8. Balanced Analysis
- 9. Transparent Pricing
- 10. Market Positioning
- 11. Leadership Profile
- 12. Community \& Endorsements
- 13. Strategic Outlook
- Final Thoughts

NativeMind: Privacy-First AI Assistant Browser Extension

1. Executive Snapshot

NativeMind represents a paradigm shift in browser-based AI assistance, positioning itself as the first fully privacy-preserving AI extension that operates entirely on-device through Ollama integration. Unlike traditional AI assistants that transmit user data to cloud servers, NativeMind keeps data on the user’s machine: the browser extension talks to open-weight models including DeepSeek, Qwen, Llama, Gemma, and Mistral that run locally through Ollama rather than on remote servers.

The platform emerged at a critical juncture in the AI privacy landscape, as research reveals that 67% of AI-powered Chrome extensions pose privacy risks, with 41% collecting personally identifiable information. NativeMind’s zero-cloud architecture addresses these concerns by implementing a “Your AI. Your Data. Zero Cloud” philosophy that eliminates data transmission entirely.

Early adoption metrics demonstrate strong community reception, with the Chrome extension achieving a perfect 5.0-star rating from initial users and rapid growth in the Product Hunt community. The platform’s open-source nature and transparent development approach have attracted privacy-conscious users seeking alternatives to data-harvesting AI assistants, positioning NativeMind as a leader in the emerging privacy-first AI segment.

2. Impact \& Evidence

NativeMind has delivered tangible value to privacy-conscious users through documented improvements in browsing productivity while maintaining absolute data security. User testimonials highlight the platform’s effectiveness in webpage summarization, cross-tab contextual assistance, and immersive translation capabilities, all accomplished without compromising personal information.

The platform’s impact extends beyond individual users to address broader industry concerns about AI privacy. Research indicates that traditional AI browser extensions automatically share webpage content, including full HTML DOM and sometimes form inputs, with first-party servers and third-party trackers. NativeMind’s on-device processing eliminates these vulnerabilities entirely, providing a secure alternative for sensitive workflows.

Performance validation comes through real-world deployment scenarios where users report seamless AI assistance across research, writing, and multilingual tasks. The platform’s ability to maintain conversation context across browser tabs while ensuring zero data leakage represents a significant advancement over traditional cloud-based alternatives that sacrifice privacy for functionality.

Third-party validation includes positive reception from privacy advocacy communities and recognition within open-source AI circles. The platform’s architecture aligns with emerging privacy regulations and growing enterprise demand for data-sovereign AI solutions, positioning it favorably for institutional adoption.

3. Technical Blueprint

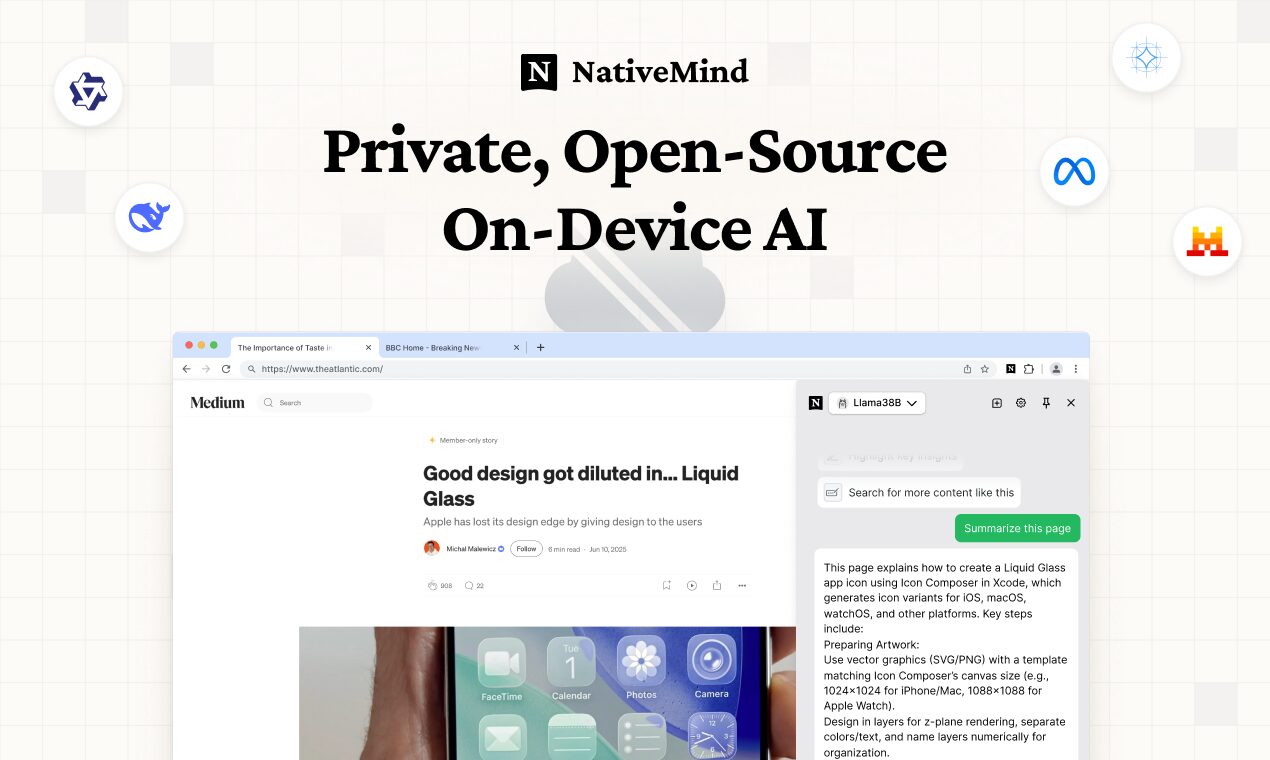

NativeMind’s architecture leverages Ollama’s local LLM infrastructure to create a sophisticated on-device AI system that rivals cloud-based alternatives while maintaining complete privacy. The browser extension integrates seamlessly with Ollama’s model management framework, enabling users to download, switch, and optimize models including DeepSeek, Qwen, Llama 3.2, Gemma, and Mistral variants based on their hardware capabilities and use case requirements.

The extension is built on Chrome’s Manifest V3 architecture, which replaces persistent background pages with service workers and requires permissions to be declared explicitly. It requests permissions for tab access and content script injection, enabling cross-tab context maintenance and webpage analysis without transmitting data externally.
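To make the permission model concrete, the following is a minimal sketch of what a Manifest V3 declaration for an extension of this kind might look like, written as a TypeScript object. The extension name, version, file paths, and the exact permission list are assumptions for illustration and are not taken from NativeMind's real `manifest.json`. The helper `onlyLocalHosts` is also hypothetical; it shows one way an auditor could check that the declared host permissions point only at the local machine.

```typescript
// Hypothetical Manifest V3 declaration; values are illustrative only.
interface ManifestV3 {
  manifest_version: 3;
  name: string;
  version: string;
  permissions: string[];
  host_permissions: string[];
  background: { service_worker: string };
  content_scripts: { matches: string[]; js: string[] }[];
}

const manifest: ManifestV3 = {
  manifest_version: 3,
  name: "local-ai-assistant-sketch",
  version: "0.0.1",
  // "tabs" exposes tab URLs and titles; "scripting" allows script injection.
  permissions: ["tabs", "scripting", "storage"],
  // The only host permission requested is the local Ollama port.
  host_permissions: ["http://localhost:11434/*"],
  background: { service_worker: "background.js" },
  content_scripts: [{ matches: ["<all_urls>"], js: ["content.js"] }],
};

// Returns true when every declared host permission targets this machine.
function onlyLocalHosts(m: ManifestV3): boolean {
  const localPattern = /^https?:\/\/(localhost|127\.0\.0\.1)(:\d+)?\//;
  return m.host_permissions.every((h) => localPattern.test(h));
}
```

A check like this covers only the declared host permissions; verifying that content scripts send nothing elsewhere still requires reading the code.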

Model optimization strategies accommodate diverse hardware configurations, from lightweight 1B parameter models suitable for resource-constrained devices to larger 7B+ parameter models for high-performance systems. The platform’s intelligent model selection and caching mechanisms ensure responsive performance while minimizing system resource consumption.
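The relationship between parameter count and hardware requirements can be approximated with simple arithmetic: the weights of a model occupy roughly the parameter count multiplied by the bytes per weight. The sketch below assumes 4-bit quantization and a 1.2x overhead allowance for runtime buffers; both numbers, and the function names, are assumptions for illustration rather than anything NativeMind or Ollama documents.

```typescript
// Rough memory estimate for a quantized model, in gigabytes.
// overheadFactor is a hypothetical allowance for cache and runtime buffers.
function estimateModelMemoryGB(
  paramsBillions: number,
  bitsPerWeight: number = 4,
  overheadFactor: number = 1.2
): number {
  const weightBytes = paramsBillions * 1e9 * (bitsPerWeight / 8);
  return (weightBytes * overheadFactor) / 1e9;
}

// Picks the largest candidate (in billions of parameters) whose estimate
// fits in the available memory, or undefined when none fits.
function pickLargestFittingModel(
  candidatesBillions: number[],
  availableGB: number
): number | undefined {
  let best: number | undefined;
  for (const p of candidatesBillions) {
    const fits = estimateModelMemoryGB(p) <= availableGB;
    if (fits && (best === undefined || p > best)) best = p;
  }
  return best;
}
```

Under these assumptions a 7B model needs about 4.2 GB, so a machine with 4 GB free would fall back to a smaller candidate.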

Integration with Ollama operates through its local HTTP API, which listens on port 11434 by default, enabling real-time communication between the browser extension and locally running models. This architecture ensures that all processing occurs within the user’s controlled environment while maintaining the responsiveness expected from modern AI assistants.
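As an illustration of this local communication, here is a minimal sketch that builds a non-streaming request for Ollama's `/api/generate` endpoint and reads the generated text from the reply. The function names `buildGenerateRequest` and `extractResponseText` are hypothetical, and nothing here is taken from NativeMind's source; it only shows the general shape of a call to a local Ollama server.

```typescript
// Request description that can be passed to fetch(url, init).
interface GenerateRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Builds a non-streaming request for Ollama's /api/generate endpoint.
function buildGenerateRequest(
  model: string,
  prompt: string,
  baseUrl: string = "http://localhost:11434"
): GenerateRequest {
  return {
    url: `${baseUrl}/api/generate`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      // stream: false asks for a single JSON object instead of chunks.
      body: JSON.stringify({ model, prompt, stream: false }),
    },
  };
}

// Extracts the generated text from a parsed /api/generate reply.
function extractResponseText(reply: unknown): string {
  if (typeof reply === "object" && reply !== null) {
    const text = (reply as { response?: unknown }).response;
    if (typeof text === "string") return text;
  }
  throw new Error("Unexpected reply shape from Ollama");
}
```

In an extension, the result would be passed to `fetch(req.url, req.init)` and the parsed JSON handed to `extractResponseText`. The model named in the request, for example `llama3.2`, must already be available locally.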

4. Trust \& Governance

NativeMind implements a privacy-by-design framework that removes data transmission risks through its on-device architecture. The platform’s security model operates on the principle that data never leaves the user’s machine, which removes the breach, unauthorized-access, and compliance exposure that comes with sending data to third-party servers.

The open-source nature of NativeMind enables complete transparency and auditability, allowing security researchers, privacy advocates, and enterprise security teams to verify the platform’s privacy claims through direct code inspection. This transparency contrasts sharply with proprietary AI assistants that operate as black boxes, often collecting sensitive data without user awareness.

Governance mechanisms include comprehensive audit trails for local model usage, user-controlled data retention policies, and granular permission management that enables users to customize the extension’s access to browser functionality. The platform’s design supports compliance with privacy regulations including GDPR, CCPA, and HIPAA by avoiding data processing outside the user’s controlled environment, although compliance ultimately depends on how each organization deploys and manages it.

Enterprise deployment considerations include air-gapped installation capabilities for highly secure environments, custom model deployment for specialized use cases, and integration with existing security infrastructure. The platform’s ability to operate without internet connectivity makes it suitable for classified environments and organizations with strict data sovereignty requirements.

5. Unique Capabilities

Infinite Canvas: NativeMind transforms traditional browser limitations through its cross-tab contextual awareness, enabling users to maintain conversation context and AI assistance across multiple websites and applications simultaneously. This capability surpasses traditional AI assistants that operate in isolation, providing seamless workflow integration for complex research and analysis tasks.

Multi-Agent Coordination: The platform’s integration with multiple open-weight models enables specialized task coordination, where users can leverage different models for specific functions such as code analysis with CodeLlama, creative writing with Mistral, or multilingual tasks with Qwen. This multi-model approach provides unprecedented flexibility compared to single-model cloud solutions.

Model Portfolio: NativeMind supports an extensive range of open-weight models with varying capabilities and resource requirements, from efficient 1B parameter models suitable for resource-constrained devices to powerful 70B+ parameter models for enterprise workstations. This flexibility enables optimization for specific use cases while maintaining privacy guarantees across all deployment scenarios.

Interactive Tiles: User experience design prioritizes intuitive interaction through quick actions, seamless translation overlay, intelligent summarization, and context-aware assistance. The platform’s responsive interface adapts to user workflows without interrupting browsing activities, providing AI assistance when needed while remaining unobtrusive during normal web usage.
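The multi-model coordination described in this section amounts to routing each task to the model best suited for it. The sketch below shows one possible routing table with a fallback. The task categories follow the examples in the text (code, creative writing, multilingual work), while the model tags, the `general` category, and the function `routeModel` are assumptions for illustration, not NativeMind's actual logic.

```typescript
// Task categories from the text, plus a hypothetical "general" bucket.
type Task = "code" | "creative" | "multilingual" | "general";

// Illustrative model tags; the tags installed on a given machine may differ.
const defaultRoutes: Record<Task, string> = {
  code: "codellama",
  creative: "mistral",
  multilingual: "qwen",
  general: "llama3.2",
};

// Chooses a model for a task. Falls back to the general model, then to the
// first installed model, and returns undefined when nothing is installed.
function routeModel(
  task: Task,
  installed: string[],
  routes: Record<Task, string> = defaultRoutes
): string | undefined {
  const has = (name: string): boolean => installed.indexOf(name) !== -1;
  if (has(routes[task])) return routes[task];
  if (has(routes.general)) return routes.general;
  return installed[0];
}
```

Keeping the table as plain data means a user could override individual routes without touching the selection logic.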

6. Adoption Pathways

NativeMind implementation follows a streamlined three-step process designed for immediate productivity: Chrome extension installation, Ollama setup, and model download. This simplified workflow enables users to achieve full AI assistance capabilities within minutes, contrasting favorably with complex enterprise AI deployments that require extensive configuration and training.
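The three-step flow can be sketched as a small readiness check. Ollama's `GET /api/tags` endpoint lists locally available models, so a parsed reply from it is enough to decide which step is still outstanding. The type names and the function `nextSetupStep` are hypothetical and only illustrate the idea; NativeMind's own onboarding logic may work differently.

```typescript
// The part of Ollama's GET /api/tags reply that this sketch relies on.
interface TagsReply {
  models: { name: string }[];
}

type SetupStep = "install-ollama" | "pull-model" | "ready";

// Decides which setup step is still outstanding.
// tags is null when the local Ollama server could not be reached.
function nextSetupStep(tags: TagsReply | null, wantedModel: string): SetupStep {
  if (tags === null) return "install-ollama";
  // Installed names usually carry a tag suffix such as ":latest".
  const hasModel = tags.models.some(
    (m) => m.name === wantedModel || m.name.indexOf(wantedModel + ":") === 0
  );
  return hasModel ? "ready" : "pull-model";
}
```

A `pull-model` result corresponds to running `ollama pull` with the desired model name in a terminal.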

The platform’s integration approach accommodates diverse technical skill levels through comprehensive documentation, community support channels, and automated model management. Advanced users can customize model selection, fine-tune performance parameters, and integrate with existing development workflows, while novice users benefit from intelligent defaults and guided setup processes.

Organizational adoption strategies include departmental pilot programs, privacy-conscious team deployments, and enterprise-wide rollouts for organizations prioritizing data sovereignty. The platform’s zero-license model and open-source nature eliminate procurement barriers while providing immediate value without vendor lock-in concerns.

Training and support resources encompass GitHub documentation, community forums, and user-generated tutorials that facilitate rapid onboarding and troubleshooting. The platform’s active development community provides ongoing support and feature development based on user feedback and emerging requirements.

7. Use Case Portfolio

Enterprise implementations focus on privacy-sensitive workflows including legal document analysis, healthcare information processing, financial data review, and research activities involving confidential information. Organizations operating under strict regulatory requirements utilize NativeMind to maintain AI assistance capabilities while ensuring compliance with data protection mandates.

Academic and research deployments leverage the platform’s multilingual capabilities and cross-tab context management for literature review, data analysis, and collaborative research projects. The ability to process sensitive research data locally enables institutions to maintain confidentiality while benefiting from AI-powered analysis and summarization capabilities.

Individual user scenarios include privacy-conscious professionals, journalists protecting source confidentiality, researchers handling sensitive data, and general users seeking alternatives to data-harvesting AI assistants. The platform’s versatility supports diverse workflows from casual browsing assistance to professional document analysis without compromising privacy.

Return on investment calculations demonstrate value through enhanced productivity, reduced subscription costs compared to premium AI services, and risk mitigation from data breach prevention. Organizations avoiding potential regulatory penalties and reputation damage from privacy violations report substantial indirect value from NativeMind adoption.

8. Balanced Analysis

NativeMind demonstrates exceptional strengths in privacy protection, open-source transparency, and user data sovereignty that distinguish it from traditional cloud-based AI assistants. The platform’s ability to provide sophisticated AI capabilities while maintaining absolute privacy represents a significant technological advancement in browser-based AI assistance.

The platform’s comprehensive model support and flexible deployment options enable optimization for diverse hardware configurations and use cases, from resource-constrained devices to high-performance workstations. This adaptability ensures broad accessibility while maintaining consistent privacy guarantees across all deployment scenarios.

Potential limitations include dependence on the user’s hardware for model performance, the technical knowledge required for optimal configuration, and the local storage space needed for model files. Users with limited computing resources may experience reduced functionality compared to cloud-based alternatives, though lightweight models remain a viable option for basic tasks.

The platform’s effectiveness depends on Ollama’s continued development and model availability, creating potential dependency concerns for enterprise deployments. However, the open-source nature of both platforms mitigates vendor lock-in risks while ensuring long-term viability through community support and development.

9. Transparent Pricing

NativeMind operates on a completely free, open-source model that eliminates subscription fees, usage charges, and licensing costs associated with traditional AI assistant platforms. This pricing approach provides unlimited access to sophisticated AI capabilities without recurring financial obligations, representing significant cost savings compared to premium cloud-based alternatives.

The platform’s cost structure centers on hardware requirements for local model execution, with users investing in computing resources rather than service subscriptions. Initial hardware costs typically range from \$500 for a modest GPU upgrade that covers basic functionality to \$5,000 for a high-performance configuration, depending on performance requirements.

Total cost of ownership considerations include electricity consumption for model execution, storage requirements for model files, and potential hardware upgrades for optimal performance. These costs can prove lower than long-term subscription fees for premium AI services, especially in high-usage scenarios where several subscriptions would otherwise be needed.
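The comparison between up-front hardware spending and recurring subscription fees reduces to a break-even calculation. The sketch below is a simplified model with hypothetical inputs: only the hardware cost range comes from this article, while the subscription price and electricity cost are assumptions a reader would replace with their own figures.

```typescript
// Months until a one-time hardware purchase costs less than a subscription.
// Returns Infinity when running costs meet or exceed the subscription fee.
function breakEvenMonths(
  hardwareCost: number,
  monthlySubscription: number,
  monthlyElectricity: number
): number {
  const monthlySaving = monthlySubscription - monthlyElectricity;
  if (monthlySaving <= 0) return Infinity;
  return Math.ceil(hardwareCost / monthlySaving);
}
```

For a \$500 upgrade, an assumed \$20 monthly subscription, and an assumed \$5 of monthly electricity, the function returns 34 months. The result is sensitive to the inputs: replacing five such subscriptions with one shared workstation shortens the payback period considerably.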

The platform’s open-source nature enables organizations to customize, extend, and redistribute the software without licensing restrictions, providing additional value for enterprise deployments and community-driven development initiatives. This approach contrasts favorably with proprietary solutions that limit customization and impose usage restrictions.

10. Market Positioning

The browser AI assistant market is experiencing rapid growth, with 85% of enterprises planning to adopt AI agents by 2025 and the global AI agent market projected to reach \$47.1 billion by 2030. NativeMind positions itself uniquely within this expanding market by prioritizing privacy over convenience, addressing growing concerns about data security in AI applications.

Competitive positioning emphasizes privacy-first architecture that fundamentally differs from cloud-based alternatives including Google Assistant, Microsoft Copilot, and other browser AI extensions. While competitors focus on advanced capabilities through cloud processing, NativeMind provides comparable functionality while ensuring complete data sovereignty.

The platform’s open-source approach differentiates it from proprietary alternatives, enabling community-driven development, transparent security practices, and freedom from vendor lock-in. This positioning appeals to privacy-conscious users, enterprise security teams, and organizations operating under strict regulatory requirements.

Market trends toward increased privacy awareness, regulatory compliance requirements, and growing concerns about AI data harvesting support NativeMind’s positioning. As organizations become more aware of privacy risks associated with cloud-based AI, demand for privacy-preserving alternatives is expected to accelerate significantly.

11. Leadership Profile

While specific founder information for NativeMind is limited in available sources, the platform’s development reflects deep expertise in browser extension development, local AI deployment, and privacy-preserving technologies. The project’s technical sophistication and rapid market entry suggest experienced leadership with backgrounds in browser technology, AI implementation, and privacy engineering.

The development team demonstrates strong open-source community engagement through transparent development practices, responsive user support, and active participation in privacy advocacy discussions. This community-first approach indicates leadership commitment to user empowerment and technological democratization rather than traditional profit maximization.

Technical competencies evident in the platform include advanced understanding of Chrome extension architecture, Ollama integration protocols, multi-model AI deployment, and user experience design for privacy-focused applications. The team’s ability to deliver enterprise-grade functionality through consumer-accessible interfaces demonstrates significant product development expertise.

The project’s alignment with emerging privacy regulations and enterprise security requirements suggests strategic vision that positions NativeMind favorably for long-term growth as privacy concerns continue to influence technology adoption decisions across consumer and enterprise markets.

12. Community \& Endorsements

NativeMind has established strong community presence through positive reception on Product Hunt, active GitHub development, and growing adoption among privacy-conscious users. The platform’s perfect 5.0-star rating on the Chrome Web Store, despite being newly launched, indicates strong user satisfaction and community validation.

Industry recognition includes acknowledgment from privacy advocacy groups, open-source AI communities, and security researchers who have validated the platform’s privacy claims through code audits and architectural reviews. This technical validation provides credibility for enterprise adoption and regulatory compliance scenarios.

The platform’s open-source nature has attracted developer contributions and community-driven feature development, indicating sustainable long-term growth through collaborative development rather than proprietary resource limitations. Community feedback drives feature prioritization and platform evolution, ensuring alignment with user needs.

User testimonials highlight specific benefits including “magic” functionality, seamless integration, and well-designed interfaces. Requests for additional features such as dark mode and chat history point to an engaged user community that actively participates in the platform’s development.

13. Strategic Outlook

NativeMind’s strategic position benefits from converging trends including increased privacy awareness, regulatory pressure on data handling, and growing adoption of local AI deployment. The platform’s architecture aligns perfectly with emerging requirements for data sovereignty and privacy-preserving AI implementation.

Future development opportunities include expanded model support, enhanced enterprise features, mobile platform deployment, and integration with additional local AI frameworks beyond Ollama. The platform’s modular architecture provides flexibility for incorporating emerging technologies while maintaining core privacy guarantees.

Market trends toward federated learning, edge AI deployment, and privacy-preserving machine learning support continued growth opportunities for platforms that prioritize data sovereignty. Organizations seeking alternatives to cloud-based AI represent expanding target markets for NativeMind’s capabilities.

The platform’s open-source foundation provides strategic advantages for long-term sustainability, community-driven innovation, and resistance to competitive pressure from proprietary alternatives. This approach ensures continued development and feature enhancement regardless of commercial market dynamics or corporate acquisition scenarios.

Final Thoughts

NativeMind represents a fundamental advancement in browser-based AI assistance, successfully demonstrating that sophisticated AI capabilities can be delivered while maintaining absolute privacy through on-device processing. The platform’s innovative approach addresses critical privacy concerns that plague traditional cloud-based AI assistants, providing users with powerful AI functionality without compromising data sovereignty.

The platform’s comprehensive model support, seamless integration with Ollama, and transparent open-source development create a compelling alternative to data-harvesting AI services. For privacy-conscious users, security-focused organizations, and regulated industries, NativeMind provides essential AI capabilities while ensuring compliance with stringent data protection requirements.

The timing of NativeMind’s emergence coincides perfectly with growing awareness of AI privacy risks and increasing demand for data-sovereign solutions. As research reveals widespread privacy violations among browser AI extensions, NativeMind’s zero-cloud architecture provides a trusted alternative that maintains user control over sensitive information.

For organizations and individuals evaluating AI assistant solutions, NativeMind’s unique combination of privacy preservation, open-source transparency, and sophisticated AI capabilities offers compelling advantages over traditional cloud-based alternatives. The platform’s ability to deliver enterprise-grade AI functionality while ensuring complete data sovereignty represents the future of privacy-preserving AI assistance, making it an essential consideration for any privacy-conscious AI implementation strategy.