Table of Contents

Overview

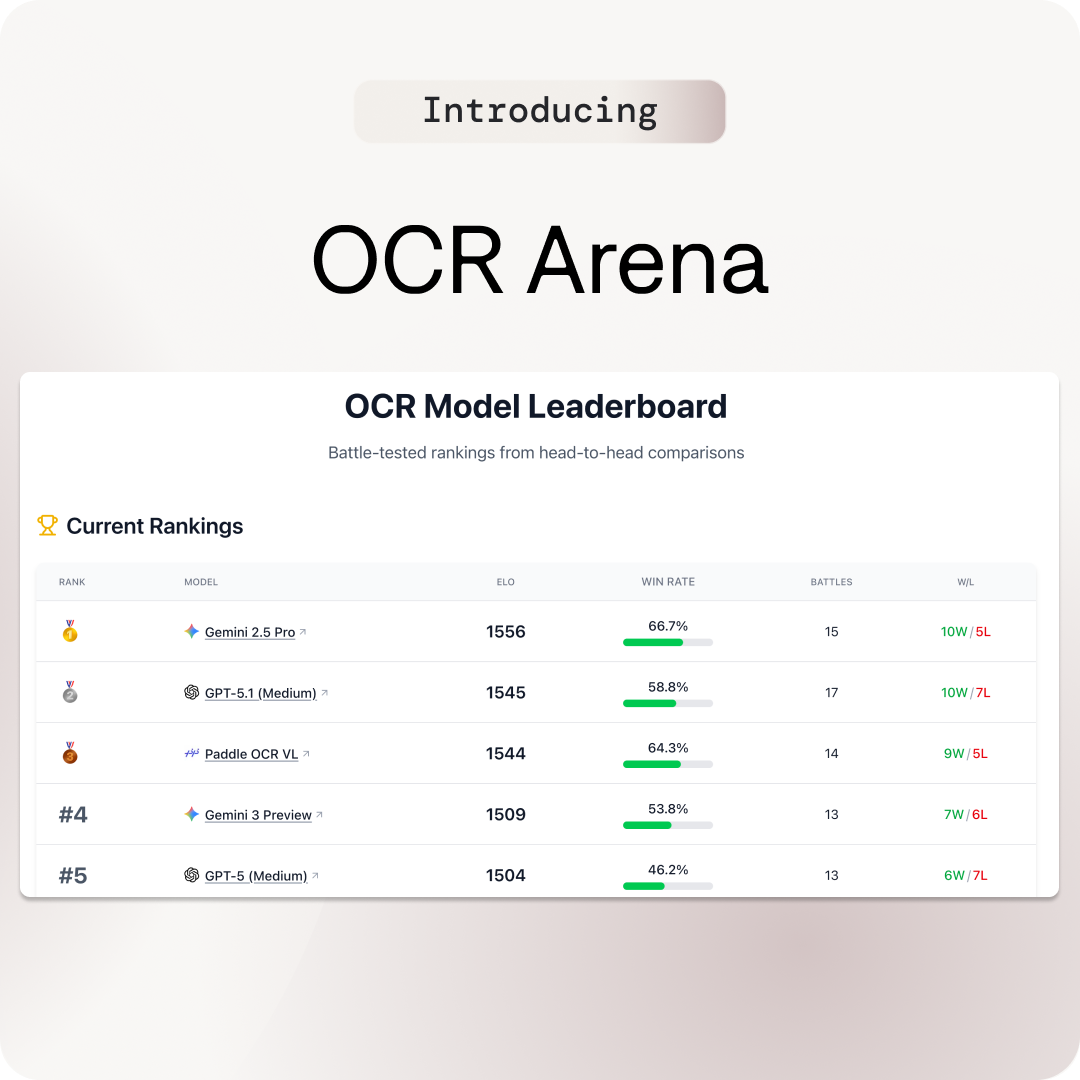

Selecting the optimal Optical Character Recognition or Vision Language Model for document processing presents significant challenges for developers and data scientists. With dozens of commercial and open-source options claiming superior performance, determining which model handles specific document types most accurately requires practical testing. OCR Arena addresses this evaluation gap by providing a free, interactive platform for head-to-head model comparison. Launched in November 2025, the platform enables users to upload their own documents and observe multiple OCR and VLM models process them simultaneously in anonymous battles. Users then vote for the best output, contributing to a community-driven Elo leaderboard that ranks model performance based on real-world document processing rather than abstract academic benchmarks.

The platform draws inspiration from LMSYS’s Chatbot Arena, which successfully crowdsourced language model evaluation through pairwise comparisons. OCR Arena applies this competitive arena methodology specifically to document understanding tasks, creating transparent rankings that reflect practical performance on diverse, user-submitted documents rather than standardized test sets.

Key Features

OCR Arena provides several core capabilities designed to simplify model evaluation and selection:

Document Battle Arena: Users upload documents in PDF, JPEG, or PNG formats to initiate comparison battles. The platform accepts single-page and multi-page documents, including scanned images, digital PDFs, screenshots, invoices, receipts, contracts, handwritten notes, and complex layouts with tables and mixed content. This flexibility allows testing on documents that match actual use cases rather than idealized samples.

Anonymous Multi-Model Processing: When a document is uploaded, OCR Arena automatically routes it to 10+ integrated OCR and VLM models running in parallel. The models process the document anonymously—users see outputs labeled as “Model A” and “Model B” without knowing which specific system generated each result. This anonymization eliminates brand bias, encouraging users to judge outputs purely on quality rather than reputation.

Side-by-Side Output Comparison: Results from different models appear in adjacent panels, enabling direct visual comparison of text extraction accuracy, formatting preservation, table recognition, and overall output quality. Users can quickly identify which model correctly handled difficult elements like handwriting, low-quality scans, or complex layouts.

Community Voting System: After reviewing outputs, users vote for the model that performed best. Votes are submitted with simple clicks, making participation effortless. Each vote contributes to Elo score calculations that update model rankings in real time, creating a dynamic leaderboard that evolves as more users contribute evaluations.

Public Elo Leaderboard: A transparent ranking system displays all integrated models sorted by Elo rating, win rate percentage, and total battle count. As of late November 2025, Google’s Gemini 3 leads the leaderboard with an 82.7% win rate. The leaderboard provides visibility into comparative performance, helping users identify top-performing models for their document types.

Random Document Testing: For users wanting standardized comparison across models, the platform offers a “random document” feature that selects pre-uploaded files representing diverse document types and complexity levels. This ensures benchmarking consistency across different models without requiring users to upload their own files.

How It Works

OCR Arena operates through a straightforward workflow designed for rapid evaluation:

Users access the platform through a web browser without requiring account creation or login. The battle interface presents an upload area where users drag and drop documents or click to browse files. Supported formats include PDF, JPEG, and PNG.

Once uploaded, the platform’s backend automatically distributes the document to multiple integrated OCR and VLM models. These models include both commercial APIs like Google Cloud Vision, GPT-4 Vision, Claude Vision, and Gemini, as well as open-source alternatives like PaddleOCR, Tesseract derivatives, and specialized document understanding models. Processing occurs in parallel to minimize wait times.

Results appear in a side-by-side comparison interface, typically showing two anonymized outputs at a time. Users examine each output’s accuracy, formatting, completeness, and overall quality. The interface highlights differences in text extraction, table recognition, layout preservation, and handling of challenging elements like handwriting or low-resolution scans.

After reviewing the outputs, users vote for the superior model by clicking a selection button. The vote is immediately recorded and contributes to Elo score recalculation using the Bradley-Terry model, which statistically estimates model strength based on pairwise comparison outcomes. This mirrors the methodology used in competitive gaming and the LMSYS Chatbot Arena.

Following the vote, the platform reveals which models produced which outputs, allowing users to learn which specific systems performed best on their document type. Users can then initiate additional battles with new documents or the same document processed by different model pairs, building comprehensive understanding of model strengths and weaknesses.

The leaderboard updates continuously as votes accumulate, providing real-time rankings based on community consensus. Models gain or lose Elo points with each battle outcome, creating competitive dynamics that incentivize model developers to improve performance.

Use Cases

OCR Arena serves diverse stakeholders in the document AI ecosystem:

Model Selection for Production Deployments: Developers building document processing pipelines can test candidate OCR APIs and open-source models on representative samples from their actual data. Rather than relying on vendor marketing claims or academic benchmark numbers, teams evaluate models on invoices, contracts, forms, or other domain-specific documents. This empirical testing reduces the risk of investing in models that underperform on real-world data.

Domain-Specific Benchmarking: Organizations with specialized document types—medical records, legal contracts, historical archives, handwritten forms, multilingual documents—can assess which models handle their unique challenges most effectively. OCR Arena’s user-upload approach enables testing on edge cases that standardized benchmarks often overlook.

Comparative Research and Model Evaluation: Academic researchers and model developers use the platform to understand how their OCR systems perform relative to state-of-the-art alternatives. The transparent leaderboard and anonymized voting provide unbiased feedback on model strengths and weaknesses across diverse document types.

Due Diligence for API Selection: Enterprises evaluating commercial OCR APIs from Google Cloud, AWS, Azure, or specialized providers can conduct side-by-side comparisons before committing to specific vendors. The platform enables informed procurement decisions based on performance rather than vendor relationships.

Community Knowledge Building: The collective intelligence generated by thousands of user votes creates a valuable public resource documenting which models excel at specific tasks. Users benefit from community knowledge when selecting models for new projects, while model developers gain insights into performance gaps requiring improvement.

Educational Exploration: Students and professionals learning about OCR technology can experiment with multiple models without needing API keys, subscriptions, or technical setup. The platform democratizes access to leading OCR systems for educational purposes.

Pros and Cons

Advantages

OCR Arena offers several compelling benefits for document AI practitioners:

The completely free access model eliminates financial barriers to model evaluation. Unlike proprietary benchmarking services or API trials with limited free tiers, OCR Arena provides unlimited testing at no cost. This accessibility benefits independent developers, startups, researchers, and students without evaluation budgets.

The community-driven voting system creates transparent, unbiased rankings grounded in real-world performance rather than vendor-funded benchmarks or cherry-picked test sets. The Elo rating methodology provides statistically robust performance estimates based on pairwise comparisons, similar to competitive chess rankings. This transparency helps users trust the leaderboard as a genuine performance indicator.

The visual side-by-side comparison interface makes quality assessment intuitive and effortless. Users need no technical expertise to identify which model produced better output—they simply compare results and vote. This simplicity encourages participation from non-technical stakeholders who understand document quality requirements even without OCR expertise.

The document-centric approach represents a fundamental advantage over academic benchmarks. Standard OCR evaluation datasets like IAM Handwriting, FUNSD, or SROIE consist of carefully curated samples that may not reflect the messy, varied documents encountered in production systems. OCR Arena lets users test on their actual documents, revealing performance on the specific challenges their applications face.

The platform creates network effects where community contributions benefit all users. As more people upload diverse documents and vote, the leaderboard becomes increasingly representative of real-world performance across varied use cases. This collective intelligence exceeds what individual organizations could generate through isolated testing.

The anonymous model presentation eliminates brand bias, encouraging objective evaluation. Users judge outputs purely on quality rather than being influenced by brand reputation, pricing, or marketing positioning.

Disadvantages

OCR Arena also faces several inherent limitations:

The accuracy of leaderboard rankings depends entirely on voting quality and consistency. If users vote carelessly, favor specific output styles regardless of accuracy, or lack domain expertise to judge specialized documents, the rankings may not reflect actual model performance. Crowd wisdom assumes crowd competence, which varies across participants.

The platform includes only pre-integrated models, limiting coverage to systems the developers have specifically added. Emerging OCR models, proprietary enterprise solutions, or specialized systems for niche domains may not be available for comparison. Users cannot test arbitrary models without platform operator involvement.

The evaluation methodology focuses exclusively on output accuracy and quality as judged by human voters. The platform provides no metrics for processing speed, latency, computational cost, API pricing, deployment complexity, language support, or other factors critical to production model selection. A model may rank highly for accuracy but prove impractical due to slow processing or prohibitive costs.

The reliance on user-uploaded documents means leaderboard rankings reflect the specific document types the community submits. If most users test on English invoices, the leaderboard may not generalize to handwritten notes in Asian languages or historical manuscripts. The rankings represent current community testing priorities rather than absolute model capabilities across all possible document types.

Privacy-sensitive organizations may hesitate to upload confidential documents to a public platform, even with assurances of secure processing. This limits participation from enterprises with strict data policies, potentially skewing the leaderboard toward consumer and SMB use cases.

The platform’s newness means the leaderboard is based on relatively limited battle data compared to established benchmarks with years of evaluation history. Rankings may exhibit volatility as more votes accumulate and statistical confidence improves.

How Does It Compare?

OCR Arena operates within a growing ecosystem of document AI evaluation tools and services, each serving distinct purposes:

Direct OCR Arena Competitors

Fast360: An open-source OCR arena platform positioning itself as the “industry’s first Open Source OCR Arena.” Fast360 provides free, no-login benchmarking of 7 top-tier OCR models including Marker, MinerU, MonkeyOCR, Docling, and others specifically optimized for PDF-to-Markdown conversion. The platform emphasizes speed alongside accuracy, targeting users building knowledge bases and RAG pipelines from PDFs. Compared to OCR Arena, Fast360 offers narrower model coverage with specialization in document-to-structured-text workflows. OCR Arena provides broader VLM integration and Elo-based community rankings, while Fast360 focuses on open-source transparency and PDF conversion use cases.

Roboflow Arena (OCR): Roboflow’s Arena platform includes an OCR-specific section enabling anonymous model battles for text recognition tasks. Users upload text images, vote on extraction quality, and reveal winning models. Roboflow Arena integrates with Roboflow’s broader computer vision ecosystem, including object detection and segmentation challenges. Compared to OCR Arena, Roboflow emphasizes integration with its model training and deployment platform, targeting users already within the Roboflow ecosystem. OCR Arena functions as a standalone evaluation tool without requiring ecosystem adoption.

Cloud OCR Services

Google Cloud Vision OCR (Document AI): Google’s enterprise OCR service consistently ranks among the most accurate commercial offerings, achieving 98%+ accuracy on clean documents in independent benchmarks. Document AI provides layout-aware OCR with table extraction, key-value pair detection, and form parsing. Available as managed cloud API with pay-per-page pricing. Compared to OCR Arena, Google Cloud Vision represents an actual OCR product rather than an evaluation platform. Users select Google based on benchmarks (potentially including OCR Arena rankings) when deploying production systems. Google Cloud Vision may appear as one of the models being evaluated within OCR Arena battles.

Amazon Textract: AWS’s document analysis service offering OCR plus intelligent document processing for invoices, receipts, identity documents, and forms. Textract provides synchronous and asynchronous processing with built-in

entity extraction. Pricing follows AWS consumption model. Like Google Cloud Vision, Textract is a production OCR service that may be included among the models OCR Arena evaluates, but serves a different function—delivering OCR capabilities rather than comparing them.

Azure AI Document Intelligence: Microsoft’s document processing service (formerly Form Recognizer) combining OCR with pre-built and custom document models. Supports both cloud API and on-premises container deployment for data sovereignty requirements. Azure AI Document Intelligence competes with Google and AWS as a commercial OCR provider, not as an evaluation platform. OCR Arena may help users decide whether Azure’s accuracy justifies its pricing for their use cases.

Open-Source OCR Tools

Tesseract OCR: The most established open-source OCR engine with 100+ language support and CPU-optimized processing. Tesseract achieves 95%+ accuracy on clean printed text but struggles with handwriting and complex layouts. Widely used in cost-conscious deployments and situations requiring fully local processing without cloud dependencies. Tesseract likely appears as one of the models evaluated in OCR Arena, allowing users to compare its performance against commercial alternatives. OCR Arena helps answer “Is Tesseract good enough for my documents, or do I need a commercial API?”

PaddleOCR: Open-source OCR framework from Baidu’s PaddlePaddle ecosystem supporting 80+ languages with optimized models for mobile and server deployment. PaddleOCR excels in multilingual scenarios and complex document layouts, achieving competitive accuracy with commercial systems on many tasks. Offers Apache 2.0 licensing for commercial use. OCR Arena likely includes PaddleOCR among evaluated models, enabling users to assess whether this free alternative matches their accuracy requirements.

EasyOCR: Python-based OCR library supporting 80+ languages with focus on ease of use and rapid integration. Built on PyTorch with GPU acceleration support. Popular among developers building quick prototypes and MVPs. EasyOCR may appear in OCR Arena evaluations, allowing users to gauge its accuracy relative to more sophisticated alternatives before committing to it for production deployments.

DeepSeek OCR: Recently released vision-language OCR system emphasizing context compression—it encodes document images into compressed representations that LLMs can process. Reports 97% decoding precision at 10x compression, 60% at 20x compression. Optimized for long-document processing in RAG and agent pipelines. DeepSeek OCR likely appears in OCR Arena given its recent prominence, enabling the community to validate claimed performance across diverse real-world documents.

Traditional OCR Benchmarks and Datasets

Academic Benchmark Datasets: Standardized evaluation sets like IAM Handwriting Database, FUNSD (Form Understanding), SROIE (receipt OCR), DocVQA (document visual question answering), and OCRBench provide controlled performance measurement on specific document types. These datasets enable reproducible research comparison but use fixed, artificial test sets that may not reflect production document diversity. OCR Arena differentiates by testing on user documents representing actual deployment scenarios, complementing academic benchmarks with practical performance data.

Hugging Face Model Cards and Spaces: Hugging Face hosts numerous OCR and VLM models with performance metrics reported by model authors. Users can demo models through Spaces interfaces. However, reported metrics may reflect optimized test conditions rather than real-world robustness. OCR Arena provides independent, community-validated performance assessment through blind comparison and voting, offering verification of claimed capabilities.

Commercial OCR Evaluation Services

ABBYY FineReader: High-accuracy commercial OCR software supporting 190+ languages with strong performance on complex layouts and multilingual documents. Available as SDK for integration or standalone application. ABBYY emphasizes on-premises deployment for data sovereignty and regulatory compliance. OCR Arena may include ABBYY in model comparisons if API access is available, helping users assess whether its premium pricing delivers superior accuracy.

Document AI Platforms (Docsumo, Nanonets, etc.): Specialized intelligent document processing platforms combining OCR with extraction, validation, and workflow automation. These platforms target specific verticals like accounting, healthcare, or logistics with pre-trained extractors for invoices, receipts, medical forms, etc. They compete less with OCR Arena and more with complete document workflow solutions. Users might reference OCR Arena rankings when selecting the underlying OCR engine for custom document processing pipelines.

Key Differentiators

OCR Arena distinguishes itself through several unique characteristics:

Community-Driven Evaluation Philosophy: Unlike vendor-provided benchmarks or academic datasets, OCR Arena generates rankings from crowdsourced judgments on diverse, real-world documents. This approach captures practical performance across varied use cases rather than optimized performance on standardized test sets.

Elo-Based Competitive Ranking: The adoption of Elo ratings from competitive gaming and chess creates a statistically robust ranking system that accounts for opponent strength and confidence intervals. This methodology provides more nuanced assessment than simple accuracy percentages.

Anonymous Blind Testing: By hiding model identities during evaluation, OCR Arena eliminates brand bias that influences perception when users know they’re testing “Google” versus “open-source alternative.” Blind testing encourages objective quality assessment.

Free, Zero-Setup Access: Unlike commercial OCR APIs requiring subscriptions, credits, or complex setup, OCR Arena provides immediate access to multiple leading models without financial or technical barriers. This democratizes high-quality OCR evaluation for individual developers and resource-constrained organizations.

Focus on Document Understanding, Not Image Recognition: While general computer vision platforms like Roboflow Arena cover object detection and segmentation, OCR Arena specializes specifically in text extraction and document understanding, attracting a focused community of document AI practitioners.

The platform succeeds as a complement to rather than replacement for other evaluation approaches. Users benefit from triangulating OCR Arena community rankings, traditional benchmark metrics, vendor-provided specifications, and custom testing on private data when making production model selections.

Pricing and Availability

OCR Arena operates as a completely free platform requiring no account creation, subscription, or hidden fees. Users access all features—document upload, multi-model comparison, voting, and leaderboard viewing—without payment. The website at ocrarena.ai provides immediate access through any modern web browser.

The free model reflects a strategic decision to maximize community participation and data collection rather than direct monetization. The platform’s value to the developer team likely derives from brand building, research insights, model performance data, and potential enterprise consulting opportunities rather than subscription revenue.

The platform is maintained by a small team, with developer Ishaan Agrawal publicly documenting the full-stack development process including database modeling, GPU deployment, and interface design. The project launched through Y Combinator’s network and gained visibility through Product Hunt, Reddit communities, and professional networks.

Long-term sustainability beyond the launch period has not been publicly detailed. Possible future monetization approaches could include enterprise features, API access to aggregated performance data, premium tiers with advanced analytics, or consulting services for organizations selecting OCR models.

Final Thoughts

OCR Arena represents a valuable contribution to the document AI ecosystem by addressing a genuine pain point: the difficulty of selecting appropriate OCR models without extensive, time-consuming testing. By providing free, transparent, community-driven benchmarking, the platform democratizes access to model evaluation previously available only to well-resourced organizations with dedicated testing infrastructure.

The platform is particularly valuable for developers in the model selection phase who need empirical performance data on their specific document types before committing to OCR APIs or integration efforts. The Elo leaderboard provides quick guidance on top-performing models, while direct document upload enables validation that highly-ranked models actually perform well on domain-specific documents.

However, users should recognize OCR Arena as one component of comprehensive evaluation rather than a complete selection methodology. The platform excels at comparative accuracy assessment but provides no information about latency, cost, deployment complexity, API reliability, customer support, compliance certifications, or contractual terms—all critical factors for production deployments. Additionally, leaderboard rankings reflect community testing patterns, which may not match specific organizational requirements for specialized document types or languages.

The platform’s success will ultimately depend on sustained community participation. A small, homogenous user base submitting similar document types could produce leaderboards with limited generalizability. Conversely, diverse, active participation from users across industries, languages, and document types would create increasingly valuable collective intelligence about real-world OCR performance.

For developers evaluating OCR models, data scientists benchmarking document processing pipelines, or product managers selecting vendors, OCR Arena provides an excellent starting point. The platform enables rapid comparative testing without financial or technical barriers, generating actionable insights that complement vendor specifications, academic benchmarks, and proprietary testing. As the community grows and battle data accumulates, OCR Arena has potential to become the de facto public reference for practical OCR model performance—the equivalent of LMSYS Chatbot Arena for document understanding.