Overview

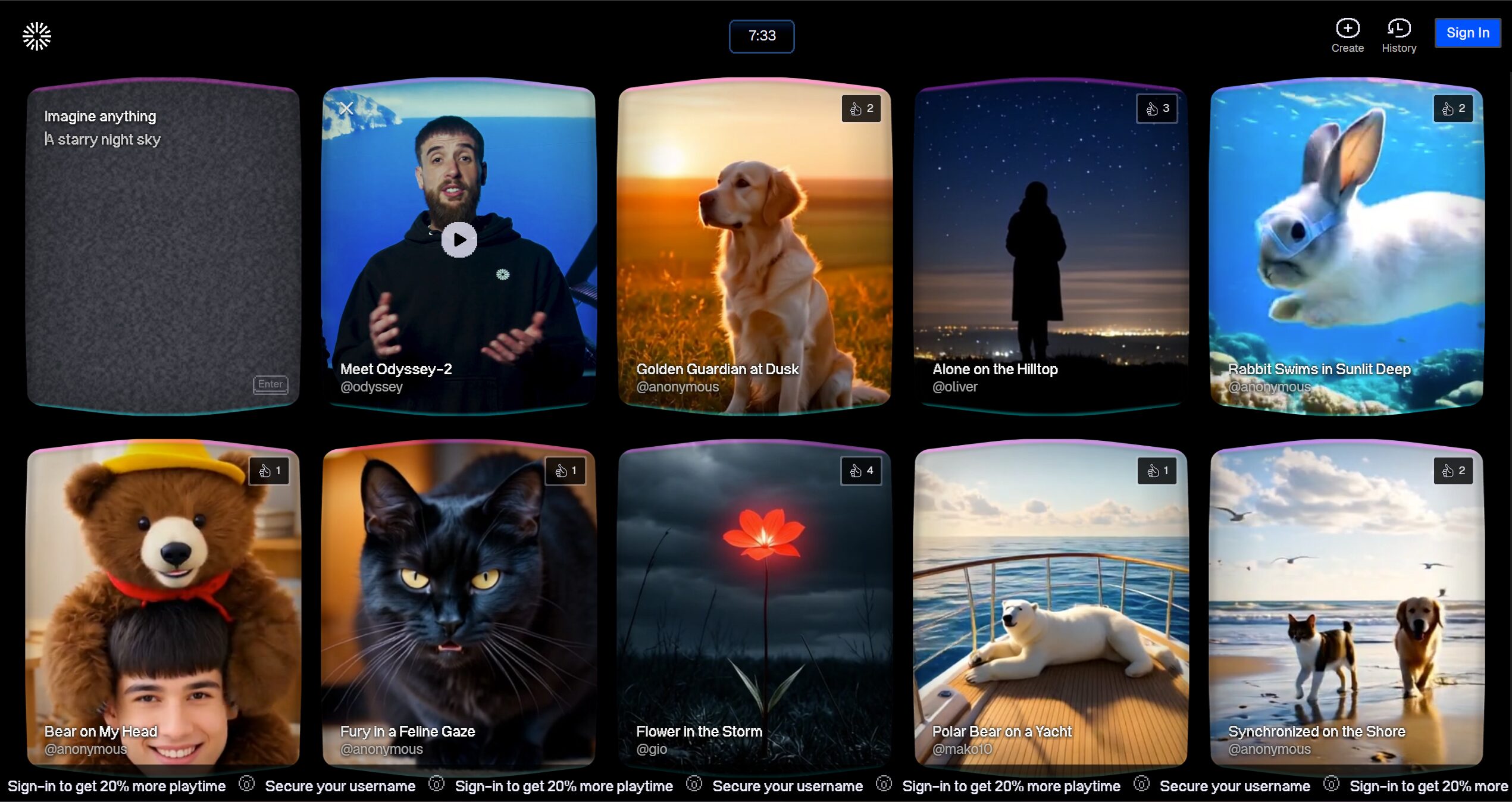

Welcome to the frontier of interactive video creation. Odyssey-2 is a breakthrough in AI video technology: the first commercially available model that generates video in real time while responding to user input. Rather than making you wait minutes for a fixed clip to render, Odyssey-2 starts streaming AI-generated video immediately at 20 frames per second and lets you guide its narrative interactively through natural text prompts. This turns video from a static, predetermined experience into an adaptive medium that evolves in response to your creative direction.

Developed by Odyssey Systems (founded in 2024 by Oliver Cameron and Jeff Hawke, veterans of autonomous vehicle AI research at Cruise and Wayve), Odyssey-2 marks a shift in how we think about computational storytelling. The platform uses a causal, autoregressive architecture that generates each frame based solely on prior frames and your text input, which allows open-ended interaction rather than pre-planned narratives. The same approach lets the system sustain multi-minute continuous streams while maintaining visual coherence and responsiveness to user guidance.

Key Features

Odyssey-2 offers several capabilities that set it apart from existing video generation platforms:

- Real-time AI video generation: Video is generated without pre-rendering delays; at 20 FPS, a new frame appears every 50 milliseconds as you interact with the model.

- Responsive streaming performance: Delivers continuous video output at a baseline of 20 FPS, with the capability to reach up to 30 FPS on enterprise GPU infrastructure, for smooth interaction without buffering.

- Text-guided interactive control: Direct the video’s evolution using natural language prompts entered during playback, with the model continuously adapting and regenerating video content based on your input without interrupting the stream.

- Emergent narrative generation: The model’s causal architecture generates each frame from the context of prior frames and your instructions, so input at any moment can redirect everything that follows, which makes open-ended storytelling possible.
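
The frame-rate figures in the list above can be checked with a few lines of arithmetic. This is a minimal sketch; the function names are illustrative only and are not part of any Odyssey-2 API.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_in_stream(fps: float, seconds: float) -> int:
    """Number of frames generated over a stream of the given length."""
    return round(fps * seconds)


if __name__ == "__main__":
    for fps in (20, 30):
        print(f"{fps} FPS -> {frame_interval_ms(fps):.1f} ms per frame")
    # A two-minute stream at the 20 FPS baseline
    print(f"120 s at 20 FPS -> {frames_in_stream(20, 120)} frames")
```

At the 20 FPS baseline this gives 50 ms per frame, matching the figure quoted above; at 30 FPS the interval drops to about 33 ms.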

How It Works

Odyssey-2’s operation differs fundamentally from batch-processing video generation tools. Rather than submitting a prompt, waiting several minutes, and receiving a fixed output, you start an interactive session by entering an initial prompt, and the system immediately begins streaming video to your screen. As it plays, you can type new prompts or change direction through a natural language interface. The model processes your input and adjusts the upcoming frames accordingly, with response latency under 100 milliseconds. The result is a collaborative experience in which your creative direction is applied continuously during playback rather than in post-production edits. Long-form coherence comes from the model’s causal training, through which it learns the temporal dynamics of motion, lighting, and physical behavior from extensive video datasets.
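
The session flow described above can be sketched as a toy simulation. Everything here is an assumption made for illustration: `ToySession`, `steer`, `next_frame`, and `run_session` are hypothetical names, not the Odyssey-2 API, and a "frame" is just a small record noting which prompt was active when it was produced.

```python
from dataclasses import dataclass, field


@dataclass
class ToySession:
    """Hypothetical stand-in for an interactive session (not a real API)."""
    prompt: str
    fps: int = 20
    frames: list = field(default_factory=list)

    def steer(self, new_prompt: str) -> None:
        # A new prompt only affects frames generated after this call.
        self.prompt = new_prompt

    def next_frame(self) -> dict:
        index = len(self.frames)
        frame = {
            "index": index,
            "time_ms": index * 1000 // self.fps,  # 50 ms apart at 20 FPS
            "prompt": self.prompt,
        }
        self.frames.append(frame)
        return frame


def run_session(initial_prompt: str, prompt_schedule: dict, total_frames: int) -> list:
    """Stream frames, applying scheduled prompt changes without stopping."""
    session = ToySession(initial_prompt)
    for i in range(total_frames):
        if i in prompt_schedule:
            session.steer(prompt_schedule[i])
        session.next_frame()
    return session.frames


if __name__ == "__main__":
    for f in run_session("a forest at dawn", {3: "snow begins to fall"}, 6):
        print(f)
```

In this sketch the prompt change at frame 3 leaves frames 0 to 2 untouched and applies from frame 3 onward, which is the behavior the paragraph describes: direction is applied during playback, not by regenerating the whole clip.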

Use Cases

Odyssey-2’s real-time, user-steered generation unlocks applications that weren’t feasible with traditional video production or batch-based AI video tools:

- Interactive storytelling and entertainment: Create dynamic narratives where audience members, viewers, or collaborators can influence plot development, character actions, and scene evolution in real time, so that each session produces a different outcome.

- Rapid game and experience prototyping: Visualize game environments and interactive scenarios instantly, testing environmental variations, character behaviors, and narrative branches without extensive development cycles, dramatically accelerating design iteration.

- Live audience-driven performances: Develop experiences where broadcast content, live streams, or performances adapt dynamically to viewer input, real-time audience polls, or creator direction.

- Educational and training applications: Build learning experiences that respond to student input or demonstrate cause and effect in real time, enabling dynamic explanations and adaptive lesson pathways.

- Emergent creative research: Explore the boundaries of AI video generation through real-time experimentation, discovering novel visual effects and narrative possibilities through interactive exploration.

Pros & Cons

Like any frontier technology still in active development, Odyssey-2 offers distinctive advantages alongside current limitations worth understanding.

Advantages

- Pioneering real-time interactivity: Delivers the first commercially available model enabling live, user-directed video generation, eliminating wait times that define existing generative video tools and creating fundamentally new creative workflows.

- Exceptional creative flexibility: Offers unparalleled freedom to continuously steer, modify, and explore video content, supporting spontaneous creative iteration and discovery within a single continuous generation session.

- Immediate iterative feedback: See results of your creative direction within milliseconds, fostering a highly responsive creative process that feels more like dialogue with the AI than task submission, enabling rapid experimentation.

Disadvantages

- Early-stage technology: As a nascent model still in active development, users may encounter limitations typical of frontier software, including evolving feature sets, occasional generation artifacts, and refinements to interaction models.

- Resolution and visual fidelity constraints: Current output is 512×512, reflecting the tradeoff inherent to real-time generation. Visual quality, while coherent and dynamic, does not yet match the fidelity of pre-rendered professional video production or of slower batch models such as OpenAI Sora or Runway Gen-3 Alpha.

- Emerging use case landscape: As real-time interactive video is a nascent category, best practices and optimal workflows are still being established through community experimentation and developer innovation.

How Does It Compare?

In the current landscape of AI video generation (November 2025), Odyssey-2 occupies a unique category that differs fundamentally from established tools.

Traditional batch-based tools like Pika, Runway Gen-3 Alpha, and OpenAI Sora excel at generating high-fidelity, fixed video clips from text prompts. These platforms prioritize output quality over generation speed, typically requiring 1-3 minutes of processing to produce 5-10 seconds of video. Once generated, these videos are static—any changes require resubmitting the prompt and waiting for new processing. Other batch tools in this category include Kling 2.1 and Luma Ray 2, which similarly focus on maximizing visual quality through extended generation times.

Odyssey-2 reverses these tradeoffs. Instead of optimizing for maximum visual fidelity, it optimizes for responsiveness and interactivity. Streams begin instantly, latency remains under 100 milliseconds, and users keep creative control throughout playback through real-time prompting. This makes Odyssey-2 well suited to interactive applications such as live experiences, educational tools, game design, and audience-driven content, use cases where batch generation and fixed outputs fundamentally don’t fit.
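
To put the batch figures quoted above (1-3 minutes of processing for 5-10 seconds of video) on the same scale as real-time streaming, one can compute how many seconds of processing each second of video costs. This is a rough sketch using only those quoted ranges; `processing_per_video_second` is an illustrative name.

```python
def processing_per_video_second(processing_s: float, clip_s: float) -> float:
    """Seconds of processing spent per second of finished video."""
    return processing_s / clip_s


# Best case from the quoted ranges: 1 minute for a 10-second clip
best_case = processing_per_video_second(60, 10)    # 6.0
# Worst case from the quoted ranges: 3 minutes for a 5-second clip
worst_case = processing_per_video_second(180, 5)   # 36.0

if __name__ == "__main__":
    print(f"Batch tools: {best_case:.0f}x to {worst_case:.0f}x slower than playback")
```

By this measure a real-time stream sits at 1x by definition, since it produces one second of video per second of wall-clock time.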

The technical enabler is Odyssey-2’s causal architecture. Unlike bidirectional models that reference both past and future context, Odyssey-2 generates each frame from prior frames and current input only. This constraint rules out optimizations that depend on future context, but it lets the model respond to user direction as it arrives. It’s the architectural choice that makes interactivity possible. Currently, Odyssey-2 remains the only commercial tool offering this real-time interactive video generation capability.
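
The causal property can be illustrated with a toy generator. This is not Odyssey-2's model: as a stand-in, each "frame" is a short hash of all earlier frames plus the current prompt, and `next_frame` and `generate` are hypothetical names. The sketch shows only the dependency structure, in which a frame never depends on anything that comes after it.

```python
import hashlib


def next_frame(history: list[str], prompt: str) -> str:
    """Toy frame: a digest of all prior frames plus the current prompt."""
    payload = "|".join(history) + "||" + prompt
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:8]


def generate(prompts: list[str]) -> list[str]:
    """Autoregressive loop: frame t sees frames 0..t-1 and prompt t only."""
    frames: list[str] = []
    for prompt in prompts:
        frames.append(next_frame(frames, prompt))
    return frames


if __name__ == "__main__":
    unchanged = generate(["forest"] * 5)
    steered = generate(["forest"] * 3 + ["snow"] * 2)
    print(unchanged[:3] == steered[:3])  # frames before the change are identical
    print(unchanged[3:] == steered[3:])  # frames from the change onward differ
```

Because nothing already shown depends on future input, a prompt change mid-stream alters only the frames from that point onward. A bidirectional model, whose frames depend on later context, could not offer this guarantee without revising frames it had already produced.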

For creators prioritizing maximum visual polish and detail for finished content, tools like Runway and Sora remain superior. For interactive experiences, real-time prototyping, and audience-engaged content, Odyssey-2’s capabilities are currently unmatched in the market. The choice depends entirely on whether your workflow prioritizes output fidelity or user interactivity.

Final Thoughts

Odyssey-2 marks a significant inflection point in AI video technology—the moment when video generation transitions from a batch processing task to an interactive, real-time creative medium. While current limitations in resolution and visual fidelity prevent it from replacing traditional video production, its real-time responsiveness and interactive capabilities unlock entirely new categories of creative expression.

This early-stage tool invites creative exploration from artists, educators, developers, and storytellers willing to work at the frontier of a new medium. The underlying technology, causal autoregressive video generation, points toward profound future capabilities: world simulators that respond to human direction, educational experiences that adapt in real time to student understanding, and entertainment that evolves based on audience participation.

For those ready to experiment with the next evolution of AI video, Odyssey-2 deserves serious attention.