Overview

Automatic speech recognition (ASR) has made remarkable progress in recent decades, but that progress has concentrated on high-resource languages with abundant training data available online. Approximately 7,000 languages exist globally, yet most ASR systems cover only dozens to hundreds of well-represented languages, leaving billions of speakers without access to transcription technology. This linguistic inequality reflects broader digital divides: speakers of minority, indigenous, and regional languages lack speech-enabled applications, accessibility tools, and cultural preservation technologies.

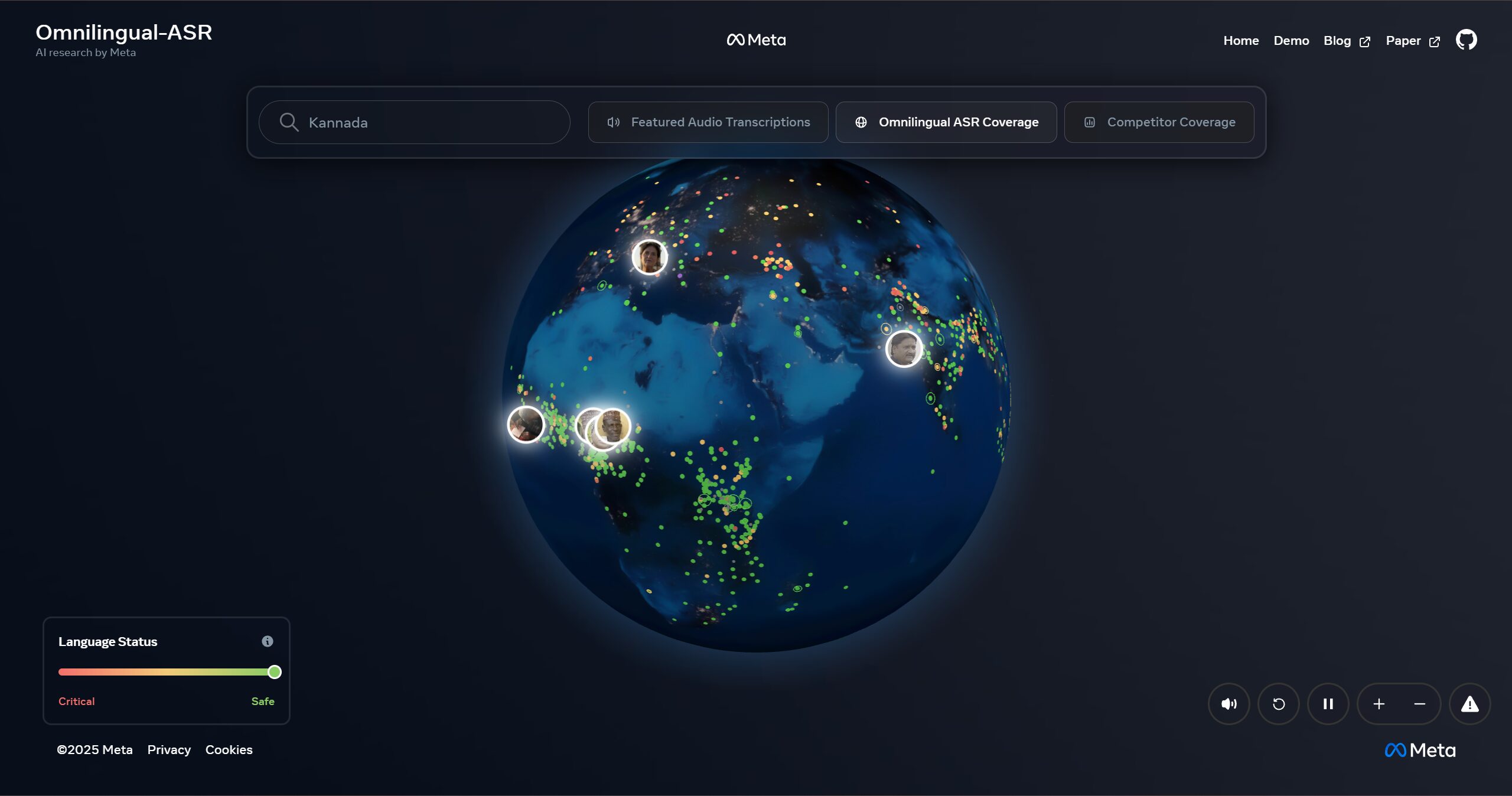

Meta’s Omnilingual ASR addresses this gap through language coverage: it delivers automatic speech recognition across 1,600+ languages, including 500 low-resource languages never before transcribed by AI systems. Rather than treating multilingual ASR as an engineering challenge that requires data collection and model retraining for each new language, Omnilingual ASR adopts a generative, LLM-based architecture that supports zero-shot extension: a new language can be added from just a handful of audio-text examples, without retraining. Released in November 2025 under the Apache 2.0 license, Omnilingual ASR represents Meta’s most significant commitment to linguistic democratization in AI.

Key Features

Omnilingual ASR delivers comprehensive automatic speech recognition capabilities specifically designed for global linguistic diversity:

- Unprecedented Language Coverage (1,600+): Transcribes speech across 1,600+ languages, including 500 previously unsupported low-resource languages. This is just under one-quarter of the world's approximately 7,000 languages and, going by the figures cited here, about 13 to 18 times the coverage of established systems (Whisper ~90 languages; Google Speech-to-Text ~120 languages).

- LLM-Inspired Generative Architecture: Uses an encoder-decoder design that pairs a wav2vec 2.0 speech encoder scaled to 7 billion parameters with an LLM-inspired decoder. Generating text token by token, rather than classifying each audio frame as CTC-style models do, is what allows the model to condition on in-context examples and gives it its zero-shot capability.

- In-Context Learning for Language Extensibility: Speakers of unsupported languages can extend the system through in-context learning (inspired by LLM few-shot capabilities). Providing just a handful of paired audio-text examples enables transcription without data collection campaigns, large-scale compute, or deep technical expertise. This opens the process of adding a language to community-led expansion.

- Exceptional Performance on Low-Resource Languages: Character error rates (CER) below 10% are achieved for 78% of the 1,600+ supported languages. Critically, performance remains strong for languages trained on minimal data (less than 10 hours for many low-resource languages). This contrasts with the degradation that ASR systems typically show when training data is limited.

- Model Suite for Diverse Deployment Contexts: Family of models from compact 300-million-parameter versions optimized for low-power devices (mobile phones, embedded systems, edge devices) to full 7-billion-parameter versions maximizing accuracy. This range addresses extreme deployment constraints from resource-limited environments to high-accuracy server deployments.

- Omnilingual ASR Corpus (350 Underserved Languages): Publicly released dataset of transcribed speech covering 350 previously understudied languages. Described as the “largest ultra-low-resource spontaneous ASR dataset ever made available,” it was assembled through partnerships with Mozilla Foundation (Common Voice) and Lanfrica/NaijaVoices, and through community-compensated data collection from native speakers in underrepresented regions. The 4.3+ million hours figure belongs to the audio used for self-supervised pre-training rather than to this corpus alone.

- Self-Supervised Learning at Scale: Scaled self-supervised learning (SSL) to 7 billion parameters, learning robust multilingual speech representations from unlabeled audio. This approach reduces reliance on expensive manual annotation, enabling cost-effective expansion across thousands of languages.

- Encoder-Decoder Variants for Optimization: Two decoder implementations—traditional CTC (Connectionist Temporal Classification) decoder for lightweight deployment, and LLM-inspired transformer decoder for maximum performance. Developers select optimal variant per deployment requirements.

- Multilingual Training Corpus: Integrated public datasets with community-sourced speech recordings gathered through compensated partnerships reaching languages with little digital presence. This represents deliberate effort toward equitable data collection across diverse communities.

- Apache 2.0 Open-Source Release: Model weights, code, and the Omnilingual ASR Corpus are released under permissive licensing that allows both commercial and research use. This transparent open-source approach lowers deployment barriers compared to proprietary solutions.
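The character error rate cited in the performance bullet above is conventionally computed as the character-level edit distance between a reference transcript and the model's hypothesis, divided by the reference length. The sketch below shows that standard definition. The function names are illustrative, and the source does not say which text normalization is applied before scoring, so none is applied here.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two strings, counted in characters."""
    # prev[j] holds the distance between the processed prefix of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference character
                curr[j - 1] + 1,     # insert a hypothesis character
                prev[j - 1] + cost,  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER = edit distance / number of reference characters."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return edit_distance(ref, hyp) / len(ref)
```

For example, `character_error_rate("hello world", "hallo world")` is 1/11, about 9%, which would fall just under the 10% threshold quoted above.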

How It Works

Omnilingual ASR turns multilingual speech recognition from a data-intensive engineering problem into an accessible generative process:

Foundation Phase: Self-supervised pre-training scales the wav2vec 2.0 speech encoder to 7 billion parameters across 4.3+ million hours of multilingual audio. Learning from unlabeled audio, the encoder discovers robust speech representations that capture phonetic, linguistic, and acoustic patterns across 1,600+ languages without requiring transcribed labels.
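For orientation, the original wav2vec 2.0 formulation trains the encoder with a contrastive objective over masked time steps: the context vector c_t at a masked position must pick out the true quantized latent q_t from a candidate set Q_t that contains q_t plus K distractors. The source does not state whether the 7B encoder uses this objective unchanged, so the following is background on wav2vec 2.0 rather than a description of Omnilingual ASR's exact training loss.

```latex
\mathcal{L}_m = -\log
  \frac{\exp\big(\mathrm{sim}(c_t, q_t)/\kappa\big)}
       {\sum_{\tilde{q} \in Q_t} \exp\big(\mathrm{sim}(c_t, \tilde{q})/\kappa\big)},
\qquad
\mathrm{sim}(a, b) = \frac{a^{\top} b}{\lVert a \rVert \, \lVert b \rVert}
```

Here κ is a temperature and sim is cosine similarity. In wav2vec 2.0 this term is combined with a weighted codebook diversity penalty. No transcripts appear anywhere in the loss, which is why unlabeled audio suffices.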

Training Phase: With pre-trained representations established, two decoder variants train on transcribed audio:

- CTC Decoder: Lightweight traditional automatic speech recognition decoder optimized for inference speed and model size

- LLM-ASR Decoder: Transformer-based decoder inspired by large language model design enabling sophisticated sequence-to-sequence transcription with enhanced capability
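To make the CTC option concrete, here is a minimal sketch of greedy CTC decoding: take the best symbol per frame, merge consecutive repeats, then drop the blank symbol. The blank index, the toy vocabulary, and the function name are assumptions for illustration, not details of the released models.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = 0  # assumed index of the CTC blank symbol


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]],
                      vocab: Sequence[str]) -> str:
    """Greedy CTC decoding over per-frame scores (one row per audio frame)."""
    # 1. Pick the highest-scoring symbol index for each frame.
    best: List[int] = [
        max(range(len(frame)), key=frame.__getitem__) for frame in frame_scores
    ]
    # 2. Merge runs of the same index into a single occurrence.
    collapsed = [index for index, _ in groupby(best)]
    # 3. Remove blanks, which only separate genuine repeated characters.
    return "".join(vocab[index] for index in collapsed if index != BLANK)
```

Each frame is decoded independently of the text produced so far, which keeps inference cheap. The LLM-ASR decoder instead generates each token conditioned on previous tokens, at higher cost.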

Supervised Training: Decoders train on a massive multilingual corpus with carefully tuned language sampling. Higher-resource languages (English, Mandarin, Spanish) are undersampled relative to lower-resource languages (Javanese, Tigrinya, Hausa), which keeps performance balanced across the resource spectrum rather than optimizing exclusively for well-represented languages.
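The source does not give the exact rebalancing scheme. One common technique in multilingual training is exponent-based (temperature) sampling, where each language's sampling probability is its data share raised to a power alpha between 0 and 1. The sketch below illustrates that technique only; the function name, the alpha value, and the hour counts in the usage note are made up for illustration.

```python
from typing import Dict


def sampling_weights(hours_by_lang: Dict[str, float],
                     alpha: float = 0.5) -> Dict[str, float]:
    """Sampling probability per language, proportional to (data share) ** alpha.

    alpha = 1.0 samples in proportion to available data;
    alpha = 0.0 samples every language equally.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if not hours_by_lang or any(h <= 0 for h in hours_by_lang.values()):
        raise ValueError("every language needs a positive number of hours")
    total = sum(hours_by_lang.values())
    scaled = {lang: (h / total) ** alpha for lang, h in hours_by_lang.items()}
    norm = sum(scaled.values())
    return {lang: s / norm for lang, s in scaled.items()}
```

With invented figures such as `{"eng": 10000.0, "tir": 10.0}`, proportional sampling would draw Tigrinya about 0.1% of the time, while `alpha=0.5` raises that to roughly 3%.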

Zero-Shot Generalization: Once trained, the LLM-inspired architecture supports inference on previously unseen languages. A handful of paired audio-text examples (typically 5-10 pairs) is supplied as context at inference time, and the model conditions on them to produce transcriptions for the new language without any weight updates, a mechanism analogous to in-context learning in large language models.
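Conceptually, the in-context mechanism amounts to placing the example pairs ahead of the target audio in the decoder's input. The sketch below shows only that ordering, using a hypothetical `Example` type and `build_context` helper. It is not the library's API, and the real system feeds audio embeddings and text tokens rather than file paths and strings.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Example:
    """One paired audio-text example in the new language (hypothetical type)."""
    audio_path: str
    transcript: str


def build_context(examples: Sequence[Example],
                  target_audio: str,
                  max_examples: int = 10) -> List[Tuple[str, str]]:
    """Interleave (audio, text) pairs, then append the audio to transcribe."""
    if not examples:
        raise ValueError("at least one paired example is required")
    segments: List[Tuple[str, str]] = []
    for ex in examples[:max_examples]:
        segments.append(("audio", ex.audio_path))
        segments.append(("text", ex.transcript))
    # The model is expected to continue the pattern with text for this audio.
    segments.append(("audio", target_audio))
    return segments
```

The cap of ten examples mirrors the "typically 5-10 pairs" figure above. Nothing in this step touches model weights.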

Deployment: Users select an appropriate model (300M for mobile, 7B for server deployment), choose a decoder variant (CTC or LLM-ASR), and specify the language. The system accepts audio under 40 seconds (unlimited-length support is planned) and returns a transcription with optional confidence scores.
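Until longer inputs are supported, recordings over the 40-second limit have to be split before transcription. The sketch below computes fixed-length windows with a small overlap. The 39-second window, the 1-second overlap, and the function name are assumptions chosen for illustration, and a production pipeline would more likely cut at silences so words are not split.

```python
from typing import List, Tuple


def split_into_segments(total_seconds: float,
                        max_seconds: float = 39.0,
                        overlap_seconds: float = 1.0) -> List[Tuple[float, float]]:
    """Return (start, end) times covering the recording in windows <= max_seconds."""
    if max_seconds <= 0 or not 0 <= overlap_seconds < max_seconds:
        raise ValueError("need max_seconds > 0 and 0 <= overlap_seconds < max_seconds")
    if total_seconds <= 0:
        return []
    step = max_seconds - overlap_seconds  # how far each new window advances
    segments: List[Tuple[float, float]] = []
    start = 0.0
    while True:
        end = min(start + max_seconds, total_seconds)
        segments.append((start, end))
        if end >= total_seconds:
            break
        start += step
    return segments
```

Each segment is then transcribed separately. The overlap gives a stitching step some shared audio to reconcile words at the boundaries.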

Use Cases

Omnilingual ASR’s unprecedented language coverage addresses scenarios where linguistic diversity creates application barriers:

- Accessibility Tools for Low-Resource Languages: Individuals speaking minority, indigenous, or regional languages access speech-to-text technology previously unavailable. Screen readers incorporating Omnilingual ASR enable digital access for communities historically excluded from accessibility technology.

- Multilingual Transcription at Scale: Media organizations, archivists, and researchers transcribe audio content spanning hundreds of languages. Documentary films, field recordings, oral histories, and international broadcasts can be transcribed across languages without a proportional increase in labor or cost.

- Language Preservation and Cultural Documentation: Indigenous communities document traditional languages before linguistic extinction. Omnilingual ASR enables community members to transcribe sacred texts, traditional knowledge, and cultural recordings in native languages with minimal technical infrastructure.

- Call Center Analytics Across Global Markets: Multinational organizations monitor customer calls across markets speaking diverse languages. Analytics spanning 1,600+ languages identify customer sentiment, compliance issues, and quality patterns globally rather than limiting to high-resource languages.

- Accessibility for Minority Language Speakers: Individuals with hearing impairments speaking minority languages receive live captioning and real-time transcription. Audiobooks, podcasts, and multimedia content reach deaf communities across linguistic diversity previously blocked by transcription unavailability.

- Multilingual Research and Linguistic Analysis: Researchers studying language evolution, linguistic variation, code-switching, and phonological patterns across diverse languages access ASR infrastructure previously limited to well-resourced languages. Linguistic diversity research becomes empirically tractable across thousands of languages.

- Automated Subtitle Generation for Global Content: Video creators generate subtitles across 1,600+ languages automatically. International organizations, educational platforms, and media companies expand global reach without subtitle localization labor.

Advantages

- Unmatched Language Breadth: 1,600+ languages representing ~23% of global linguistic diversity and 15-50x broader coverage than competitors. Encompasses languages with minimal digital presence and previously unsupported linguistic communities.

- Access Without Data Collection: In-context learning removes the traditional bottleneck in ASR expansion. Supporting a new language requires a handful of examples rather than thousands of hours of annotated training data, which opens language technology infrastructure to far more communities.

- Open-Source Democratization: Apache 2.0 licensing with released models, code, and training corpus enables unrestricted research and commercial deployment. Removes proprietary licensing barriers enabling global participation.

- Exceptional Low-Resource Performance: Unlike competitors’ performance degradation with limited data, Omnilingual ASR maintains strong accuracy across languages trained on minimal data. 78% of 1,600+ languages achieve sub-10% character error rate.

- Deployment Flexibility: Model family spanning 300M to 7B parameters enables deployment across extreme resource constraints (mobile devices, servers, embedded systems) without compromising language coverage.

- Eliminates Retraining Requirement: The generative, LLM-inspired architecture extends to new languages through inference-time adaptation rather than expensive model retraining. This lowers the technical and computational barriers to community-led language expansion.

- Supports Linguistic Minorities: Designed to prioritize underrepresented languages through partnership-based data collection. Community-compensated data gathering supports ethical practices with native speakers.

Considerations

- Emerging Platform Status: As a November 2025 release, Omnilingual ASR is new compared to established systems (Whisper was released in 2022; Google's speech recognition has been available for 10+ years). Early adoption carries risks of evolving APIs, changing model architectures, and maturing implementation practices.

- Current Technical Limitations: Inference restricted to audio clips under 40 seconds (unlimited support planned). Production applications may require preprocessing longer audio into segments.

- Performance Tradeoffs vs Specialized Systems: While Omnilingual ASR delivers exceptional breadth, specialized high-resource-language systems (Deepgram Nova-3, GPT-4o Transcribe, Whisper Large v3) may outperform on specific languages. Optimization for breadth inherently involves performance tradeoffs.

- In-Context Learning Quality Variability: Zero-shot performance with few examples, while impressive, cannot match fully-trained systems. Critical applications may require supplementing zero-shot capability with additional data collection and fine-tuning.

- Computational Requirements: The 7B model variant demands significant computational resources despite efficiency improvements. Organizations with tight resource constraints may need the 300M variant and should expect some accuracy reduction.

- Language Variant Coverage: While supporting 1,600+ languages, coverage of specific dialects and regional variants within languages may vary. Communities requiring specific dialect support should validate before deployment.

How It Compares

Omnilingual ASR is positioned distinctly from established competitors in the speech recognition landscape through language breadth, architecture design, and ethical positioning:

Established ASR Systems (Whisper, Deepgram, Google Speech-to-Text): OpenAI’s Whisper (released September 2022, ~90 languages) set the state of the art for well-resourced languages with a transformer-based architecture trained on 680,000 hours of weakly supervised multilingual data. Deepgram focuses on accuracy and latency optimization for streaming applications, specializing in domain-specific terminology (medical, legal, technical). Google Cloud Speech-to-Text supports ~120 languages with enterprise integration. All three focus on accuracy optimization for supported languages rather than breadth expansion. Omnilingual ASR inverts these priorities: breadth (1,600+ languages) rather than specialization, extensibility (in-context learning) rather than a fixed language set, and accessibility (low-resource focus) rather than market-driven language selection.

Latest OpenAI Models (GPT-4o Transcribe, March 2025): OpenAI released GPT-4o Transcribe, which achieves lower error rates than Whisper by building on the multimodal GPT-4o foundation. It is positioned as a premium accuracy solution for critical transcription. Omnilingual ASR targets the opposite end: maximum language coverage (1,600+ vs ~90) rather than maximum accuracy for individual languages.

Multilingual ASR Research Systems: Academic and open research initiatives (multilingual models hosted on Hugging Face, XLSR cross-lingual speech representation variants) push boundaries in multilingual speech processing. These focus on research contributions rather than production systems. Omnilingual ASR bridges research and production by releasing a research-grade system for widespread deployment.

Proprietary Multilingual Systems: Enterprise vendors offer multilingual speech recognition bundled with larger platforms. These lack Omnilingual ASR’s combination of 1,600+ language breadth, open-source accessibility, and explicit low-resource language prioritization.

Omnilingual ASR’s competitive differentiation centers on language breadth and accessibility: 1,600+ languages (15-50x competitors), zero-shot extensibility that requires a handful of examples rather than thousands of hours of data, an open-source release, deliberate prioritization of underrepresented languages through ethical community partnerships, and a model suite that fits a wide range of deployment constraints. For researchers studying linguistic diversity, organizations serving global markets across minority languages, indigenous communities preserving endangered languages, and institutions prioritizing accessibility, Omnilingual ASR delivers capabilities unavailable from established systems. For applications that prioritize accuracy on specific well-resourced languages or require specialized domain optimization (legal, medical), specialized competitors remain the stronger choice.

Final Thoughts

Speech technology has historically concentrated on high-resource languages, reflecting economic incentives and available training data. This concentration deepened digital inequality: billions of people speaking minority languages lacked fundamental voice-enabled technologies. Omnilingual ASR represents a different approach, deliberately designing a system that prioritizes linguistic diversity so that low-resource language communities can participate in AI-driven technology rather than remain peripheral.

Launching coverage for 1,600+ languages at once is a moonshot ambition: Meta assembled what it describes as the largest ultra-low-resource spontaneous ASR dataset, scaled self-supervised learning to 7 billion parameters, designed a generative ASR architecture, and ran community-compensated data collection across underrepresented regions. The open-source release multiplies the impact, since researchers, developers, and community organizations can adopt the infrastructure directly.

While Omnilingual ASR remains an early-stage platform (November 2025 launch), its potential impact on linguistic minorities, endangered language preservation, accessibility, and digital inclusion is substantial. For organizations committed to building truly global applications that serve linguistic diversity rather than optimizing exclusively for profitable markets, Omnilingual ASR provides unprecedented infrastructure. As multilingual AI adoption accelerates, systems that optimize for language breadth and community accessibility rather than specialized accuracy become essential infrastructure for just, inclusive AI development.

https://ai.meta.com/blog/omnilingual-asr-advancing-automatic-speech-recognition/