Table of Contents

Overview

In the rapidly evolving world of AI, voice interaction is becoming increasingly crucial. OpenAI’s GPT-4o Audio Models are stepping up to the plate, offering a powerful suite of tools for real-time speech-to-text and text-to-speech processing. These models promise enhanced accuracy, reduced latency, and broad language support, making them a compelling option for developers looking to build sophisticated voice applications. Let’s dive into what makes these models tick and how they stack up against the competition.

Key Features

GPT-4o Audio Models boast a range of impressive features designed to elevate your voice-based applications:

- Real-time speech-to-text transcription with improved accuracy: Transcribe spoken words into text with remarkable precision, even in noisy environments.

- High-quality text-to-speech synthesis with customizable voices: Generate natural-sounding speech from text, with options to tailor the voice to your specific needs.

- Multilingual support for over 50 languages: Reach a global audience with comprehensive language support.

- Low-latency processing suitable for interactive applications: Experience minimal delays, ensuring seamless and responsive interactions.

- Integration capabilities with various platforms and APIs: Easily incorporate the models into your existing workflows and systems.

How It Works

At its core, GPT-4o Audio Models leverage advanced neural network architectures to process audio inputs and generate corresponding text or speech outputs. These models are trained on vast and diverse datasets, encompassing a wide array of accents, languages, and contexts. This extensive training ensures accurate and natural interactions, regardless of the speaker’s background or the surrounding environment. The complex algorithms work together to analyze audio waveforms, identify phonemes, and construct coherent text or synthesize realistic speech.

Use Cases

The versatility of GPT-4o Audio Models opens up a wide range of potential applications:

- Developing virtual assistants and chatbots with voice capabilities: Create more engaging and intuitive conversational AI experiences.

- Transcribing meetings, lectures, and interviews in real-time: Capture spoken information accurately and efficiently.

- Enhancing accessibility features with speech recognition and synthesis: Make technology more accessible to individuals with disabilities.

- Implementing voice-controlled applications and devices: Enable hands-free control and interaction with various systems.

Pros & Cons

Like any technology, GPT-4o Audio Models have their strengths and weaknesses. Let’s break them down:

Advantages

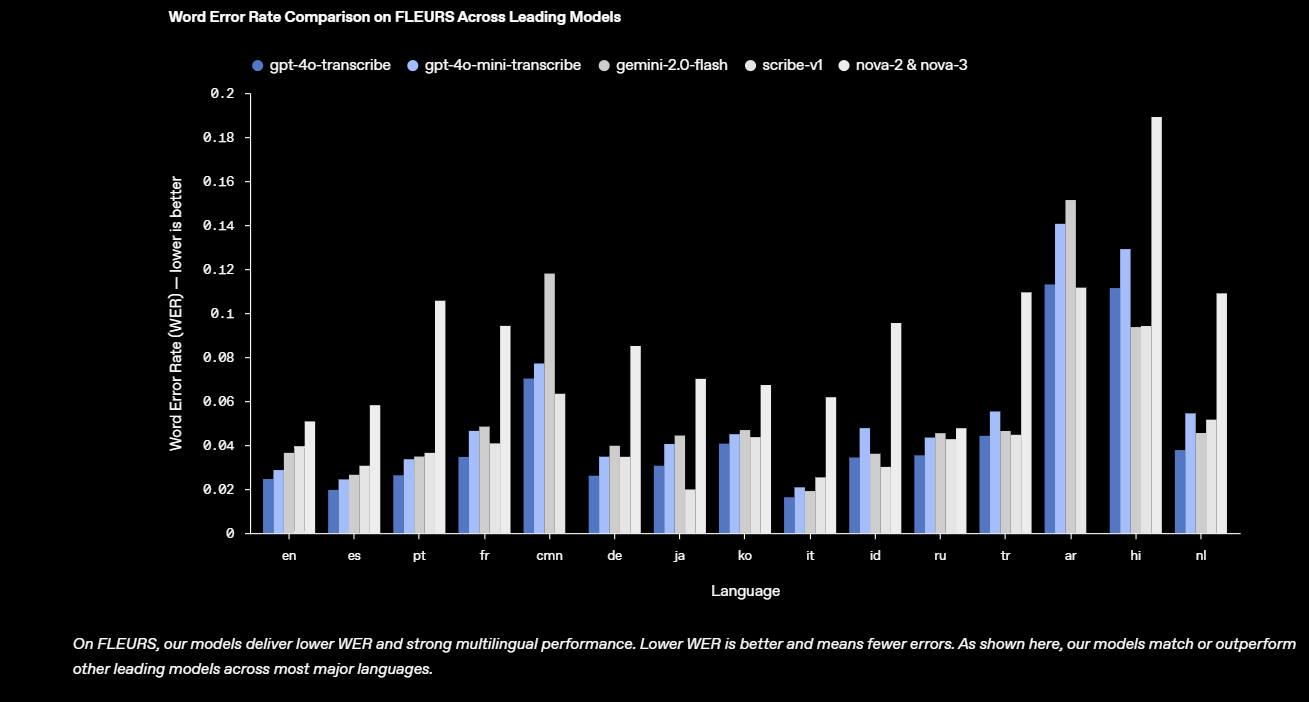

- High accuracy in speech recognition across multiple languages.

- Natural-sounding speech synthesis with customizable options.

- Low latency suitable for real-time applications.

Disadvantages

- Requires internet connectivity for API access.

- Potential privacy concerns with processing sensitive audio data.

How Does It Compare?

When considering alternatives, two prominent competitors emerge: Google Cloud Speech-to-Text and Amazon Transcribe. Google Cloud Speech-to-Text offers robust speech recognition capabilities but may exhibit higher latency compared to GPT-4o. Amazon Transcribe provides scalable transcription services, but its focus is less on real-time interaction, making GPT-4o a more suitable choice for applications requiring immediate responsiveness.

Final Thoughts

OpenAI’s GPT-4o Audio Models represent a significant advancement in the field of voice AI. With their impressive accuracy, low latency, and multilingual support, they offer developers a powerful toolkit for building innovative and engaging voice-based applications. While considerations like internet connectivity and privacy are important, the potential benefits of these models are undeniable. If you’re looking to incorporate cutting-edge voice technology into your projects, GPT-4o Audio Models are definitely worth exploring.

https://openai.com/index/introducing-our-next-generation-audio-models/