Overview

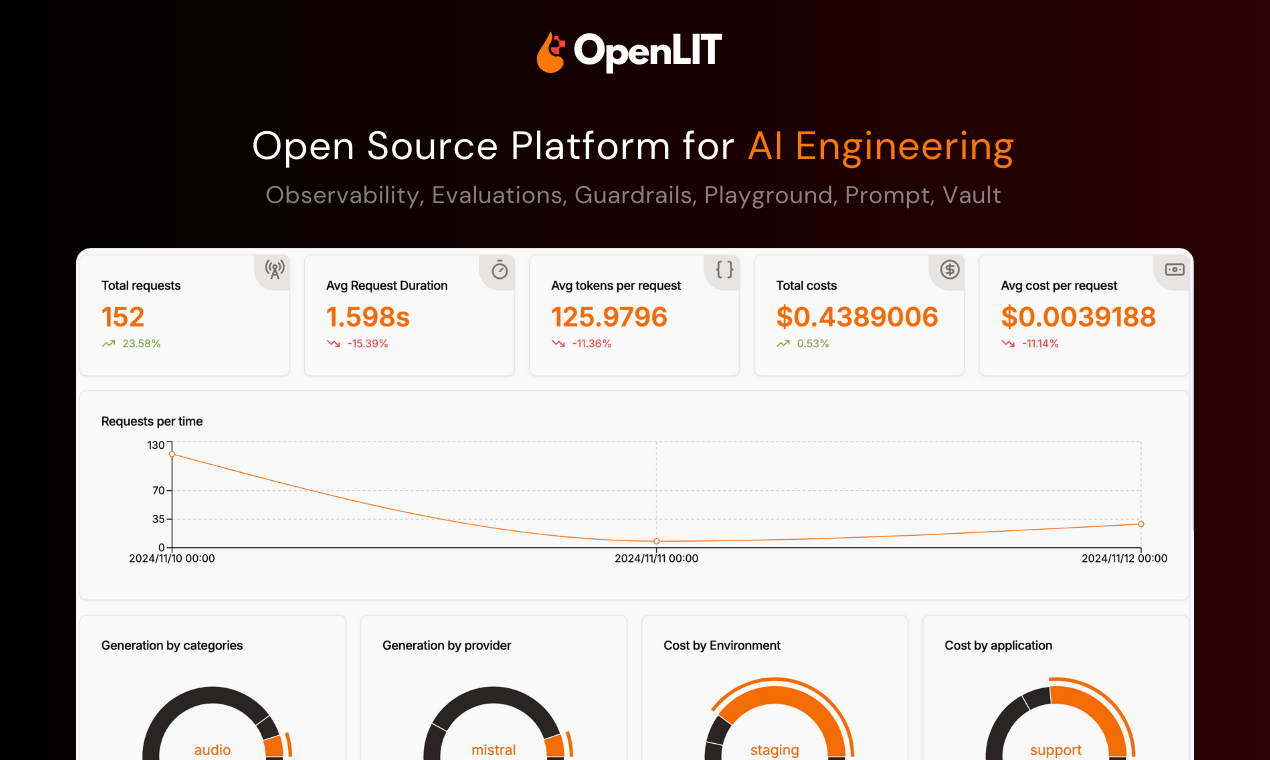

Building and deploying reliable, secure AI applications takes more than a powerful model. It also takes observability and control. OpenLIT 2.0 is an open-source, self-hosted platform that gives developers tools to develop, observe, and optimize their AI applications. Let’s look at what makes OpenLIT a compelling option for teams serious about AI development.

Key Features

OpenLIT 2.0 offers a set of features built for LLM-powered applications:

- LLM Experimentation: Run A/B tests and experiments across different LLMs and configurations to find the best-performing setup.

- Prompt Management and Versioning: Organize, track, and version your prompts to ensure consistency and reproducibility across your AI applications.

- Secure API Key Handling: Securely store and manage API keys, protecting sensitive credentials from unauthorized access.

- Prompt Injection Protection: Mitigate the risk of prompt injection attacks, safeguarding your applications from malicious inputs.

- OpenTelemetry-native Observability: Leverage OpenTelemetry standards for comprehensive observability, enabling seamless integration with existing monitoring infrastructure.

- Integration with Grafana and Prometheus: Visualize and analyze telemetry data in widely used observability tools such as Grafana and Prometheus.
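
To make the prompt versioning idea concrete, here is a minimal sketch of a versioned prompt store. It is not OpenLIT's API: `PromptRegistry`, `save`, `get`, and `fingerprint` are hypothetical names chosen for illustration, and the store lives in memory only.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store (illustration only, not OpenLIT's API)."""

    _versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Store a template and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        # Saving text identical to the latest version does not create a new one.
        if history and history[-1] == template:
            return len(history)
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the latest template, or a specific 1-based version."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise ValueError(f"{name!r} has no version {version}")
        return history[version - 1]

    def fingerprint(self, name: str, version: Optional[int] = None) -> str:
        """Short content hash, useful for tagging telemetry with the exact prompt used."""
        text = self.get(name, version)
        return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
```

Pinning a call to a version number or fingerprint is what makes results reproducible: two runs that report the same fingerprint used byte-identical prompt text.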

How It Works

To use OpenLIT, you integrate the OpenLIT SDK into your AI application. The SDK automatically collects telemetry on prompt usage, LLM performance, and application behavior. You can view this data in the built-in dashboards or export it to external observability tools such as Grafana and Prometheus. With it, developers can monitor performance, debug issues, track costs, and check the security of their AI applications. That visibility into how your application actually behaves supports data-driven decisions about what to optimize.
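
The collection step can be pictured with a small sketch. This is not the OpenLIT SDK, which instruments LLM clients for you. It is a hand-rolled stand-in using only the Python standard library, and `observed`, `CallRecord`, `RECORDS`, and `fake_llm` are hypothetical names. It shows the general pattern: wrap each LLM call, time it, and record what went in and out.

```python
import time
from dataclasses import dataclass
from functools import wraps
from typing import Callable, List


@dataclass
class CallRecord:
    """One telemetry record per LLM call (hypothetical schema)."""
    operation: str
    latency_s: float
    prompt_chars: int
    response_chars: int
    ok: bool


# In a real setup these records would be exported to a telemetry backend;
# here they are simply kept in a list.
RECORDS: List[CallRecord] = []


def observed(operation: str) -> Callable:
    """Decorator that records latency and payload sizes for each call."""
    def decorator(fn: Callable[..., str]) -> Callable[..., str]:
        @wraps(fn)
        def wrapper(prompt: str, *args, **kwargs) -> str:
            start = time.perf_counter()
            response = ""
            ok = False
            try:
                response = fn(prompt, *args, **kwargs)
                ok = True
                return response
            finally:
                # Runs on success and on failure, so errors are recorded too.
                RECORDS.append(CallRecord(
                    operation=operation,
                    latency_s=time.perf_counter() - start,
                    prompt_chars=len(prompt),
                    response_chars=len(response),
                    ok=ok,
                ))
        return wrapper
    return decorator


@observed("chat.completion")
def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call."""
    return prompt.upper()
```

Calling `fake_llm("hello")` returns the response as usual and appends one `CallRecord` to `RECORDS`. A dashboard or exporter would then read from that stream of records.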

Use Cases

OpenLIT 2.0 addresses a wide range of use cases in the AI development lifecycle:

- AI App Development: Streamline the development process by providing real-time insights into application behavior and performance.

- Prompt Testing and Optimization: Experiment with different prompts and evaluate their effectiveness to improve LLM output quality.

- LLM Performance Monitoring: Track key performance indicators (KPIs) such as latency, throughput, and accuracy to identify bottlenecks and optimize LLM performance.

- Cost Management: Monitor API usage and costs associated with LLM deployments to optimize resource allocation and minimize expenses.

- Observability and Debugging: Gain deep visibility into application behavior to identify and resolve issues quickly and efficiently.

- Security Enforcement: Implement prompt injection protection measures to safeguard your applications from malicious attacks.
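
For the performance monitoring and cost management cases, the underlying arithmetic is simple. The sketch below assumes each call is logged with a model name, token counts, and latency. The record shape, the `model-a` name, and the prices in `PRICE_PER_1K` are all made up for illustration, and real prices vary by provider and model.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens (not real provider pricing).
PRICE_PER_1K: Dict[str, Dict[str, float]] = {
    "model-a": {"input": 0.5, "output": 1.5},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: tokens times the per-1,000-token price, per direction."""
    price = PRICE_PER_1K[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


def summarize(calls: List[dict]) -> dict:
    """Aggregate logged calls into the figures a cost or latency panel would show."""
    latencies = [c["latency_s"] for c in calls]
    return {
        "calls": len(calls),
        "total_cost_usd": sum(
            call_cost(c["model"], c["input_tokens"], c["output_tokens"])
            for c in calls
        ),
        "mean_latency_s": mean(latencies) if latencies else 0.0,
        "max_latency_s": max(latencies, default=0.0),
    }
```

Tracking the maximum alongside the mean matters because a few slow calls can hide behind a healthy average.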

Pros & Cons

Advantages

- Open-source: Benefit from a transparent and community-driven platform with the flexibility to customize and extend its functionality.

- Self-hosted: Maintain complete control over your data and infrastructure, ensuring data privacy and security.

- Rich observability: Gain comprehensive insights into your AI applications with OpenTelemetry-native observability.

- LLM-optimized: Designed specifically for LLM-powered applications, providing tailored features and capabilities.

- Secure and privacy-focused: Protect sensitive data and credentials with secure API key handling and prompt injection protection.

Disadvantages

- Setup complexity: Requires technical expertise to install and configure the platform.

- Requires familiarity with observability tools: Leveraging the full potential of OpenLIT requires familiarity with tools like Grafana and Prometheus.

How Does It Compare?

When evaluating observability and prompt management tools for AI applications, it’s important to consider alternatives. Langfuse offers similar observability features, though its integrations may differ. PromptLayer is strong on prompt management but offers weaker observability than OpenLIT. Arize AI covers a broader range of AI workloads but is less focused on LLM-specific needs. OpenLIT distinguishes itself by being open source and self-hosted, with a strong focus on LLM observability and security.

Final Thoughts

OpenLIT 2.0 is a strong option for developers who want a comprehensive, open-source platform for developing and observing AI applications. Its feature set and its focus on security and observability make it useful for building reliable, secure AI solutions. Setup complexity and the need to know tools like Grafana and Prometheus may be a barrier for some teams, but the added control, transparency, and security make OpenLIT worth considering for those serious about AI development.