Overview

Retrieval-Augmented Generation (RAG) systems are only as good as the passages they retrieve, and Large Language Models (LLMs) fed raw or arbitrarily split text often receive fragments stripped of their context. Enter Preprocess, a tool that improves RAG pipelines by intelligently chunking documents. By breaking PDFs and Office documents into meaningful segments, Preprocess gives your LLMs contextually rich and relevant passages, which leads to more accurate and useful outputs.

Key Features

Preprocess boasts a suite of features designed to optimize your document processing for LLM integration:

- Semantic Document Chunking: Preprocess goes beyond simple text splitting, analyzing the semantic content of your documents to create chunks that preserve meaning and context.

- Structure-Aware Splitting: The tool intelligently recognizes and leverages the structural layout of your documents, such as headings, paragraphs, and tables, to create more coherent chunks.

- Support for PDF and Office Files: Preprocess handles common document formats, including PDFs and Microsoft Office files such as Word documents and PowerPoint presentations.

- Optimized for RAG: Designed specifically for RAG applications, Preprocess produces chunks suited to efficient retrieval and to insertion into an LLM’s context window.

- Improved LLM Context Handling: By giving LLMs well-defined, contextually relevant chunks, Preprocess helps them understand queries and answer them more accurately.
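The article does not say how Preprocess detects semantic boundaries, so the following is only a generic sketch of one common semantic-chunking technique, not Preprocess’s implementation: start a new chunk wherever the similarity between adjacent sentences drops below a threshold. To stay self-contained it uses a toy bag-of-words cosine similarity in place of the sentence embeddings a real system would use; the function names and the `threshold` value are illustrative assumptions.

```python
import math
import re
from collections import Counter


def vectorize(sentence: str) -> Counter:
    """Toy bag-of-words vector; a real system would use a sentence-embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: list[str], threshold: float = 0.2) -> list[list[str]]:
    """Group consecutive sentences, opening a new chunk wherever the similarity
    between a sentence and the one before it falls below `threshold`."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    prev = vectorize(sentences[0])
    for sentence in sentences[1:]:
        vec = vectorize(sentence)
        if cosine(prev, vec) < threshold:
            chunks.append([])  # likely topic shift: start a new chunk
        chunks[-1].append(sentence)
        prev = vec
    return chunks


if __name__ == "__main__":
    sentences = [
        "Invoices are due within 30 days.",
        "Invoices paid after 30 days incur a fee.",
        "The office closes on public holidays.",
        "Public holidays are listed on the office calendar.",
    ]
    print(len(semantic_chunks(sentences)))  # → 2
```

Here the two billing sentences share the terms "invoices", "30", and "days" and stay together, while the jump to holidays shares no terms with the previous sentence and opens a second chunk. Comparing only adjacent sentences is the simplest variant; with a real embedding model the threshold would need tuning.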

How It Works

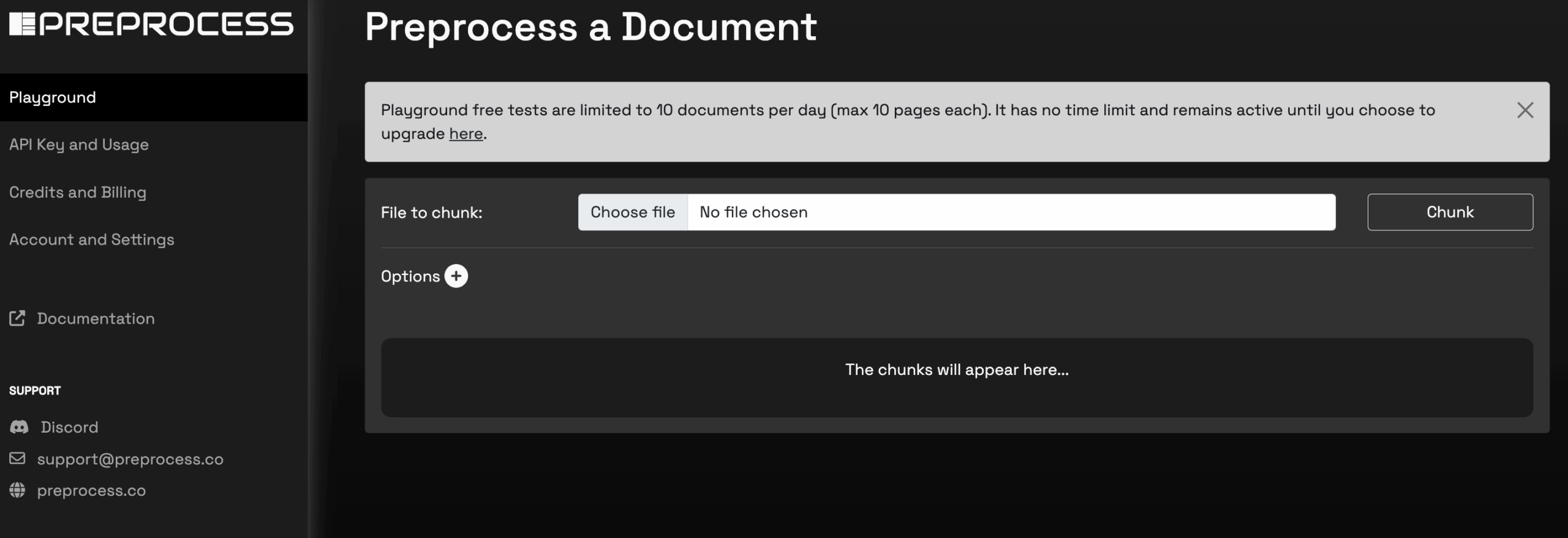

The workflow is straightforward. Users upload documents to the Preprocess platform, which parses and segments them using structural and semantic analysis: it identifies structural elements such as headings, paragraphs, and tables, and tracks how different parts of the text relate in meaning. Chunk boundaries are then chosen to keep related content together, so each chunk retains as much context as possible when it is used in an LLM pipeline. Better-formed chunks mean better retrieval and, ultimately, more accurate LLM responses.
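The article describes this pipeline only at a high level, so the code below is a generic sketch of structure-aware chunking rather than Preprocess’s actual algorithm or API. It assumes a hypothetical parser has already turned the uploaded file into a flat list of `Element` records (headings with a depth, paragraphs, tables). Chunks break at every heading, never split a paragraph or table, respect a character budget (`max_chars`, an arbitrary illustrative default), and carry their heading path as a prefix to retain context.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """One parsed document element (hypothetical parser output)."""
    kind: str       # "heading", "paragraph", or "table"
    text: str
    level: int = 0  # heading depth (1 = top level); unused for other kinds


def structure_aware_chunks(elements: list[Element], max_chars: int = 500) -> list[str]:
    """Group whole elements into chunks, breaking at headings and at the size budget.

    Each chunk is prefixed with its heading path so it keeps its context
    when retrieved on its own.
    """
    chunks: list[str] = []
    path: list[str] = []  # current heading trail, e.g. ["Billing", "Late fees"]
    body: list[str] = []  # element texts waiting to be emitted as one chunk

    def flush() -> None:
        if not body:
            return
        text = "\n".join(body)
        header = " > ".join(path)
        chunks.append(f"{header}\n{text}" if header else text)
        body.clear()

    for el in elements:
        if el.kind == "heading":
            flush()                  # a new section always starts a new chunk
            del path[el.level - 1:]  # drop headings at this depth or deeper
            path.append(el.text)
        else:
            # Never split an element; close the chunk first if this one would
            # push the body text past max_chars.
            if body and sum(len(t) for t in body) + len(el.text) > max_chars:
                flush()
            body.append(el.text)
    flush()
    return chunks


if __name__ == "__main__":
    doc = [
        Element("heading", "Billing", level=1),
        Element("paragraph", "Invoices are due within 30 days."),
        Element("heading", "Late fees", level=2),
        Element("paragraph", "A 2% fee applies after 30 days."),
        Element("heading", "Support", level=1),
        Element("paragraph", "Contact the help desk."),
    ]
    for chunk in structure_aware_chunks(doc):
        print(chunk, end="\n---\n")
```

On the sample document, the second chunk comes out as `Billing > Late fees` followed by its paragraph, so a retriever that returns it in isolation still knows which section it came from. Prefixing the heading path is one common way to preserve context; other designs attach it as chunk metadata instead.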

Use Cases

Preprocess unlocks a wide range of possibilities for leveraging LLMs with structured and unstructured data:

- Knowledge Base Construction: Build robust and easily searchable knowledge bases by preprocessing your documents into optimized chunks for LLM integration.

- RAG Applications: Enhance the performance of your RAG systems by providing LLMs with contextually rich and relevant document segments.

- AI Chat Systems: Improve the accuracy and relevance of AI chatbot responses by feeding them preprocessed document chunks.

- Document Indexing: Optimize document indexing for faster and more accurate retrieval of information.

- Enterprise Search Optimization: Enhance enterprise search capabilities by preprocessing documents to improve the relevance and accuracy of search results.
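To illustrate how chunked output might feed the knowledge-base and document-indexing use cases, the sketch below builds a simple inverted index over chunk texts and ranks chunks by how many query terms they contain. It is a generic keyword-retrieval sketch and not part of Preprocess; production systems typically use BM25 scoring or vector embeddings instead, and the function names here are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(chunks: list[str]) -> dict[str, set[int]]:
    """Inverted index: map each term to the ids of the chunks containing it."""
    index: dict[str, set[int]] = defaultdict(set)
    for chunk_id, chunk in enumerate(chunks):
        for term in tokenize(chunk):
            index[term].add(chunk_id)
    return index


def search(index: dict[str, set[int]], query: str, k: int = 3) -> list[int]:
    """Return up to k chunk ids, ranked by how many distinct query terms they contain."""
    scores: dict[int, int] = defaultdict(int)
    for term in set(tokenize(query)):
        for chunk_id in index.get(term, ()):
            scores[chunk_id] += 1
    # Highest score first; ties broken by chunk id for a stable order.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))[:k]


if __name__ == "__main__":
    chunks = [
        "Billing\nInvoices are due within 30 days.",
        "Billing > Late fees\nA 2% fee applies after 30 days.",
        "Support\nContact the help desk.",
    ]
    index = build_index(chunks)
    print(search(index, "late fee"))  # → [1]
```

Because each chunk carries its heading path, a query such as "late fee" matches the section title as well as the body text, which is one way well-formed chunks make indexing more accurate.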

Pros & Cons

Like any tool, Preprocess has its strengths and weaknesses. Let’s break them down:

Advantages

- Enhances LLM Retrieval Quality: Preprocess improves the quality of the passages retrieved for an LLM, leading to more accurate and relevant responses.

- Maintains Semantic Integrity: The tool’s semantic chunking capabilities ensure that the meaning and context of your documents are preserved.

- Supports Diverse Formats: Preprocess handles a variety of common document formats, including PDFs and Office files.

Disadvantages

- May Require Integration Work: Integrating Preprocess into existing LLM pipelines may require some technical expertise.

- Limited to Preprocessing Scope: Preprocess focuses solely on document preprocessing and does not offer other LLM-related functionalities.

How Does It Compare?

Among the alternatives, Unstructured covers similar ground in document processing, but Preprocess places greater emphasis on semantic understanding. LangChain Tools is a broader toolkit for LLM development and is less specialized in document chunking. Preprocess stands out for its focused approach to optimizing document structure and semantics for RAG applications.
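For contrast, here is the kind of simple fixed-size splitting that semantic and structure-aware chunkers aim to improve on. It is a generic sketch, not the implementation used by LangChain or any other tool: it cuts text into windows of `size` characters that overlap by `overlap` characters, with no regard for word, sentence, or section boundaries. The parameter defaults are illustrative.

```python
def fixed_size_chunks(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into `size`-character windows, each overlapping the previous one by `overlap`.

    Boundaries ignore words, sentences, and headings entirely.
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    # A window starting at or after len(text) - overlap would lie entirely inside
    # the previous window, so the range stops there (but always yields one window).
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


if __name__ == "__main__":
    text = "Invoices are due within 30 days. A 2% fee applies after 30 days."
    print(fixed_size_chunks(text, size=20, overlap=5)[0])  # → Invoices are due wit
```

With `size=20` the very first window ends in the middle of the word "within", which is exactly the loss of context that boundary-aware chunking is designed to avoid.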

Final Thoughts

Preprocess offers a compelling solution for anyone looking to improve the performance of their RAG systems. Its focus on semantic understanding and structure-aware splitting sets it apart from more general-purpose tools. While integration may require some effort, the resulting improvements in LLM retrieval quality make Preprocess a valuable asset for knowledge base construction, AI chat systems, and enterprise search optimization. If you’re serious about maximizing the potential of your LLMs, Preprocess is definitely worth considering.