Overview

Qwen2.5-Max is Alibaba Cloud’s flagship large language model and a direct challenger to the leading proprietary models. It is built on a Mixture-of-Experts (MoE) architecture, pretrained on roughly 20 trillion tokens, and offered through the Alibaba Cloud API. Let’s look at what the model offers, where it performs well, and where it falls short.

Key Features

Qwen2.5-Max boasts a powerful set of features designed for optimal performance:

- Mixture-of-Experts (MoE) with 64 experts: A router sends each token to a small subset of the 64 expert networks instead of running all of them, which keeps the compute per token low without shrinking the model’s total capacity.

- Pretrained on 20T tokens: Pretraining on roughly 20 trillion tokens gives the model a broad base for understanding and generating text.

- Fine-tuned with SFT and RLHF: Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) refine the model’s responses so that they follow instructions more reliably and align better with human preferences.

- High benchmark scores: Qwen2.5-Max posts competitive results on widely used benchmarks such as Arena-Hard and LiveBench, which are covered in the comparison section.

- API access via Alibaba Cloud: Seamless integration with Alibaba Cloud services allows developers to easily incorporate Qwen2.5-Max into their applications.
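For readers who want to see what API access might look like, here is a minimal sketch that builds a chat request using only the Python standard library. It assumes the model is reachable through Alibaba Cloud’s OpenAI-compatible endpoint; the base URL, the model name `qwen-max-2025-01-25`, and the helper names `build_chat_request` and `ask` are assumptions for illustration, so check the current Alibaba Cloud documentation before relying on them.

```python
import json
import urllib.request

# Assumptions: an OpenAI-compatible endpoint and a model identifier.
# Verify both against the current Alibaba Cloud documentation.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"


def build_chat_request(prompt, api_key, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) a chat-completions request."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the text of the first reply."""
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Response shape follows the OpenAI chat-completions format (assumed).
    return body["choices"][0]["message"]["content"]
```

With a valid key from an Alibaba Cloud account, a call such as `ask("Summarize MoE in one sentence.", api_key)` would send the request. Keeping the request builder separate from the network call makes it easy to inspect the payload without spending API credits.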

How It Works

Qwen2.5-Max processes inputs with a Mixture-of-Experts (MoE) architecture. As in other MoE language models, experts are not chosen once per prompt. Instead, each token is routed individually: a learned router scores the 64 expert networks, activates only the few that score highest, and combines their outputs. Because most experts stay idle for any given token, the compute per token is far lower than the model’s total parameter count would suggest. Pretraining on 20 trillion tokens, followed by fine-tuning with SFT and RLHF, is what enables the model to deliver high-quality, contextually appropriate responses across a wide range of tasks.
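To make the routing idea concrete, here is a minimal sketch of top-k expert routing for a single token in plain Python. It is not Qwen2.5-Max’s actual implementation, which Alibaba has not published. The value of `TOP_K`, the toy hidden size, the toy experts, and every function name are assumptions chosen for illustration; only the count of 64 experts comes from this article.

```python
import math
import random

NUM_EXPERTS = 64  # expert count quoted in this article
TOP_K = 2         # assumption: experts activated per token
DIM = 4           # assumption: toy hidden size


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]


def moe_layer(token, router_weights, experts, top_k):
    """Run only the selected experts and mix their outputs by router weight."""
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    routes = route_token(logits, top_k)
    output = [0.0] * len(token)
    for expert_index, weight in routes:
        expert_output = experts[expert_index](token)
        output = [o + weight * e for o, e in zip(output, expert_output)]
    return output, routes


# Toy setup: random router weights; expert i simply scales its input by i + 1.
rng = random.Random(0)
router_weights = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [lambda v, scale=i + 1: [scale * x for x in v] for i in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, routes = moe_layer(token, router_weights, experts, TOP_K)
print("active experts:", [i for i, _ in routes])
print("fraction of experts used:", TOP_K / NUM_EXPERTS)
```

Under these assumed settings, only 2 of the 64 experts run for the token, about 3% of the expert networks. That is the source of the efficiency gain described above. Real MoE models typically apply this routing inside each MoE layer and add load-balancing terms during training, which this sketch omits.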

Use Cases

Qwen2.5-Max’s versatility makes it suitable for a wide array of applications:

- Natural language processing: From text summarization to sentiment analysis, Qwen2.5-Max excels at understanding and manipulating human language.

- Code generation: The model can generate code in various programming languages, assisting developers in automating tasks and accelerating software development.

- Math problem-solving: Qwen2.5-Max can tackle complex mathematical problems, making it a valuable tool for education and research.

- Translation: Accurate and fluent translation between multiple languages opens up new possibilities for global communication and collaboration.

- Enterprise AI applications: Businesses can leverage Qwen2.5-Max to enhance customer service, automate workflows, and gain valuable insights from data.

Pros & Cons

Like any powerful tool, Qwen2.5-Max has its strengths and weaknesses. Let’s break them down:

Advantages

- Strong benchmark performance: Consistently outperforms or matches competitors in leading AI benchmarks.

- Efficient MoE processing: The Mixture-of-Experts architecture allows for efficient computation and scalability.

- Extensive training: The model’s vast training dataset ensures a deep understanding of language and concepts.

- API available: Easy integration with applications through the Alibaba Cloud API.

Disadvantages

- Not open-source: The model’s weights and code are not publicly released, so it cannot be self-hosted or customized beyond what the API allows.

- Requires Alibaba Cloud account: Access to Qwen2.5-Max is contingent on having an Alibaba Cloud account.

How Does It Compare?

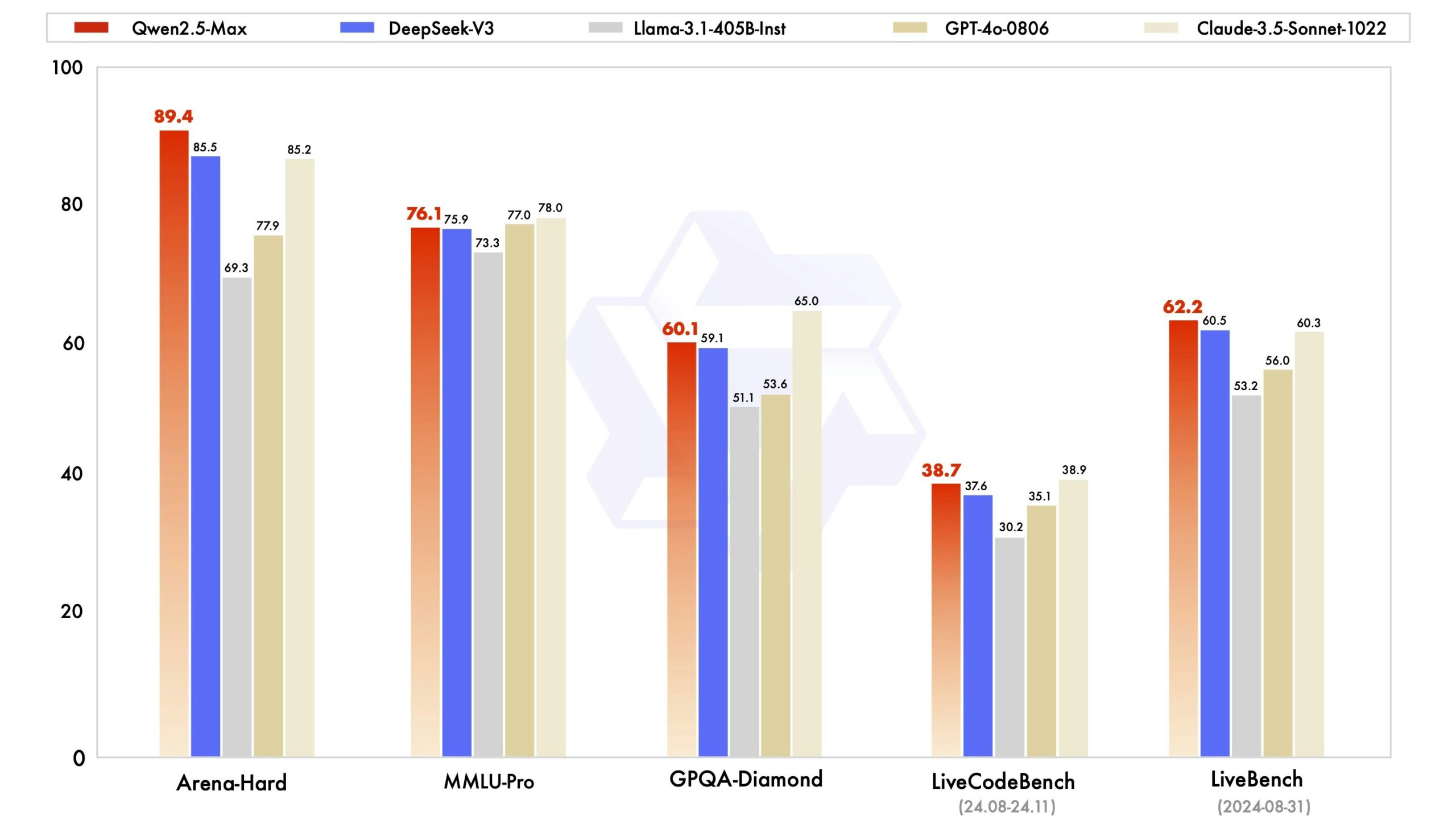

Qwen2.5-Max is positioned to compete with the leading large language models on the market. Here’s how it stacks up against some key competitors:

- DeepSeek V3: According to Alibaba’s published results, Qwen2.5-Max outperforms DeepSeek V3 on the Arena-Hard and LiveBench benchmarks.

- GPT-4o: Performance is comparable, with Qwen2.5-Max showing slight advantages in some benchmarks.

- Claude 3.5 Sonnet: Similar performance, with Qwen2.5-Max demonstrating benchmark advantages in certain areas.

Final Thoughts

Qwen2.5-Max represents a significant step forward in the evolution of large language models. Its impressive performance, efficient architecture, and wide range of use cases make it a compelling option for developers and businesses seeking to leverage the power of AI. While the lack of open-source access and reliance on Alibaba Cloud may be drawbacks for some, the model’s capabilities are undeniable. As the AI landscape continues to evolve, Qwen2.5-Max is certainly a model to watch.