Overview

New large language models (LLMs) appear constantly, each vying for a spot in the toolkit of developers, researchers, and businesses. Enter Qwen3, Alibaba’s latest offering to the open-source community. This suite of models, ranging from compact to very large, includes a switchable “Thinking Mode” that lets users trade reasoning depth against speed. Let’s dive into what makes Qwen3 a contender in the LLM arena.

Key Features

Qwen3 is packed with features that make it a compelling option for various AI applications:

- Open-weight LLMs: Qwen3 models are released with open weights, allowing for transparency, customization, and community-driven development.

- Parameter Range from 0.6B to 235B: This wide range of model sizes lets users pick a model that fits their computational resources and performance needs, from small models that run on modest hardware to the 235B flagship.

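- Mixture-of-Experts (MoE) Architecture: In the larger models, a router activates only a small subset of expert sub-networks for each token, so only a fraction of the total parameters is used per forward pass. This lowers the compute cost per token relative to a dense model of the same total size.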

- Switchable Thinking Mode: Users can switch between a thinking mode, in which the model reasons step by step before answering, and a faster non-thinking mode that answers directly. Choose the faster mode for quick tasks and the more thorough mode for complex problem-solving.

- Multilingual: Qwen3 is optimized for handling multiple languages, making it suitable for global applications and diverse user bases.

- Strong in Code and Math Tasks: Qwen3 demonstrates strong performance in coding and mathematical reasoning, making it a valuable tool for developers and researchers in these fields.
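To make the Mixture-of-Experts idea from the list above more concrete, here is a minimal sketch of generic top-k expert routing for a single token. It is not Qwen3's actual router: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical and exist only for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts.

    Weights are softmax probabilities renormalized over the selected experts.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return [(i, probs[i] / mass) for i in top]

def moe_forward(x, experts, router_logits, k=2):
    """Combine the outputs of only the selected experts for one token."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
router_logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

selected = route_top_k(router_logits, k=2)
output = moe_forward(3.0, experts, router_logits, k=2)
print("selected experts:", selected)
print("combined output:", output)
```

Only two of the four toy experts run for this token; the other two are skipped entirely, which is where the efficiency gain of an MoE layer comes from.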

How It Works

Qwen3 models are released via open repositories, making them readily accessible to the AI community. At inference time, users can switch between strategies to prioritize either speed or reasoning depth. Users can also download the pretrained models and fine-tune them for specific tasks on their own datasets, and because the weights are open, the underlying architecture itself can be inspected and modified.
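The switch between inference strategies can be sketched in a few lines. This example assumes two conventions that the article itself does not spell out and that should be checked against the official Qwen3 documentation: a per-turn `/think` or `/no_think` marker appended to the user prompt, and reasoning text wrapped in `<think>...</think>` tags in the model output. The helper names `build_messages` and `split_thinking` are hypothetical, and the output strings are made up rather than real model responses.

```python
def build_messages(question, thinking=True):
    """Build a chat-style message list with a per-turn thinking switch appended."""
    switch = "/think" if thinking else "/no_think"  # assumed soft-switch markers
    return [{"role": "user", "content": f"{question} {switch}"}]

def split_thinking(text, open_tag="<think>", close_tag="</think>"):
    """Split raw model output into (reasoning, answer).

    Returns an empty reasoning string when no complete think block is present.
    """
    start = text.find(open_tag)
    end = text.find(close_tag)
    if start == -1 or end == -1 or end < start:
        return "", text.strip()
    reasoning = text[start + len(open_tag):end].strip()
    answer = (text[:start] + text[end + len(close_tag):]).strip()
    return reasoning, answer

# Made-up output strings for illustration; not real model responses.
slow = "<think>17 * 3 = 51, then 51 + 4 = 55.</think>\n\nThe result is 55."
fast = "The result is 55."

messages = build_messages("What is 17 * 3 + 4?", thinking=False)
reasoning, answer = split_thinking(slow)
no_reasoning, same_answer = split_thinking(fast)
print(messages)
print(repr(reasoning), "->", repr(answer))
```

Separating the reasoning from the final answer this way lets an application log or hide the intermediate steps while showing users only the answer.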

Use Cases

Qwen3’s capabilities lend themselves to a wide range of applications:

- Natural Language Understanding: Analyze and interpret text for sentiment analysis, topic extraction, and more.

- Code Generation: Automate the creation of code snippets and complete programs, boosting developer productivity.

- Mathematical Reasoning: Solve complex mathematical problems and perform calculations with high accuracy.

- Multilingual Applications: Translate languages, generate content in multiple languages, and build multilingual chatbots.

- Research in AI Model Efficiency: Explore new techniques for improving the efficiency and performance of large language models.

Pros & Cons

Like any tool, Qwen3 has its strengths and weaknesses. Let’s break them down:

Advantages

- Open-weight and Adaptable: The open-weight nature allows for customization, fine-tuning, and community contributions.

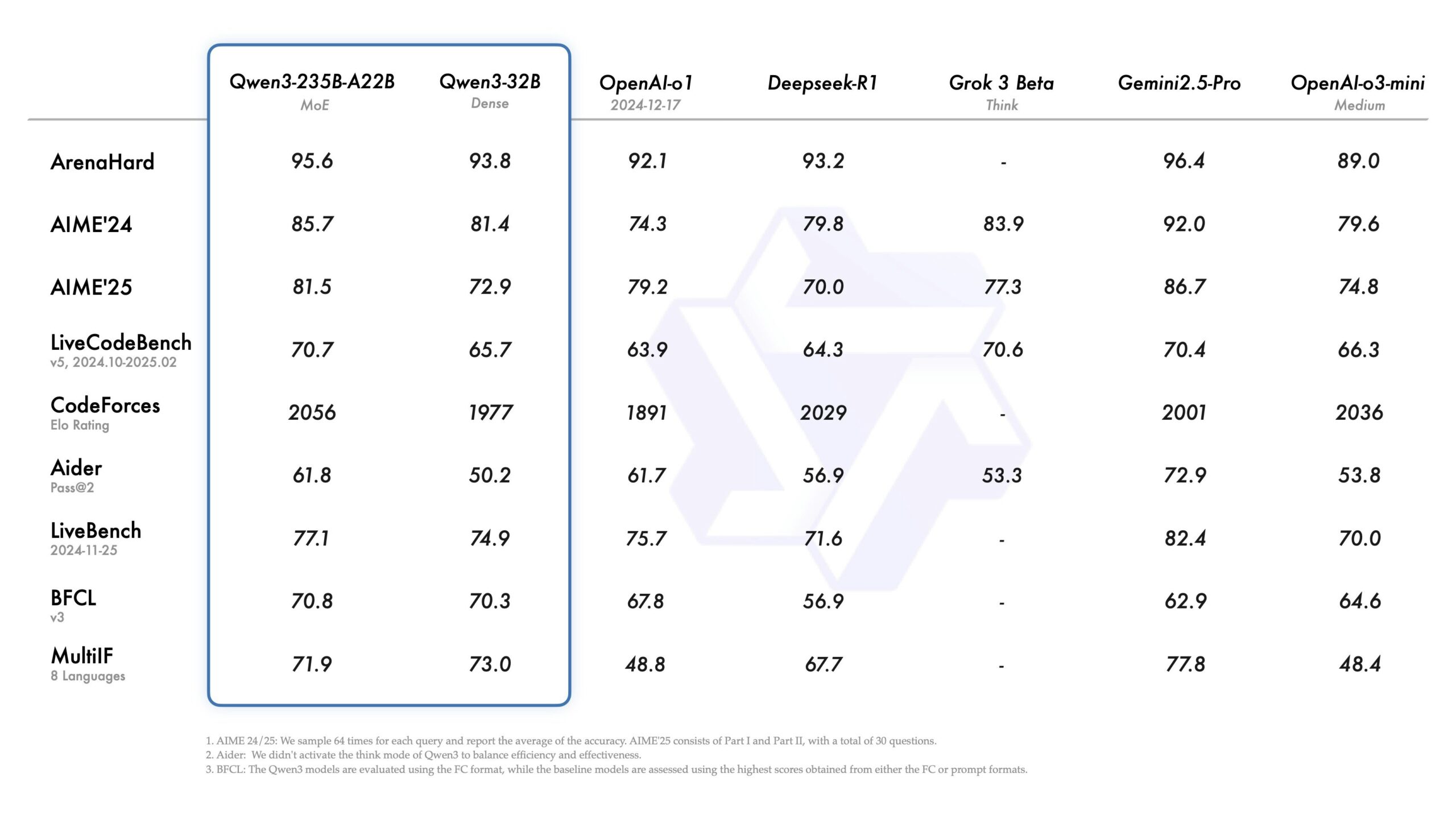

- Competitive Performance in Benchmarks: Qwen3 demonstrates strong performance across various benchmarks, indicating its capabilities in different tasks.

- Flexible Reasoning Modes: The switchable “Thinking Mode” allows users to optimize for speed or accuracy based on the specific requirements of the task.

Disadvantages

- Requires Significant Compute Resources: The larger Qwen3 models require substantial computational power for training and inference.

- Newer Model with a Growing Ecosystem: As a relatively new model, the ecosystem and community support around Qwen3 are still developing compared to more established models.
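A rough back-of-the-envelope estimate shows why the larger models are demanding. The sketch below computes the memory needed for the weights alone as parameter count times bytes per parameter. It ignores activations, the KV cache, and framework overhead, so real requirements are higher. The precision table and the 1B comparison size are illustrative assumptions; only the 235B figure comes from the article.

```python
# Bytes needed to store one parameter at common precisions (illustrative table).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params_billion, precision="bf16"):
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return num_params_billion * BYTES_PER_PARAM[precision]

# 1.0 is a round illustrative size; 235.0 is the largest size mentioned above.
sizes_billion = [1.0, 235.0]
estimates = {
    (size, precision): weight_memory_gb(size, precision)
    for size in sizes_billion
    for precision in ("bf16", "int4")
}
for (size, precision), gb in estimates.items():
    print(f"{size:>6}B params @ {precision}: ~{gb:.1f} GB of weights")
```

Note that an MoE model still has to hold all of its experts in memory, even though only a few are active per token, so the total parameter count is what drives this estimate.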

How Does It Compare?

When considering Qwen3, it’s helpful to compare it to other similar models.

- LLaMA 3: Meta’s LLaMA 3 is another prominent open-weight model family. Both aim to provide accessible and customizable LLMs, but they differ in architecture and training methodology. For example, the LLaMA 3 models are dense, while the Qwen3 family also includes MoE models.

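- GPT-NeoX: EleutherAI’s GPT-NeoX shares a similar commitment to openness, but it dates from 2022 and its published benchmarks are older than Qwen3’s. The results are therefore not directly comparable, though the age difference suggests a performance gap in Qwen3’s favor.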

Final Thoughts

Qwen3 presents a compelling option for those seeking an open-weight, adaptable, and high-performing large language model. Its switchable “Thinking Mode” and strong performance in coding and math make it a versatile tool for a variety of applications. While it requires significant compute resources and its ecosystem is still growing, Qwen3 is definitely worth considering for your next AI project.