
Rippletide Eval CLI

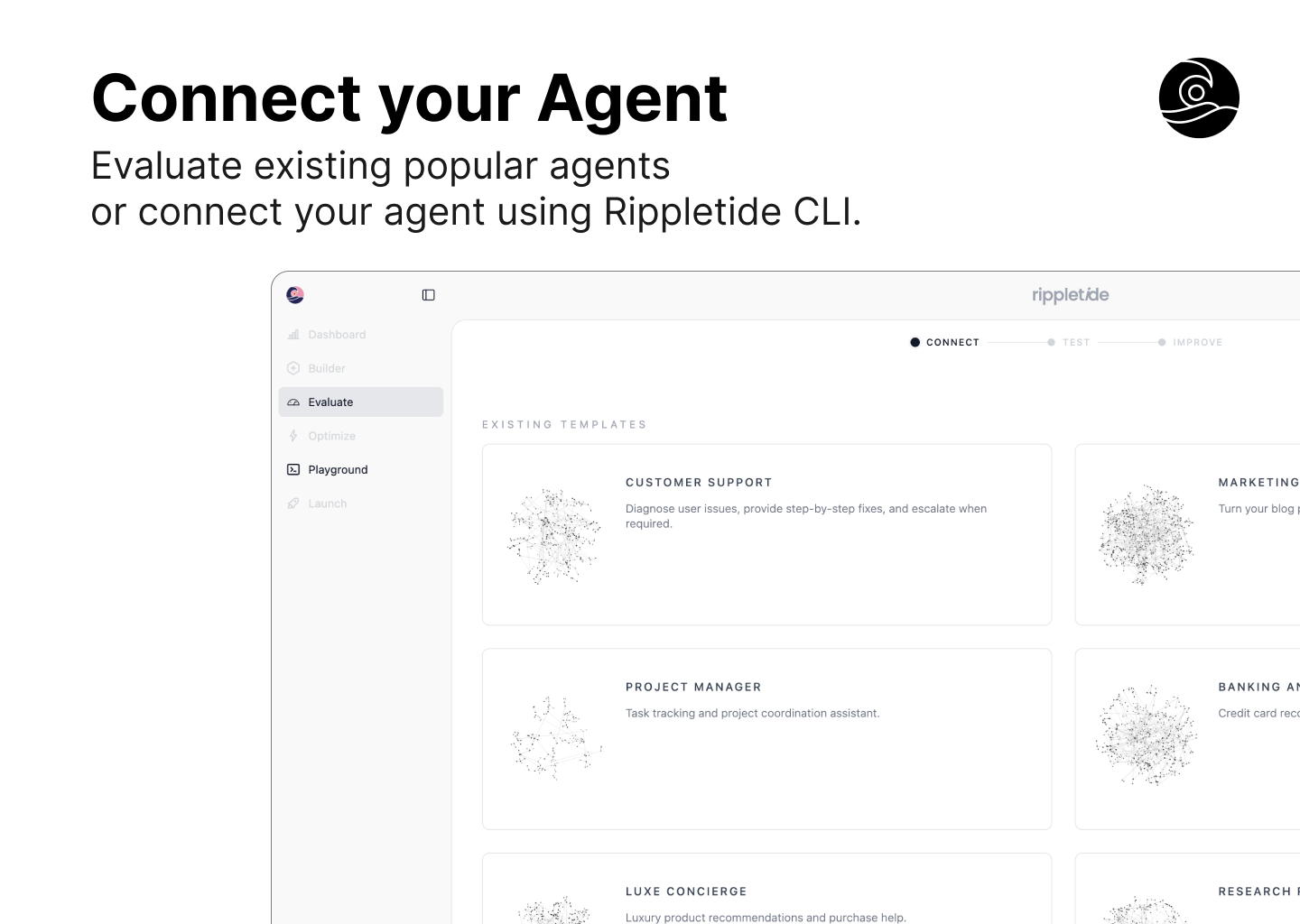

Rippletide Eval CLI is a developer-focused command-line tool for benchmarking AI agents before they reach production. Launched in January 2026, it addresses the gap between deploying an LLM-powered agent and knowing whether that agent produces accurate, grounded responses. By generating test questions automatically from the agent’s own data sources and reporting quantitative hallucination metrics, it turns agent evaluation from a subjective “does this feel right?” check into a measurable, repeatable workflow.

Core Features

- Auto-Generated Test Questions: Automatically creates evaluation datasets by analyzing the agent’s connected data sources (databases, vector stores, APIs), eliminating the need to manually write hundreds of test cases.

- Hallucination Detection with KPIs: Uses a knowledge graph approach to verify each answer against authoritative facts, flagging responses that lack proper grounding or introduce unsupported information.

- Real-Time Terminal Feedback: Displays live progress as tests run, showing pass/fail status and accuracy scores immediately, making debugging fast and interactive.

- Exportable Detailed Reports: Generates structured reports that can be shared with stakeholders or integrated into continuous integration pipelines for regression testing.

- Multi-Source Data Integration: Supports PostgreSQL, Pinecone, and custom APIs out of the box, allowing the CLI to build a verification graph from your actual production data.
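This overview does not describe how Rippletide generates its questions, so the following is only a minimal, hypothetical sketch of the idea behind auto-generated questions and multi-source integration. It flattens rows from a source (here an in-memory stand-in for a PostgreSQL table) into (subject, predicate, object) facts, then templates one question per fact, with the fact’s object as the expected answer. `Fact`, `rows_to_facts`, and `generate_questions` are illustrative names, not part of the CLI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One edge of a toy verification graph: (subject, predicate, object).

    Hypothetical structure for illustration; not Rippletide's internal format.
    """
    subject: str
    predicate: str
    obj: str


def rows_to_facts(rows: list[dict[str, str]], key: str) -> set[Fact]:
    """Turn table rows into facts, using the `key` column as each row's subject."""
    facts: set[Fact] = set()
    for row in rows:
        subject = row[key]
        for column, value in row.items():
            if column != key:
                facts.add(Fact(subject, column, value))
    return facts


def generate_questions(facts: set[Fact]) -> list[tuple[str, str]]:
    """Template one (question, expected_answer) pair per fact, in a stable order."""
    ordered = sorted(facts, key=lambda f: (f.subject, f.predicate))
    return [(f"What is the {f.predicate} of {f.subject}?", f.obj) for f in ordered]


# In-memory stand-in for rows fetched from, e.g., a PostgreSQL table.
rows = [
    {"product": "Widget A", "price": "19.99", "stock": "42"},
    {"product": "Widget B", "price": "5.00", "stock": "0"},
]

for question, expected in generate_questions(rows_to_facts(rows, key="product")):
    print(f"{question} -> {expected}")
```

Because every question comes from a stored fact, each one has a known ground-truth answer. That property is what makes automated grading possible without hand-written test cases.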

How It Works

Developers point the CLI at their agent’s API endpoint and specify the underlying data sources the agent should “know” (such as a Postgres database or a Pinecone index). Rippletide then generates a set of questions designed to test whether the agent can accurately retrieve and reason over that data. As the agent responds, the CLI cross-references each answer against the verified knowledge graph to detect hallucinations, incomplete responses, or logic errors.
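The cross-referencing loop described above can be sketched as follows. This illustration rests on assumptions and is not Rippletide’s implementation. `fake_agent` stands in for an HTTP call to the agent’s endpoint, and a simple token match against the expected fact replaces the knowledge graph check. The loop labels each answer as grounded, incomplete (the agent abstains), or unsupported (it asserts something the facts do not back), prints a live pass/fail line, and returns accuracy and hallucination-rate KPIs.

```python
import re
from typing import Callable

# Numbers (including decimals) or runs of lowercase letters.
TOKEN = re.compile(r"\d+(?:\.\d+)?|[a-z]+")


def tokens(text: str) -> set[str]:
    return set(TOKEN.findall(text.lower()))


def classify(answer: str, expected: str) -> str:
    """Crude stand-in for a knowledge-graph check (an assumption, not Rippletide's method)."""
    if tokens(expected) <= tokens(answer):
        return "grounded"      # every token of the known fact appears in the answer
    if "don't know" in answer.lower():
        return "incomplete"    # the agent abstained instead of answering
    return "unsupported"       # the answer asserts something the facts do not back


def run_eval(agent: Callable[[str], str], cases: list[tuple[str, str]]) -> dict[str, float]:
    """Ask every question, print live pass/fail lines, and return summary KPIs."""
    counts = {"grounded": 0, "incomplete": 0, "unsupported": 0}
    for question, expected in cases:
        label = classify(agent(question), expected)
        counts[label] += 1
        status = "PASS" if label == "grounded" else "FAIL"
        print(f"{status} [{label}] {question}")
    total = max(len(cases), 1)
    return {
        "accuracy": counts["grounded"] / total,
        "hallucination_rate": counts["unsupported"] / total,
    }


def fake_agent(question: str) -> str:
    """Hypothetical stand-in for an HTTP call to the agent's endpoint."""
    canned = {
        "What is the price of Widget A?": "Widget A costs 19.99.",
        "What is the stock of Widget B?": "Widget B has 12 units in stock.",
        "What is the price of Widget B?": "I don't know.",
    }
    return canned.get(question, "")


cases = [
    ("What is the price of Widget A?", "19.99"),
    ("What is the stock of Widget B?", "0"),
    ("What is the price of Widget B?", "5.00"),
]
print(run_eval(fake_agent, cases))
```

Separating abstentions from unsupported claims matters for the metrics. An agent that says “I don’t know” is incomplete but not hallucinating, while one that confidently reports the wrong stock level is.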

Best Use Cases

- Pre-Deployment Testing: Running a final quality check on RAG pipelines before exposing them to end users, ensuring they don’t confidently state incorrect facts.

- CI/CD Integration: Embedding automated agent tests into GitHub Actions or GitLab CI to catch regressions when updating prompts, models, or data sources.

- Debugging Production Incidents: Quickly identifying which types of questions cause an agent to hallucinate by running localized tests against specific knowledge subsets.

- Model Comparison: Benchmarking the same task across different model providers (GPT-4 vs. Claude vs. Llama) to choose the most accurate configuration.
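For the CI/CD use case, a quality gate usually comes down to a script that fails the build when a metric crosses a threshold. The sketch below assumes an exported JSON report with top-level `accuracy` and `hallucination_rate` fields. That schema, the thresholds, and the file name `quality_gate.py` are hypothetical, so adapt them to the report format the CLI actually produces.

```python
import json
import sys
from pathlib import Path

# Hypothetical thresholds; tune them to your own quality bar.
MAX_HALLUCINATION_RATE = 0.05
MIN_ACCURACY = 0.90


def evaluate(report: dict, max_halluc: float = MAX_HALLUCINATION_RATE,
             min_acc: float = MIN_ACCURACY) -> list[str]:
    """Return human-readable failures; an empty list means the gate passes.

    ASSUMPTION: `report` has top-level "accuracy" and "hallucination_rate"
    fields expressed as fractions in [0, 1].
    """
    failures = []
    if report["hallucination_rate"] > max_halluc:
        failures.append(f"hallucination_rate {report['hallucination_rate']:.1%} > {max_halluc:.1%}")
    if report["accuracy"] < min_acc:
        failures.append(f"accuracy {report['accuracy']:.1%} < {min_acc:.1%}")
    return failures


def gate(report_path: Path) -> int:
    """Load a JSON report, print the verdict, and return a process exit code."""
    failures = evaluate(json.loads(report_path.read_text()))
    for failure in failures:
        print(f"FAIL: {failure}")
    if not failures:
        print("Quality gate passed.")
    return 1 if failures else 0


# Only run the gate when a report path is passed on the command line.
if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(gate(Path(sys.argv[1])))
```

In a GitHub Actions or GitLab CI job, a step would run the evaluation and then `python quality_gate.py report.json`. A non-zero exit code fails the job, which catches regressions from prompt, model, or data changes before merge.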

Pros and Cons

- Pros: A native terminal experience that many engineers prefer; quantitative hallucination metrics make it easier to set quality gates; reproducible test sets allow consistent evaluation over time; support for localhost endpoints lets teams test agents without exposing them publicly.

- Cons: Currently lacks historical trend tracking (side-by-side comparison over weeks/months); CLI-first design is less accessible for non-technical stakeholders who prefer dashboards; requires initial setup to connect data sources.
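Until the CLI offers historical trend tracking, one workaround is to keep your own history. Append each run’s summary metrics to a JSON Lines file, then compute run-over-run changes. The file layout and the `record_run`, `load_history`, and `trend` helpers below are hypothetical and independent of the CLI.

```python
import json
from pathlib import Path


def record_run(history: Path, run_date: str, kpis: dict[str, float]) -> None:
    """Append one run's summary metrics as a line of JSON (JSON Lines format)."""
    with history.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"date": run_date, **kpis}) + "\n")


def load_history(history: Path) -> list[dict]:
    """Read every recorded run, oldest first; a missing file means no history."""
    if not history.exists():
        return []
    lines = history.read_text(encoding="utf-8").splitlines()
    return [json.loads(line) for line in lines if line.strip()]


def trend(runs: list[dict], metric: str) -> list[tuple[str, float, float]]:
    """Return (date, value, change since the previous run) for one metric."""
    result = []
    previous = None
    for run in runs:
        value = run[metric]
        change = 0.0 if previous is None else value - previous
        result.append((run["date"], value, change))
        previous = value
    return result
```

For example, a nightly job could call `record_run(Path("eval_history.jsonl"), run_date, kpis)` after each evaluation. It could then print `trend(load_history(Path("eval_history.jsonl")), "hallucination_rate")` to show whether a prompt or model change moved the metric.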

Pricing

Free (at Launch): Rippletide Eval CLI launched with free access to encourage adoption among developer teams. Long-term pricing for advanced features or enterprise support has not been publicly detailed as of January 2026.

How Does It Compare?

- DeepEval: A Pytest-style Python testing framework that offers comprehensive RAG metrics and CI/CD integration. Rippletide differentiates itself with an interactive CLI experience that does not require writing Python test files.

- RAGAS: An open-source evaluation library focused on RAG-specific metrics (context precision, faithfulness). RAGAS is lighter for experimentation, while Rippletide adds the “live testing” workflow and knowledge graph verification.

- LangSmith: LangChain’s platform for tracing, debugging, and evaluation, most tightly integrated with LangChain-based applications. Rippletide is framework-agnostic and focuses purely on the evaluation step rather than full observability.

- Arize Phoenix: An open-source monitoring platform with agent evaluation capabilities. Phoenix emphasizes long-term production monitoring, while Rippletide focuses on fast, iterative pre-deployment testing.

- Maxim AI: A comprehensive platform for agent evaluation with session-level tracking and convergence analysis. Maxim is better for enterprise teams needing dashboards, while Rippletide serves engineers who prefer terminal-native tools.

Final Thoughts

Rippletide Eval CLI fills a crucial niche for teams that value speed and developer ergonomics. In an era where “agents” are moving from demos to customer-facing products, having a lightweight, reproducible way to catch hallucinations before deployment is essential. While it may not replace full observability platforms for production monitoring, it excels as a rapid iteration tool that keeps AI quality checks as simple as running a test suite.