Overview

As Large Language Model (LLM) applications move into production, teams need reliable ways to monitor their reliability, transparency, and performance. Siloam AI is an observability and monitoring platform built specifically for LLM usage, and it is currently in early alpha. Launched on Product Hunt in July 2025, it aims to provide real-time visibility into LLM behavior, with particular emphasis on detecting anomalies and potential hallucinations before they reach end users.

Key Features

Siloam AI is developing a focused set of observability tools specifically designed for LLM applications and workflows.

- Live request monitoring: Provides real-time visibility into every API call as it happens, offering complete transparency into LLM traffic without delays or sampling limitations.

- Automated response analysis: Automatically flags suspicious responses, inconsistencies, and other potential issues before they reach users.

- Anomaly detection: Currently in active development, this feature is intended to automatically identify unusual or unexpected behavior patterns in LLM outputs.

- Hallucination detection: A crucial feature under development specifically designed to identify instances where LLMs generate factually incorrect, nonsensical, or unreliable information.

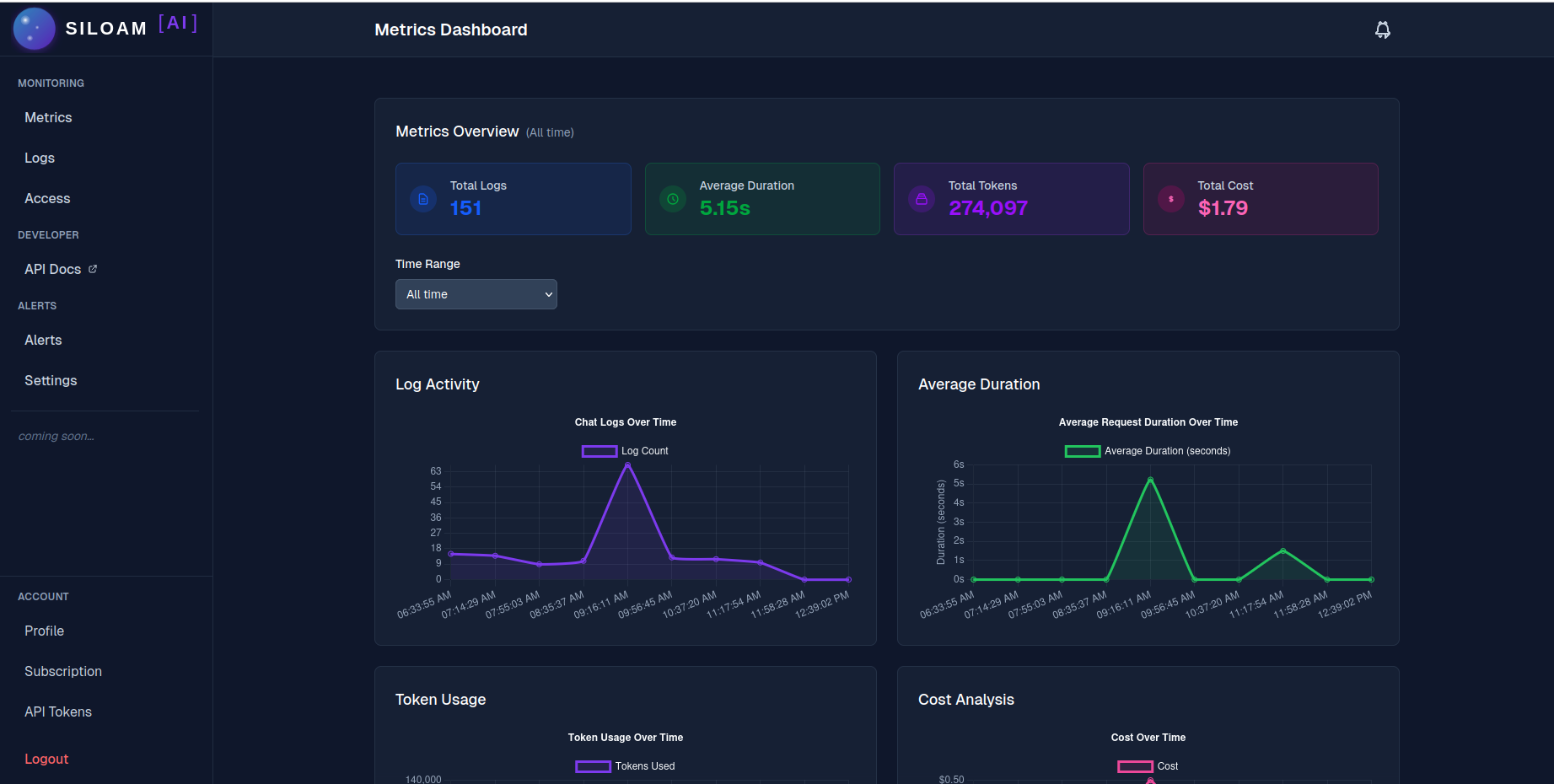

- Usage analytics: Comprehensive analytics and reporting tools that help understand patterns and trends in LLM interactions, enabling data-driven optimization of resource allocation and performance.

- Advanced search and filtering: Powerful search capabilities that enable filtering through millions of requests in milliseconds, with options to filter by model, user, error type, or custom tags.

- Scalable architecture: Designed to accommodate teams of all sizes, from small startups to large enterprises, with flexible scaling options.
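To make the search and filtering feature concrete, here is a minimal Python sketch of filtering logged requests by model, user, error type, and custom tags. It is an illustration only, not Siloam AI's API: the function `filter_requests` and the record keys (`model`, `user`, `error_type`, `tags`) are hypothetical names chosen for this example.

```python
from typing import Iterable, Optional


def filter_requests(
    records: Iterable[dict],
    model: Optional[str] = None,
    user: Optional[str] = None,
    error_type: Optional[str] = None,
    tags: Optional[Iterable[str]] = None,
) -> list:
    """Return the records that match every filter that was supplied.

    A filter left as None is ignored. Record keys are hypothetical.
    """
    required_tags = set(tags or [])
    matches = []
    for record in records:
        if model is not None and record.get("model") != model:
            continue
        if user is not None and record.get("user") != user:
            continue
        if error_type is not None and record.get("error_type") != error_type:
            continue
        # Every requested tag must be present on the record.
        if not required_tags.issubset(record.get("tags", [])):
            continue
        matches.append(record)
    return matches


# Hypothetical sample data for illustration.
records = [
    {"model": "model-a", "user": "alice", "error_type": None, "tags": ["prod", "checkout"]},
    {"model": "model-a", "user": "bob", "error_type": "timeout", "tags": ["prod"]},
    {"model": "model-b", "user": "alice", "error_type": None, "tags": ["staging"]},
]

timeouts = filter_requests(records, error_type="timeout")
alice_in_prod = filter_requests(records, user="alice", tags=["prod"])
```

This linear scan only shows the filtering logic. Searching millions of requests in milliseconds, as the feature description claims, would presumably rely on indexed storage rather than a loop like this one.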

How It Works

Siloam AI integrates into existing LLM workflows through API-based logging and monitoring. The platform captures data about LLM requests and responses in real time, including model information, input prompts, generated outputs, session identifiers, token usage, and associated costs. Automated analysis systems then evaluate the responses for quality, consistency, and potential issues. Dashboards and search interfaces let teams identify trends, spot anomalies, and investigate specific interactions.
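The captured fields can be pictured as one log record per request. The Python sketch below is a hypothetical illustration, not Siloam AI's actual schema or client: `LLMLogRecord`, `log_call`, the whitespace-based token count, and the single `price_per_1k_tokens` value are all assumptions made for the example.

```python
import uuid
from dataclasses import asdict, dataclass, field
from typing import Callable


@dataclass
class LLMLogRecord:
    """Hypothetical record mirroring the fields described in the text."""
    model: str
    prompt: str
    output: str
    session_id: str
    prompt_tokens: int
    completion_tokens: int
    cost_usd: float
    request_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def log_call(
    model: str,
    prompt: str,
    session_id: str,
    call_model: Callable[[str], str],
    price_per_1k_tokens: float,
    sink: list,
) -> LLMLogRecord:
    """Call the model, build a log record, and append it to `sink`.

    `sink` stands in for whatever logging endpoint a real platform exposes.
    """
    output = call_model(prompt)
    # Assumption: whitespace splitting stands in for a real tokenizer.
    prompt_tokens = len(prompt.split())
    completion_tokens = len(output.split())
    cost_usd = (prompt_tokens + completion_tokens) / 1000 * price_per_1k_tokens
    record = LLMLogRecord(
        model=model,
        prompt=prompt,
        output=output,
        session_id=session_id,
        prompt_tokens=prompt_tokens,
        completion_tokens=completion_tokens,
        cost_usd=cost_usd,
    )
    sink.append(asdict(record))
    return record


# Usage with a stub model so the example runs without any provider.
sink = []
record = log_call(
    model="demo-model",
    prompt="hello world",
    session_id="session-1",
    call_model=lambda p: "hi there friend",
    price_per_1k_tokens=2.0,
    sink=sink,
)
```

In practice, token counts and costs would usually come from the model provider's response metadata rather than being estimated locally.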

Use Cases

Siloam AI addresses several critical challenges in LLM application development and production management.

- Production LLM monitoring: Ensures consistent reliability and accuracy of LLM outputs in live production environments, providing early warning systems for performance degradation or quality issues.

- Quality assurance and testing: Enables systematic evaluation of LLM responses during development and testing phases, helping teams identify and address potential problems before deployment.

- Cost optimization and resource management: Provides detailed insights into token usage, API costs, and performance metrics, enabling teams to optimize resource allocation and manage operational expenses.

- Compliance and audit support: Maintains comprehensive logs and analysis of LLM interactions to support regulatory compliance, audit requirements, and responsible AI governance practices.

- Debugging and troubleshooting: Offers detailed tracing and analysis capabilities that help developers quickly identify and resolve issues in complex LLM applications and workflows.
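For the cost optimization use case, the underlying arithmetic is simple: multiply token counts by per-token prices and total the result per model. The Python sketch below assumes hypothetical model names, hypothetical prices per 1,000 tokens, and hypothetical record keys. None of these come from Siloam AI or any real provider.

```python
from collections import defaultdict

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICES = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.20},
}


def cost_by_model(records: list, prices: dict) -> dict:
    """Sum the estimated cost of each logged request, grouped by model."""
    totals = defaultdict(float)
    for record in records:
        price = prices[record["model"]]
        totals[record["model"]] += (
            record["prompt_tokens"] / 1000 * price["input"]
            + record["completion_tokens"] / 1000 * price["output"]
        )
    return dict(totals)


# Hypothetical usage log.
usage = [
    {"model": "model-a", "prompt_tokens": 1000, "completion_tokens": 2000},
    {"model": "model-a", "prompt_tokens": 2000, "completion_tokens": 0},
    {"model": "model-b", "prompt_tokens": 500, "completion_tokens": 500},
]

totals = cost_by_model(usage, PRICES)
most_expensive = max(totals, key=totals.get)
```

A per-model breakdown like this is one way a team could decide which workloads to move to a cheaper model.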

Pros & Cons

As an early-stage platform, Siloam AI presents both promising capabilities and inherent limitations associated with its development status.

Advantages

- LLM-specific focus: Purpose-built for Large Language Model observability, with features aimed at LLM-specific challenges and requirements.

- Real-time monitoring capabilities: Provides immediate visibility into LLM performance and behavior without sampling or delays, enabling rapid response to issues.

- Planned anomaly detection: Automated systems, still under development, designed to identify various types of issues including hallucinations, inconsistencies, and unexpected behaviors.

- Scalable pricing model: Designed to be accessible and affordable for teams of all sizes, with transparent pricing structure and no hidden fees.

- Active development and feedback integration: Early alpha status allows for direct user feedback incorporation and rapid feature development based on real-world needs.

Disadvantages

- Early development stage: Being in early alpha means some core features are still under development and may not be fully mature or stable for critical production use.

- Limited feature completeness: While promising, the full suite of planned features is not yet available, potentially limiting immediate utility for complex use cases.

- Minimal documentation and resources: As a new platform, comprehensive documentation, tutorials, and community resources may be limited during the early adoption phase.

- Unproven production stability: Lack of extensive real-world testing and user feedback means long-term reliability and performance characteristics remain to be established.

How Does It Compare?

The LLM observability market has evolved rapidly, with Siloam AI entering a competitive landscape that includes both established platforms and specialized solutions. Arthur AI represents the enterprise-focused segment, offering comprehensive AI management capabilities including Arthur Shield firewall protection, extensive model monitoring, and enterprise-grade security features. Founded in 2018, Arthur provides a mature platform with proven scalability, making it suitable for large organizations with complex AI deployments.

Arize Phoenix takes an open-source approach, focusing primarily on experimentation and development stages of LLM applications. Phoenix excels in embedding analysis, RAG evaluation, and provides strong support for research and development workflows. Its open-source nature and integration with the broader Arize ecosystem make it particularly attractive for teams already invested in MLOps practices.

LangSmith, developed by the creators of LangChain, offers deep integration with the LangChain ecosystem and provides comprehensive tracing capabilities. Its commercial offering includes advanced features for production monitoring, though it delivers the most benefit to teams that already use LangChain. Langfuse provides another open-source alternative with strong prompt management capabilities and production-ready features, while Helicone focuses on proxy-based monitoring with simple integration requirements.

Portkey and TruLens round out the competitive landscape, with Portkey offering LLM gateway functionality combined with observability, and TruLens specializing in qualitative analysis and feedback mechanisms for LLM evaluation.

Siloam AI differentiates itself through its early alpha accessibility, allowing users to influence development direction, and its specific focus on real-time monitoring with planned advanced anomaly and hallucination detection capabilities. While it lacks the maturity and feature completeness of established players, its development approach and specialized focus position it as a potentially valuable alternative for teams seeking lightweight, focused LLM observability solutions.

Final Thoughts

Siloam AI represents an emerging solution in the critical and rapidly evolving LLM observability space. Its early alpha status, while presenting certain limitations, offers the unique opportunity for early adopters to shape the platform’s development and gain access to specialized LLM monitoring capabilities. The platform’s focus on real-time monitoring, automated anomaly detection, and hallucination identification addresses fundamental challenges that organizations face when deploying LLM applications in production environments.

The success of Siloam AI will largely depend on its ability to deliver on its planned feature set, particularly the advanced detection capabilities currently under development, and its capacity to scale effectively as the platform matures. For organizations seeking lightweight, focused LLM observability solutions and willing to engage with early-stage technology, Siloam AI presents a promising option worth monitoring as it progresses through its development phases.

As the LLM observability market continues to mature, platforms like Siloam AI that focus specifically on the unique challenges of Large Language Model monitoring may find significant opportunities to serve organizations looking for specialized, rather than general-purpose, AI monitoring solutions.

https://siloam.ai